What is the future of serverless computing?

Serverless computing is likely to keep evolving beyond its foundational principles toward a broader range of capabilities and applications. Serverless architecture, characterized by its event-driven model and automated resource management, has already reshaped how applications are developed and deployed, offering advantages in cost efficiency, scalability, and developer productivity.

This article examines the current state of serverless, the emerging technologies shaping its development, and its impact across diverse industries. It also covers how serverless computing has evolved, the tools and frameworks that streamline development, and the critical security considerations, and it explores the future of DevOps and the integration of serverless with CI/CD pipelines. The goal is a comprehensive, forward-looking analysis of where serverless computing is heading.

Current State of Serverless Computing

Serverless computing has rapidly evolved from a niche concept to a mainstream architecture, fundamentally changing how applications are built and deployed. This shift is driven by its potential to reduce operational overhead, improve resource utilization, and accelerate development cycles. Understanding its current state requires examining its core principles, advantages, and how it contrasts with traditional models.

Core Principles and Functionality

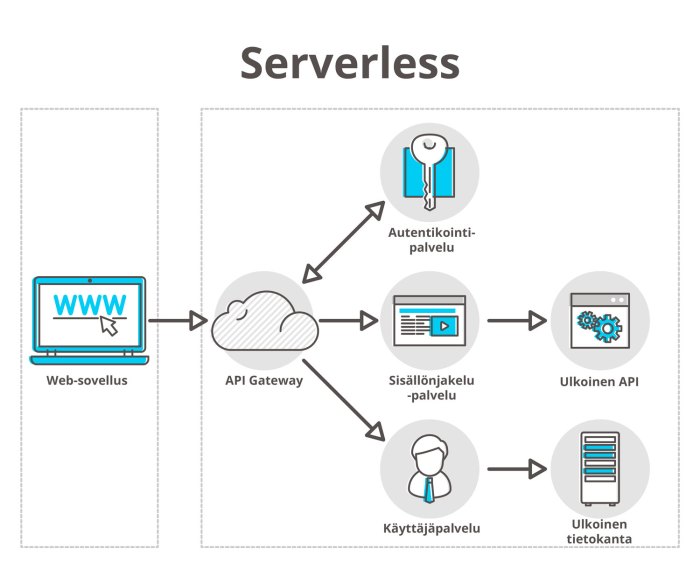

Serverless computing, despite its name, doesn’t mean the absence of servers. Instead, it signifies that developers no longer manage the underlying infrastructure. The cloud provider handles server provisioning, scaling, and management. This model focuses on executing code in response to events, such as HTTP requests, database updates, or scheduled triggers.
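As a minimal sketch of this model, the function below reacts to three kinds of events: an HTTP request, a database update, and a scheduled trigger. The `{ type, payload }` event shape is a hypothetical simplification; real platforms deliver provider-specific event objects (for example, API Gateway or S3 notifications on AWS).

```javascript
// Minimal sketch of a stateless, event-driven function.
// The { type, payload } event shape is a hypothetical simplification;
// real platforms pass provider-specific event objects.
const routes = {
  // HTTP request: respond with a greeting built from the request payload.
  "http.request": (payload) => ({
    statusCode: 200,
    body: JSON.stringify({ greeting: `Hello, ${payload.name ?? "world"}` }),
  }),
  // Database update: acknowledge the changed record.
  "db.update": (payload) => ({ processed: true, recordId: payload.id }),
  // Scheduled trigger: run a periodic task such as cleanup.
  "schedule.tick": () => ({ processed: true, task: "cleanup" }),
};

// Entry point the platform would invoke once per event. Nothing is stored between
// invocations, so any instance can handle any event and instances can be added freely.
async function handler(event) {
  if (!Object.prototype.hasOwnProperty.call(routes, event.type)) {
    throw new Error(`Unsupported event type: ${event.type}`);
  }
  return routes[event.type](event.payload ?? {});
}
```

In a deployed Node.js function, `handler` would be exported (for example, `module.exports.handler = handler`) and referenced in the platform's configuration.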

- Event-Driven Architecture: Serverless applications are primarily driven by events. Functions, the fundamental unit of serverless execution, are triggered by specific events. This event-driven approach enables applications to react dynamically to changes in real-time.

- Function as a Service (FaaS): FaaS is the core of serverless computing. It allows developers to upload individual functions, which are then executed in response to events. These functions are typically stateless and designed to perform a specific task.

- Automatic Scaling: Cloud providers automatically scale serverless functions based on demand, so applications can handle varying workloads without manual intervention. Scaling happens behind the scenes, although a newly started function instance can add cold-start latency to the first request it serves.

- Pay-per-use Pricing: Users are charged for the number of invocations and the compute time their functions actually consume, rather than for idle capacity. Billing is granular; AWS Lambda, for example, meters duration in 1 ms increments, scaled by the memory allocated to the function.
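Pay-per-use pricing reduces to simple arithmetic. The sketch below estimates a monthly bill under a common structure: a per-request fee plus a fee per GB-second, where GB-seconds are memory in GB multiplied by billed duration in seconds, summed over invocations. The prices are caller-supplied assumptions rather than current rates, and free tiers and other charges (such as data transfer or API gateway fees) are ignored.

```javascript
// Estimate a monthly pay-per-use bill.
// Pricing values are caller-supplied assumptions, not current provider rates.
function estimateMonthlyCost({
  invocations, // requests per month
  avgDurationMs, // average billed duration per invocation
  memoryMb, // configured memory per function instance
  pricePerMillionRequests,
  pricePerGbSecond,
}) {
  // Compute is billed in GB-seconds: memory (GB) x duration (s), summed over invocations.
  const gbSeconds = invocations * (avgDurationMs / 1000) * (memoryMb / 1024);
  const requestCost = (invocations / 1_000_000) * pricePerMillionRequests;
  const computeCost = gbSeconds * pricePerGbSecond;
  return { gbSeconds, requestCost, computeCost, total: requestCost + computeCost };
}

const estimate = estimateMonthlyCost({
  invocations: 3_000_000,
  avgDurationMs: 120,
  memoryMb: 512,
  pricePerMillionRequests: 0.2, // assumed price
  pricePerGbSecond: 0.0000166667, // assumed price
});
console.log(estimate);
```

With these assumed inputs, 3 million invocations of 120 ms at 512 MB come to 180,000 GB-seconds, or roughly $3.60 in total; an idle month costs nothing.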

Key Advantages of Serverless Architecture

The adoption of serverless computing is fueled by its compelling advantages, making it attractive for various use cases. These advantages span across cost, scalability, and operational efficiency.

- Cost Savings: The pay-per-use model can substantially reduce costs compared to traditional infrastructure, especially for intermittent or spiky workloads, because organizations pay only for the resources they consume rather than for idle servers. For steady, high-volume workloads, always-on capacity can be cheaper, so the savings depend on the traffic profile. For example, a small e-commerce site that experiences high traffic only during specific sales periods can use serverless to handle peak loads without paying for constant infrastructure.

- Scalability: Serverless applications scale automatically with demand, up to provider-imposed concurrency limits. The cloud provider manages the scaling process, so applications can absorb sudden spikes in traffic without manual intervention. This scalability is crucial for applications with unpredictable traffic patterns. For example, an image processing service using serverless functions can scale up automatically to handle a large batch of image uploads.

- Ease of Deployment: Serverless platforms simplify the deployment process. Developers can focus on writing code without worrying about server configuration, management, or maintenance. This allows for faster development cycles and quicker time-to-market. The deployment process often involves simply uploading the function code to the cloud provider’s platform.

- Reduced Operational Overhead: Serverless architecture reduces the operational burden on development teams. The cloud provider handles server provisioning, patching, and security updates. This frees up developers to focus on writing code and building features. This also reduces the need for specialized operations staff.
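Automatic scaling still has limits. A useful back-of-the-envelope check is Little's law: the number of function instances running at once is roughly the request rate multiplied by the average duration. The sketch below compares that estimate with a concurrency limit passed in as a parameter; the limit is an assumption, since actual limits vary by provider, account, and region.

```javascript
// Estimate concurrent executions with Little's law: L = λ × W,
// where λ is the arrival rate (requests/second) and W the average duration (seconds).
function requiredConcurrency(requestsPerSecond, avgDurationMs) {
  // Multiply before dividing to keep the arithmetic exact for whole-number inputs.
  return Math.ceil((requestsPerSecond * avgDurationMs) / 1000);
}

// Compare the estimate with a caller-supplied concurrency limit (an assumed value).
function checkCapacity(requestsPerSecond, avgDurationMs, concurrencyLimit) {
  const needed = requiredConcurrency(requestsPerSecond, avgDurationMs);
  return { needed, concurrencyLimit, withinLimit: needed <= concurrencyLimit };
}

console.log(checkCapacity(2000, 250, 1000)); // steady load
console.log(checkCapacity(5000, 300, 1000)); // traffic spike
```

At 2,000 requests per second with a 250 ms average duration, about 500 instances run at once; a spike to 5,000 requests per second at 300 ms needs about 1,500, which would exceed a 1,000-instance limit and cause throttled requests unless the limit is raised or the function gets faster.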

Comparison with Traditional Infrastructure Models

Comparing serverless computing with traditional infrastructure models highlights the key differences in terms of resource management, cost, and operational overhead. The following table provides a detailed comparison across key dimensions.

| Feature | Serverless Computing | Traditional Infrastructure (e.g., VMs) | Containerization (e.g., Docker) | Managed Services (e.g., PaaS) |
|---|---|---|---|---|
| Resource Management | Cloud provider manages all resources; automatic scaling. | Manual server provisioning, scaling, and management. | Container orchestration (e.g., Kubernetes) required for scaling and management. | Cloud provider manages underlying infrastructure; limited control over resources. |
| Cost Model | Pay-per-use; billed for compute time and resources consumed. | Fixed costs based on server capacity; often underutilized resources. | Variable costs based on resource usage; requires infrastructure setup. | Subscription-based or pay-as-you-go; cost depends on service and usage. |
| Scalability | Automatic and elastic scaling based on demand. | Manual scaling or auto-scaling groups; can be slow to respond. | Scalability managed through orchestration tools; more complex. | Scalability varies by service; generally easier than VMs. |
| Operational Overhead | Minimal; cloud provider handles server management, patching, and security. | High; requires dedicated operations teams for server maintenance. | Moderate; requires container orchestration knowledge and management. | Moderate; cloud provider handles some aspects, but still requires configuration. |

Emerging Technologies Influencing Serverless

The serverless computing paradigm is not static; its evolution is significantly shaped by emerging technologies. These technologies enhance serverless capabilities, broaden its applicability, and address its limitations. Containerization, edge computing, and advancements in artificial intelligence and machine learning are pivotal in driving the future of serverless, enabling more sophisticated, efficient, and scalable applications.

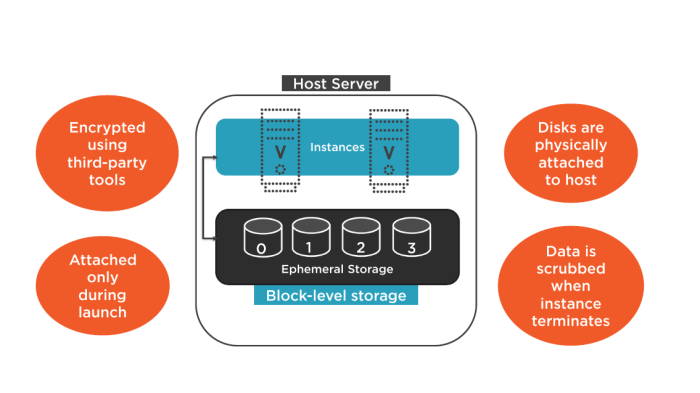

Containerization in Serverless

Containerization, particularly through technologies like Docker and orchestration platforms like Kubernetes, plays a critical role in the serverless ecosystem. Containerization packages applications and their dependencies into isolated units, ensuring consistent execution across different environments. The benefits of containerization in serverless include:

- Improved Portability: Containers encapsulate the application and its dependencies, making it highly portable. Serverless functions can be packaged as containers and deployed consistently across various cloud providers or on-premises infrastructure.

- Enhanced Isolation: Packaging each function with its own runtime and dependencies keeps functions isolated from one another. This isolation limits the impact of vulnerabilities and allows more precise resource allocation.

- Simplified Deployment and Management: Container orchestration tools, such as Kubernetes, automate the deployment, scaling, and management of containerized serverless functions. This simplifies operational tasks and reduces the overhead associated with managing complex applications.

- Increased Flexibility: Containerization allows developers to leverage a wider range of programming languages, frameworks, and tools within a serverless environment. This flexibility expands the possibilities for serverless application development.

A real-world example of containerized serverless functions can be found in applications that process large datasets. Containerizing data processing pipelines enables the deployment of these pipelines across different cloud platforms, scaling them dynamically based on demand. The use of Kubernetes further automates the management of these containers, ensuring high availability and efficient resource utilization.

Edge Computing and Serverless

Edge computing, which processes data closer to its source, is increasingly integrated with serverless architectures. This integration offers several advantages, including reduced latency, improved responsiveness, and enhanced data privacy. Its impact on serverless applications includes:

- Reduced Latency: By executing serverless functions at the edge, data processing is performed closer to the end-users or devices, minimizing the time it takes for requests to be processed and responses to be delivered.

- Improved Responsiveness: Edge computing enables applications to respond to user interactions and events in real-time, enhancing the user experience.

- Enhanced Data Privacy: Processing data at the edge reduces the need to transmit sensitive data to centralized cloud servers, improving data privacy and compliance with regulations.

- Increased Scalability: Edge deployments distribute the workload across many edge locations, which can relieve pressure that would otherwise fall on a single centralized deployment.

Consider a content delivery network (CDN) that uses serverless functions at the edge. When a user requests a video, a serverless function at the nearest edge location can process the request, optimize the video stream for the user’s device, and deliver the content with minimal latency. This is an example of how edge computing and serverless work together to provide a seamless user experience.
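The device-aware step in this CDN example might look like the sketch below, written as plain functions so the logic is independent of any particular edge runtime. The rendition names, bandwidth thresholds, and manifest path layout are hypothetical. It reads the `Downlink` and `Sec-CH-UA-Mobile` client-hint headers, which not all browsers send (some only after the server requests them), so it falls back to a default when they are missing.

```javascript
// Hypothetical rendition ladder; names and bandwidth thresholds are illustrative.
const RENDITIONS = [
  { name: "1080p", minDownlinkMbps: 8 },
  { name: "720p", minDownlinkMbps: 4 },
  { name: "480p", minDownlinkMbps: 1.5 },
  { name: "240p", minDownlinkMbps: 0 },
];

// Choose a rendition from client-hint headers (keys expected in lowercase).
// "downlink" is the estimated bandwidth in Mbps; "sec-ch-ua-mobile" is "?1" on mobile.
function chooseRendition(headers) {
  const downlink = Number.parseFloat(headers["downlink"]);
  const isMobile = headers["sec-ch-ua-mobile"] === "?1";

  // Without a usable bandwidth hint, fall back to a conservative default.
  if (Number.isNaN(downlink) || downlink < 0) {
    return isMobile ? "480p" : "720p";
  }
  // Cap mobile devices at 720p to save bandwidth on small screens.
  const candidates = isMobile
    ? RENDITIONS.filter((r) => r.name !== "1080p")
    : RENDITIONS;
  return candidates.find((r) => downlink >= r.minDownlinkMbps).name;
}

// Build the manifest path the edge function would rewrite the request to.
// The /videos/<id>/<rendition>/index.m3u8 layout is a hypothetical example.
function manifestPathFor(videoId, headers) {
  return `/videos/${encodeURIComponent(videoId)}/${chooseRendition(headers)}/index.m3u8`;
}
```

Because the decision runs at the edge location nearest the user, the request is rewritten before it ever reaches an origin server.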

AI and ML Integration in Serverless

Advancements in artificial intelligence (AI) and machine learning (ML) are transforming serverless environments, enabling developers to build intelligent and automated applications. Serverless platforms provide the infrastructure needed to deploy and scale AI/ML models, making them accessible to a wider audience. AI and ML are being integrated into serverless environments in several ways:

- Model Deployment and Inference: Serverless functions can be used to deploy and execute machine learning models for tasks such as image recognition, natural language processing, and predictive analytics.

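- Automated Data Processing: Serverless architectures can automate data preprocessing, feature engineering, and lightweight model training, streamlining the machine learning workflow. Large training jobs usually exceed typical function time and memory limits and are better suited to dedicated compute.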

- Real-time Analytics: Serverless functions can process real-time data streams and provide instant insights, enabling applications to react to events and changes in the environment.

- Intelligent Applications: Serverless allows for the development of intelligent applications that can personalize user experiences, automate tasks, and improve decision-making.

For example, an e-commerce platform can use serverless functions to deploy a recommendation engine. When a user browses the website, a serverless function can analyze their behavior, predict their preferences, and recommend relevant products in real-time. This is a practical application of AI/ML within a serverless environment, improving user engagement and sales.
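A minimal sketch of such a recommendation function follows. To stay self-contained, it swaps the trained model for simple co-purchase counting over hypothetical toy data. The pattern it illustrates is the serving side: the model is loaded once at module scope, so warm invocations on the same instance reuse it instead of reloading it on every request.

```javascript
// Module scope runs once per function instance (on cold start); later invocations
// on the same warm instance reuse `model`, so expensive loading happens only once.
const model = loadModel();

// Hypothetical co-purchase table standing in for a trained model that would
// normally be loaded from object storage or a model registry.
function loadModel() {
  // item -> { relatedItem: co-purchase count }
  return {
    "running-shoes": { "running-socks": 42, "water-bottle": 17, "fitness-tracker": 9 },
    "yoga-mat": { "yoga-block": 30, "water-bottle": 12 },
    "water-bottle": { "running-shoes": 5, "yoga-mat": 4 },
  };
}

// Score candidates by summing co-purchase counts over the user's viewed items.
function recommend(viewedItems, limit = 3) {
  const scores = new Map();
  for (const item of viewedItems) {
    for (const [related, count] of Object.entries(model[item] ?? {})) {
      if (viewedItems.includes(related)) continue; // skip items already viewed
      scores.set(related, (scores.get(related) ?? 0) + count);
    }
  }
  return [...scores.entries()]
    .sort((a, b) => b[1] - a[1])
    .slice(0, limit)
    .map(([item]) => item);
}

// Handler invoked per browsing event with the user's recently viewed items.
async function handler(event) {
  const recommendations = recommend(event.viewedItems ?? []);
  return { statusCode: 200, body: JSON.stringify({ recommendations }) };
}
```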

Serverless Computing in Different Industries

Serverless computing’s flexibility and scalability are driving its adoption across diverse industries, offering opportunities to optimize operations, reduce costs, and accelerate innovation. Its event-driven architecture allows businesses to respond dynamically to changing demands, creating agile and efficient systems. The following sections explore specific applications of serverless technologies within e-commerce, healthcare, and finance.

Serverless Applications in E-commerce

E-commerce businesses are increasingly leveraging serverless computing to manage fluctuating workloads, personalize customer experiences, and improve operational efficiency. This shift is driven by the need for agility and responsiveness in a competitive market where online traffic can vary significantly.

- Dynamic Scaling for Peak Loads: Serverless platforms automatically scale resources based on demand. During seasonal sales or promotional events, serverless functions can handle surges in traffic without manual intervention, preventing website crashes or performance degradation. For instance, a large online retailer might experience a tenfold increase in traffic during Black Friday. Serverless functions, such as those managing product catalog searches or payment processing, can automatically scale up to accommodate this load, ensuring a seamless customer experience. This contrasts with traditional infrastructure, where pre-provisioned resources may be insufficient at peak times and sit underutilized during off-peak hours.

- Personalized Recommendations and User Experience: Serverless functions can be employed to deliver personalized product recommendations in real-time. By analyzing user behavior data, such as browsing history and purchase patterns, serverless functions can trigger the generation of customized product suggestions. These functions can be triggered by events like a user adding an item to their cart or viewing a specific product. Amazon.com, for example, utilizes serverless architectures to power its recommendation engine, enhancing customer engagement and driving sales.

- Order Processing and Fulfillment: Serverless architectures can streamline order processing workflows. When an order is placed, a serverless function can be triggered to handle tasks such as validating payment, updating inventory, and sending order confirmation emails. This event-driven approach allows for parallel processing, accelerating the fulfillment process. Furthermore, serverless functions can integrate with various third-party services, such as shipping providers, to provide real-time tracking updates.

- A/B Testing and Feature Rollouts: Serverless computing facilitates rapid A/B testing of new features and functionalities. Developers can deploy different versions of code as serverless functions and route user traffic based on specific criteria. This allows for controlled experiments and data-driven decision-making. If a new feature is successful, it can be quickly scaled and rolled out to all users.
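Routing users between versions should be deterministic, so that a user sees the same variant on every request. One common approach, sketched here as an assumption rather than any platform's built-in feature, hashes the user ID together with the experiment name and compares the resulting bucket (0 to 99) with a rollout percentage. The experiment and user names are hypothetical.

```javascript
const crypto = require("crypto");

// Map a user to a stable bucket in [0, 100) for a given experiment.
// Including the experiment name gives independent assignments per experiment.
function bucketFor(userId, experiment) {
  const digest = crypto.createHash("sha256").update(`${experiment}:${userId}`).digest();
  return digest.readUInt32BE(0) % 100;
}

// Users whose bucket falls below treatmentPercent get the new version.
function assignVariant(userId, experiment, treatmentPercent) {
  return bucketFor(userId, experiment) < treatmentPercent ? "treatment" : "control";
}
```

A router function could use the returned variant to invoke one of two deployed function versions. Raising `treatmentPercent` gradually (for example from 5 to 50 to 100) rolls the feature out to more users while keeping earlier assignments stable, because a user's bucket never changes for a given experiment.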

Serverless Transformations in Healthcare

The healthcare industry is experiencing a digital transformation, and serverless computing plays a significant role in improving patient care, enhancing data management, and streamlining administrative tasks. The ability to handle large datasets, secure sensitive information, and integrate with various healthcare systems makes serverless an attractive option.

- Patient Data Management and Analysis: Serverless functions can be used to process and analyze large volumes of patient data stored in databases or data lakes. These functions can be triggered by events such as the ingestion of new patient records or updates to existing medical histories. Data analysis can be performed in near real-time, enabling healthcare providers to identify trends, detect anomalies, and personalize treatment plans.

- Application Deployment and Integration: Serverless platforms simplify the deployment and management of healthcare applications. Serverless functions can be used to build and deploy APIs that integrate with electronic health records (EHR) systems, medical devices, and other healthcare applications. This facilitates data sharing and interoperability between different systems, improving communication and collaboration among healthcare providers.

- Real-time Monitoring and Alerting: Serverless functions can be used to monitor patient health data from wearable devices or medical sensors. When abnormal readings are detected, serverless functions can trigger alerts to notify healthcare providers, enabling timely intervention and improved patient outcomes. For instance, a serverless function might monitor a patient’s heart rate and automatically alert a physician if the rate exceeds a predefined threshold.

- Secure Data Storage and Compliance: Serverless computing can help build secure, compliant data storage solutions. Serverless functions can encrypt data, manage access controls, and help meet the requirements of regulations such as HIPAA (Health Insurance Portability and Accountability Act). This is critical for protecting sensitive patient information and maintaining patient privacy.
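The heart-rate monitoring example above can be sketched as a per-reading threshold check plus a batch processor that forwards only alerts. The limits are illustrative placeholders, not clinical guidance, and `notify` is a hypothetical callback standing in for a paging or messaging service.

```javascript
// Illustrative limits only; real thresholds would be set per patient by clinicians.
const DEFAULT_LIMITS = { minBpm: 40, maxBpm: 130 };

function evaluateHeartRate(reading, limits = DEFAULT_LIMITS) {
  const { patientId, bpm, recordedAt } = reading;
  if (!Number.isFinite(bpm)) {
    // Malformed sensor data is reported separately instead of silently ignored.
    return { patientId, recordedAt, status: "invalid" };
  }
  if (bpm > limits.maxBpm || bpm < limits.minBpm) {
    const bound = bpm > limits.maxBpm ? `above ${limits.maxBpm}` : `below ${limits.minBpm}`;
    return { patientId, recordedAt, status: "alert", reason: `heart rate ${bpm} bpm ${bound}` };
  }
  return { patientId, recordedAt, status: "ok" };
}

// Process a batch of readings (e.g., delivered by a stream trigger) and forward alerts.
// `notify` is a hypothetical async callback, such as a wrapper around a messaging service.
async function processReadings(readings, notify) {
  const results = readings.map((reading) => evaluateHeartRate(reading));
  const alerts = results.filter((result) => result.status === "alert");
  await Promise.all(alerts.map((alert) => notify(alert)));
  return { processed: results.length, alerts: alerts.length };
}
```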

Serverless Applications in the Financial Industry

The financial industry is characterized by complex systems, stringent security requirements, and high transaction volumes. Serverless computing offers opportunities to improve operational efficiency, reduce costs, and enhance the security of financial applications.

Consider a hypothetical scenario involving a financial institution, “FinTech Solutions,” aiming to modernize its fraud detection system.

Scenario: FinTech Solutions processes millions of transactions daily. Their current fraud detection system struggles to keep pace with the volume and velocity of transactions, leading to delays and potential financial losses. They decide to implement a serverless architecture to address these challenges.

- Event-Driven Architecture for Real-time Fraud Detection: When a transaction occurs, it triggers a serverless function. This function, powered by machine learning models, analyzes the transaction in real-time, evaluating factors such as transaction amount, location, time of day, and the user’s transaction history. If the transaction is flagged as suspicious, the function triggers an alert.

- Scalable Processing and Reduced Costs: The serverless architecture automatically scales resources based on transaction volume. During peak hours, when transaction volume is high, the system automatically scales up to handle the increased load without manual intervention. This eliminates the need for pre-provisioning of resources and reduces operational costs.

- Improved Security and Compliance: Serverless functions can be integrated with security services such as multi-factor authentication and data encryption to protect sensitive financial data. This architecture supports compliance with regulatory requirements, such as GDPR and PCI DSS.

- Enhanced Operational Efficiency: Serverless functions can automate routine tasks, such as data validation, report generation, and system monitoring. This frees up financial analysts to focus on more strategic activities, such as identifying new fraud patterns and improving fraud detection models.
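The real-time check in the FinTech Solutions scenario can be sketched as a scoring function invoked for each transaction. To keep the example self-contained, a few hand-written rules stand in for the machine learning model the scenario describes; the rules, point values, and threshold are hypothetical. Integer points are used so the scores are exact.

```javascript
// Rule-based stand-in for the scenario's fraud model.
// Rules, point values, and the threshold are illustrative assumptions.
const FLAG_THRESHOLD = 60; // points, out of a maximum of 100

function scoreTransaction(txn, history) {
  let points = 0;
  const reasons = [];

  // Amount far above this user's average spend.
  if (history.avgAmount > 0 && txn.amount > 5 * history.avgAmount) {
    points += 40;
    reasons.push("amount far above usual spend");
  }
  // Location (country) not previously seen for this user.
  if (!history.countries.includes(txn.country)) {
    points += 30;
    reasons.push("new country");
  }
  // Time of day: 00:00-05:59 UTC is treated as unusual in this sketch.
  if (new Date(txn.timestamp).getUTCHours() < 6) {
    points += 20;
    reasons.push("unusual time of day");
  }
  // Velocity: many transactions in the past hour.
  if (history.txnsLastHour >= 10) {
    points += 30;
    reasons.push("high transaction velocity");
  }

  const score = Math.min(points, 100);
  return { score, flagged: score >= FLAG_THRESHOLD, reasons };
}
```

In the serverless version, a function triggered by each transaction event would look up the user's history, call a scorer like this one (or a deployed model), and publish an alert when `flagged` is true.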

Serverless and the Developer Experience

Serverless computing’s adoption hinges significantly on the developer experience. A seamless and efficient development workflow is crucial for attracting and retaining developers, accelerating the adoption of serverless architectures, and realizing the promised benefits of increased productivity and reduced operational overhead. This section explores the tools, best practices, and deployment procedures that contribute to a positive serverless development experience.

Tools and Frameworks Simplifying Serverless Development

The serverless ecosystem has matured significantly, leading to the emergence of numerous tools and frameworks designed to streamline the development process. These tools automate repetitive tasks, provide abstractions over complex infrastructure, and enhance developer productivity.

One of the most popular is the Serverless Framework, an open-source framework that simplifies the deployment and management of serverless applications. It supports multiple cloud providers, including AWS, Azure, and Google Cloud Platform.

The framework uses a YAML configuration file to define resources, events, and functions, automating the deployment process and abstracting away the complexities of infrastructure management. For instance, a typical `serverless.yml` file might define an AWS Lambda function triggered by an API Gateway event:

```yaml
service: my-service

provider:
  name: aws
  runtime: nodejs18.x
  region: us-east-1

functions:
  hello:
    handler: handler.hello
    events:
      - http:
          path: /hello
          method: get
```

This configuration automatically provisions the necessary resources on AWS, including the Lambda function, API Gateway endpoint, and associated IAM roles.

Another essential tool is the AWS SAM (Serverless Application Model) CLI. SAM extends CloudFormation, enabling developers to define serverless applications using a simplified template format.

SAM provides features such as local testing, debugging, and rapid deployment. Developers can use the `sam local invoke` command to run functions locally in a Docker container that emulates the AWS Lambda environment, enabling faster iteration cycles.

Other frameworks, such as Claudia.js and Apex, take different approaches. Claudia.js focuses on simplifying the deployment and management of serverless applications, particularly for Node.js developers, while Apex provides a command-line interface for building and deploying serverless functions with a streamlined workflow.

Beyond frameworks, integrated development environments (IDEs) support serverless development directly. IDEs such as VS Code and IntelliJ IDEA offer extensions for debugging, code completion, and deployment from within the editor, and cloud providers ship their own integrations, such as the AWS Toolkit for VS Code, which supports deploying, testing, and managing serverless applications.

Containerization technologies such as Docker also play a crucial role in serverless development. Serverless platforms like AWS Lambda support deploying functions as container images. This lets developers package application dependencies and runtime environments in a container, providing consistency across environments and simplifying dependency management.

Best Practices for Debugging and Monitoring Serverless Applications

Debugging and monitoring serverless applications present unique challenges compared to traditional applications. The distributed nature of serverless architectures and the ephemeral nature of functions require specialized tools and techniques. Adhering to best practices ensures that developers can quickly identify and resolve issues, maintain application health, and optimize performance. Key aspects of debugging and monitoring include:

- Logging: Implementing comprehensive logging is crucial for understanding application behavior. Developers should log relevant information at different stages of the function's execution, including input parameters, execution start and end times, and any errors or exceptions that occur, while redacting secrets and personal data (for example, never dump environment variables that hold credentials). Cloud providers offer logging services such as AWS CloudWatch Logs, Azure Monitor, and Google Cloud Logging, which allow developers to collect, store, and analyze logs. Structured logging formats, like JSON, make logs easy to parse and analyze.

- Tracing: Distributed tracing helps track requests as they flow through multiple serverless functions and services. Tools like AWS X-Ray, Azure Application Insights, and Google Cloud Trace provide visibility into the end-to-end execution of requests, allowing developers to identify performance bottlenecks and pinpoint the source of errors. Tracing provides insights into latency, dependencies, and error rates across various services.

- Monitoring Metrics: Monitoring key performance indicators (KPIs) is essential for maintaining application health and optimizing performance. Developers should monitor metrics such as function invocations, execution duration, error rates, and resource utilization. Cloud providers offer monitoring services that automatically collect and display these metrics. Setting up alerts based on predefined thresholds helps detect and respond to issues proactively.

- Local Testing: Before deploying code, local testing helps in identifying and fixing bugs quickly. Frameworks like the Serverless Framework and SAM CLI provide tools for simulating the serverless environment locally. Developers can invoke functions, simulate events, and test different scenarios without deploying to the cloud.

- Error Handling: Implementing robust error handling is crucial for preventing application failures. Developers should handle exceptions gracefully, log error messages, and implement retry mechanisms for transient failures. Proper error handling ensures that the application continues to function even when encountering issues.

- Debugging Tools: Using debugging tools provided by cloud providers can significantly aid in the troubleshooting process. AWS Lambda supports remote debugging, allowing developers to step through the code and inspect variables in real-time. Azure Functions and Google Cloud Functions also offer similar debugging capabilities.

By implementing these best practices, developers can create robust, reliable, and easily maintainable serverless applications.
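The logging and error-handling practices above can be sketched as a small structured logger with redaction and a handler that records start, finish, duration, and failures. The list of sensitive keys and the `processEvent` business logic are hypothetical placeholders; log services such as CloudWatch Logs Insights can query fields in JSON log lines like these.

```javascript
// Keys whose values are never written to logs (illustrative; extend for your data).
const SENSITIVE_KEYS = new Set(["password", "authorization", "apikey", "token", "secret"]);

// Recursively replace values whose key looks sensitive.
function redact(value) {
  if (Array.isArray(value)) return value.map(redact);
  if (value !== null && typeof value === "object") {
    const out = {};
    for (const [key, inner] of Object.entries(value)) {
      out[key] = SENSITIVE_KEYS.has(key.toLowerCase()) ? "[REDACTED]" : redact(inner);
    }
    return out;
  }
  return value;
}

// One JSON object per line, so log services can parse it into queryable fields.
function formatLog(level, message, context = {}) {
  return JSON.stringify({
    timestamp: new Date().toISOString(),
    level,
    message,
    context: redact(context),
  });
}

function log(level, message, context) {
  console.log(formatLog(level, message, context));
}

// Hypothetical business logic standing in for the function's real work.
async function processEvent(event) {
  if (!event || !event.orderId) throw new Error("orderId is required");
  return { statusCode: 200, body: JSON.stringify({ orderId: event.orderId }) };
}

// Handler that logs start, finish, duration, and errors, then rethrows failures
// so the platform can apply its own retry and error-reporting behavior.
async function handler(event) {
  const startedAt = Date.now();
  log("info", "invocation started", { input: event });
  try {
    const result = await processEvent(event);
    log("info", "invocation finished", { durationMs: Date.now() - startedAt });
    return result;
  } catch (err) {
    log("error", "invocation failed", { error: err.message, durationMs: Date.now() - startedAt });
    throw err;
  }
}
```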

Step-by-Step Procedure for Deploying a Simple Serverless Function

Deploying a simple serverless function involves several steps, from writing the code to deploying it to a cloud provider. This procedure provides a practical guide for developers, using AWS Lambda and Node.js as an example.

1. Prerequisites

- An AWS account.

- Node.js and npm (or yarn) installed.

- The AWS CLI configured with appropriate credentials.

- The Serverless Framework v3 installed globally (`npm install -g serverless@3`); the commands and configuration below use v3 syntax.

2. Create a Project Directory

Create a new directory for your serverless project and navigate into it:

```bash
mkdir my-serverless-app
cd my-serverless-app
```

3. Initialize the Serverless Project

Use the Serverless Framework to initialize a new project. This command creates a `serverless.yml` file and a basic function handler:

```bash
serverless create --template aws-nodejs --path .
```

4. Write the Function Code

Open the `handler.js` file and replace the existing code with the following simple function that returns a greeting:

```javascript
'use strict';

module.exports.hello = async (event) => {
  return {
    statusCode: 200,
    body: JSON.stringify(
      {
        message: 'Go Serverless v3.0! Your function executed successfully!',
        input: event,
      },
      null,
      2
    ),
  };
};
```

5. Configure the Serverless Deployment

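Modify the `serverless.yml` file to define the function, runtime, and events. For a simple HTTP endpoint, the configuration might look like this:

```yaml
service: my-serverless-app
frameworkVersion: '3'

provider:
  name: aws
  runtime: nodejs18.x
  region: us-east-1
  iam:
    role:
      statements:
        # Least privilege: grant only the actions and resources the function uses.
        # The bucket name is a placeholder; the hello function itself needs no S3 access.
        - Effect: "Allow"
          Action:
            - "s3:GetObject"
          Resource: "arn:aws:s3:::my-example-bucket/*"

functions:
  hello:
    handler: handler.hello
    events:
      - http:
          method: get
          path: /hello
```

Avoid wildcard grants such as `s3:*` on `*`; scope each IAM statement to specific actions and resources, as discussed in the security section.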

6. Deploy the Function

Use the Serverless Framework to deploy the function to AWS. This command packages the code, uploads it to AWS, and provisions the necessary resources (Lambda function, API Gateway endpoint, etc.):

```bash
serverless deploy
```

7. Test the Function

After deployment, the Serverless Framework will output the API Gateway endpoint URL. You can test the function by opening this URL in a web browser or by using a tool like `curl`, replacing `<endpoint-url>` with the URL printed by `serverless deploy`:

```bash
curl <endpoint-url>
```

8. Monitor and Manage

After deployment, you can use the AWS console or the Serverless Framework to monitor function invocations, logs, and performance metrics. Use the monitoring and debugging tools described earlier to ensure the function operates correctly and efficiently.

This step-by-step procedure provides a basic framework for deploying a simple serverless function. Developers can extend this process by adding more complex features, integrating with other services, and implementing robust error handling and monitoring strategies.

Security Considerations in Serverless Environments

Serverless computing, while offering numerous benefits, introduces unique security challenges that must be carefully addressed. The distributed nature of serverless architectures, the reliance on third-party services, and the ephemeral nature of functions require a proactive and comprehensive security strategy. Ignoring these considerations can expose applications to a range of vulnerabilities, potentially leading to data breaches, service disruptions, and financial losses.

Common Security Vulnerabilities in Serverless Architectures

Serverless environments present a distinct attack surface compared to traditional architectures. Understanding these vulnerabilities is crucial for implementing effective security measures.

- Function Code Vulnerabilities: Functions, being the core execution units in serverless, are prime targets. Common vulnerabilities include:

- Injection flaws: Similar to web applications, serverless functions are susceptible to SQL injection, command injection, and other injection attacks if input validation is inadequate. For example, a function that constructs a database query based on user input without proper sanitization could be exploited to retrieve sensitive data.

- Broken authentication and authorization: Incorrectly implemented authentication and authorization mechanisms can allow unauthorized access to functions and resources. This can involve hardcoded credentials, weak password policies, or misconfigured IAM roles.

- Cross-Site Scripting (XSS): If a function handles user-supplied data that is then rendered in a web interface, XSS vulnerabilities can arise, allowing attackers to inject malicious scripts.

- Insecure Dependencies: Serverless functions often rely on third-party libraries and packages. If these dependencies have known vulnerabilities, they can be exploited by attackers. Regular dependency scanning and patching are crucial.

- Event Injection and Manipulation: Serverless applications are triggered by events. Attackers can exploit this by:

- Event injection: Malicious actors can inject crafted events to trigger unintended function executions. For example, sending a malformed message to an event queue could trigger a denial-of-service condition.

- Event data manipulation: Modifying event data to alter the behavior of a function. This could include changing the price of an item in an e-commerce application or manipulating sensor readings in an IoT system.

- Configuration and Deployment Vulnerabilities: Misconfigurations and insecure deployment practices can create security risks.

- Insecure Storage Configuration: Misconfigured storage buckets (e.g., AWS S3) can expose sensitive data if not properly secured with appropriate access controls.

- Secrets Management: Hardcoding secrets (API keys, database credentials) within function code or storing them insecurely creates significant risks.

- Over-Privileged IAM Roles: Granting functions excessive permissions through IAM roles can allow attackers to escalate privileges if a function is compromised.

- Denial of Service (DoS) and Distributed Denial of Service (DDoS) Attacks: Serverless applications, especially those with public endpoints, are susceptible to DoS and DDoS attacks. Attackers can exploit this by:

- Resource exhaustion: Triggering a large number of function invocations or sending excessive amounts of data to exhaust resources and cause service disruptions.

- Exploiting scaling behavior: Leveraging the auto-scaling capabilities of serverless platforms to amplify the impact of a DoS attack.

- Supply Chain Attacks: Serverless functions rely on dependencies, and the build and deployment processes can be vulnerable. Attackers can:

- Compromise third-party libraries: Injecting malicious code into open-source or commercial dependencies.

- Manipulate build pipelines: Inserting malicious code into the build process or deployment infrastructure.
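One common defense against event injection and event data manipulation works as follows. Trusted producers sign each event, and consumers verify the signature before acting on it. The sketch below is illustrative only and rests on several assumptions:

- a shared secret key, which in practice would come from a secrets manager and never be hardcoded;
- a canonical JSON encoding of the event;
- hypothetical function names.

Some managed services sign messages themselves (Amazon SNS does, for example). In that case you would verify their signature instead of adding your own.

```python
import hashlib
import hmac
import json


def canonical_bytes(payload: dict) -> bytes:
    """Serialize deterministically so producer and consumer sign identical bytes."""
    return json.dumps(payload, sort_keys=True, separators=(",", ":")).encode("utf-8")


def sign_event(payload: dict, key: bytes) -> str:
    """Producer side: HMAC-SHA256 over the canonical payload, as hex."""
    return hmac.new(key, canonical_bytes(payload), hashlib.sha256).hexdigest()


def verify_event(payload: dict, signature: str, key: bytes) -> bool:
    """Consumer side: recompute the MAC and compare in constant time."""
    expected = sign_event(payload, key)
    return hmac.compare_digest(expected, signature)


# Demo key for illustration only; load real keys from a secrets manager.
KEY = b"demo-signing-key"
order = {"order_id": "o-1", "sku": "sku-123", "price": "19.99"}
signature = sign_event(order, KEY)

tampered = dict(order, price="0.01")  # e.g. an attacker lowering the price
print(verify_event(order, signature, KEY))     # True
print(verify_event(tampered, signature, KEY))  # False
```

A signature proves integrity and origin, not freshness. To block replayed events, include a timestamp or unique ID in the signed payload and reject stale or already-seen values.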

Implementing Security Best Practices for Serverless Applications

A layered approach to security is essential for mitigating the vulnerabilities associated with serverless computing. This involves integrating security considerations throughout the development lifecycle, from design to deployment and monitoring.

- Secure Coding Practices:

- Input Validation and Sanitization: Implement robust input validation and sanitization to prevent injection attacks. This includes validating data types, lengths, and formats.

- Principle of Least Privilege: Grant functions only the minimum necessary permissions through IAM roles. Avoid broad, wildcard permissions.

- Regular Code Reviews: Conduct regular code reviews to identify and address potential security vulnerabilities. Automated static analysis tools can assist in this process.

- Dependency Management: Use a software composition analysis (SCA) tool to identify and manage dependencies. Regularly update dependencies to patch known vulnerabilities.

- Secure Secrets Management: Utilize dedicated secrets management services (e.g., AWS Secrets Manager, Azure Key Vault) to store and manage sensitive credentials. Never hardcode secrets in code.

- Follow Secure Coding Standards: Adhere to established secure coding guidelines and best practices, such as those provided by OWASP.

- Infrastructure Security:

- Network Security: Implement network security controls, such as VPCs and subnets, to isolate serverless functions and restrict network access. Use firewalls and security groups to control inbound and outbound traffic.

- Encryption: Encrypt data at rest and in transit. Use TLS/SSL for secure communication and encrypt data stored in storage buckets and databases.

- Regular Security Audits: Conduct regular security audits and penetration testing to identify and address vulnerabilities in the infrastructure.

- Vulnerability Scanning: Implement vulnerability scanning tools to identify and remediate vulnerabilities in the function code, dependencies, and infrastructure.

- Monitoring and Logging:

- Centralized Logging: Implement centralized logging to collect and analyze logs from all serverless functions and related services.

- Real-time Monitoring: Monitor function invocations, errors, and performance metrics in real time. Set up alerts to detect and respond to suspicious activity.

- Security Information and Event Management (SIEM): Integrate logs and monitoring data with a SIEM solution for advanced threat detection and incident response.

- Audit Trails: Enable audit trails to track all actions performed within the serverless environment, including changes to configurations and access to resources.

- Deployment and Automation:

- Automated Deployment Pipelines: Automate the deployment process using CI/CD pipelines to ensure consistent and secure deployments.

- Infrastructure as Code (IaC): Manage infrastructure configurations using IaC tools (e.g., Terraform, CloudFormation) to ensure consistency and repeatability.

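- Immutable Infrastructure: Ship every change as a new, versioned deployment through the pipeline instead of modifying running functions and infrastructure in place. This makes unauthorized modifications easier to prevent, detect, and roll back.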

- Security Testing in CI/CD: Integrate security testing (e.g., static analysis, vulnerability scanning, and penetration testing) into the CI/CD pipeline.
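The input validation practice can be illustrated with an allowlist-style check that runs before any business logic. The sketch assumes a hypothetical sign-up payload with `email`, `name`, and `age` fields. The limits and the deliberately simple email pattern are illustrative assumptions, not a standard.

```python
import re
from dataclasses import dataclass

# Hypothetical schema limits for a sign-up request.
ALLOWED_FIELDS = {"email", "name", "age"}
EMAIL_PATTERN = re.compile(r"[^@\s]+@[^@\s]+\.[^@\s]+")  # simple shape check only
MAX_EMAIL_LEN = 254
MAX_NAME_LEN = 100


@dataclass(frozen=True)
class SignUp:
    email: str
    name: str
    age: int


def parse_sign_up(body: dict) -> SignUp:
    """Validate type, length, and format; reject anything unexpected."""
    unexpected = set(body) - ALLOWED_FIELDS
    if unexpected:
        raise ValueError(f"unexpected fields: {sorted(unexpected)}")

    email = body.get("email")
    if not isinstance(email, str) or len(email) > MAX_EMAIL_LEN or not EMAIL_PATTERN.fullmatch(email):
        raise ValueError("invalid email")

    name = body.get("name")
    if not isinstance(name, str) or not 1 <= len(name.strip()) <= MAX_NAME_LEN:
        raise ValueError(f"name must be 1-{MAX_NAME_LEN} characters")

    age = body.get("age")
    # bool is a subclass of int in Python, so exclude it explicitly.
    if not isinstance(age, int) or isinstance(age, bool) or not 13 <= age <= 130:
        raise ValueError("age must be an integer between 13 and 130")

    return SignUp(email=email, name=name.strip(), age=age)
```

A handler would call `parse_sign_up` on the decoded request body and return a client error on `ValueError`. That way malformed or unexpected input never reaches databases or downstream services.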

Importance of Identity and Access Management (IAM) in Serverless Security

IAM plays a critical role in securing serverless applications by controlling access to resources and defining permissions. A robust IAM strategy is fundamental to preventing unauthorized access and minimizing the impact of security breaches.

- Principle of Least Privilege: Granting functions only the permissions they need to perform their tasks. This reduces the attack surface and limits the potential damage from compromised functions. For example, a function that only needs to read data from a database should not be granted write or delete permissions.

- Role-Based Access Control (RBAC): Using roles to group permissions and assign them to functions or users. This simplifies the management of permissions and ensures consistency across the application.

- Fine-Grained Permissions: Defining granular permissions to restrict access to specific resources or actions. This allows for more precise control over access and reduces the risk of unauthorized access. For instance, instead of granting a function full access to an S3 bucket, grant it access only to specific objects or prefixes.

- Multi-Factor Authentication (MFA): Enforcing MFA for all users who access the serverless environment. This adds an extra layer of security and helps prevent unauthorized access even if a user’s password is compromised.

- Regular Auditing of IAM Configurations: Regularly review and audit IAM configurations to ensure they are aligned with security best practices and to identify and address any potential misconfigurations or vulnerabilities.

- Monitoring and Alerting on IAM Events: Monitor IAM-related events, such as changes to permissions or attempts to access unauthorized resources. Set up alerts to notify security teams of any suspicious activity.
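Two of these practices can be sketched in code: least privilege scoped to an S3 prefix, and a basic audit for overly broad grants. The sketch uses the AWS IAM JSON policy format. The bucket and prefix names are hypothetical, and the audit is a deliberately simple heuristic. Managed tools such as IAM Access Analyzer perform far more thorough policy checks.

```python
def read_only_prefix_policy(bucket: str, prefix: str) -> dict:
    """Policy document allowing s3:GetObject only on objects under one key prefix."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["s3:GetObject"],
                "Resource": [f"arn:aws:s3:::{bucket}/{prefix}*"],
            }
        ],
    }


def _as_list(value) -> list:
    # IAM accepts a single value or a list for Statement, Action, and Resource.
    return [value] if isinstance(value, (str, dict)) else list(value)


def find_broad_grants(policy: dict) -> list[str]:
    """Flag Allow statements with wildcard actions or an unrestricted resource."""
    findings = []
    for i, stmt in enumerate(_as_list(policy.get("Statement", []))):
        if stmt.get("Effect") != "Allow":
            continue
        for action in _as_list(stmt.get("Action", [])):
            if action == "*" or action.endswith(":*"):
                findings.append(f"statement {i}: broad action {action!r}")
        if "*" in _as_list(stmt.get("Resource", [])):
            findings.append(f"statement {i}: resource is '*'")
    return findings


scoped = read_only_prefix_policy("photos", "thumbnails/")
broad = {"Version": "2012-10-17", "Statement": {"Effect": "Allow", "Action": "s3:*", "Resource": "*"}}
print(find_broad_grants(scoped))  # []
print(find_broad_grants(broad))   # flags both the action and the resource
```

A check like `find_broad_grants` could run in CI against policy documents, so that regular IAM auditing happens on every change rather than only periodically.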

Serverless Cost Optimization Strategies

Serverless computing, while offering significant advantages in terms of scalability and reduced operational overhead, presents unique challenges in cost management. The pay-per-use model, while generally cost-effective, can lead to unexpected expenses if not carefully monitored and optimized. Effective cost optimization in serverless environments requires a proactive approach that encompasses monitoring, service selection, and resource management. This section delves into the strategies necessary to minimize costs and maximize the value derived from serverless deployments.

Monitoring and Cost Optimization

Effective cost management begins with robust monitoring. It’s crucial to understand how resources are being consumed and to identify potential areas for optimization. This involves tracking not only the direct costs of serverless services but also the indirect costs associated with data transfer, storage, and other related services.

- Establish a Baseline: Before implementing any optimization strategies, establish a baseline of your current spending. This involves collecting data on resource usage, service costs, and the performance of your applications. Use the cloud provider’s cost management tools to track spending over time and identify any unexpected spikes or trends.

- Implement Detailed Monitoring: Implement granular monitoring of serverless functions, APIs, and other services. This includes tracking metrics such as invocation count, execution time, memory usage, and error rates. Use monitoring tools provided by your cloud provider (e.g., AWS CloudWatch, Azure Monitor, Google Cloud Monitoring) or third-party solutions to collect and analyze this data.

- Analyze Resource Consumption: Analyze the collected data to identify patterns and anomalies in resource consumption. Look for functions that are frequently invoked, consume excessive memory, or experience high error rates. This analysis can help pinpoint areas where optimization efforts will have the greatest impact.

- Set Up Alerts: Configure alerts to notify you of any unusual spending patterns or resource utilization. Set thresholds for metrics such as invocation count, execution time, and error rates. When these thresholds are exceeded, you should receive notifications that allow you to quickly address potential cost issues.

- Optimize Function Performance: One of the most effective ways to reduce costs is to optimize the performance of your serverless functions. This includes optimizing code to reduce execution time and memory usage. The goal is to make each function invocation as efficient as possible.

- Regularly Review and Refine: Cost optimization is an ongoing process. Regularly review your monitoring data, adjust your optimization strategies, and refine your resource allocation based on the evolving needs of your applications.
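The “establish a baseline” and “set up alerts” steps can be combined into a simple statistical rule. It flags any day whose cost exceeds the baseline mean by more than k standard deviations. This is a minimal sketch: the choice of k = 3 and the sample figures are assumptions. Managed options such as AWS Budgets or AWS Cost Anomaly Detection would normally do this for you.

```python
from statistics import mean, stdev


def find_cost_anomalies(baseline: list[float], recent: list[float], k: float = 3.0) -> list[int]:
    """Return indexes of recent daily costs above mean + k * stdev of the baseline."""
    if len(baseline) < 2:
        raise ValueError("baseline needs at least two data points")
    threshold = mean(baseline) + k * stdev(baseline)
    return [i for i, cost in enumerate(recent) if cost > threshold]


# Hypothetical daily spend in dollars.
daily_baseline = [10.2, 9.8, 10.5, 10.1, 9.9, 10.3, 10.0]
this_week = [10.4, 10.6, 27.5, 10.2]
print(find_cost_anomalies(daily_baseline, this_week))  # [2]
```

A fixed multiple of the standard deviation is easy to reason about but ignores seasonality, such as weekday peaks. A longer baseline or per-weekday baselines reduce false alarms.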

Choosing Serverless Services to Minimize Expenses

Selecting the appropriate serverless services is a critical factor in controlling costs. Different services have different pricing models, and the optimal choice depends on the specific requirements of your application. Consider the following when choosing serverless services:

- Understand Pricing Models: Each serverless provider offers different pricing models. Some services are priced based on the number of invocations, others on execution time, and still others on the amount of data processed. Familiarize yourself with the pricing models of the services you plan to use and choose the models that best align with your application’s usage patterns.

- Evaluate Service Alternatives: Evaluate alternative serverless services that can accomplish the same task. For example, consider using a managed message queue service instead of building your own messaging system. Managed services often have more predictable costs and reduce the operational burden.

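- Optimize Resource Allocation: When using serverless functions, carefully consider the memory and compute resources allocated to each function. Over-provisioning can lead to unnecessary costs, but so can under-provisioning. On platforms such as AWS Lambda, CPU is allocated in proportion to memory, so a larger memory setting can shorten execution time enough to lower the total cost. Test different resource configurations to find the optimal balance between performance and cost.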

- Use Serverless Databases Strategically: Serverless databases offer scalability and pay-per-use pricing. However, it’s important to choose the right database type for your application’s needs. Consider the data access patterns and the expected workload when selecting a database.

- Leverage Caching: Implement caching mechanisms to reduce the load on your serverless functions and databases. Caching can significantly reduce the number of invocations and data transfer costs.

- Consider Managed Services: Take advantage of managed services whenever possible. Managed services often have lower operational costs and can be more cost-effective than building and managing your own infrastructure.
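Resource allocation testing can be reduced to a small calculation. On platforms that bill by memory multiplied by duration (GB-seconds) plus a per-request fee, the cheapest memory setting is not necessarily the smallest one. This is a sketch under stated assumptions:

- The rates are modeled on AWS Lambda’s published x86 on-demand pricing and should be checked for your provider and region.
- Free tiers are ignored.
- The measured durations are made up.

Tools such as the open-source AWS Lambda Power Tuning project automate the measurement step.

```python
# Assumed rates, modeled on AWS Lambda's published x86 on-demand pricing;
# verify current prices for your provider and region before relying on them.
PRICE_PER_GB_SECOND = 0.0000166667
PRICE_PER_REQUEST = 0.20 / 1_000_000


def monthly_cost(memory_mb: int, avg_duration_ms: float, invocations: int) -> float:
    """Compute charge (memory x duration) plus request charge, ignoring free tiers."""
    gb_seconds = (memory_mb / 1024) * (avg_duration_ms / 1000) * invocations
    return gb_seconds * PRICE_PER_GB_SECOND + invocations * PRICE_PER_REQUEST


def cheapest_config(measured_ms: dict[int, float], invocations: int, max_latency_ms: float) -> int:
    """Return the memory size (MB) with the lowest cost among those meeting the latency target."""
    eligible = {mb: ms for mb, ms in measured_ms.items() if ms <= max_latency_ms}
    if not eligible:
        raise ValueError("no configuration meets the latency target")
    return min(eligible, key=lambda mb: monthly_cost(mb, eligible[mb], invocations))


# Hypothetical average durations measured for one function at each memory size.
measured = {128: 1600.0, 512: 350.0, 1024: 180.0, 2048: 170.0}
for mb, ms in measured.items():
    print(f"{mb:>5} MB: ${monthly_cost(mb, ms, 1_000_000):.2f}/month")
print("cheapest under 500 ms:", cheapest_config(measured, 1_000_000, 500))  # 512
```

With these made-up numbers, 512 MB is cheaper than 128 MB despite having four times the memory. 1,024 MB costs only a few cents more while running about twice as fast, which may be the better trade-off for latency-sensitive paths.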

Cost Comparison Between Different Serverless Providers

The cost comparison between different serverless providers is highly dependent on the specific use case. Pricing models vary across providers, making a direct comparison challenging. However, it is possible to illustrate cost differences using a hypothetical use case. Consider a simple web application that processes image uploads. The application uses a serverless function to resize the images, store them in object storage, and generate thumbnails.

The following table provides a simplified cost comparison based on estimated pricing for a monthly workload of 1 million image uploads, with each image averaging 1 MB in size and an average function execution time of 200 milliseconds:

| Provider | Serverless Function (per invocation) | Object Storage (per GB) | Data Transfer (per GB) | Estimated Monthly Cost |
|---|---|---|---|---|
| AWS | $0.0000002 | $0.023 | $0.09 | $150 |
| Azure | $0.0000003 | $0.018 | $0.08 | $175 |
| Google Cloud | $0.0000004 | $0.020 | $0.10 | $180 |

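Note: These figures are estimates and may vary based on region, service level agreements, and specific usage patterns. The totals are not a simple sum of the three unit-price columns. For the stated workload, about 1,000 GB of images is stored for the month and assumed to be transferred out once. On that basis, the sum comes to roughly $113 for AWS, $98 for Azure, and $120 for Google Cloud. The totals shown should therefore be read as rough illustrations that may include other charges, such as per-GB-second compute duration, which depends on the memory allocated to the function.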

Analysis: Going by the totals column, AWS appears to be the most cost-effective option for this specific use case. Summing the listed unit prices alone, however, would favor Azure, and in either case the differences are relatively small. The optimal choice depends on factors beyond just cost, such as developer experience, integration with other services, and specific performance requirements.

Disclaimer: The data provided is for illustrative purposes only and does not constitute financial advice. Pricing is subject to change, and it is essential to consult the official pricing documentation of each cloud provider for the most accurate and up-to-date information.
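The arithmetic behind a comparison like this is easy to make explicit and to rerun with current prices. The sketch copies the unit prices from the table. It assumes (an assumption of this sketch, not stated in the table) that the roughly 1,000 GB of images is both stored for the month and transferred out once. Compute-duration charges and free tiers are ignored, so comparing the output with the totals column shows how much of each estimate the three listed unit prices account for.

```python
# Unit prices copied from the comparison table; illustrative, not current list prices.
PRICES = {
    "AWS": {"per_invocation": 0.0000002, "per_gb_stored": 0.023, "per_gb_transferred": 0.09},
    "Azure": {"per_invocation": 0.0000003, "per_gb_stored": 0.018, "per_gb_transferred": 0.08},
    "Google Cloud": {"per_invocation": 0.0000004, "per_gb_stored": 0.020, "per_gb_transferred": 0.10},
}


def summed_cost(prices: dict, invocations: int, stored_gb: float, transferred_gb: float) -> float:
    """Invocation + storage + transfer charges only; compute duration and free tiers are ignored."""
    return (invocations * prices["per_invocation"]
            + stored_gb * prices["per_gb_stored"]
            + transferred_gb * prices["per_gb_transferred"])


# Assumption: 1,000,000 uploads x 1 MB = about 1,000 GB, stored for the month and served once.
for provider, prices in PRICES.items():
    print(f"{provider}: ${summed_cost(prices, 1_000_000, 1_000, 1_000):.2f}")
# AWS: $113.20
# Azure: $98.30
# Google Cloud: $120.40
```

Treating the workload figures as parameters makes it easy to test other assumptions. For example, most thumbnails may never be downloaded, which would shrink the transfer term that dominates these totals.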

The Role of Serverless in Microservices Architectures

Serverless computing and microservices architectures represent a powerful combination for modern application development. This synergy allows for highly scalable, resilient, and cost-effective applications. The decomposition of applications into independent, deployable microservices aligns seamlessly with the event-driven, pay-per-use model of serverless, creating a dynamic and efficient operational paradigm.

Complementary Nature of Serverless Functions and Microservices

Serverless functions provide a natural fit for implementing the individual components of a microservices architecture. They enable developers to focus on business logic rather than infrastructure management.

- Event-Driven Execution: Serverless functions are inherently event-driven. This characteristic complements microservices, which often communicate asynchronously via events. For example, a user signing up for a service could publish an event that invokes separate serverless functions. These functions might send a welcome email, update a database, and initiate other related actions, each owned by a different microservice.

- Independent Deployment and Scaling: Each serverless function can be deployed and scaled independently. This allows for granular scaling of individual microservices based on their specific workload demands. If one microservice experiences a surge in traffic, only the corresponding serverless function instances are scaled, leaving other microservices unaffected.

- Reduced Operational Overhead: Serverless platforms handle the underlying infrastructure, including server provisioning, scaling, and patching. This frees developers from these operational tasks, allowing them to concentrate on building and deploying microservices more rapidly.

- Cost Optimization: The pay-per-use pricing model of serverless aligns with the granular nature of microservices. Developers only pay for the compute resources consumed by each function, resulting in significant cost savings, especially during periods of low traffic or when microservices are infrequently used.
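The sign-up example can be sketched as an event fan-out: one published event, several independent subscribers. The `EventBus` class below is a hypothetical in-memory stand-in for a managed bus such as Amazon EventBridge or Amazon SNS. Real buses deliver asynchronously, with retries, to separately deployed functions, whereas this sketch calls the handlers in-process and in order.

```python
from collections import defaultdict
from typing import Callable

Handler = Callable[[dict], None]


class EventBus:
    """In-memory stand-in for a managed event bus (illustration only)."""

    def __init__(self) -> None:
        self._subscribers: dict[str, list[Handler]] = defaultdict(list)

    def subscribe(self, event_type: str, handler: Handler) -> None:
        self._subscribers[event_type].append(handler)

    def publish(self, event_type: str, detail: dict) -> int:
        """Deliver the event to every subscriber; returns how many were invoked."""
        handlers = self._subscribers.get(event_type, [])
        for handler in handlers:
            handler(detail)  # each call stands in for one independent function invocation
        return len(handlers)


# Hypothetical microservice handlers, each of which would be a separate function.
sent_emails: list[str] = []
profiles: dict[str, dict] = {}


def send_welcome_email(detail: dict) -> None:
    sent_emails.append(detail["email"])  # stand-in for calling an email service


def create_profile(detail: dict) -> None:
    profiles[detail["user_id"]] = {"email": detail["email"]}  # stand-in for a database write


bus = EventBus()
bus.subscribe("UserSignedUp", send_welcome_email)
bus.subscribe("UserSignedUp", create_profile)
print(bus.publish("UserSignedUp", {"user_id": "u-1", "email": "ana@example.com"}))  # 2
```

The publisher does not know which services react to the event. Adding another consumer, such as an analytics function, means adding a subscription, not changing the sign-up code.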

Benefits of Serverless for Building and Deploying Microservices

Leveraging serverless for microservices offers several advantages, improving development velocity, operational efficiency, and overall application performance.

- Faster Time-to-Market: Serverless simplifies the development and deployment process. Developers can quickly build, test, and deploy microservices without managing servers, leading to faster time-to-market for new features and updates.

- Enhanced Scalability and Resilience: Serverless platforms automatically scale functions based on demand. This ensures that microservices can handle fluctuating workloads without manual intervention. The inherent fault tolerance of serverless platforms also improves the resilience of microservices, as failures in one function do not necessarily affect others.

- Improved Cost Efficiency: The pay-per-use pricing model of serverless optimizes costs by charging only for the actual compute time used by each function. This is particularly beneficial for microservices with variable workloads or infrequent usage.

- Simplified Operations: Serverless platforms automate many operational tasks, such as server provisioning, scaling, and patching. This reduces the operational burden on development teams, allowing them to focus on building and maintaining microservices.

- Increased Developer Productivity: By abstracting away infrastructure management, serverless enables developers to focus on writing code and delivering business value. This leads to increased productivity and faster innovation cycles.

Case Study: Successful Serverless Microservices Implementation

A frequently cited example of serverless computing complementing a microservices architecture is Netflix.

- Context: Netflix utilizes a microservices architecture, hosted on AWS, to manage its vast and complex streaming platform. The company has a massive user base, which generates enormous traffic, requiring a scalable and resilient system.

- Implementation: The bulk of Netflix’s microservices run on virtual machines and containers. The company has publicly described using AWS Lambda alongside them for event-driven operational tasks, such as:
  - processing media files as they are uploaded;
  - validating that backups completed;
  - handling instance deployments at scale;
  - monitoring its AWS resources for configuration and security changes.

  Each task is a function triggered by an event, such as a new file landing in storage or a change to a resource.

- Benefits:

- Scalability: Lambda scales these tasks automatically with the volume of events, such as a burst of new media files, without capacity planning.

- Resilience: Each function runs in isolation, so a failure in one automation task does not take down the others or the streaming service itself.

- Cost Optimization: Because billing is per request and execution time, infrequent jobs such as backup validation incur no compute charges while idle.

- Development Velocity: Teams can ship small, self-contained automation rules without provisioning or patching servers.

- Impact: Netflix’s use of Lambda illustrates a common pattern: serverless functions complementing, rather than replacing, a larger microservices platform by absorbing event-driven operational work. This makes it a useful reference for organizations considering similar implementations.

Serverless and the Future of DevOps

Serverless computing is fundamentally reshaping the landscape of DevOps practices, demanding a shift in operational strategies and toolsets. The dynamic nature of serverless architectures, characterized by event-driven functions and automated scaling, necessitates a proactive and highly automated approach to deployment, monitoring, and incident response. This transformation requires DevOps teams to embrace new methodologies and technologies to effectively manage the lifecycle of serverless applications.

Changing DevOps Practices in Serverless Environments

The transition to serverless mandates a significant evolution in traditional DevOps practices. This shift impacts areas such as infrastructure management, application deployment, and monitoring strategies. The ephemeral nature of serverless functions and the distributed architecture necessitate a more agile and automated approach.

- Infrastructure as Code (IaC): Serverless environments largely eliminate the need for traditional infrastructure provisioning and management. However, IaC remains critical for defining and managing the resources that support serverless applications, such as API gateways, databases, and storage buckets. Tools like AWS CloudFormation, Terraform, and the Serverless Framework are essential for defining infrastructure as code, enabling version control, and ensuring consistent deployments. For example, defining an AWS Lambda function, an Amazon API Gateway endpoint, and associated IAM roles as code allows for repeatable and automated deployments, reducing the risk of manual configuration errors.

- Automated Deployment Pipelines: Continuous Integration and Continuous Deployment (CI/CD) pipelines become even more critical in serverless environments. The rapid development cycles and frequent deployments inherent in serverless require robust CI/CD pipelines to automate the build, test, and deployment processes. This automation ensures that code changes are quickly and reliably delivered to production. For example, a CI/CD pipeline might automatically build and test a serverless function upon a code commit, and then deploy it to a staging environment for further testing before promoting it to production.

- Advanced Monitoring and Observability: Monitoring and observability are paramount in serverless. The distributed and event-driven nature of serverless applications makes it challenging to trace the flow of requests and identify performance bottlenecks. Comprehensive monitoring solutions that provide real-time metrics, distributed tracing, and log aggregation are crucial for understanding application behavior and troubleshooting issues. Tools like AWS CloudWatch, Datadog, and New Relic provide the necessary capabilities for monitoring serverless applications.

- Shift Left Security: Security considerations must be integrated early in the development lifecycle. Security vulnerabilities can be introduced in the code itself, in the configuration of serverless resources, or in the dependencies used by the application. Implementing security best practices, such as code scanning, dependency management, and vulnerability assessment, early in the CI/CD pipeline helps to identify and mitigate security risks before they reach production. For example, incorporating static code analysis tools into the CI pipeline can automatically detect and flag potential security vulnerabilities in the code.
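As a small illustration of shifting security left, here is a sketch of a CI check that fails the build when source files appear to contain hardcoded credentials. The two patterns are illustrative assumptions, not an exhaustive rule set:

- AWS access key IDs, which start with `AKIA` followed by 16 uppercase letters or digits;
- string literals assigned directly to `password`.

Dedicated scanners such as gitleaks, or a full static analysis tool, cover far more cases.

```python
import re
from pathlib import Path

# Illustrative patterns only: AWS access key IDs ("AKIA" + 16 uppercase letters or digits)
# and string literals assigned directly to `password` (case-insensitive).
PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "hardcoded_password": re.compile(r"""(?i)\bpassword\s*=\s*["'][^"']+["']"""),
}


def scan_text(text: str) -> list[tuple[int, str]]:
    """Return (line number, pattern name) for every suspicious line."""
    findings = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for name, pattern in PATTERNS.items():
            if pattern.search(line):
                findings.append((lineno, name))
    return findings


def main(paths: list[str]) -> int:
    """Scan files and return a process exit code; non-zero fails the CI step."""
    exit_code = 0
    for path in paths:
        for lineno, name in scan_text(Path(path).read_text(errors="ignore")):
            print(f"{path}:{lineno}: possible {name}")
            exit_code = 1
    return exit_code
```

Wired into a pipeline as a script that ends with `sys.exit(main(paths))`, any finding stops the build before deployment. Credentials that are read at runtime from a secrets manager, for example `password = get_secret("db")`, are not flagged.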

Integration of Serverless with CI/CD Pipelines

The seamless integration of serverless with CI/CD pipelines is crucial for realizing the full benefits of serverless computing, such as rapid deployment cycles, automated scaling, and reduced operational overhead. This integration involves automating the build, test, and deployment processes for serverless functions and associated resources.

- Build Automation: The build process for serverless applications typically involves packaging the code for serverless functions, managing dependencies, and creating deployment packages. CI/CD pipelines automate this process, ensuring that the code is built consistently and reliably. For instance, a CI pipeline might use tools like npm or Maven to manage dependencies and package the code into a deployable artifact.

- Automated Testing: Comprehensive testing is essential to ensure the quality and reliability of serverless applications. CI/CD pipelines automate the execution of various tests, including unit tests, integration tests, and end-to-end tests. This automated testing process helps to identify and fix bugs early in the development lifecycle. For example, a CI pipeline might run unit tests against individual serverless functions and integration tests to verify the interactions between different functions and external services.

- Automated Deployment: CI/CD pipelines automate the deployment of serverless functions and associated resources to different environments, such as development, staging, and production. This automation reduces the risk of manual errors and ensures that deployments are consistent and repeatable. For instance, a CD pipeline might use tools like AWS CodePipeline or Jenkins to deploy serverless functions to production after successful testing.

- Infrastructure Provisioning: IaC tools can be integrated into the CI/CD pipeline to automate the provisioning and management of infrastructure resources. This allows developers to define infrastructure as code and manage it alongside their application code, ensuring consistency and repeatability. For example, a CI/CD pipeline might use Terraform to provision an API Gateway and a database before deploying a serverless function that interacts with them.
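The build, test, provision, and deploy sequence above can be modeled as ordered stages that halt at the first failure. This is what keeps a failing integration test from reaching production. The `Pipeline` class and stage names below are hypothetical. In practice these stages would be defined in a tool such as AWS CodePipeline or a Jenkins pipeline rather than in application code.

```python
from dataclasses import dataclass, field
from typing import Callable

Step = Callable[[], bool]  # returns True on success


@dataclass
class Pipeline:
    """Runs named stages in order and halts at the first failure."""
    stages: list[tuple[str, Step]] = field(default_factory=list)

    def stage(self, name: str, step: Step) -> "Pipeline":
        self.stages.append((name, step))
        return self  # allow chaining

    def run(self) -> list[str]:
        completed: list[str] = []
        for name, step in self.stages:
            if not step():
                print(f"stage {name!r} failed; later stages skipped")
                break
            completed.append(name)
        return completed


# Hypothetical stages; real ones would invoke build tools, test runners, Terraform, and deploy tools.
pipeline = (
    Pipeline()
    .stage("build", lambda: True)
    .stage("unit-tests", lambda: True)
    .stage("provision-infrastructure", lambda: True)
    .stage("deploy-staging", lambda: True)
    .stage("integration-tests", lambda: False)  # simulate a failing test run
    .stage("deploy-production", lambda: True)
)
print(pipeline.run())
# ['build', 'unit-tests', 'provision-infrastructure', 'deploy-staging']
```

Provisioning runs before deployment so that the function’s dependencies, such as an API gateway or a database, exist when it is deployed.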

Importance of Automation in Serverless Deployments and Management

Automation is a cornerstone of successful serverless deployments and management. Serverless architectures inherently benefit from automation due to their dynamic nature, scalability, and event-driven characteristics. Automation streamlines various aspects of the serverless lifecycle, reducing operational overhead and improving efficiency.

- Automated Scaling: Serverless platforms automatically scale functions based on demand, but automation is crucial for managing the scaling configuration. This includes setting concurrency limits, configuring auto-scaling policies, and monitoring function performance. Automated scaling ensures that applications can handle traffic spikes without manual intervention. For example, Application Auto Scaling can adjust an AWS Lambda function’s provisioned concurrency based on CloudWatch utilization metrics, so the amount of pre-initialized capacity tracks incoming request volume.

- Automated Rollbacks: Deploying new versions of serverless functions can sometimes introduce errors. Automated rollbacks revert to a previous, known-good version quickly. For example, a deployment can shift a small share of traffic to the new version and watch its error-rate alarms. If an alarm fires, all traffic switches back to the previous version.

- Automated Monitoring and Alerting: Implementing automated monitoring and alerting is essential for proactive issue detection and resolution. Automation tools can collect metrics, logs, and traces, and trigger alerts when predefined thresholds are exceeded. For example, setting up alerts in CloudWatch that trigger when a Lambda function’s error rate exceeds a certain threshold can help identify and address issues before they impact users.

- Automated Configuration Management: Serverless applications often rely on configuration settings, such as environment variables and secrets. Automation tools can manage these configurations, ensuring that they are securely stored, consistently applied, and updated as needed. For example, AWS Systems Manager Parameter Store can hold configuration values centrally, with sensitive ones stored as encrypted SecureString parameters. This keeps them out of function code and deployment packages while they remain accessible to functions at runtime.
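Automated rollbacks and error-rate alerting meet in canary deployments. A new version receives a small share of traffic, is promoted if it stays healthy, and is rolled back otherwise. The sketch below models only the decision logic. The `Alias` class is a hypothetical stand-in for a function alias, and the 1% threshold and 100-invocation minimum are assumptions. Managed services such as AWS CodeDeploy provide traffic shifting with alarm-based rollback for Lambda aliases.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Alias:
    """Hypothetical stand-in for an alias that routes traffic between two versions."""
    stable: str
    canary: Optional[str] = None
    canary_weight: float = 0.0


def evaluate_canary(alias: Alias, errors: int, invocations: int,
                    max_error_rate: float = 0.01, min_invocations: int = 100) -> str:
    """Promote the canary if its error rate stays under the threshold; otherwise roll back."""
    if alias.canary is None:
        return "no canary"
    if invocations < min_invocations:
        return "waiting for more data"  # too few samples to judge
    if errors / invocations > max_error_rate:
        alias.canary, alias.canary_weight = None, 0.0  # all traffic back to stable
        return "rolled back"
    alias.stable, alias.canary, alias.canary_weight = alias.canary, None, 0.0
    return "promoted"


release = Alias(stable="v7", canary="v8", canary_weight=0.1)
print(evaluate_canary(release, errors=12, invocations=400))  # rolled back (3% > 1%)
print(release.stable)  # v7
```

Requiring a minimum number of invocations avoids promoting or rolling back on a handful of requests, where a single error would swing the rate dramatically.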

Challenges and Limitations of Serverless Computing

Serverless computing, despite its advantages, presents several challenges that can impact its adoption and effectiveness. Understanding these limitations is crucial for organizations considering or already using serverless architectures. These challenges range from vendor lock-in and cold starts to operational complexities and security concerns.

Vendor Lock-in in Serverless Environments

Vendor lock-in is a significant concern in serverless environments due to the proprietary nature of many serverless platforms. This dependence can restrict flexibility and increase costs.

- Platform-Specific Features: Serverless providers often offer unique features and services tightly integrated with their platform. Migrating to another provider can be complex, requiring significant code refactoring and architectural changes. For instance, consider a function written against AWS-specific event formats and SDK calls, such as one that processes Amazon S3 event notifications and writes results back with the AWS SDK. It would need substantial modifications to work on Google Cloud Functions or Azure Functions.

- Service Dependencies: Serverless applications frequently rely on a provider’s ecosystem of services (databases, storage, API gateways, etc.). Moving these dependencies to a different provider is often not straightforward and can involve data migration, service re-configuration, and potential downtime. Consider a serverless application heavily utilizing AWS DynamoDB; migrating to Google Cloud Datastore would require data transfer and code adjustments to interface with the new database.

- Pricing Models: Different providers have varying pricing models, which can lead to cost unpredictability. Switching providers might necessitate substantial adjustments to resource allocation and application architecture to optimize costs. A company might find its costs escalating unexpectedly if it migrates from a provider with generous free tiers to one with different pricing structures, particularly under high traffic loads.
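One way to contain lock-in is to keep provider SDK calls behind a narrow interface (often called a ports-and-adapters or hexagonal design). Business logic then depends only on that interface. The sketch below uses hypothetical names, with an in-memory adapter standing in for real ones that would wrap Amazon S3, Azure Blob Storage, or Google Cloud Storage. This does not remove lock-in from event formats, IAM, or managed services, but it confines storage-specific code to a single adapter per provider.

```python
from typing import Protocol


class BlobStore(Protocol):
    """The only storage operations the business logic is allowed to use."""

    def put(self, key: str, data: bytes) -> None: ...

    def get(self, key: str) -> bytes: ...


class InMemoryBlobStore:
    """Test double; a production adapter would wrap one provider's storage SDK."""

    def __init__(self) -> None:
        self._items: dict[str, bytes] = {}

    def put(self, key: str, data: bytes) -> None:
        self._items[key] = data

    def get(self, key: str) -> bytes:
        return self._items[key]


def save_thumbnail(store: BlobStore, image_id: str, thumbnail: bytes) -> str:
    """Business logic: depends on the BlobStore protocol, not on any provider SDK."""
    key = f"thumbnails/{image_id}.jpg"
    store.put(key, thumbnail)
    return key


store = InMemoryBlobStore()
print(save_thumbnail(store, "42", b"thumbnail-bytes"))  # thumbnails/42.jpg
```

Switching providers then means writing and testing a new adapter rather than editing every function that stores data.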

Addressing the Cold Start Problem

The cold start problem, where functions take time to initialize, is a key performance bottleneck in serverless computing. Several strategies can mitigate this issue.

- Provisioned Concurrency: Many providers offer provisioned concurrency, which pre-warms function instances to reduce latency. This guarantees a certain number of instances are ready to serve requests, thereby minimizing cold starts. For example, AWS Lambda’s provisioned concurrency allows users to specify the number of concurrent instances to keep warm.

- Keep-Alive Techniques: Implementing keep-alive mechanisms, such as scheduled function invocations or “ping” requests, can prevent functions from becoming idle and cold. A simple cron job can be set up to trigger a function every few minutes, keeping it active. However, a single ping typically keeps only one instance warm, so this technique does little for sudden bursts of concurrent requests; provisioned concurrency is better suited to those.

- Code Optimization: Optimizing function code and dependencies can reduce startup time. This includes minimizing the size of deployment packages, using optimized programming languages and frameworks, and lazy-loading dependencies. Reducing the size of the function package from 100MB to 10MB can significantly decrease cold start times.

- Choosing Appropriate Languages and Runtimes: Some runtimes start faster than others. Node.js, Python, and compiled languages such as Go typically initialize faster than JVM-based runtimes such as Java, although features like AWS Lambda SnapStart can narrow that gap.

Illustration of Serverless Limitations:

Imagine a serverless e-commerce platform experiencing a sudden surge in traffic during a flash sale. The platform uses AWS Lambda functions triggered by API Gateway. The scenario exposes several limitations. Initially, only a few Lambda instances are active. When the surge occurs, new instances are rapidly created to handle the increased load, but each new instance incurs a cold start, delaying the requests it serves while it initializes.

This results in latency spikes, leading to a degraded user experience and potentially lost sales. Furthermore, the platform’s reliance on AWS services creates vendor lock-in; migrating to a different provider to address these issues requires significant effort. The pricing model of AWS Lambda, which charges per invocation and duration, adds to the cost unpredictability, as the surge in traffic directly impacts the expenses.

This illustrates the critical need to address cold starts, manage vendor lock-in, and optimize costs in serverless environments for optimal performance and scalability.

Concluding Thoughts

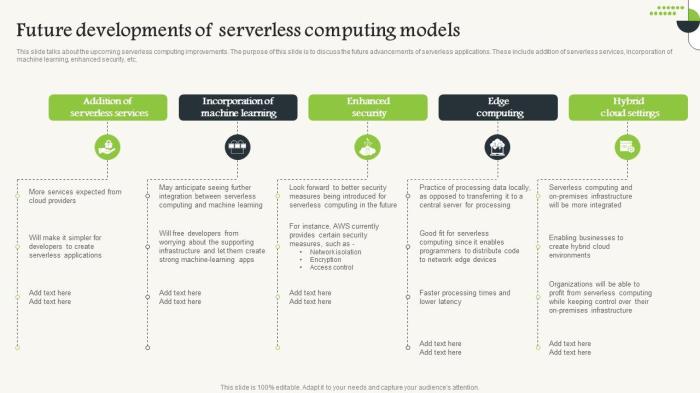

In conclusion, the future of serverless computing promises continued innovation and expansion. From advancements in AI integration to the refinement of DevOps practices, serverless is evolving to meet the demands of modern application development. While challenges such as vendor lock-in and the cold start problem persist, ongoing developments and the increasing adoption across industries suggest a bright future. The continued focus on cost optimization, enhanced security measures, and developer-friendly tools will further solidify serverless computing as a key paradigm in the technology landscape.

Essential FAQs

What are the main benefits of using serverless computing?

Serverless computing offers several key advantages, including cost savings through pay-per-use pricing, automatic scalability that adjusts to demand, reduced operational overhead by offloading infrastructure management, and faster deployment cycles. These benefits contribute to increased agility and focus on application development rather than server administration.

What are the common challenges of serverless computing?

Common challenges include vendor lock-in, the cold start problem (where functions can experience latency on initial invocation), debugging and monitoring complexities due to distributed architectures, and the potential for increased complexity in application design. Security considerations also require careful attention.

How does serverless impact DevOps practices?

Serverless significantly alters DevOps practices by automating infrastructure provisioning, enabling faster CI/CD pipelines, and shifting the focus from server management to code deployment and application monitoring. It encourages a more streamlined and agile development process, promoting continuous integration and continuous delivery.

Is serverless suitable for all types of applications?

While serverless is ideal for many applications, it may not be suitable for all. Applications that require consistently high throughput or very low-latency responses, or that involve complex state management, might benefit more from traditional infrastructure. However, serverless is rapidly evolving to address these limitations, expanding its applicability.