Understanding the business value of a workload is crucial in today’s dynamic IT landscape. It’s not merely about running applications; it’s about aligning IT infrastructure with core business objectives. The sections that follow examine how managing and optimizing workloads effectively contributes to revenue generation, cost reduction, and enhanced customer satisfaction.

This document explores various facets of workload management, from defining a workload and identifying its characteristics to strategies for optimization, security, and scalability. It also examines the role of automation, disaster recovery, and cost management in maximizing the value derived from your workloads. The ultimate goal is to provide actionable insights and a framework for assessing and improving the business impact of your IT investments.

Defining “Workload” and its Context

Understanding the concept of a “workload” is crucial for evaluating its business value. In the realm of IT infrastructure, a workload represents a specific task or set of tasks performed by a system. This encompasses everything from running a simple web application to managing complex data processing pipelines. The characteristics of these workloads directly impact the resources required and, consequently, the value they generate for the business.

Defining a Workload in IT Infrastructure

A workload in IT infrastructure can be defined as the execution of a specific application or set of processes that consume resources, such as CPU, memory, storage, and network bandwidth, to achieve a defined business objective. These objectives can range from serving web pages to analyzing large datasets. The efficient management and optimization of these workloads are key to maximizing their contribution to the overall business value.

Examples of Different Types of Workloads

Different types of workloads exist, each with unique characteristics and resource demands. These differences directly influence how they impact business operations and, therefore, their value.

- Database Workloads: These workloads involve the storage, retrieval, and management of data. They often require high storage capacity, fast input/output (I/O) performance, and robust security measures. For example, a retail company’s customer database requires continuous availability and rapid transaction processing.

- Web Application Workloads: These workloads serve web content and handle user interactions. They typically demand high network bandwidth, scalability to handle fluctuating user traffic, and efficient processing capabilities. Consider a popular e-commerce website, which needs to respond quickly to customer requests and handle a large number of concurrent users.

- Batch Processing Workloads: These workloads involve processing large volumes of data in scheduled batches. They often require significant compute resources and efficient data transfer capabilities. An example is a financial institution’s end-of-day transaction processing, which involves consolidating and analyzing a large volume of financial data.

- Containerized Workloads: These workloads consist of applications packaged in containers, which offer portability, scalability, and efficient resource utilization. Examples include microservices-based applications deployed across various environments.

- Machine Learning (ML) and Artificial Intelligence (AI) Workloads: These workloads involve training and deploying ML models. They require significant compute power, often provided by GPUs, and specialized software libraries. For instance, an ML-driven fraud detection system at a bank requires continuous model training and real-time analysis of financial transactions.

How Workload Characteristics Influence Business Value

The characteristics of a workload, such as its compute, storage, and network requirements, directly impact its business value. Understanding these relationships is crucial for optimizing resource allocation and maximizing the return on investment.

- Compute: The computational demands of a workload directly affect its performance and efficiency. Workloads requiring high compute power, such as complex simulations or data analysis, benefit from optimized CPU utilization and access to powerful processing units (e.g., GPUs). Optimizing compute resources can reduce processing times, improve user experience, and enable faster decision-making.

- Storage: The storage requirements of a workload impact data access speed, storage costs, and data availability. Workloads involving large datasets or frequent data access benefit from fast storage solutions (e.g., SSDs) and efficient data management strategies. Consider a media streaming service, which requires vast storage capacity and high-speed data delivery to provide a seamless user experience.

- Network: The network demands of a workload affect its ability to communicate with other systems and users. Workloads such as video streaming or real-time data processing benefit from high-bandwidth, low-latency networks and efficient data transfer protocols. For example, a global financial trading platform needs a high-speed, reliable network to ensure timely transaction execution and access to market data.
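To make the link between workload characteristics and resource demands concrete, a workload can be modeled as a set of average resource demands compared against the capacity of the host it runs on. The sketch below is a minimal illustration, not a sizing tool: the `Workload` and `Capacity` classes, the four resource dimensions, and the sample figures are all hypothetical, and real profiles should come from monitoring data. The resource with the highest utilization is treated as the likely bottleneck, which is usually where optimization pays off first.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    """Average resource demands of a workload (hypothetical model)."""
    name: str
    cpu_cores: float      # compute
    memory_gb: float      # memory footprint
    storage_iops: float   # storage I/O operations per second
    network_mbps: float   # network throughput


@dataclass
class Capacity:
    """Resources available on the host or pool running the workload."""
    cpu_cores: float
    memory_gb: float
    storage_iops: float
    network_mbps: float


def utilization(w: Workload, c: Capacity) -> dict:
    """Fraction of each resource dimension the workload consumes."""
    return {
        "compute": w.cpu_cores / c.cpu_cores,
        "memory": w.memory_gb / c.memory_gb,
        "storage": w.storage_iops / c.storage_iops,
        "network": w.network_mbps / c.network_mbps,
    }


def dominant_resource(w: Workload, c: Capacity) -> str:
    """The most heavily used resource is the likely bottleneck."""
    u = utilization(w, c)
    return max(u, key=u.get)


# Hypothetical figures for a database workload on a single host.
host = Capacity(cpu_cores=32, memory_gb=128, storage_iops=50_000, network_mbps=10_000)
customer_db = Workload("customer-db", cpu_cores=8, memory_gb=64,
                       storage_iops=40_000, network_mbps=500)
print(dominant_resource(customer_db, host))  # storage
```

Here the database uses 80% of the host's storage I/O but only 25% of its CPU, which matches the earlier point that database workloads tend to be I/O-bound.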

Identifying Business Objectives and Workload Alignment

Understanding the business value of a workload necessitates a clear understanding of the business objectives it supports. Aligning workloads with these objectives is crucial for ensuring that IT investments contribute directly to the success of the organization. This section explores common business objectives, examines how different workload types contribute to these objectives, and provides a framework for assessing alignment.

Common Business Objectives Supported by Workloads

Workloads are designed to achieve various business objectives. These objectives often intersect and are interconnected, forming a comprehensive strategy for business growth and sustainability.

- Revenue Generation: Workloads can directly or indirectly contribute to increasing revenue. For example, e-commerce platforms, customer relationship management (CRM) systems, and marketing automation tools are designed to drive sales, improve customer acquisition, and increase customer lifetime value.

- Cost Reduction: Optimizing operational efficiency is a key objective, and workloads play a vital role in it. Cloud-hosted services, automation tools, and data analytics platforms can help reduce operational costs by streamlining processes, improving resource utilization, and identifying cost-saving opportunities.

- Customer Satisfaction: Enhancing the customer experience is a critical objective for businesses. Workloads such as customer service applications, recommendation engines, and personalized content delivery systems contribute to improving customer satisfaction by providing better service, relevant information, and personalized experiences.

- Risk Mitigation: Workloads can be implemented to reduce risk. Examples include disaster recovery solutions, cybersecurity systems, and compliance monitoring tools. These help protect against data breaches, system failures, and regulatory non-compliance.

- Innovation and Competitive Advantage: Workloads can support innovation by enabling the development of new products and services. They can also provide a competitive advantage by facilitating faster time-to-market, improved decision-making, and the ability to adapt to changing market conditions. For instance, research and development workloads, machine learning platforms, and data analytics tools can drive innovation.

- Compliance and Governance: Workloads are also used to ensure compliance with regulations and internal policies. Compliance monitoring systems, data governance platforms, and audit trails are examples of workloads designed to meet these objectives.

Workload Alignment with Business Objectives

Different workload types contribute to achieving the business objectives mentioned above in various ways. Understanding these alignments is critical for effective workload management and optimization.

- E-commerce Platforms: These directly support revenue generation by facilitating online sales. They also contribute to customer satisfaction by providing a user-friendly shopping experience and supporting customer service functions.

- CRM Systems: CRM systems enhance customer satisfaction and contribute to revenue generation by improving customer relationship management, streamlining sales processes, and providing insights into customer behavior.

- Data Warehouses and Business Intelligence (BI) Tools: These workloads provide data-driven insights that support informed decision-making, impacting revenue generation, cost reduction, and innovation. They also support compliance and governance through data integrity and reporting capabilities.

- Cloud Computing: Cloud services can support cost reduction through infrastructure optimization and improve business agility, contributing to revenue generation and innovation.

- Customer Service Applications: These are essential for enhancing customer satisfaction by providing efficient and effective customer support. They can also contribute to revenue generation by resolving issues quickly and improving customer retention.

- Supply Chain Management (SCM) Systems: SCM systems support cost reduction by optimizing supply chain operations and contribute to customer satisfaction by ensuring timely product delivery.

- Cybersecurity Systems: Cybersecurity systems mitigate risks, protecting data and systems, which supports business continuity and compliance.

- Manufacturing Execution Systems (MES): MES help optimize production processes, reduce costs, and improve product quality, contributing to cost reduction and customer satisfaction.

Framework for Assessing Workload Alignment with Business Goals

A structured framework is crucial for assessing how well workloads align with business goals. This framework ensures that IT investments are directly contributing to the organization’s success. The framework involves several key steps:

- Define Business Objectives: Clearly articulate the specific business objectives the organization aims to achieve. This involves identifying key performance indicators (KPIs) that measure success.

- Identify Workloads: Catalog all existing workloads and their functionalities. Understand the purpose of each workload and the resources it consumes.

- Map Workloads to Objectives: Determine how each workload supports the defined business objectives. This involves identifying the direct and indirect contributions of each workload to the KPIs.

- Assess Alignment: Evaluate the degree of alignment between workloads and business objectives. This can be done qualitatively (e.g., through surveys or interviews) and quantitatively (e.g., by measuring the impact of workloads on KPIs).

- Prioritize and Optimize: Prioritize workloads based on their alignment with business objectives. Optimize workloads that are critical for achieving these objectives, and consider decommissioning or re-architecting those that do not align well.

- Monitor and Iterate: Continuously monitor the performance of workloads and their impact on business objectives. Regularly review and update the alignment assessment as business needs evolve.
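The mapping, assessment, and prioritization steps can be sketched as a simple weighted scoring model. This is an illustration rather than a standard method: each objective carries a hypothetical weight reflecting business priority, each workload receives a hypothetical 0 to 3 contribution score per objective from the mapping step, and workloads scoring below an assumed review threshold are flagged as candidates for re-architecting or decommissioning.

```python
# Hypothetical weights for the business objectives (they sum to 1.0).
OBJECTIVE_WEIGHTS = {
    "revenue": 0.4,
    "customer_satisfaction": 0.3,
    "risk_mitigation": 0.2,
    "cost_reduction": 0.1,
}

# Hypothetical results of the mapping step: 0 = no contribution, 3 = strong.
WORKLOAD_SCORES = {
    "ecommerce_platform": {"revenue": 3, "customer_satisfaction": 3},
    "crm": {"revenue": 2, "customer_satisfaction": 2},
    "backup_service": {"risk_mitigation": 3},
    "legacy_report_server": {"cost_reduction": 1},
}

MAX_SCORE = 3


def alignment_score(scores: dict) -> float:
    """Weighted contribution across objectives, normalized to 0.0-1.0."""
    total = sum(OBJECTIVE_WEIGHTS[obj] * s for obj, s in scores.items())
    return total / MAX_SCORE


def prioritize(workloads: dict, review_threshold: float = 0.15) -> list:
    """Rank workloads by alignment; flag weakly aligned ones for review."""
    results = []
    for name, scores in workloads.items():
        score = alignment_score(scores)
        results.append((name, round(score, 3), score < review_threshold))
    # Highest alignment first.
    return sorted(results, key=lambda r: r[1], reverse=True)


for name, score, needs_review in prioritize(WORKLOAD_SCORES):
    print(f"{name:22} {score:.3f} {'review' if needs_review else 'keep'}")
```

Because the weights encode business priorities, changing them (for instance, lowering the weight of risk mitigation) can reorder the list or flag a different workload, so revisiting them belongs in the "Monitor and Iterate" step.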

Example: A retail company’s primary business objective is to increase online sales, and it relies on an e-commerce platform to do so. The alignment assessment would involve:

- Defining the Objective: Increase online sales by 15% within the next year (KPI: Revenue from online sales).

- Identifying the Workload: E-commerce platform.

- Mapping: The platform directly supports online sales by facilitating transactions, managing inventory, and providing a user-friendly experience.

- Assessing: Measuring the platform’s impact on online sales revenue, customer conversion rates, and average order value.

- Prioritizing: Prioritizing the platform for optimization (e.g., improving site performance, enhancing the user experience).

- Monitoring: Continuously tracking online sales revenue and customer satisfaction scores.

Measuring Workload Performance and its Impact

Understanding the business value of a workload necessitates rigorous measurement of its performance and a clear understanding of how that performance impacts business outcomes. This involves identifying key performance indicators (KPIs), analyzing their trends, and correlating them with tangible business benefits. Effective monitoring and analysis enable informed decision-making, resource optimization, and ultimately, improved profitability.

Key Performance Indicators for Workload Performance

Defining and tracking relevant KPIs is crucial for gauging workload effectiveness. These metrics provide insights into various aspects of performance, from user experience to resource utilization. The selection of appropriate KPIs depends on the specific workload and its objectives.

- Response Time: This measures the time it takes for a workload to respond to a request. It’s a critical indicator of user experience, especially for interactive applications. For instance, a slow response time can lead to user frustration and abandonment.

- Throughput: Throughput represents the amount of work a workload can process within a specific timeframe, often measured in transactions per second (TPS) or requests per minute (RPM). Higher throughput indicates greater efficiency and the ability to handle increased demand.

- Error Rates: Error rates quantify the frequency of failures or errors encountered by the workload. High error rates signal potential issues with the application code, infrastructure, or underlying data. Common error rate metrics include the percentage of failed requests or the number of errors per minute.

- Resource Utilization: This assesses how efficiently the workload utilizes resources like CPU, memory, storage, and network bandwidth. Monitoring resource utilization helps identify bottlenecks and opportunities for optimization. High resource utilization, especially sustained at 100%, could indicate a need for scaling or optimization.

- Availability: Availability measures the percentage of time the workload is operational and accessible to users. High availability is crucial for business continuity and ensuring users can access services when needed. This is often expressed as a percentage, such as “99.99% uptime.”
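The KPIs above can be computed from raw request records. The sketch below assumes a hypothetical log in which each request has a latency and an HTTP status code, and it treats 5xx responses as failures. It reports a nearest-rank 95th-percentile response time, throughput over an observation window, and error rate, plus the downtime budget implied by an availability target (99.99% over a year allows roughly 52.6 minutes of downtime).

```python
import math
from dataclasses import dataclass


@dataclass
class Request:
    latency_ms: float
    status: int  # HTTP status code; 5xx is treated as a failure (assumption)


def percentile(values, p):
    """Nearest-rank percentile, with p between 0 and 100."""
    ordered = sorted(values)
    rank = math.ceil(p * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def kpis(requests, window_seconds):
    """Response time, throughput, and error rate for one observation window."""
    failures = sum(1 for r in requests if r.status >= 500)
    return {
        "p95_response_ms": percentile([r.latency_ms for r in requests], 95),
        "throughput_rps": len(requests) / window_seconds,
        "error_rate_pct": 100 * failures / len(requests),
    }


def availability_pct(uptime_s, total_s):
    """Share of the period during which the workload was available."""
    return 100 * uptime_s / total_s


def allowed_downtime_minutes(target_pct, period_days=365):
    """Downtime budget implied by an availability target."""
    return (1 - target_pct / 100) * period_days * 24 * 60


# Hypothetical 10-second window: 20 requests, one slow success and one failure.
sample = [Request(120, 200)] * 18 + [Request(480, 200), Request(900, 503)]
print(kpis(sample, window_seconds=10))
print(round(allowed_downtime_minutes(99.99), 1))  # 52.6
```

A percentile is used instead of an average because a small share of slow requests can frustrate users while barely moving the mean.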

Translating Improved Workload Performance into Business Benefits

Enhanced workload performance directly translates into a variety of tangible business benefits. These benefits are realized through improved efficiency, reduced costs, and increased customer satisfaction. A well-performing workload contributes significantly to the overall success of the business.

- Increased Revenue: Faster response times and higher throughput can lead to increased sales and revenue, especially for e-commerce platforms and online services. For example, Amazon’s focus on fast loading times and quick checkout processes directly contributes to its revenue generation.

- Reduced Costs: Optimized resource utilization and efficient processing can lower operational costs. This includes reduced infrastructure expenses, decreased energy consumption, and lower labor costs associated with troubleshooting and maintenance.

- Improved Customer Satisfaction: Faster response times, higher availability, and fewer errors lead to a better user experience, which, in turn, increases customer satisfaction and loyalty. Happy customers are more likely to return and recommend the service.

- Enhanced Productivity: Efficient workloads streamline internal processes, leading to increased employee productivity. This is particularly important for internal applications used by employees to perform their daily tasks.

- Better Decision-Making: Workload performance data provides valuable insights that enable data-driven decision-making. This can lead to improved resource allocation, more effective marketing campaigns, and better product development.

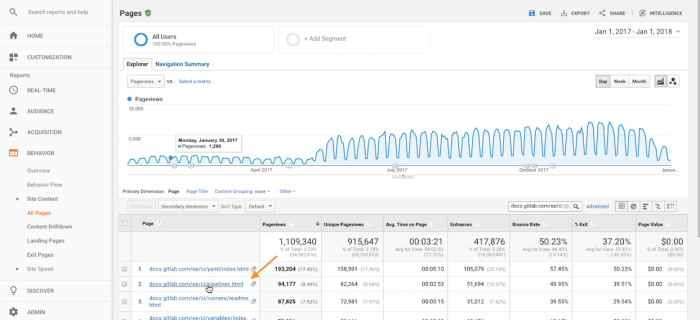

Workload Performance Metrics and Business Outcomes

The relationship between workload performance metrics and business outcomes can be quantified and tracked. The following table gives illustrative examples of how improvements in key performance indicators can translate into business results.

| Workload Performance Metric | Improvement | Impact on Business Outcome | Example |
|---|---|---|---|
| Response Time | Reduced by 20% | Increased Customer Conversion Rate | An e-commerce site sees a 15% increase in sales after reducing page load times. |
| Throughput | Increased by 15% | Higher Order Fulfillment Capacity | A logistics company processes 10% more orders per day with improved warehouse management system performance. |
| Error Rate | Reduced by 50% | Decreased Customer Support Costs | A SaaS provider reduces customer support tickets by 20% due to fewer application errors. |
| Resource Utilization (CPU) | Reduced by 10% | Lower Infrastructure Costs | A cloud-based application reduces monthly server costs by 8% through optimized resource allocation. |

Workload Optimization Strategies and Business Value

Optimizing workloads is crucial for maximizing the return on investment in IT infrastructure and ensuring that business objectives are met efficiently. This involves a systematic approach to improving performance, reducing costs, and enhancing overall business agility. By implementing the right strategies, organizations can unlock significant business value.

Resource Allocation Strategies

Effective resource allocation involves strategically distributing computing resources, such as CPU, memory, and storage, to meet the demands of various workloads. Proper resource allocation ensures that each workload receives the resources it needs to function optimally without over-provisioning or under-provisioning. Common approaches include:

- Dynamic Resource Allocation: This involves automatically adjusting resources based on real-time demand. For example, cloud-based services often use auto-scaling to allocate more virtual machines (VMs) to handle peak traffic and scale down during periods of low activity.

- Resource Pooling: Resources are pooled together and allocated as needed. This allows for efficient utilization, as idle resources from one workload can be used by another.

- Capacity Planning: This involves forecasting future resource needs based on anticipated growth and workload demands. This ensures that the infrastructure can handle future requirements.
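Dynamic resource allocation is often implemented as target tracking: the number of instances is scaled in proportion to how far a metric is from its target. The sketch below follows the proportional rule documented for the Kubernetes Horizontal Pod Autoscaler, desired = ceil(current × currentMetric / targetMetric), but the function itself, its minimum and maximum bounds, and its 10% tolerance band are illustrative assumptions rather than any platform's actual API.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=20, tolerance=0.1):
    """Proportional target-tracking rule (bounds and tolerance are assumptions)."""
    ratio = current_metric / target_metric
    # Ignore small deviations so the pool does not flap between sizes.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Clamp to the configured bounds.
    return max(min_replicas, min(max_replicas, desired))


print(desired_replicas(4, current_metric=90, target_metric=60))  # 6: scale out at peak
print(desired_replicas(4, current_metric=20, target_metric=60))  # 2: scale in when idle
print(desired_replicas(4, current_metric=63, target_metric=60))  # 4: within tolerance
```

The upper bound doubles as a simple cost guardrail, while capacity planning determines whether that bound is high enough for forecast growth.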

The impact of resource allocation on business value is significant:

- Cost Reduction: Efficient resource allocation minimizes the need for over-provisioning, reducing capital expenditures (CAPEX) on hardware and operational expenditures (OPEX) on energy and maintenance.

- Improved Performance: Properly allocated resources ensure that workloads receive the necessary processing power, leading to faster response times and improved user experience.

- Increased Efficiency: Resource pooling and dynamic allocation optimize resource utilization, minimizing waste and maximizing the throughput of workloads.

Code Optimization Strategies

Code optimization focuses on improving the efficiency and performance of the software code that runs workloads. This involves techniques such as refactoring, algorithm optimization, and efficient data structures. Consider these code optimization techniques:

- Refactoring: Restructuring existing code to improve readability, maintainability, and performance without changing its external behavior.

- Algorithm Optimization: Choosing the most efficient algorithms for specific tasks. For example, using a more efficient sorting algorithm can significantly reduce processing time for large datasets.

- Data Structure Optimization: Selecting the most appropriate data structures for storing and retrieving data. For example, using a hash table for quick lookups can improve performance compared to linear search.

- Caching: Storing frequently accessed data in a cache to reduce the need to retrieve it from slower storage, improving response times.
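The data structure and caching techniques above can be illustrated in a few lines of Python. The sketch compares a linear scan of a list with a lookup in a dict (Python's built-in hash table) and uses the standard library's `functools.lru_cache` to memoize an expensive call. The customer records and the `slow_lookup` function are hypothetical stand-ins for real data and a real database or API call.

```python
from functools import lru_cache


def find_customer_linear(customers, customer_id):
    """O(n): scans the list until a matching record is found."""
    for customer in customers:
        if customer["id"] == customer_id:
            return customer
    return None


def build_index(customers):
    """One O(n) pass builds a dict (hash table) keyed by customer id."""
    return {customer["id"]: customer for customer in customers}


def find_customer_indexed(index, customer_id):
    """Average O(1) lookup in the hash table."""
    return index.get(customer_id)


lookup_calls = {"count": 0}


@lru_cache(maxsize=1024)
def slow_lookup(key):
    """Hypothetical stand-in for an expensive database or API call."""
    lookup_calls["count"] += 1
    return key.upper()


customers = [{"id": i, "name": f"customer-{i}"} for i in range(10_000)]
index = build_index(customers)
assert find_customer_linear(customers, 9_999) is find_customer_indexed(index, 9_999)

slow_lookup("sku-1")
slow_lookup("sku-1")  # served from the cache; the function body does not run again
print(lookup_calls["count"])  # 1
```

Building the index costs one pass over the data, so it pays off when the same collection is searched many times; caching likewise only helps when the cached result can tolerate being slightly stale.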

The benefits of code optimization include:

- Faster Application Performance: Optimized code executes more quickly, resulting in faster response times and improved user experience.

- Reduced Resource Consumption: Efficient code requires fewer CPU cycles, less memory, and less I/O, leading to lower infrastructure costs.

- Improved Scalability: Optimized code can handle larger workloads and higher traffic volumes without performance degradation.

Infrastructure Upgrade Strategies

Infrastructure upgrades involve modernizing the underlying hardware and software that supports workloads. This can include upgrading servers, storage systems, network components, and operating systems. Consider the following infrastructure upgrade approaches:

- Server Upgrades: Replacing older servers with newer models that offer improved processing power, memory capacity, and energy efficiency.

- Storage Upgrades: Implementing faster storage solutions, such as solid-state drives (SSDs) or NVMe drives, to improve data access times.

- Network Upgrades: Upgrading network infrastructure to support higher bandwidth and lower latency, improving communication speeds between servers and clients.

- Software Updates: Keeping software up-to-date with the latest versions and security patches to improve performance and security.

Infrastructure upgrades contribute to business value in several ways:

- Enhanced Performance: Upgraded infrastructure can handle workloads more efficiently, leading to faster response times and improved user experience.

- Increased Capacity: Upgraded systems can handle larger workloads and higher traffic volumes.

- Improved Reliability: Newer hardware and software often have improved reliability and fault tolerance.

- Reduced Operational Costs: Upgraded infrastructure can be more energy-efficient and require less maintenance.

Cost-Benefit Analysis of Workload Optimization

A cost-benefit analysis is essential for evaluating the financial viability of workload optimization strategies. This involves comparing the costs of implementing optimization measures with the expected benefits. The following are the steps involved in a cost-benefit analysis:

- Identify Costs: This includes the costs of hardware, software, labor, and any other expenses associated with implementing the optimization strategy.

- Estimate Benefits: This includes the expected cost savings, performance improvements, and other benefits, such as increased revenue or improved customer satisfaction.

- Calculate Net Benefit: Subtract the total costs from the total benefits to determine the net benefit.

- Calculate Return on Investment (ROI): Divide the net benefit by the total costs to determine the ROI.

Consider this example:

A company is running a database workload on aging servers. Implementing an infrastructure upgrade to faster servers and optimizing the database code is being considered. The cost of the upgrade is $50,000. The estimated benefits are a 20% reduction in server costs ($10,000 per year), a 15% improvement in query performance (resulting in faster customer service), and a 10% reduction in energy consumption ($2,000 per year). The estimated benefits are calculated over a 3-year period.

Cost: $50,000

Benefits:

- Server Cost Savings: $10,000/year x 3 years = $30,000

- Energy Savings: $2,000/year x 3 years = $6,000

- Total Benefits: $36,000

Net Benefit: $36,000 – $50,000 = -$14,000 (Loss)

Return on Investment (ROI): -$14,000 / $50,000 = -28%

In this case, the ROI is negative, indicating that the optimization strategy may not be financially viable based on the initial estimations.

However, if the improved customer satisfaction from faster service, and any revenue increase that results from it, were quantified and included, the project’s overall value could be higher. The cost-benefit analysis can be adjusted as more data becomes available.
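The cost-benefit arithmetic above can be reproduced with a small helper, which also answers the follow-up question the example raises: how much additional annual value (for instance, from faster customer service) would the project need to break even? The function names are illustrative, and the model deliberately ignores discounting and the timing of cash flows.

```python
def cost_benefit(cost, annual_benefits, years):
    """Total benefits, net benefit, and simple (undiscounted) ROI."""
    total_benefits = sum(annual_benefits.values()) * years
    net_benefit = total_benefits - cost
    roi = net_benefit / cost
    return total_benefits, net_benefit, roi


def break_even_annual_gap(cost, annual_benefits, years):
    """Extra value per year needed for the net benefit to reach zero."""
    _, net_benefit, _ = cost_benefit(cost, annual_benefits, years)
    return max(0.0, -net_benefit / years)


# Figures from the database upgrade example above.
benefits = {"server_costs": 10_000, "energy": 2_000}
total, net, roi = cost_benefit(50_000, benefits, years=3)
print(total, net, f"{roi:.0%}")  # 36000 -14000 -28%
print(round(break_even_annual_gap(50_000, benefits, years=3), 2))  # 4666.67
```

In other words, if the faster query performance is worth roughly $4,667 or more per year in retained revenue or support savings, the upgrade breaks even over the three-year period.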

The Role of Automation in Enhancing Workload Value

Automation plays a pivotal role in maximizing the business value of workloads. By streamlining processes, reducing manual intervention, and optimizing resource allocation, automation significantly contributes to improved efficiency, cost savings, and overall business agility. This section explores how automation enhances workload value and provides practical examples of its implementation.

Improving Workload Efficiency and Reducing Operational Costs

Automation directly impacts workload efficiency and operational costs. It replaces repetitive manual tasks with automated processes, leading to faster execution, reduced errors, and minimized human intervention. This shift frees up IT staff to focus on strategic initiatives rather than routine maintenance, thus increasing overall productivity.

- Faster Deployment and Scaling: Automated deployment tools, such as those using Infrastructure as Code (IaC), allow for rapid provisioning of resources. This accelerates the deployment of new workloads and enables quick scaling up or down based on demand. For example, companies like Netflix use automated scaling to handle peak viewing times, ensuring optimal performance without requiring manual intervention.

- Reduced Error Rates: Automation minimizes human errors, which can lead to costly downtime and data breaches. Automated testing and validation processes ensure that workloads are deployed and configured correctly, reducing the likelihood of errors.

- Optimized Resource Utilization: Automation tools can dynamically adjust resource allocation based on workload demands. This prevents over-provisioning, which wastes money, and under-provisioning, which degrades performance. For instance, cloud providers use automated resource management to optimize the utilization of virtual machines and storage.

- Proactive Monitoring and Remediation: Automated monitoring systems can detect and automatically remediate issues before they impact users. This proactive approach reduces downtime and improves service availability. Tools like Prometheus and Grafana, when integrated with automation, can provide real-time insights and automated responses to performance anomalies.

- Lower Labor Costs: By automating routine tasks, businesses can reduce the need for manual labor, leading to lower operational costs. Automation can handle tasks like patching, backups, and routine maintenance, freeing up IT staff to focus on more strategic projects.
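Proactive remediation usually needs a guard against reacting to a single noisy sample. The sketch below fires a remediation action only when a metric stays above a threshold for several consecutive samples, similar in spirit to the `for` duration in Prometheus alerting rules. The `RemediationRule` class, the threshold values, and the `restart_service` action are hypothetical; in a real system the action would call an orchestrator or runbook automation tool.

```python
from collections import deque


class RemediationRule:
    """Run an action when a metric exceeds a threshold N samples in a row."""

    def __init__(self, threshold, consecutive, action):
        self.threshold = threshold
        self.recent = deque(maxlen=consecutive)  # last N breach flags
        self.action = action

    def observe(self, value):
        self.recent.append(value > self.threshold)
        if len(self.recent) == self.recent.maxlen and all(self.recent):
            self.recent.clear()  # reset so the action does not fire on every sample
            return self.action()
        return None


actions_taken = []


def restart_service():
    """Hypothetical remediation; a real one would call an orchestrator."""
    actions_taken.append("restart")
    return "restarted"


rule = RemediationRule(threshold=0.9, consecutive=3, action=restart_service)
cpu_samples = [0.95, 0.97, 0.50, 0.93, 0.96, 0.99]  # hypothetical utilization readings
results = [rule.observe(v) for v in cpu_samples]
print(results)  # [None, None, None, None, None, 'restarted']
```

The dip to 0.50 resets the streak, so the first two high readings do not trigger a restart; only the later run of three does.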

Identifying Areas Where Automation Provides the Most Significant Business Value

Certain areas within workload management benefit most from automation. Focusing on these areas can yield the greatest return on investment (ROI) in terms of efficiency gains and cost reductions.

- Workload Deployment: Automating the deployment process ensures consistency, reduces errors, and accelerates time-to-market. Tools like Ansible, Chef, and Puppet are widely used for automating deployment tasks.

- Configuration Management: Automating configuration tasks ensures consistency across all systems and reduces the risk of misconfigurations. IaC tools, such as Terraform, are essential for managing infrastructure configurations.

- Monitoring and Alerting: Automated monitoring systems provide real-time insights into workload performance and automatically trigger alerts when issues arise. This allows for rapid response and minimizes downtime.

- Scaling and Resource Management: Automating scaling allows workloads to automatically adjust to changing demands, ensuring optimal performance and resource utilization. Cloud providers offer auto-scaling features that can be configured based on various metrics.

- Backup and Recovery: Automated backup and recovery processes ensure data integrity and business continuity. Automated backup schedules and recovery procedures reduce the risk of data loss and minimize downtime in case of failures.

- Security and Compliance: Automating security tasks, such as vulnerability scanning and patching, helps maintain a secure environment and ensures compliance with regulatory requirements.

Creating a Process Flow Diagram Illustrating Automated Workload Deployment

The process flow diagram illustrates the steps involved in an automated workload deployment, highlighting the key stages and the role of automation in each stage.

Diagram Description:

The diagram begins with a “Request” stage, where a user or system initiates a workload deployment request. This triggers the process flow.

1. Requirements Gathering and Planning: The system gathers information about the workload requirements, including the desired infrastructure, software, and configuration settings. This stage involves automated processes to define the scope and specifications of the workload.

2. Infrastructure Provisioning: Using Infrastructure as Code (IaC) tools (e.g., Terraform, Ansible), the system automatically provisions the necessary infrastructure resources, such as virtual machines, storage, and network configurations. This stage eliminates manual setup and ensures consistency.

3. Software Installation and Configuration: The system automatically installs and configures the required software components, such as operating systems, middleware, and applications. Configuration management tools (e.g., Ansible, Chef, Puppet) ensure consistent configurations across all systems.

4. Testing and Validation: Automated testing tools and processes validate the deployment to ensure that the workload is functioning correctly and meets the defined requirements. This includes unit tests, integration tests, and performance tests.

5. Deployment and Release: Once the testing is successful, the workload is deployed to the production environment. The automated release process includes steps to migrate data, update DNS records, and notify users of the new deployment.

6. Monitoring and Management: Automated monitoring systems continuously monitor the workload’s performance and health. The system automatically triggers alerts and responses to issues, ensuring that the workload remains operational and optimized.

The diagram is structured as a sequential flow in which each step is automated. This ensures efficiency, reduces errors, and accelerates the deployment process.
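The sequential flow can be sketched as a pipeline runner that executes each stage in order and halts at the first failure, so a workload that fails testing is never released. Each stage function below is a hypothetical placeholder that only records its effect in a shared context dictionary; in practice these stages would invoke tools such as Terraform, Ansible, or a test suite.

```python
from typing import Callable, Dict, List, Tuple

Stage = Tuple[str, Callable[[dict], bool]]


def run_pipeline(stages: List[Stage], context: dict) -> Dict:
    """Run stages in order and stop at the first one that reports failure."""
    completed = []
    for name, step in stages:
        if not step(context):
            return {"status": "failed", "failed_stage": name, "completed": completed}
        completed.append(name)
    return {"status": "deployed", "failed_stage": None, "completed": completed}


# Hypothetical placeholder stages; each records its effect in the shared context.
def gather_requirements(ctx):
    ctx["spec"] = {"vm_count": 2}
    return True


def provision_infrastructure(ctx):
    ctx["vms"] = [f"vm-{i}" for i in range(ctx["spec"]["vm_count"])]
    return True


def configure_software(ctx):
    ctx["configured"] = True
    return True


def run_tests(ctx):
    return ctx.get("configured", False) and len(ctx["vms"]) == ctx["spec"]["vm_count"]


def release(ctx):
    ctx["released"] = True
    return True


def start_monitoring(ctx):
    return ctx.get("released", False)


STAGES = [
    ("requirements", gather_requirements),
    ("provisioning", provision_infrastructure),
    ("configuration", configure_software),
    ("testing", run_tests),
    ("release", release),
    ("monitoring", start_monitoring),
]

print(run_pipeline(STAGES, {})["status"])  # deployed
```

Returning the list of completed stages on failure tells operators exactly which resources were already provisioned and may need cleanup.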

Workload Security and its Contribution to Business Value

Securing workloads is not merely a technical requirement; it’s a critical business imperative. Robust workload security directly impacts a company’s financial stability, brand reputation, and customer relationships. Ignoring this aspect can lead to severe consequences, undermining all the other efforts to optimize a workload’s value. This section explores how prioritizing security protects business assets and enhances overall business value.

Protecting Business Assets and Maintaining Customer Trust

Protecting business assets and maintaining customer trust is crucial for sustainable business operations. Security measures safeguard sensitive data, intellectual property, and critical infrastructure. This protection allows businesses to operate without the fear of significant disruptions or legal liabilities.

- Data Protection: Workload security safeguards sensitive customer data, financial records, and proprietary information. Data breaches can result in substantial fines under regulations like GDPR or CCPA, along with significant legal costs.

- Operational Continuity: Security measures, such as disaster recovery and incident response plans, ensure business operations can continue even during cyberattacks or system failures. For example, in 2021, the Colonial Pipeline ransomware attack caused significant fuel shortages and economic disruption, highlighting the importance of operational resilience.

- Intellectual Property Protection: Workload security protects valuable intellectual property, including patents, trade secrets, and software code. This helps maintain a competitive advantage and prevents financial losses due to theft or unauthorized use.

- Reputation Management: A strong security posture builds customer trust. Companies that demonstrate a commitment to data protection and cybersecurity are perceived as more reliable and trustworthy. A 2023 survey by IBM found that 60% of consumers would stop doing business with a company if it experienced a data breach.

Financial and Reputational Risks Associated with Workload Security Breaches

Workload security breaches expose businesses to significant financial and reputational risks. The consequences of a security incident can be devastating, impacting a company’s bottom line and its standing in the market.

- Financial Losses: Data breaches and cyberattacks can result in substantial financial losses. These losses include the cost of incident response, legal fees, regulatory fines, and the expense of providing credit monitoring services to affected customers. For example, the average cost of a data breach in 2023 was $4.45 million, according to IBM.

- Operational Disruptions: A security breach can lead to significant operational disruptions, causing downtime and impacting productivity. The cost of downtime can be substantial, especially for businesses that rely on real-time data processing or online transactions.

- Legal and Regulatory Penalties: Companies that fail to comply with data protection regulations face significant legal and regulatory penalties. Fines can be substantial, and companies may also face lawsuits from affected customers.

- Reputational Damage: A security breach can severely damage a company’s reputation, leading to a loss of customer trust and a decline in brand value. Negative publicity and loss of customer confidence can significantly impact revenue and market share.

- Loss of Competitive Advantage: A security breach can expose sensitive information, such as trade secrets and customer data, to competitors. This can result in a loss of competitive advantage and a decline in market share.

Security Checklist for Common Workload Types

Designing a security checklist tailored to specific workload types is a practical approach to ensure comprehensive protection. Different workloads have unique security requirements, and a customized checklist can help businesses address potential vulnerabilities effectively.

Web Applications:

- Input Validation: Implement thorough input validation to prevent injection attacks (e.g., SQL injection, cross-site scripting).

- Authentication and Authorization: Use strong authentication methods (e.g., multi-factor authentication) and robust authorization mechanisms to control user access.

- Regular Security Audits: Conduct regular security audits and penetration testing to identify and address vulnerabilities.

- Web Application Firewall (WAF): Deploy a WAF to filter malicious traffic and protect against common web application attacks.

- Secure Coding Practices: Enforce secure coding practices and conduct code reviews to prevent vulnerabilities.
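The input-validation item is easiest to see with SQL injection. This sketch uses Python's built-in `sqlite3` module to show a parameterized query, where user input is bound as data instead of being spliced into the SQL string. The `users` table and the `find_user` helper are hypothetical. The `?` placeholder is sqlite3's style, and other drivers may use a different one, such as `%s`.

```python
import sqlite3


def find_user(conn: sqlite3.Connection, username: str) -> list[tuple]:
    # The ? placeholder makes the driver bind `username` as a value, so input such as
    # "alice' OR '1'='1" is compared literally instead of being executed as SQL.
    cur = conn.execute("SELECT id, username FROM users WHERE username = ?", (username,))
    return cur.fetchall()


# In-memory database with a hypothetical users table for demonstration.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, username TEXT NOT NULL)")
conn.executemany("INSERT INTO users (username) VALUES (?)", [("alice",), ("bob",)])

print(find_user(conn, "alice"))             # [(1, 'alice')]
print(find_user(conn, "alice' OR '1'='1"))  # [] because the injection attempt matches no row
```

Parameterization complements input validation but does not replace it. Checking lengths and formats still catches malformed input before it reaches the database.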

Database Servers:

- Strong Access Controls: Implement strong access controls, including least privilege principles, to restrict database access to authorized users.

- Data Encryption: Encrypt sensitive data both at rest and in transit to protect against unauthorized access.

- Regular Backups: Implement regular backups and disaster recovery plans to ensure data availability and business continuity.

- Database Auditing: Enable database auditing to monitor user activity and detect suspicious behavior.

- Security Patching: Apply security patches promptly to address known vulnerabilities.
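Least-privilege access control and database auditing can be illustrated together. The sketch below denies every operation that a role has not been explicitly granted, and it records every decision in an audit log that can be scanned for suspicious patterns. The role names, permission sets, and the denial threshold are hypothetical. In practice these rules live in the database's own `GRANT` system and audit facilities, not in application code.

```python
from datetime import datetime, timezone

# Hypothetical role-to-permission mapping: each role gets only the operations it needs.
ROLE_PERMISSIONS = {
    "reporting": {"SELECT"},
    "app_service": {"SELECT", "INSERT", "UPDATE"},
    "dba": {"SELECT", "INSERT", "UPDATE", "DELETE", "ALTER"},
}

audit_log: list[dict] = []


def authorize(user: str, role: str, operation: str) -> bool:
    """Deny by default; allow only operations granted to the role, and audit every decision."""
    op = operation.upper()
    allowed = op in ROLE_PERMISSIONS.get(role, set())
    audit_log.append({
        "time": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "role": role,
        "operation": op,
        "allowed": allowed,
    })
    return allowed


def suspicious_users(log: list[dict], max_denials: int = 3) -> set[str]:
    """Flag users whose denied attempts reach a (hypothetical) threshold."""
    denials: dict[str, int] = {}
    for entry in log:
        if not entry["allowed"]:
            denials[entry["user"]] = denials.get(entry["user"], 0) + 1
    return {user for user, count in denials.items() if count >= max_denials}
```

For example, `authorize("carol", "reporting", "SELECT")` is allowed and `authorize("carol", "reporting", "DELETE")` is denied. Both calls are logged, so repeated denials for one account become visible in the audit trail.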

Virtual Machines (VMs):

- VM Isolation: Isolate VMs to prevent lateral movement in case of a security breach.

- Security Hardening: Harden VMs by configuring security settings, disabling unnecessary services, and applying security patches.

- Regular Monitoring: Implement regular monitoring and logging to detect and respond to security incidents.

- Image Security: Secure VM images to ensure they are free from malware and vulnerabilities.

- Network Segmentation: Segment the network to limit the impact of a security breach.
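Network segmentation limits the spread of a breach because traffic between segments is denied unless a flow is explicitly allowed. This sketch models that rule with Python's `ipaddress` module. The subnets, segment names, and permitted flows are hypothetical. Real policies are enforced by firewalls, security groups, or network security groups, not by a script.

```python
import ipaddress
from typing import Optional

# Hypothetical segments for a three-tier workload.
SEGMENTS = {
    "web": ipaddress.ip_network("10.0.1.0/24"),
    "app": ipaddress.ip_network("10.0.2.0/24"),
    "db": ipaddress.ip_network("10.0.3.0/24"),
}

# Only these (source segment, destination segment, port) flows are permitted.
ALLOWED_FLOWS = {
    ("web", "app", 8080),
    ("app", "db", 5432),
}


def segment_of(ip: str) -> Optional[str]:
    """Return the name of the segment containing `ip`, or None if it is outside all segments."""
    addr = ipaddress.ip_address(ip)
    for name, net in SEGMENTS.items():
        if addr in net:
            return name
    return None


def is_allowed(src_ip: str, dst_ip: str, port: int) -> bool:
    """Deny by default: a flow passes only if its (src, dst, port) triple is explicitly allowed."""
    src, dst = segment_of(src_ip), segment_of(dst_ip)
    if src is None or dst is None:
        return False
    return (src, dst, port) in ALLOWED_FLOWS


print(is_allowed("10.0.1.15", "10.0.2.20", 8080))  # web -> app: allowed
print(is_allowed("10.0.1.15", "10.0.3.10", 5432))  # web -> db: blocked
```

A compromised web VM in this model cannot reach the database directly. An attacker would first have to pivot through the application tier, which is exactly the lateral movement that segmentation is meant to slow down.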

Workload Scalability and Business Growth

Workload scalability is a critical factor in determining a business’s capacity to grow and adapt to market changes. It refers to the ability of a workload to handle increasing demands without compromising performance or incurring significant costs. Successfully scaling workloads enables businesses to capitalize on opportunities, maintain a competitive edge, and ensure customer satisfaction.

Importance of Scalability in Supporting Business Growth

The ability to scale workloads is paramount for businesses aiming for expansion. Without proper scalability, businesses can encounter significant bottlenecks that hinder growth, such as system crashes, performance degradation, and ultimately, lost revenue. Scalable workloads provide a foundation for businesses to handle fluctuations in demand, explore new markets, and innovate without being constrained by technological limitations.

Examples of Scalable Workloads Handling Increased Demand

Many businesses have demonstrated the power of scalable workloads in managing surges in demand. The ability to adapt to user traffic and resource requirements is crucial in these scenarios.

- E-commerce Platforms: During peak shopping seasons, such as Black Friday or Cyber Monday, e-commerce platforms experience a dramatic increase in traffic. Scalable workloads, utilizing technologies like cloud computing and load balancing, can dynamically allocate resources to handle this surge. This ensures that customers can browse products, make purchases, and complete transactions without experiencing delays or service interruptions. For instance, Amazon, a leader in e-commerce, relies heavily on scalable infrastructure to manage the massive influx of users during its peak sales events.

- Online Gaming: Online games often experience spikes in player activity, especially after new game releases or during special events. Scalable workload management is vital to ensure that servers can handle the increased number of players, maintain smooth gameplay, and prevent lag or downtime. Game developers use scalable architectures, including cloud-based solutions, to dynamically provision server resources based on player demand.

- Streaming Services: Streaming services like Netflix and Spotify must handle substantial fluctuations in user traffic. During primetime hours or when popular content is released, these services experience a significant surge in users. Scalable workloads are essential for delivering content without buffering or performance issues. These services utilize content delivery networks (CDNs) and cloud infrastructure to scale resources on demand, ensuring a seamless user experience.
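Dynamic resource allocation in these examples often relies on a target-tracking rule: if utilization is above the target, add capacity in proportion, and if it is below, remove capacity. This sketch is modeled on the proportional formula documented for the Kubernetes Horizontal Pod Autoscaler (desired = ceil(current × observed / target)). The minimum and maximum bounds, the tolerance, and the sample numbers are hypothetical.

```python
import math


def desired_replicas(current_replicas: int, current_util: float, target_util: float,
                     min_replicas: int = 2, max_replicas: int = 50,
                     tolerance: float = 0.1) -> int:
    """Proportional target-tracking: scale replicas by observed/target utilization."""
    ratio = current_util / target_util
    # Ignore small deviations so the replica count does not flap around the target.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Clamp to configured bounds to protect availability and cap spend.
    return max(min_replicas, min(max_replicas, desired))


# A traffic surge pushes CPU to 90% against a 60% target on 4 replicas.
print(desired_replicas(4, 90, 60))
```

The upper bound matters as much as the formula. Without a cap, a traffic spike, or a bug that inflates the metric, could scale spending without limit.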

Impact of Scalability on Business Agility and Market Responsiveness

Scalability directly affects a business’s agility and its ability to respond quickly to market changes. Scalable workloads make it easier to adapt to shifting conditions, launch new products or services rapidly, and seize opportunities before competitors do.

- Faster Time to Market: Scalable workloads enable businesses to deploy new applications and services more quickly. By avoiding the need for lengthy infrastructure upgrades or capacity planning cycles, companies can accelerate their time to market. This agility is critical in fast-paced industries where being first to market can provide a significant competitive advantage.

- Enhanced Innovation: Scalable infrastructure allows businesses to experiment with new technologies and features without fear of overwhelming their existing systems. This fosters innovation and encourages companies to explore new business models and revenue streams.

- Improved Customer Experience: Scalable workloads ensure that systems can handle peak loads, delivering consistent performance and preventing service disruptions. This translates to a better customer experience, leading to increased customer satisfaction, loyalty, and positive word-of-mouth referrals.

- Cost Efficiency: Scalable cloud-based infrastructure, in particular, offers significant cost efficiencies. Businesses can pay only for the resources they use, eliminating the need for large upfront investments in hardware and software. This pay-as-you-go model allows businesses to optimize their IT spending and reduce operational costs.

Cost Management of Workloads and Business Value

Managing workload costs effectively is crucial for maximizing business value. By understanding the various cost components and implementing optimization strategies, organizations can improve profitability, allocate resources efficiently, and gain a competitive advantage. This section explores the key aspects of cost management in the context of workloads.

Cost Components of Workloads

Understanding the different cost components associated with running workloads is essential for effective cost management. These components can be broadly categorized as follows:

- Infrastructure Costs: These costs relate to the underlying hardware and resources required to run the workload. They include expenses such as servers, storage, networking equipment, and associated power and cooling. The specific costs vary depending on whether the workload is hosted on-premises, in a colocation facility, or in the cloud.

- Licensing Costs: Software licenses represent a significant expense for many workloads. This includes operating systems, database management systems, application servers, and other software required for the workload to function. Licensing models vary, including perpetual licenses, subscription-based models, and pay-as-you-go options.

- Operational Expenses (OpEx): OpEx encompasses the ongoing costs associated with managing and maintaining the workload. This includes salaries for IT staff, monitoring tools, patching and updates, security measures, and data backup and recovery. These expenses are critical for ensuring the availability, performance, and security of the workload.

- Data Transfer Costs: For workloads that involve significant data transfer, especially in cloud environments, data transfer costs can be substantial. This includes the cost of transferring data into and out of the cloud, as well as data transfer between different regions or availability zones.

- Support and Maintenance Costs: Support and maintenance costs cover vendor support contracts, hardware maintenance, and other services required to keep the workload running smoothly. These costs can vary depending on the complexity of the workload and the level of support required.
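A simple way to act on this breakdown is to total the components and rank them by share, so optimization effort goes to the largest driver first. The sketch below does that. All amounts in the example are hypothetical monthly figures, not benchmarks.

```python
from dataclasses import asdict, dataclass


@dataclass
class MonthlyWorkloadCost:
    # Hypothetical monthly amounts in USD, one field per cost component above.
    infrastructure: float
    licensing: float
    operations: float
    data_transfer: float
    support: float

    def total(self) -> float:
        return sum(asdict(self).values())

    def shares(self) -> dict[str, float]:
        """Each component's fraction of the total, largest first."""
        total = self.total()
        parts = {name: amount / total for name, amount in asdict(self).items()}
        return dict(sorted(parts.items(), key=lambda kv: kv[1], reverse=True))


cost = MonthlyWorkloadCost(infrastructure=4_000, licensing=1_500, operations=3_000,
                           data_transfer=1_000, support=500)
print(cost.total())
for name, share in cost.shares().items():
    print(f"{name}: {share:.0%}")
```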

Strategies for Optimizing Workload Costs

Optimizing workload costs requires a multi-faceted approach that balances cost reduction with performance and security. Several strategies can be employed:

- Right-sizing Resources: Ensure that the allocated resources (CPU, memory, storage) are appropriate for the workload’s needs. Over-provisioning leads to wasted resources and unnecessary costs, while under-provisioning can impact performance. Regularly monitor resource utilization and adjust accordingly.

- Cloud Cost Optimization Tools: Leverage cloud provider tools and third-party solutions to monitor and analyze cloud spending. These tools provide insights into cost drivers, identify optimization opportunities, and help set budgets and alerts.

- Automation: Automate tasks such as resource provisioning, scaling, and de-provisioning to reduce manual effort and minimize operational expenses. Automation can also help ensure resources are utilized efficiently.

- Reserved Instances and Committed Use Discounts: Cloud providers often offer discounts for committing to use resources for a specific period. Utilizing these options can significantly reduce costs for predictable workloads.

- Data Tiering and Storage Optimization: Implement data tiering strategies to move less frequently accessed data to lower-cost storage tiers. Optimize storage configurations and leverage data compression and deduplication techniques to reduce storage costs.

- Containerization and Serverless Computing: Containerization and serverless computing can optimize resource utilization and reduce costs. Containers package applications and their dependencies, making them portable and efficient. Serverless computing allows you to run code without managing servers, paying only for the actual compute time used.

- Regular Audits and Reviews: Conduct regular audits of workload costs to identify areas for improvement. Review resource utilization, licensing costs, and operational expenses to identify opportunities for optimization.
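The advice to reserve capacity "for predictable workloads" follows from simple arithmetic. A commitment bills every hour of the term at a discount, while on-demand bills only the hours actually used. A commitment therefore pays off only when the instance runs more than (1 − discount) of the time. The hourly rate and the 40% discount in the example are hypothetical. The 730-hour month is a common billing approximation.

```python
def monthly_costs(on_demand_hourly: float, discount: float, hours_used: float,
                  hours_in_month: float = 730.0) -> tuple[float, float]:
    """Return (on_demand_cost, committed_cost) for one instance over a month.

    On-demand bills only the hours used; a commitment bills every hour of the
    month at the discounted rate, whether or not the instance runs.
    """
    on_demand = on_demand_hourly * hours_used
    committed = on_demand_hourly * (1 - discount) * hours_in_month
    return on_demand, committed


def break_even_utilization(discount: float) -> float:
    """Fraction of the month an instance must run before a commitment is cheaper."""
    return 1 - discount


# Hypothetical $0.10/hour instance with a 40% commitment discount.
print(monthly_costs(0.10, 0.40, hours_used=730))  # runs 24/7: commitment wins
print(monthly_costs(0.10, 0.40, hours_used=300))  # runs ~41% of the time: on-demand wins
print(break_even_utilization(0.40))
```

This is also why right-sizing should come before committing: reserving an over-provisioned instance locks in the waste for the whole term.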

Cloud Provider Pricing Model Comparison

Different cloud providers offer varying pricing models for their services. Understanding these models is crucial for making informed decisions and optimizing costs. The following table provides a comparison of the pricing models offered by major cloud providers. Note that the pricing information is subject to change and is provided for illustrative purposes.

| Cloud Provider | Pricing Model | Key Features | Example Use Cases |
|---|---|---|---|
| Amazon Web Services (AWS) | Pay-as-you-go, Reserved Instances, Spot Instances, Savings Plans | Pay-as-you-go for compute, storage, and other services. Reserved Instances offer significant discounts for committed usage. Spot Instances provide deeply discounted prices for unused compute capacity. Savings Plans provide flexible pricing based on commitment. | Web applications, data processing, machine learning, disaster recovery. AWS offers a wide range of services and pricing options to suit diverse workloads. |
| Microsoft Azure | Pay-as-you-go, Reserved Instances, Spot VMs, Azure Hybrid Benefit | Pay-as-you-go pricing for compute, storage, and other services. Reserved Instances offer discounts for committed usage. Spot VMs provide discounted prices for unused compute capacity. Azure Hybrid Benefit allows customers to use existing on-premises Windows Server and SQL Server licenses to save on cloud costs. | Enterprise applications, virtual machines, database services, hybrid cloud deployments. Azure is well-suited for organizations with existing Microsoft infrastructure. |
| Google Cloud Platform (GCP) | Pay-as-you-go, Committed Use Discounts, Sustained Use Discounts, Preemptible VMs | Pay-as-you-go pricing for compute, storage, and other services. Committed Use Discounts offer significant savings for committed usage. Sustained Use Discounts automatically apply discounts for long-running virtual machines. Preemptible VMs offer deeply discounted prices for short-lived workloads. | Data analytics, machine learning, containerized applications, and web applications. GCP is known for its innovative technologies and competitive pricing. |
| Oracle Cloud Infrastructure (OCI) | Pay-as-you-go, Universal Credits, Bring Your Own License (BYOL) | Pay-as-you-go pricing for compute, storage, and other services. Universal Credits provide a flexible purchasing model. BYOL allows customers to bring their existing Oracle software licenses to the cloud. | Enterprise applications, database workloads, and high-performance computing. OCI offers competitive pricing and performance for Oracle-specific workloads. |

Disaster Recovery and Business Continuity for Workloads

Disaster recovery (DR) and business continuity (BC) are crucial components of a comprehensive workload strategy. They ensure that critical business operations can continue with minimal disruption in the face of unexpected events, such as natural disasters, cyberattacks, or system failures. Implementing robust DR and BC plans protects valuable data, maintains service availability, and ultimately safeguards the financial health and reputation of an organization.

The Role of Disaster Recovery and Business Continuity in Protecting Critical Workloads

Disaster recovery and business continuity work in tandem to protect workloads and minimize downtime and data loss. Disaster recovery focuses on restoring IT systems and data after a disruptive event, while business continuity encompasses the broader strategy of maintaining essential business functions during and after a disruption.

- Disaster Recovery (DR): The primary goal of DR is to restore IT infrastructure and data to a functional state after a disaster. This often involves replicating data and applications to a secondary site or cloud environment. DR plans define the steps, resources, and timelines required for recovery. Two key metrics are:

  - Recovery Time Objective (RTO): The maximum acceptable time allowed to restore a system or function after a failure.

  - Recovery Point Objective (RPO): The maximum acceptable amount of data loss, measured in time, that can occur during a disaster.

- Business Continuity (BC): BC focuses on maintaining critical business processes and operations during a disruption. This involves planning for various scenarios, including power outages, network failures, and personnel shortages. BC plans address how the business will continue to operate, even with limited resources.

- Relationship: DR is a subset of BC. While DR specifically addresses IT system recovery, BC encompasses a wider range of business functions and dependencies. A strong BC plan integrates DR strategies to ensure a holistic approach to resilience.
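RTO and RPO become useful when a concrete plan is checked against them. With periodic backups, a failure just before the next backup loses everything written since the previous one, so the worst-case data loss equals the backup interval. RTO compliance depends on how long a restore actually took in a DR test. This sketch encodes both checks. The 4-hour RTO, 1-hour RPO, and the schedules in the example are hypothetical.

```python
from dataclasses import dataclass
from datetime import timedelta


@dataclass
class RecoveryObjectives:
    rto: timedelta  # maximum acceptable time to restore the workload
    rpo: timedelta  # maximum acceptable data loss, measured in time


def worst_case_data_loss(backup_interval: timedelta) -> timedelta:
    # A failure just before the next scheduled backup loses a full interval of writes.
    return backup_interval


def meets_objectives(objectives: RecoveryObjectives, backup_interval: timedelta,
                     tested_restore_time: timedelta) -> dict[str, bool]:
    """Compare a backup schedule and a measured restore time against the RTO/RPO targets."""
    return {
        "rpo_met": worst_case_data_loss(backup_interval) <= objectives.rpo,
        "rto_met": tested_restore_time <= objectives.rto,
    }


targets = RecoveryObjectives(rto=timedelta(hours=4), rpo=timedelta(hours=1))
# Nightly backups with a 3-hour tested restore: fast enough, but far too much potential data loss.
print(meets_objectives(targets, timedelta(hours=24), timedelta(hours=3)))
```

Tightening the RPO usually means moving from periodic backups to continuous replication. The cost of that move is what the RPO target has to justify.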

Identifying the Business Value of Minimizing Downtime and Data Loss

Minimizing downtime and data loss translates directly into significant business value. It protects revenue streams, maintains customer trust, and avoids costly legal and reputational damage.

- Revenue Protection: Downtime can lead to significant revenue losses. For example, an e-commerce site experiencing an outage during peak shopping hours can lose thousands, or even millions, of dollars in sales.

- Customer Satisfaction and Retention: Consistent service availability builds customer loyalty. Frequent outages can damage customer trust, leading to churn and negative brand perception.

- Reduced Operational Costs: Downtime can incur significant operational costs, including:

  - Lost employee productivity.

  - Costs associated with recovery efforts (e.g., IT staff overtime, data recovery services).

  - Potential legal and regulatory penalties for data breaches or service disruptions.
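These cost categories can be combined into a rough outage estimate. An availability target also caps the downtime a business is planning for: 99.9% availability still allows about 8.76 hours of downtime per year (0.1% of 8,760 hours). In the sketch below, the revenue rate, headcount, wage, and one-off costs are hypothetical inputs. Penalties are folded into a single `one_off_costs` figure because they are too case-specific to model generically.

```python
HOURS_PER_YEAR = 365 * 24  # 8,760; ignores leap years


def annual_downtime_hours(availability: float) -> float:
    """Maximum yearly downtime permitted by an availability target such as 0.999."""
    return (1 - availability) * HOURS_PER_YEAR


def downtime_cost(hours: float, revenue_per_hour: float, idle_staff: int,
                  loaded_hourly_wage: float, one_off_costs: float = 0.0) -> float:
    """Rough outage cost: lost sales + lost productivity + recovery effort and penalties."""
    lost_revenue = hours * revenue_per_hour
    lost_productivity = hours * idle_staff * loaded_hourly_wage
    return lost_revenue + lost_productivity + one_off_costs


print(f"{annual_downtime_hours(0.999):.2f} h/year at 99.9%")
print(f"{annual_downtime_hours(0.9999):.3f} h/year at 99.99%")
# Hypothetical 2-hour outage: $50,000/h in sales, 100 idle staff at $60/h, $10,000 recovery.
print(downtime_cost(2, 50_000, 100, 60, one_off_costs=10_000))
```

Comparing an estimate like this with the price of a stronger DR setup gives a concrete basis for choosing availability and RTO targets.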

- Compliance and Reputation: In many industries, data protection and service availability are mandated by regulations. A failure to meet these requirements can result in substantial fines and reputational damage. Effective DR and BC plans demonstrate a commitment to compliance and business resilience.

Design a High-Level Disaster Recovery Plan for a Sample Workload

A high-level DR plan outlines the steps needed to restore a workload in the event of a disaster. The plan should be tailored to the specific needs and criticality of the workload. Let’s consider a sample workload: a web application for an online retail store.

- Assessment and Prioritization:

  - Identify the critical components of the web application: web servers, database servers, application servers, and load balancers.

  - Determine the RTO and RPO for each component based on business requirements. For example, the RTO for the database might be 4 hours, and the RPO might be 1 hour, to minimize data loss.

  - Assess the existing infrastructure and identify potential vulnerabilities.

- Recovery Site Selection:

  - Choose a suitable recovery site: a secondary data center, a cloud environment (e.g., AWS, Azure, Google Cloud), or a hybrid approach. The choice depends on factors like cost, RTO/RPO requirements, and existing infrastructure.

  - Consider the geographical distance between the primary and recovery sites to minimize the impact of regional disasters.

- Data Replication:

  - Implement a data replication strategy:

    - Synchronous replication: Each write is committed to both the primary and recovery sites before it is acknowledged. This offers the lowest RPO (near zero) but adds latency to every write.

    - Asynchronous replication: Data is written to the primary site first and replicated to the recovery site with a delay. This provides better performance, but writes that have not yet been replicated are lost if the primary fails.

  - Regularly test data replication to ensure its functionality.

- Failover and Failback Procedures:

  - Define the steps to fail over to the recovery site:

    - Identify the trigger for failover (e.g., a system failure, a network outage).

    - Document the manual and automated steps to switch traffic to the recovery site.

    - Include detailed instructions for each team involved (IT, database administrators, network engineers).

  - Establish procedures for failback to the primary site after the issue is resolved.

- Testing and Maintenance:

  - Regularly test the DR plan through simulated disaster scenarios.

  - Document test results and update the plan accordingly.

  - Maintain the recovery environment to ensure it is up-to-date and compatible with the production environment.

Example: An online retailer uses a cloud-based DR solution with asynchronous replication and a 2-hour RPO. They regularly test their failover procedures by simulating a server outage. In a real disaster, they can quickly switch to the recovery site, minimizing downtime and data loss. The DR plan is reviewed and updated every six months.
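The retailer's trade-off with asynchronous replication can be made concrete with a toy model. The primary acknowledges writes immediately and ships them to the recovery site in batches, so anything written after the last sync is lost if the primary fails. The timeline, the batch-style sync, and the order names below are hypothetical. Real asynchronous replication usually streams changes continuously, but the data-loss logic is the same.

```python
from datetime import datetime, timedelta


class AsyncReplication:
    """Toy model of asynchronous replication with periodic syncs to a recovery site."""

    def __init__(self, start: datetime) -> None:
        self.primary: list[tuple[datetime, str]] = []
        self.replica: list[tuple[datetime, str]] = []
        self.last_sync = start

    def write(self, ts: datetime, record: str) -> None:
        # Acknowledged immediately; the recovery site has not seen it yet.
        self.primary.append((ts, record))

    def replicate(self, at: datetime) -> None:
        # Ship every write made so far to the recovery site.
        self.replica = list(self.primary)
        self.last_sync = at

    def lost_on_failure(self) -> list[str]:
        """Writes that exist only on the primary and would be lost if it failed now."""
        return [record for _, record in self.primary[len(self.replica):]]

    def exposure(self, failure_time: datetime) -> timedelta:
        """Data-loss window: time elapsed since the last successful sync."""
        return failure_time - self.last_sync


def simulate() -> tuple[list[str], timedelta]:
    # Hypothetical timeline: an order every 30 minutes, one sync at 10:00, failure at 11:45.
    t0 = datetime(2024, 1, 1, 9, 0)
    repl = AsyncReplication(start=t0)
    for i in range(3):
        repl.write(t0 + timedelta(minutes=30 * i), f"order-{i + 1}")
    repl.replicate(at=t0 + timedelta(hours=1))
    for i in range(3, 6):
        repl.write(t0 + timedelta(minutes=30 * i), f"order-{i + 1}")
    return repl.lost_on_failure(), repl.exposure(t0 + timedelta(hours=2, minutes=45))


lost, window = simulate()
rpo = timedelta(hours=2)
print(lost, window, window <= rpo)
```

Three orders are lost, but the 1 hour 45 minute window stays inside the 2-hour RPO. If syncs stalled for longer than that, the same failure would breach the objective. This is why replication lag is worth monitoring alongside the failover tests.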

Emerging Trends in Workload Management and Business Implications

The landscape of workload management is in constant flux, driven by technological advancements and evolving business needs. Understanding these emerging trends is crucial for organizations seeking to maximize the value derived from their workloads. Embracing these shifts allows businesses to become more agile, efficient, and competitive in the digital age. This section explores key trends and their implications for business value.

Serverless Computing and Its Impact

Serverless computing represents a significant shift in how applications are deployed and managed. This model allows developers to build and run applications without managing servers, leading to increased agility and reduced operational overhead.

- Reduced Operational Costs: Serverless architectures often utilize a “pay-per-use” model, where organizations are charged only for the compute time consumed. This can significantly lower operational costs compared to traditional server-based environments, especially for workloads with variable demand.

- Enhanced Scalability and Agility: Serverless platforms automatically scale resources based on demand, enabling applications to handle peak loads seamlessly. This inherent scalability contributes to improved user experience and business responsiveness.

- Faster Development Cycles: Developers can focus on writing code without worrying about infrastructure provisioning or management. This focus on code allows for accelerated development cycles and faster time-to-market for new features and applications.

- Improved Resource Utilization: With serverless, resources are allocated only when code is executing. This leads to better resource utilization and reduces the risk of over-provisioning, a common problem in traditional environments.
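The pay-per-use advantage depends on traffic volume, and a quick comparison shows where it holds. The sketch is loosely modeled on the per-request plus GB-second billing used by functions platforms such as AWS Lambda. All rates are placeholder assumptions, not current list prices. The always-on side assumes two small VMs billed for a 730-hour month.

```python
# Placeholder rates for illustration only; check each provider's pricing page.
PRICE_PER_GB_SECOND = 0.00002
PRICE_PER_MILLION_REQUESTS = 0.20
VM_HOURLY_RATE = 0.05


def serverless_monthly_cost(invocations: int, avg_duration_s: float, memory_gb: float,
                            price_per_gb_second: float,
                            price_per_million_requests: float) -> float:
    """Pay-per-use: compute billed in GB-seconds plus a per-request charge."""
    compute = invocations * avg_duration_s * memory_gb * price_per_gb_second
    requests = invocations / 1_000_000 * price_per_million_requests
    return compute + requests


def always_on_monthly_cost(instances: int, hourly_rate: float, hours: float = 730.0) -> float:
    """Provisioned capacity: billed for every hour, whether or not it is busy."""
    return instances * hourly_rate * hours


low = serverless_monthly_cost(1_000_000, 0.2, 0.5, PRICE_PER_GB_SECOND, PRICE_PER_MILLION_REQUESTS)
high = serverless_monthly_cost(200_000_000, 0.2, 0.5, PRICE_PER_GB_SECOND, PRICE_PER_MILLION_REQUESTS)
vms = always_on_monthly_cost(2, VM_HOURLY_RATE)
print(f"1M calls: ${low:.2f}  200M calls: ${high:.2f}  two VMs: ${vms:.2f}")
```

Under these assumptions, serverless is far cheaper at low or bursty volume. Steady high volume can make provisioned capacity cheaper, which is consistent with the point that the savings are largest for workloads with variable demand.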

Containerization and its Implications

Containerization, using technologies like Docker and Kubernetes, has revolutionized application deployment and portability. Containers package applications and their dependencies into isolated units, making them easily deployable across different environments.

- Increased Application Portability: Containers encapsulate applications and their dependencies, making them portable across various infrastructure environments, including on-premises, cloud, and hybrid deployments.

- Improved Resource Efficiency: Containers share the host operating system’s kernel, leading to more efficient resource utilization compared to virtual machines, which require their own operating system instances.

- Simplified Deployment and Management: Container orchestration platforms, like Kubernetes, automate the deployment, scaling, and management of containerized applications, simplifying operations and reducing administrative overhead.

- Enhanced Application Isolation: Containers provide isolation, preventing applications from interfering with each other. This isolation enhances security and improves the stability of applications.

Edge Computing and Its Influence

Edge computing moves computation and data storage closer to the data source, enabling real-time processing and reduced latency. This is particularly important for applications requiring immediate responsiveness, such as IoT devices and autonomous vehicles.

- Reduced Latency: By processing data at the edge, applications can respond to events in real-time, minimizing the delay associated with transmitting data to a central data center or cloud.

- Improved Bandwidth Efficiency: Processing data locally reduces the amount of data that needs to be transmitted over the network, conserving bandwidth and reducing costs.

- Enhanced Data Security and Privacy: Processing sensitive data at the edge can help maintain data privacy and security by minimizing the need to transmit data to centralized locations.

- Increased Reliability: Edge devices can continue to operate even if the connection to the cloud is temporarily unavailable, ensuring the continuous operation of critical applications.
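Two of these benefits, bandwidth efficiency and reliability, can be sketched together. An edge device summarizes a window of raw sensor readings locally and sends only the summary. When the uplink is down, it buffers summaries and forwards them once the connection returns (store-and-forward). The sensor ID, the one-hour window, and the JSON payload format are hypothetical choices for illustration.

```python
import json
import statistics


def summarize(readings: list[float], sensor_id: str) -> dict:
    """Reduce a window of raw readings to a compact summary computed on the edge device."""
    return {
        "sensor": sensor_id,
        "count": len(readings),
        "min": min(readings),
        "max": max(readings),
        "mean": round(statistics.fmean(readings), 2),
    }


def payload_bytes(obj) -> int:
    """Size of the JSON payload that would be sent upstream."""
    return len(json.dumps(obj).encode("utf-8"))


class StoreAndForward:
    """Buffer summaries while the uplink is down; flush them all once it is back."""

    def __init__(self) -> None:
        self.pending: list[dict] = []

    def send(self, summary: dict, uplink_up: bool) -> list[dict]:
        self.pending.append(summary)
        if not uplink_up:
            return []  # keep operating locally; nothing is transmitted yet
        flushed, self.pending = self.pending, []
        return flushed


# One hypothetical reading per second for an hour.
readings = [20.0 + (i % 10) * 0.1 for i in range(3600)]
raw = [{"sensor": "t-01", "value": v} for v in readings]
summary = summarize(readings, "t-01")
print(payload_bytes(raw), "bytes raw vs", payload_bytes(summary), "bytes summarized")
```

Summarizing discards detail. Workloads that need raw data later, for example for model training, might keep it locally and upload it off-peak instead.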

The Evolution of Workload Management: An Illustrative Description

Imagine an illustration depicting the evolution of workload management. The image starts with a depiction of monolithic applications running on physical servers, representing the early stages. This scene is characterized by high resource consumption, manual provisioning, and limited scalability. Gradually, the image transforms to show virtual machines, demonstrating the advent of virtualization. This transition introduces improved resource utilization and simplified management.

The next stage reveals containers and microservices, signifying the shift towards modularity and portability. These components are depicted running on cloud platforms, showing the dynamic scaling and increased agility. Finally, the image culminates in a serverless architecture. Here, the infrastructure is almost invisible, with functions and events triggering automated resource allocation. The illustration highlights the progression from rigid, resource-intensive environments to dynamic, scalable, and cost-effective solutions, showcasing the ongoing journey of workload management towards greater efficiency and business value.

Conclusive Thoughts

In conclusion, the business value of a workload is multifaceted, encompassing performance, security, scalability, and cost-effectiveness. By strategically managing and optimizing these aspects, businesses can unlock significant advantages, including increased efficiency, reduced operational costs, and improved agility. Embracing emerging trends and continuously evaluating workload strategies will be key to maintaining a competitive edge in the evolving digital world.

Query Resolution

What is a workload in the context of IT?

A workload refers to the processing, computing, and data management tasks performed by a system. This encompasses everything from running a database to executing a web application or performing batch processing.

How does workload performance impact business value?

Improved workload performance, measured by metrics like response time and throughput, directly translates to enhanced user experience, increased efficiency, and potentially higher revenue. Conversely, poor performance can lead to lost customers and reduced productivity.

What are some key workload optimization strategies?

Workload optimization strategies include resource allocation, code optimization, infrastructure upgrades, and automation. These strategies aim to improve performance, reduce costs, and enhance the overall efficiency of the workload.

How does automation enhance workload value?

Automation improves workload value by increasing efficiency, reducing operational costs, and minimizing the potential for human error. Automated deployment, scaling, and management of workloads free up IT staff to focus on strategic initiatives.