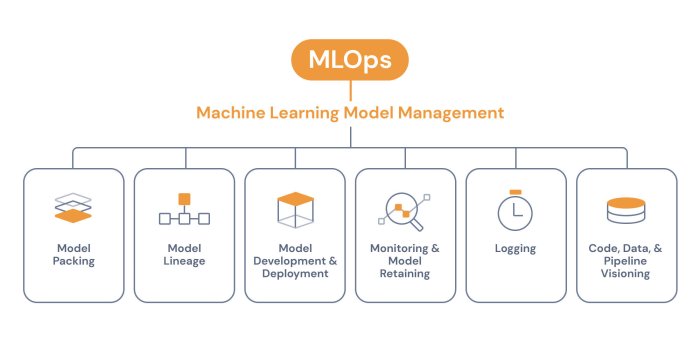

MLOps, a rapidly evolving field, bridges the gap between machine learning (ML) and IT operations (Ops). This approach streamlines the entire ML lifecycle, from model development to deployment and monitoring. Understanding MLOps is crucial for organizations seeking to leverage the power of ML effectively.

This exploration delves into the fundamental principles of MLOps, highlighting its key stages, associated tools, and the significant benefits it offers. We’ll also discuss the challenges inherent in implementing MLOps, and strategies for effective collaboration between data scientists and operations teams.

Defining MLOps

MLOps, a combination of machine learning (ML) and operations (Ops), is a set of practices and tools designed to streamline the entire machine learning lifecycle. It aims to bridge the gap between data scientists and engineers, ensuring that machine learning models can be deployed, monitored, and maintained reliably and efficiently. This facilitates the transition from model development to production-ready deployment, contributing significantly to the successful implementation of machine learning solutions.

The core concept behind MLOps lies in its ability to automate and manage the complete lifecycle of machine learning projects, from model training to deployment and monitoring. This approach contrasts with traditional software development methodologies, which often lack the specific mechanisms for handling the unique complexities of machine learning. MLOps emphasizes collaboration and communication between data scientists, engineers, and operations teams, leading to more effective and reliable deployments.

MLOps Definition

MLOps is a collection of practices and tools that integrates machine learning (ML) development with operational processes. Its primary purpose is to improve the efficiency and reliability of deploying, monitoring, and maintaining machine learning models in production. This unified approach ensures the models are consistently deployed and perform as expected.

Relationship between ML and Ops

Machine learning models, while powerful, often require significant operational support to function optimally in production. MLOps bridges this gap by providing the necessary infrastructure, tools, and processes for deploying, monitoring, and maintaining ML models. It ensures that models developed by data scientists are effectively integrated into the operational workflows of the organization. A good example of this is the continuous integration and continuous deployment (CI/CD) pipeline used in software development, which is adapted in MLOps to handle the unique needs of machine learning models.

Core Goals and Objectives of MLOps

MLOps implementations are aimed at several core goals and objectives. These include:

- Increased efficiency and speed of model deployment: Automation of tasks like model training, testing, and deployment reduces the time it takes to get models into production. This can lead to faster time to market and more frequent model updates.

- Improved model performance and reliability: Continuous monitoring and feedback mechanisms in MLOps allow for early detection of performance issues and enable timely adjustments, leading to more reliable and robust models.

- Enhanced collaboration and communication: MLOps fosters better communication and collaboration between data scientists, engineers, and operations teams, leading to a more cohesive and efficient workflow.

- Reduced operational costs: Automation of tasks and improved model management contribute to reduced operational costs, leading to significant savings over time.

Comparison: Traditional Software Development vs. MLOps

Traditional software development and MLOps methodologies differ significantly in their approaches to model development and deployment. The table below highlights these key differences:

| Feature | Traditional Software Development | MLOps | Key Difference |
|---|---|---|---|
| Model Development | Separate from deployment, often relying on manual processes. | Integrated with deployment and operations throughout the entire lifecycle. | MLOps integrates the entire model lifecycle, while traditional methods treat it separately. |
| Deployment | Typically a one-time event or infrequent updates. | Continuous deployment and monitoring, enabling frequent model updates and adjustments. | MLOps facilitates continuous deployment and model updates, while traditional methods are less frequent. |
| Monitoring and Maintenance | Limited or ad-hoc monitoring after deployment. | Continuous monitoring and feedback loops, allowing for real-time adjustments. | MLOps emphasizes continuous monitoring and feedback, while traditional methods lack these continuous loops. |
| Collaboration | Often siloed development teams. | Collaborative environment bringing together data scientists, engineers, and operations teams. | MLOps promotes collaboration between various teams, while traditional methods may be less collaborative. |

Core Principles of MLOps

MLOps, as a discipline, relies on a set of core principles to ensure the seamless and efficient deployment of machine learning models. These principles act as a guiding framework, ensuring the process is repeatable, reliable, and scalable. By adhering to these principles, organizations can streamline the entire machine learning lifecycle, from model development to deployment and monitoring.

The core principles of MLOps are not simply best practices; they are fundamental to achieving success in the field. They provide a structure for managing the complexities of machine learning projects, fostering collaboration, and enabling continuous improvement. Understanding and applying these principles is critical for successful model deployment and maintenance.

Version Control and Collaboration

Effective version control is crucial for managing the various components of a machine learning project. This includes code, data, models, and configurations. Version control systems facilitate collaboration among team members, enabling them to track changes, resolve conflicts, and revert to previous versions if necessary. A shared repository ensures everyone works from the same baseline, reducing errors and enhancing transparency.

Git, for example, is a widely used version control system that supports branching, merging, and tagging, crucial for managing different model versions and iterations. This ensures traceability, enabling the team to identify the exact changes and versions responsible for any specific model performance.
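One way to make this traceability concrete is to derive a deterministic ID from everything that went into a training run. The sketch below is only an illustration: the function name `run_fingerprint`, the commit string, and the sample data are hypothetical, and real projects often rely on a dedicated data or model versioning tool instead.

```python
import hashlib
import json


def run_fingerprint(code_commit: str, data: bytes, params: dict) -> str:
    """Return a short, deterministic ID for one training run.

    The ID changes whenever the code commit, the training data, or the
    hyperparameters change, so a model tagged with it can be traced back
    to the exact inputs that produced it.
    """
    digest = hashlib.sha256()
    # Hash each part's own digest so that part boundaries cannot blur together.
    for part in (
        code_commit.encode("utf-8"),
        data,
        json.dumps(params, sort_keys=True).encode("utf-8"),
    ):
        digest.update(hashlib.sha256(part).digest())
    return digest.hexdigest()[:12]


# Hypothetical values, for illustration only.
SAMPLE_DATA = b"age,churned\n34,0\n51,1\n"
baseline = run_fingerprint("3f2a9c1", SAMPLE_DATA, {"lr": 0.1, "depth": 4})
retuned = run_fingerprint("3f2a9c1", SAMPLE_DATA, {"lr": 0.05, "depth": 4})
```

Here `baseline` and `retuned` differ because one hyperparameter changed, while rerunning with identical inputs reproduces the same ID. Such an ID could be stored as a Git tag or alongside the model artifact.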

Automated Pipelines

Automated pipelines are essential for automating the entire machine learning lifecycle, from data preparation to model deployment. These pipelines automate tasks such as data ingestion, preprocessing, model training, evaluation, and deployment. Automated pipelines not only improve efficiency but also reduce the risk of human error. By automating the process, teams can focus on more strategic tasks, and the entire process becomes more reliable and repeatable.

A well-designed pipeline ensures consistent results, regardless of the person running the tasks. This reliability is crucial for production deployments where consistency is paramount.
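As a rough sketch of the idea, a pipeline can be modeled as an ordered list of named stages that pass a shared context along. Everything here is a toy assumption: the stage functions, the in-memory data, and the "model" (a simple mean) stand in for real ingestion, preprocessing, training, and evaluation steps.

```python
from typing import Callable, List, Optional, Tuple

Stage = Tuple[str, Callable[[dict], dict]]


def ingest(ctx: dict) -> dict:
    # Hypothetical in-memory source standing in for a real ingestion step.
    ctx["raw"] = [1.0, 2.0, 3.0, 4.0]
    return ctx


def preprocess(ctx: dict) -> dict:
    # Scale values relative to the maximum.
    largest = max(ctx["raw"])
    ctx["features"] = [x / largest for x in ctx["raw"]]
    return ctx


def train(ctx: dict) -> dict:
    # Toy "model": predict the mean of the features.
    ctx["model"] = sum(ctx["features"]) / len(ctx["features"])
    return ctx


def evaluate(ctx: dict) -> dict:
    # Mean absolute error of the constant prediction.
    errors = [abs(x - ctx["model"]) for x in ctx["features"]]
    ctx["mae"] = sum(errors) / len(errors)
    return ctx


def run_pipeline(stages: List[Stage], ctx: Optional[dict] = None) -> dict:
    """Run every stage in order and record which stages completed."""
    ctx = {} if ctx is None else ctx
    ctx["completed"] = []
    for name, stage in stages:
        ctx = stage(ctx)
        ctx["completed"].append(name)
    return ctx


PIPELINE: List[Stage] = [
    ("ingest", ingest),
    ("preprocess", preprocess),
    ("train", train),
    ("evaluate", evaluate),
]
result = run_pipeline(PIPELINE)
```

Because the stage order lives in data rather than in someone's head, the same sequence runs identically no matter who triggers it.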

Monitoring and Feedback Loops

Continuous monitoring of deployed models is vital for ensuring performance and identifying potential issues. Monitoring tools track metrics such as accuracy, latency, and resource utilization. Feedback loops allow for the continuous improvement of models based on real-time data and user feedback. This ensures models remain relevant and accurate in dynamic environments. Real-time feedback is essential for adaptive models, enabling them to continuously learn and improve their performance as data evolves.

Alerting systems, triggered by predefined thresholds, are key to identifying and addressing model performance issues promptly.
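A threshold-based alert check might look like the following minimal sketch. The metric names and limits are assumptions chosen for illustration; a production setup would typically delegate this to a monitoring system rather than hand-rolled code.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Threshold:
    metric: str
    limit: float
    direction: str  # "min": alert when below limit; "max": alert when above


def check_thresholds(metrics: Dict[str, float], thresholds: List[Threshold]) -> List[str]:
    """Return one alert message per violated (or missing) metric."""
    alerts = []
    for t in thresholds:
        value = metrics.get(t.metric)
        if value is None:
            alerts.append(f"{t.metric}: no value reported")
        elif t.direction == "min" and value < t.limit:
            alerts.append(f"{t.metric}={value} is below the minimum {t.limit}")
        elif t.direction == "max" and value > t.limit:
            alerts.append(f"{t.metric}={value} is above the maximum {t.limit}")
    return alerts


# Hypothetical thresholds and a sample reading.
THRESHOLDS = [
    Threshold("accuracy", 0.90, "min"),
    Threshold("latency_ms", 200.0, "max"),
]
alerts = check_thresholds({"accuracy": 0.88, "latency_ms": 120.0}, THRESHOLDS)
```

In this sample reading only the accuracy threshold is violated, so `alerts` holds a single message.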

Continuous Integration and Continuous Delivery (CI/CD)

CI/CD pipelines automate the software development process, ensuring that changes are integrated and deployed frequently and reliably. In MLOps, CI/CD pipelines are crucial for deploying machine learning models in a controlled and repeatable manner. This allows for rapid iteration and deployment of new models, enabling organizations to adapt to changing business needs. CI/CD pipelines also promote consistency, reducing the risk of errors and ensuring models are deployed effectively.

This process ensures that models are deployed in a structured and controlled manner, improving the overall stability and reliability of the machine learning infrastructure.

Table: Comparison of MLOps Tools and Platforms

| Tool/Platform | Version Control | Automated Pipelines | Monitoring & Feedback |
|---|---|---|---|
| MLflow | Integrates with Git | Supports experiment tracking and model deployment | Provides logging and tracking of metrics |
| Kubeflow | Integrates with Git | Orchestrates containerized ML workflows | Provides dashboards and monitoring tools |
| Domino Data Lab | Integrates with Git | Automated model training and deployment pipelines | Real-time model performance monitoring |
| Amazon SageMaker | Integrates with Git | Automated model training and deployment pipelines | Provides monitoring and logging features |

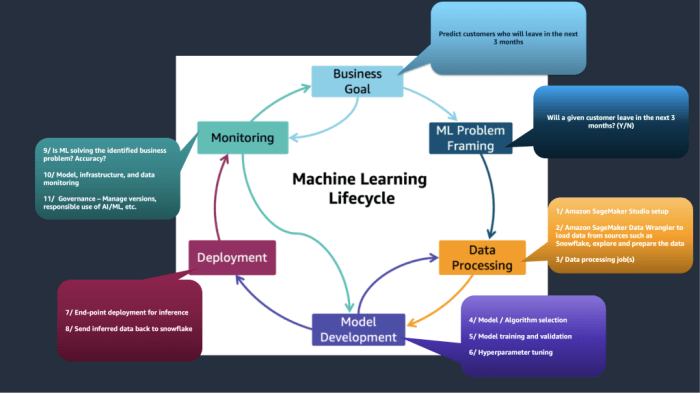

MLOps Lifecycle Stages

The MLOps lifecycle is a crucial aspect of successfully deploying and managing machine learning models. It encompasses a structured approach to the entire model development, deployment, and maintenance process. Understanding and effectively managing each stage of this lifecycle is essential for achieving efficient and reliable model delivery.

The MLOps lifecycle stages provide a framework for managing the entire machine learning pipeline, from initial data preparation to model monitoring and retraining. Each stage plays a critical role in ensuring the smooth operation and continuous improvement of machine learning models in production. Efficiently navigating these stages is key to successful model deployment and maintenance.

Data Preparation and Collection

Data quality is paramount in machine learning. Data preparation and collection involve identifying, acquiring, cleaning, and transforming raw data into a format suitable for model training. This stage ensures the model receives accurate and reliable input data, significantly impacting the model’s performance and reliability. Key activities include data sourcing, data validation, data cleaning, and feature engineering. Deliverables include a standardized dataset ready for model training, documented data quality metrics, and detailed data transformation processes.

Challenges include data inconsistencies, missing values, and the need for extensive data preprocessing, which can be addressed through robust data validation techniques, imputation methods for missing values, and well-defined feature engineering strategies.
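Two of the techniques mentioned above, range validation and imputation of missing values, can be sketched in a few lines. Mean imputation is just one simple choice among many, and the function names and sample values are illustrative assumptions.

```python
from statistics import mean
from typing import List, Optional


def impute_mean(values: List[Optional[float]]) -> List[float]:
    """Replace missing entries (None) with the mean of the observed ones."""
    observed = [v for v in values if v is not None]
    if not observed:
        raise ValueError("cannot impute a column with no observed values")
    fill = mean(observed)
    return [fill if v is None else v for v in values]


def out_of_range(values: List[float], lo: float, hi: float) -> List[int]:
    """Return the positions of values that fall outside [lo, hi]."""
    return [i for i, v in enumerate(values) if not lo <= v <= hi]


# Hypothetical column of ages with one missing entry and one implausible value.
ages = impute_mean([30.0, None, 50.0, 400.0])
bad_rows = out_of_range(ages, 0.0, 120.0)
```

Running validation after imputation, as here, shows a side effect worth watching: the outlier also distorts the imputed value, which is why validation is often applied before imputation as well.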

Model Training and Evaluation

This stage focuses on developing, training, and evaluating the machine learning model. Key activities include selecting appropriate algorithms, preparing training data, training the model, and evaluating its performance against established metrics. Deliverables include a trained model, performance metrics (accuracy, precision, recall, F1-score), and a model training log. Challenges include selecting the optimal model architecture, addressing overfitting and underfitting, and ensuring the model generalizes well to unseen data.

Solutions include using cross-validation techniques, employing regularization methods, and conducting comprehensive testing with diverse datasets.
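For reference, the metrics named above follow directly from the confusion-matrix counts, and k-fold cross-validation reduces to splitting row indices into folds. This is a dependency-free sketch for binary labels; in practice a library such as scikit-learn would normally be used, and the helper names here are hypothetical.

```python
from typing import Dict, Iterator, List, Sequence, Tuple


def classification_metrics(y_true: Sequence[int], y_pred: Sequence[int]) -> Dict[str, float]:
    """Accuracy, precision, recall, and F1 for binary labels (1 = positive)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {
        "accuracy": (tp + tn) / len(pairs),
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }


def k_fold_indices(n: int, k: int) -> Iterator[Tuple[List[int], List[int]]]:
    """Yield (train_indices, validation_indices) for k contiguous folds."""
    sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    start = 0
    for size in sizes:
        stop = start + size
        validation = list(range(start, stop))
        training = [i for i in range(n) if i < start or i >= stop]
        yield training, validation
        start = stop


metrics = classification_metrics([1, 1, 1, 0, 0, 0, 1, 0], [1, 1, 0, 0, 0, 1, 1, 0])
folds = list(k_fold_indices(5, 2))
```

Averaging the metrics across folds gives a less optimistic estimate than a single train/test split, which helps expose overfitting.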

Model Deployment and Monitoring

This stage focuses on deploying the trained model into a production environment and continuously monitoring its performance. Key activities include choosing a deployment strategy, packaging the model, integrating it with existing systems, and implementing monitoring tools. Deliverables include a deployed model accessible via an API, monitoring dashboards, and alert mechanisms for performance degradation. Challenges include ensuring seamless integration with existing infrastructure, handling high-traffic loads, and identifying and responding to model drift.

Solutions include using containerization technologies (like Docker), employing load balancing mechanisms, and establishing proactive monitoring systems to detect performance issues early.
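Drift detection can take many forms. One commonly used option, shown here purely as an example and not as the method this article prescribes, is the population stability index (PSI), which compares the binned distribution of a feature or score in production against its training baseline. The bin count and the sample data are assumptions.

```python
import math
from typing import List, Sequence


def population_stability_index(
    expected: Sequence[float],
    actual: Sequence[float],
    bins: int = 10,
    eps: float = 1e-6,
) -> float:
    """PSI between a baseline sample and a live sample, using equal-width bins."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if the baseline is constant

    def proportions(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values
        # eps keeps empty bins from producing log(0) or division by zero
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Hypothetical samples: live values shifted well away from the training baseline.
training_scores = [float(i) for i in range(100)]
live_scores = [v + 50.0 for v in training_scores]
psi_same = population_stability_index(training_scores, training_scores)
psi_shifted = population_stability_index(training_scores, live_scores)
```

A frequently quoted rule of thumb treats a PSI above roughly 0.25 as a significant shift, though the right cutoff depends on the application.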

Model Retraining and Maintenance

This stage involves periodically retraining the model to maintain its accuracy and adapt to changing data patterns. Key activities include identifying the need for retraining, collecting new data, retraining the model, and updating the deployed model. Deliverables include a retrained model, updated performance metrics, and a documented retraining process. Challenges include determining the optimal retraining frequency, handling the computational cost of retraining, and ensuring a smooth transition to the updated model.

Solutions include establishing automated retraining schedules, leveraging cloud-based resources for computational needs, and implementing a rollback strategy for handling unexpected issues.
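The rollback strategy mentioned above can be illustrated with a tiny in-memory registry that remembers the order in which versions went live. The class and method names are hypothetical; a real registry would persist this state and store actual model artifacts.

```python
from typing import Any, Dict, List, Optional


class ModelRegistry:
    """Minimal in-memory registry that supports promotion and rollback."""

    def __init__(self) -> None:
        self._artifacts: Dict[str, Any] = {}
        self._history: List[str] = []  # promoted versions; the last one is live

    def register(self, version: str, artifact: Any) -> None:
        if version in self._artifacts:
            raise ValueError(f"version {version!r} is already registered")
        self._artifacts[version] = artifact

    def promote(self, version: str) -> None:
        if version not in self._artifacts:
            raise KeyError(version)
        self._history.append(version)

    def rollback(self) -> str:
        """Retire the live version and return the one that is live afterwards."""
        if len(self._history) < 2:
            raise RuntimeError("no earlier version to roll back to")
        self._history.pop()
        return self._history[-1]

    @property
    def live(self) -> Optional[str]:
        return self._history[-1] if self._history else None


registry = ModelRegistry()
registry.register("v1", {"weights": [0.2, 0.8]})
registry.register("v2", {"weights": [0.3, 0.7]})
registry.promote("v1")
registry.promote("v2")
live_before = registry.live
restored = registry.rollback()
```

Keeping the previous artifact registered is what makes the rollback cheap: nothing has to be retrained to return to a known-good state.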

MLOps Lifecycle Workflow

| Stage | Key Activities | Deliverables | Dependencies |
|---|---|---|---|
| Data Preparation & Collection | Data sourcing, cleaning, transformation | Cleaned dataset, data documentation | None |
| Model Training & Evaluation | Model selection, training, evaluation | Trained model, performance metrics | Data Preparation & Collection |
| Model Deployment & Monitoring | Deployment, integration, monitoring | Deployed model, monitoring dashboards | Model Training & Evaluation |
| Model Retraining & Maintenance | Retraining, updating | Retrained model, updated metrics | Model Deployment & Monitoring |
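The Dependencies column of the workflow table describes a small dependency graph, and an orchestrator can derive a valid execution order from it. A minimal sketch using Python's standard `graphlib` module (Python 3.9 or later), with the stage names copied from the table:

```python
from graphlib import TopologicalSorter
from typing import Dict, List, Set

# Each stage maps to the set of stages it depends on.
DEPENDENCIES: Dict[str, Set[str]] = {
    "Data Preparation & Collection": set(),
    "Model Training & Evaluation": {"Data Preparation & Collection"},
    "Model Deployment & Monitoring": {"Model Training & Evaluation"},
    "Model Retraining & Maintenance": {"Model Deployment & Monitoring"},
}


def execution_order(dependencies: Dict[str, Set[str]]) -> List[str]:
    """Return the stages in an order that respects every dependency."""
    return list(TopologicalSorter(dependencies).static_order())


order = execution_order(DEPENDENCIES)
```

If someone introduced a circular dependency, `static_order()` would raise `graphlib.CycleError` instead of returning an order, which is a useful guard when the graph grows beyond four stages.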

Tools and Technologies in MLOps

MLOps relies heavily on a suite of tools and technologies to streamline the machine learning (ML) lifecycle. These tools automate tasks, monitor performance, and facilitate collaboration among data scientists, engineers, and business stakeholders. This section will explore crucial tools and technologies used in MLOps, demonstrating their use in automating various stages of the MLOps lifecycle.

Effective MLOps implementation hinges on the selection and integration of appropriate tools. A well-chosen set of tools not only streamlines tasks but also fosters collaboration and enhances the overall efficiency of the ML pipeline.

Model Training and Development Tools

Model training and development tools are critical in MLOps, enabling efficient model development, testing, and versioning. These tools often integrate with other MLOps tools to ensure seamless workflows. Key tools in this category include:

- Jupyter Notebooks/Lab: These interactive environments are popular among data scientists for experimentation and model development. They facilitate rapid prototyping and collaboration, but managing versions and reproducibility across teams can be challenging.

- TensorFlow/PyTorch: These powerful deep learning frameworks provide the computational engine for building and training complex ML models. They are highly versatile but require specialized expertise for optimal utilization.

- MLflow: MLflow is a platform for managing the entire ML lifecycle, from experimentation to deployment. It tracks experiments, manages models, and facilitates reproducibility. MLflow offers a unified interface for various tools and frameworks.

Model Deployment and Monitoring Tools

Deployment and monitoring tools are crucial for ensuring the continuous availability and performance of ML models in production. They allow for real-time monitoring of model performance and provide mechanisms for automated model retraining.

- Kubernetes: A container orchestration platform that enables deployment and management of ML models in a scalable and robust manner. It provides the infrastructure for deploying and managing containerized ML models, enabling flexibility and scalability.

- Docker: Docker containers package software applications and their dependencies, ensuring consistency across different environments. This isolation and portability are essential for consistent model performance and deployment.

- Monitoring tools (e.g., Prometheus, Grafana): These tools provide insights into the performance of deployed models. They track metrics such as latency, accuracy, and resource consumption, enabling proactive identification and resolution of issues.

CI/CD Pipelines for ML

CI/CD pipelines are essential for automating the deployment process, ensuring continuous integration and continuous delivery of ML models. Automated pipelines enable faster model releases, increased reliability, and reduced deployment errors.

- GitHub Actions/GitLab CI/Jenkins: These platforms automate the build, test, and deployment stages of the software development lifecycle. They can be extended to manage the entire ML pipeline, enabling automation of model training, validation, and deployment.

- Automated Testing Frameworks: Automated testing ensures the quality and reliability of the ML model throughout the development process. Tools like pytest, unittest, and others are commonly used to automate unit, integration, and end-to-end tests.
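To give a flavor of what automated tests for a model can look like, here is a small pytest-style sketch. The `predict_churn` function is a hypothetical stand-in for a trained model's prediction function. The tests use plain `assert` statements, so pytest would discover and run them in a CI job, but they can also be called directly.

```python
def predict_churn(features: dict) -> float:
    """Hypothetical stand-in for a trained model's prediction function."""
    score = 0.1 + 0.2 * features["support_tickets"] - 0.05 * features["tenure_years"]
    return min(max(score, 0.0), 1.0)  # clamp to a valid probability


def test_prediction_is_a_probability():
    # Extreme inputs must still produce a value in [0, 1].
    for tickets, tenure in [(0, 0), (50, 0), (0, 50)]:
        p = predict_churn({"support_tickets": tickets, "tenure_years": tenure})
        assert 0.0 <= p <= 1.0


def test_more_tickets_never_lowers_risk():
    # Behavioral check: risk should not decrease as support tickets increase.
    low = predict_churn({"support_tickets": 1, "tenure_years": 3})
    high = predict_churn({"support_tickets": 4, "tenure_years": 3})
    assert high >= low


def test_missing_feature_is_rejected():
    # A malformed request should fail loudly rather than return a guess.
    try:
        predict_churn({"support_tickets": 1})
    except KeyError:
        return
    raise AssertionError("expected KeyError for a missing feature")
```

Tests like these check properties of the model's behavior rather than exact outputs, which keeps them stable across retraining.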

Comparison of Different Tools

A comparative analysis of various MLOps tools reveals their distinct strengths and weaknesses. The ideal choice depends on the specific needs and complexity of the ML project.

| Tool | Features | Capabilities |
|---|---|---|
| MLflow | Experiment tracking, model registry, deployment | Excellent for managing the entire ML lifecycle |
| Kubernetes | Container orchestration, scalability | Crucial for deploying and managing complex ML models in production |
| GitHub Actions | Automated CI/CD pipelines | Streamlines the deployment process for ML models |

Automation in the MLOps Lifecycle

These tools automate different stages of the MLOps lifecycle. For instance, MLflow automates model tracking and management, while Kubernetes automates model deployment and scaling. CI/CD pipelines automate the deployment process. This automation streamlines the ML lifecycle, leading to faster model releases, improved quality, and reduced manual effort.

Benefits of Implementing MLOps

Implementing MLOps offers a multitude of benefits, significantly impacting the efficiency, productivity, and overall success of machine learning projects. It streamlines the entire machine learning lifecycle, fostering collaboration between data scientists, engineers, and operations teams. This streamlined approach leads to faster deployment cycles, improved model reliability, and reduced operational costs.

MLOps provides a structured framework for managing the complexities of machine learning projects, enabling organizations to deliver high-quality models to production environments consistently and efficiently. This structured approach allows for greater control over the entire model lifecycle, from development to deployment and monitoring. The benefits extend to improved collaboration and communication between teams, fostering a more unified and productive approach to machine learning projects.

Improved Efficiency and Productivity

The adoption of MLOps principles leads to a noticeable increase in efficiency and productivity across the machine learning development lifecycle. Automated processes, such as continuous integration and continuous delivery (CI/CD), streamline the deployment pipeline, reducing manual intervention and human error. This automation significantly reduces the time required to deploy models and iterate on them. Improved collaboration between teams also enhances communication and knowledge sharing, leading to a more efficient and productive workflow.

Enhanced Model Deployment Speed and Reliability

MLOps significantly accelerates the model deployment process. Using automated tools and pipelines, models can be deployed to production environments quickly and reliably. This speed is crucial for responding to market demands and gaining a competitive edge. Furthermore, the automated processes involved in MLOps help to ensure the reliability and quality of deployed models, minimizing the risk of errors and improving the overall performance.

Reduced Operational Costs and Resource Utilization

Implementing MLOps can lead to substantial cost savings and improved resource utilization. By automating tasks and streamlining processes, MLOps reduces the need for manual intervention and human oversight, leading to a more efficient use of resources. This reduced operational overhead translates into lower costs and allows organizations to focus their resources on higher-value tasks, such as model development and improvement.

Moreover, MLOps can help optimize resource utilization by identifying and addressing bottlenecks in the model deployment pipeline.

Enhanced Collaboration and Communication

MLOps fosters collaboration and communication among data scientists, engineers, and operations teams. Shared tools, standardized processes, and clear communication channels promote a more unified approach to machine learning projects. This improved communication can significantly enhance the speed and accuracy of problem-solving and the delivery of valuable insights. For instance, clear documentation and version control mechanisms aid communication and ensure that all stakeholders are aligned on the project’s progress and future goals.

Challenges in MLOps Implementation

Implementing MLOps, while offering significant advantages, presents several hurdles. These challenges often stem from the unique nature of machine learning, including the iterative development process, the reliance on data, and the need for continuous monitoring and improvement. Overcoming these obstacles requires careful planning, resource allocation, and a deep understanding of the intricacies of the ML lifecycle.

The successful deployment and management of machine learning models often face unforeseen difficulties. Addressing these challenges is crucial for achieving the desired outcomes and realizing the full potential of MLOps. A proactive approach to anticipate and mitigate these issues is essential for a smooth transition and sustained success.

Data Management Challenges

Data is the lifeblood of machine learning. Effective data management is critical for model training and deployment. Inconsistencies, quality issues, and evolving data formats can significantly impede the MLOps process. Managing data across various stages, from ingestion to versioning, and ensuring its integrity throughout the ML lifecycle is a key challenge. Furthermore, maintaining data privacy and compliance with regulations like GDPR is crucial.

Data silos and lack of centralized data governance can further complicate data management efforts.

Model Versioning and Management Issues

Model versioning and management are crucial for tracking changes and maintaining reproducibility. Complex model architectures and the iterative nature of machine learning development can lead to numerous model versions. Efficiently tracking these versions, understanding the differences between them, and managing their deployment is a key challenge. Version control systems and robust model registries become essential tools for managing model versions and their associated metadata.

Monitoring and Managing Deployed Models

Monitoring deployed models is essential for ensuring their continued performance and identifying potential issues. As models operate in real-world environments, their performance may degrade over time due to evolving data patterns or drift. Developing robust monitoring systems to detect and address performance issues is critical. These systems need to be scalable, reliable, and capable of handling high volumes of data.

A key challenge is identifying the root cause of performance degradation or unexpected behavior.

Scaling MLOps Solutions

Scaling MLOps solutions to accommodate increasing model complexity and data volumes is another significant challenge. As machine learning models become more sophisticated, the computational resources and infrastructure required for training and deployment increase. Developing scalable and flexible infrastructure capable of handling large datasets and complex models is crucial. Ensuring efficient resource allocation and managing the associated costs is also critical for scaling.

Furthermore, maintaining a consistent and reliable infrastructure across different environments, such as development, testing, and production, can be a considerable hurdle.

MLOps and Data Science Collaboration

Effective implementation of MLOps hinges on strong collaboration between data scientists and operations teams. A harmonious partnership ensures that machine learning models, developed with meticulous care, are deployed and maintained reliably, delivering sustained value. This collaborative approach is vital for organizations seeking to leverage the full potential of machine learning.

Crucial Interaction Between Teams

Data scientists, focused on model development and refinement, often operate in a different environment than operations teams, concerned with model deployment, monitoring, and maintenance. MLOps bridges this gap, facilitating seamless knowledge transfer and shared responsibility. Data scientists contribute their expertise in model building, while operations teams provide the infrastructure, tools, and processes for model deployment and monitoring. This synergy maximizes efficiency and reduces the risk of model failure or inconsistencies in real-world application.

Necessary Skills and Expertise

Data scientists require proficiency in model building, algorithm selection, data preprocessing, and evaluation metrics. They need a strong understanding of the underlying data and the business problem they are trying to solve. Operations teams, on the other hand, require expertise in infrastructure management, deployment pipelines, monitoring tools, and security protocols. They must also possess a solid grasp of the technical aspects of the models and the impact of operational decisions on model performance.

This collaborative approach requires a high degree of mutual understanding and respect for each other’s roles.

Ensuring Seamless Communication and Collaboration

Clear communication channels and defined roles are crucial for effective collaboration. Regular meetings, shared documentation, and clear communication protocols, such as using a shared platform for reporting and updates, facilitate a seamless flow of information. Data scientists can document their models’ intricacies, assumptions, and limitations, enabling operations teams to understand the models’ behavior and potential vulnerabilities. A shared understanding of the model’s purpose and its impact on the business is paramount.

Methods for Model Transfer

Efficient model transfer requires a structured process. This process should include standardized model packaging, version control, and documentation. Data scientists should package their models together with the dependencies they need to run, in a portable form such as a Docker image or a Python package. Clear documentation explaining the model’s architecture, input requirements, and output interpretation is essential. Version control systems ensure that different versions of the model are tracked and easily retrieved.

Operations teams can then use these packages to seamlessly deploy the models to production environments. This method minimizes the risk of errors and ensures that the model functions as intended in the production environment.
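A standardized package can be as simple as a serialized model stored next to a manifest that records its name, version, expected inputs, and a checksum. The layout below (`model.pkl` plus `manifest.json`) and all names in it are assumptions made for illustration, not an established standard.

```python
import hashlib
import json
import pickle
import tempfile
from pathlib import Path


def package_model(model, out_dir: Path, name: str, version: str, input_schema: dict) -> Path:
    """Write the serialized model and a manifest describing it; return the manifest path."""
    out_dir.mkdir(parents=True, exist_ok=True)
    blob = pickle.dumps(model)
    (out_dir / "model.pkl").write_bytes(blob)
    manifest = {
        "name": name,
        "version": version,
        "input_schema": input_schema,
        "sha256": hashlib.sha256(blob).hexdigest(),
    }
    manifest_path = out_dir / "manifest.json"
    manifest_path.write_text(json.dumps(manifest, indent=2), encoding="utf-8")
    return manifest_path


def load_model(pkg_dir: Path):
    """Load a packaged model, refusing artifacts that do not match the manifest."""
    manifest = json.loads((pkg_dir / "manifest.json").read_text(encoding="utf-8"))
    blob = (pkg_dir / "model.pkl").read_bytes()
    if hashlib.sha256(blob).hexdigest() != manifest["sha256"]:
        raise ValueError("model artifact does not match manifest checksum")
    # pickle can execute arbitrary code: only load packages from trusted sources.
    return pickle.loads(blob), manifest


def roundtrip_example():
    """Package a toy 'model' (a dict of coefficients) and load it back."""
    with tempfile.TemporaryDirectory() as tmp:
        pkg = Path(tmp) / "churn-model"
        package_model(
            {"bias": 0.1, "weight": 0.2},
            pkg,
            name="churn-model",
            version="1.0.0",
            input_schema={"tenure_years": "float"},
        )
        return load_model(pkg)
```

The checksum lets the operations side confirm that the artifact it deploys is byte-for-byte the one the data scientist packaged.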

Security Considerations in MLOps

Robust security is paramount in MLOps, as machine learning models and the data they process often contain sensitive information. Neglecting security can lead to data breaches, compromised models, and reputational damage. This section explores critical security considerations throughout the MLOps lifecycle, from data ingestion to model deployment.

Security in MLOps is not a separate process but an integral part of every stage. It requires proactive measures to protect sensitive data, models, and infrastructure from various threats, including unauthorized access, malicious attacks, and accidental data breaches.

Importance of Security in MLOps

Ensuring the security of machine learning models and associated data is crucial for maintaining trust and preventing potential harm. Compromised models can lead to inaccurate predictions or even malicious outcomes, while data breaches can expose sensitive personal information and financial data. Robust security measures are essential for protecting the integrity and confidentiality of data throughout the entire MLOps lifecycle.

This includes safeguarding training data, model parameters, and deployed model outputs.

Security Risks Associated with ML Models and Data

Various security risks are inherent in the ML model development and deployment process. These include:

- Data breaches: Unauthorized access to sensitive data used for model training can expose confidential information, leading to significant financial and reputational losses. Examples include breaches of customer data used for personalized recommendations or medical data for disease prediction.

- Data poisoning (sometimes called model poisoning): Malicious actors can inject corrupted or mislabeled data into the training dataset, leading to biased or inaccurate models. This can compromise the integrity of predictions and decisions made by the model.

- Evasion attacks: Adversaries may craft or manipulate input data at inference time so that the model misclassifies it, leading to incorrect or undesirable outcomes. For example, in fraud detection, an attacker might try to disguise fraudulent transactions to bypass the model’s detection.

- Supply chain vulnerabilities: Security weaknesses in the tools and libraries used in the MLOps pipeline can expose the entire system to risk. Vulnerable open-source components can be exploited by attackers.

- Unauthorized access to models: Malicious actors may attempt to gain access to deployed models to understand their inner workings, extract sensitive information, or even modify the model’s behavior.

Safeguarding Sensitive Data Throughout the MLOps Lifecycle

A multi-layered approach to data security is crucial. This includes:

- Data anonymization and pseudonymization: Transforming sensitive data into a format that preserves its utility for model training while removing identifying information. Techniques include data masking and aggregation.

- Access control and authentication: Implementing strict access controls to restrict access to sensitive data and models based on user roles and permissions. This includes multi-factor authentication and role-based access control (RBAC).

- Encryption: Encrypting data at rest and in transit to protect it from unauthorized access and interception. Use of strong encryption algorithms is crucial.

- Regular security audits and vulnerability assessments: Conducting periodic security audits and vulnerability assessments of the MLOps pipeline to identify and address potential weaknesses. This helps to proactively address potential security threats.
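Two items from the list above, pseudonymization and role-based access control, lend themselves to a short sketch. The role names, permissions, and key below are hypothetical, and a real deployment would load the key from a secrets manager rather than hard-coding it.

```python
import hashlib
import hmac


def pseudonymize(identifier: str, secret_key: bytes) -> str:
    """Replace an identifier with a keyed hash.

    The same input and key always give the same token, so records can still
    be joined, but the original value cannot be read back from the token.
    """
    return hmac.new(secret_key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


def mask_email(email: str) -> str:
    """Simple data masking: keep only the first character of the local part."""
    local, _, domain = email.partition("@")
    return f"{local[:1]}***@{domain}"


# Hypothetical role-to-permission mapping for role-based access control (RBAC).
ROLE_PERMISSIONS = {
    "data_scientist": {"read_training_data", "register_model"},
    "ml_engineer": {"register_model", "deploy_model"},
    "auditor": {"read_audit_log"},
}


def is_allowed(role: str, action: str) -> bool:
    """Unknown roles get no permissions."""
    return action in ROLE_PERMISSIONS.get(role, set())


KEY = b"example-key-do-not-use-in-production"  # placeholder for illustration
token = pseudonymize("customer-1042", KEY)
```

Using a keyed hash rather than a plain hash matters here: without the key, an attacker cannot rebuild the mapping by hashing guessed identifiers.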

Best Practices for Securing ML Models and Data

Secure development practices are vital to mitigate risks throughout the entire MLOps lifecycle:

- Principle of least privilege: Grant users only the necessary access rights to perform their tasks. This limits the potential damage from a security breach.

- Input validation and sanitization: Validate and sanitize all inputs to the model to prevent malicious code injection or other attacks. This includes data validation to prevent unexpected inputs that could lead to errors.

- Regular security training: Educate all team members about security best practices and potential threats. This is vital to maintain a security-conscious culture within the team.

- Continuous monitoring: Monitor the system for anomalies and suspicious activity so that potential security breaches are detected quickly and incidents can be handled promptly.

- Compliance with regulations: Ensure compliance with relevant data privacy regulations (e.g., GDPR, CCPA) and other industry standards. This helps to maintain a trusted and compliant environment.
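The input validation practice listed above can be sketched as a small check that runs before a request reaches the model. The schema, field names, and bounds below are hypothetical and stand in for whatever features a real model expects. The sketch rejects unknown fields, missing fields, wrong types, non-finite numbers, and out-of-range values.

```python
import math

# Hypothetical schema: feature name -> (expected type, minimum, maximum).
SCHEMA = {
    "amount": (float, 0.0, 1_000_000.0),
    "account_age_days": (int, 0, 36_500),
}


def validate_features(payload, schema=SCHEMA):
    """Return a cleaned copy of the payload or raise ValueError."""
    unknown = set(payload) - set(schema)
    if unknown:
        raise ValueError(f"unexpected fields: {sorted(unknown)}")

    clean = {}
    for name, (expected_type, low, high) in schema.items():
        if name not in payload:
            raise ValueError(f"missing field: {name}")
        value = payload[name]
        # bool is a subclass of int in Python, so reject it explicitly.
        if isinstance(value, bool) or not isinstance(value, (int, float)):
            raise ValueError(f"{name} must be numeric")
        if expected_type is int and not isinstance(value, int):
            raise ValueError(f"{name} must be an integer")
        if isinstance(value, float) and not math.isfinite(value):
            raise ValueError(f"{name} must be finite")
        if not low <= value <= high:
            raise ValueError(f"{name} out of range [{low}, {high}]")
        clean[name] = expected_type(value)
    return clean
```

Rejecting unknown fields outright, instead of silently dropping them, is a design choice that makes malformed or probing requests visible to the monitoring described above.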

Case Studies and Real-World Examples

Implementing MLOps successfully requires understanding how other organizations have navigated the challenges and leveraged the benefits. Real-world case studies provide valuable insights into the strategies, technologies, and considerations involved in deploying and maintaining machine learning models at scale. These examples illustrate how MLOps practices have been applied in diverse industries, offering lessons learned and best practices for future implementations.

Real-world MLOps deployments demonstrate a spectrum of successes and failures, highlighting the importance of tailoring strategies to specific needs and contexts. Analyzing these cases allows us to understand how organizations have addressed challenges like model deployment, monitoring, and maintenance, ultimately improving the reliability and efficiency of their machine learning pipelines.

Financial Services Industry

The financial sector often requires high levels of accuracy and security in its machine learning models. MLOps implementation in finance typically focuses on fraud detection, risk assessment, and customer churn prediction. A successful example involves a major bank that leveraged MLOps to automate the deployment of fraud detection models. By integrating CI/CD pipelines, the bank was able to deploy updated models quickly, reducing fraud rates and improving operational efficiency.

The benefits included faster model iteration cycles, reduced manual intervention, and enhanced security through automated security scans and deployments. Challenges included ensuring compliance with strict regulatory requirements, maintaining data privacy, and managing the complexity of large datasets. The use of containerization and orchestration tools proved critical in streamlining deployments.

E-commerce Platforms

E-commerce companies often use machine learning for personalized recommendations, demand forecasting, and inventory management. A successful case study from an e-commerce giant involved implementing MLOps to improve the performance of its recommendation engine. This involved automating the model training and deployment process, enabling faster iteration cycles for model improvements and leading to increased customer satisfaction and revenue. The benefits included increased efficiency in model development, reduced deployment time, and improved model accuracy.

The challenges included managing the large volumes of data generated by online transactions, ensuring the scalability of the MLOps pipeline, and maintaining the security of customer data.

Healthcare Providers

The healthcare sector uses machine learning for diagnostics, drug discovery, and patient risk prediction. A successful example from a healthcare provider involved using MLOps to automate the deployment of models for patient risk assessment. This involved creating a standardized workflow for model development, training, and deployment, resulting in a significant reduction in manual effort. The benefits included a streamlined process for model deployment, reduced operational costs, and increased speed of model delivery.

The challenges included ensuring the accuracy and reliability of the models, maintaining patient data privacy, and complying with healthcare regulations. The key was to create a robust governance framework that addressed these concerns.

Manufacturing

Manufacturing companies leverage MLOps to optimize production processes, predict equipment failures, and improve quality control. A notable case involves a manufacturing company that utilized MLOps to improve its predictive maintenance system. Implementing automated model retraining and deployment enabled the company to predict equipment failures more accurately, reducing downtime and increasing productivity. Benefits included a faster time-to-market for new models, reduced maintenance costs, and increased equipment lifespan.

Challenges included integrating diverse manufacturing data sources, ensuring model reliability in dynamic environments, and managing the complexity of industrial equipment. Careful data validation and model validation steps were crucial.

Summary Table of Key Findings

| Industry | Key Use Case | Strategies | Benefits | Challenges |
|---|---|---|---|---|
| Financial Services | Fraud detection, risk assessment | CI/CD pipelines, containerization | Faster model iteration, reduced manual intervention | Regulatory compliance, data privacy |
| E-commerce | Personalized recommendations | Automated model training/deployment | Increased efficiency, reduced deployment time | Large data volumes, scalability |
| Healthcare | Patient risk assessment | Standardized workflows | Streamlined deployment, reduced costs | Model accuracy, patient privacy |
| Manufacturing | Predictive maintenance | Automated retraining/deployment | Faster time-to-market, reduced downtime | Data integration, model reliability |

Final Thoughts

In conclusion, MLOps is not just a set of tools, but a holistic approach to managing the ML lifecycle. By embracing its core principles, organizations can significantly enhance the efficiency, reliability, and scalability of their ML deployments. The benefits extend from faster model deployment to reduced operational costs, making MLOps an essential aspect of any modern ML strategy.

User Queries

What are the key differences between traditional software development and MLOps methodologies?

Traditional software development often focuses on pre-defined specifications and stable codebases. MLOps, however, must accommodate iterative model improvements, dynamic data, and continuous monitoring. This requires a more agile and adaptable approach, emphasizing continuous feedback loops.

What are some common challenges in implementing MLOps?

Challenges include managing complex data pipelines, ensuring model versioning consistency, and effectively monitoring deployed models in real-world environments. Scaling MLOps solutions to accommodate increasing data volume and model complexity is also a significant hurdle.

How can data scientists and operations teams collaborate effectively in MLOps?

Clear communication channels and shared understanding of each other’s roles are crucial. Establishing standardized processes for model transfer and documentation is essential for seamless collaboration. Training programs and workshops can also help bridge the knowledge gap between teams.

What are the crucial security considerations in MLOps?

MLOps requires careful attention to data security throughout the lifecycle. This includes securing sensitive data, safeguarding ML models, and implementing robust access controls. Regular security audits and compliance with industry best practices are also essential.