The serverless-first development approach represents a paradigm shift in software architecture, prioritizing the utilization of serverless computing as the foundation for application design and deployment. This methodology moves away from managing underlying infrastructure, allowing developers to focus on writing code and delivering value. This approach leverages cloud providers’ services to handle tasks such as server provisioning, scaling, and maintenance, ultimately aiming for increased efficiency and reduced operational overhead.

Serverless-first development encapsulates a variety of technologies and practices. It typically involves Function-as-a-Service (FaaS), API gateways, and event-driven architectures, all working in concert to build scalable, resilient, and cost-effective applications. Understanding the nuances of this approach requires a deep dive into its core principles, comparing it to traditional development models, and examining the benefits it offers for businesses and developers alike.

Introduction to Serverless-First Development

Serverless-first development represents a paradigm shift in software engineering, prioritizing the utilization of serverless computing as the foundational architecture for application development. This approach aims to leverage the benefits of serverless technologies from the outset, fundamentally altering how applications are designed, built, and deployed. It necessitates a deliberate choice to favor serverless services over traditional server-based infrastructure wherever feasible.The core tenets of serverless-first development revolve around embracing a “pay-as-you-go” model, automated scaling, and event-driven architectures.

This allows developers to concentrate on writing code and business logic rather than managing servers, leading to improved agility, reduced operational overhead, and potentially lower costs.

Defining Serverless Computing

Serverless computing is a cloud computing execution model where the cloud provider dynamically manages the allocation of machine resources. Developers deploy code (typically in the form of functions) and are charged only for the actual compute time consumed by their code execution. The cloud provider handles all aspects of server management, including provisioning, scaling, and patching. This abstraction of infrastructure allows developers to focus solely on writing and deploying code, significantly streamlining the development process.

Key Benefits of a Serverless-First Approach

Adopting a serverless-first approach offers several significant advantages, impacting various facets of the software development lifecycle.

- Reduced Operational Overhead: The cloud provider manages all aspects of server infrastructure, including server provisioning, maintenance, and scaling. This frees developers from the responsibility of managing servers, allowing them to focus on writing code and business logic.

- Scalability and High Availability: Serverless platforms automatically scale compute resources based on demand. This inherent scalability ensures that applications can handle fluctuating workloads without manual intervention, guaranteeing high availability. For example, a news website built with a serverless-first approach can seamlessly accommodate a surge in traffic during breaking news events.

- Cost Optimization: The “pay-as-you-go” pricing model of serverless computing eliminates the need to pay for idle resources. Developers are charged only for the compute time consumed by their code execution. This can lead to significant cost savings, particularly for applications with intermittent or unpredictable workloads. For instance, a batch processing job that runs for only a few minutes a day would incur minimal costs compared to running on a continuously provisioned server.

- Increased Developer Productivity: Serverless platforms simplify the development process by abstracting away server management complexities. This allows developers to focus on writing code and building features, accelerating the development cycle and improving overall productivity. The streamlined development process facilitates faster time-to-market for new features and applications.

- Enhanced Agility and Innovation: The rapid development and deployment capabilities of serverless platforms enable developers to experiment with new features and technologies more easily. This fosters a culture of innovation and allows organizations to respond quickly to changing market demands.

Comparing Serverless-First to Traditional Development

Serverless-first development represents a paradigm shift from traditional application development, particularly in how infrastructure is managed, costs are incurred, and applications are deployed. Understanding the distinctions between these two approaches is crucial for making informed decisions about architectural choices and development strategies.

Infrastructure Management Differences

The approach to infrastructure management fundamentally differs between serverless-first and traditional development. Traditional development often involves significant upfront investment and ongoing maintenance responsibilities, while serverless-first development abstracts away much of this complexity.

Traditional development infrastructure typically involves:

- Provisioning and Managing Servers: Developers or operations teams are responsible for procuring, configuring, and maintaining physical or virtual servers. This includes tasks such as hardware selection, operating system installation, patching, and security updates. This model often necessitates expertise in server administration and network configuration.

- Capacity Planning: Determining the appropriate server capacity to handle expected traffic and scaling needs is crucial. Over-provisioning leads to wasted resources and increased costs, while under-provisioning can result in performance issues and service outages. This requires forecasting and monitoring.

- Deployment and Scaling: Deploying applications involves complex processes, including setting up deployment pipelines, configuring load balancers, and managing scaling infrastructure. Scaling typically involves manually adjusting server instances or implementing auto-scaling rules.

- Monitoring and Maintenance: Continuous monitoring of server health, performance, and security is essential. This includes tasks such as logging, alerting, and incident response. Ongoing maintenance involves applying security patches, updating software, and performing routine backups.

Serverless-first development infrastructure, in contrast, focuses on:

- Abstracted Infrastructure: Serverless platforms, such as AWS Lambda, Azure Functions, and Google Cloud Functions, provide a fully managed environment where developers deploy code without managing servers. The platform handles all aspects of infrastructure management, including provisioning, scaling, and maintenance.

- Event-Driven Architecture: Applications are often designed around event triggers, such as HTTP requests, database updates, or scheduled events. This allows for granular scaling and efficient resource utilization, as functions are only invoked when needed.

- Automatic Scaling: Serverless platforms automatically scale resources based on demand. Developers do not need to manually configure scaling rules or manage server instances.

- Simplified Deployment: Deployment processes are streamlined, often involving simple code uploads or configuration changes. The platform handles the complexities of deploying and managing the application.

- Focus on Code: Developers can concentrate on writing code and business logic, rather than managing infrastructure. This can lead to increased developer productivity and faster time to market.

Cost Implications of Each Development Model

The cost structures of serverless-first and traditional development models differ significantly. These differences are primarily related to resource allocation, operational overhead, and the pricing models employed by cloud providers.

Traditional development costs typically involve:

- Upfront Infrastructure Costs: Investments in hardware, software licenses, and initial setup expenses can be substantial. This includes the cost of servers, networking equipment, operating systems, and databases.

- Ongoing Operational Costs: Recurring costs associated with server maintenance, power consumption, data center rent, and IT staff salaries are substantial. This includes expenses for system administrators, network engineers, and other IT professionals.

- Fixed Costs and Capacity Planning: Regardless of actual usage, organizations are often required to pay for fixed infrastructure resources, such as server instances. Over-provisioning to handle peak loads can lead to wasted resources and unnecessary costs.

- Capital Expenditure (CAPEX) vs. Operational Expenditure (OPEX): Traditional models often involve significant capital expenditure (CAPEX) for infrastructure, leading to high upfront costs.

Serverless-first development costs are generally characterized by:

- Pay-per-Use Pricing: Serverless platforms typically employ a pay-per-use pricing model, where customers are charged only for the actual resources consumed. This includes compute time, memory usage, and the number of function invocations.

- Granular Resource Allocation: Resources are allocated dynamically based on demand, ensuring that organizations pay only for the resources they need. This can lead to significant cost savings, especially for applications with variable workloads.

- Reduced Operational Overhead: Serverless platforms abstract away infrastructure management, reducing the need for dedicated IT staff and minimizing operational costs.

- Scalability and Efficiency: Serverless platforms automatically scale resources based on demand, eliminating the need for manual scaling and ensuring that applications can handle peak loads without over-provisioning.

- Operational Expenditure (OPEX) Focus: Serverless models primarily involve operational expenditure (OPEX), aligning costs with actual usage and reducing upfront investments.

Example of Cost Comparison:

Consider a web application with fluctuating traffic patterns. In a traditional model, a company might provision a server with a fixed capacity to handle peak loads. During periods of low traffic, the server would be underutilized, leading to wasted resources and unnecessary costs. In a serverless-first model, the application would automatically scale based on demand, incurring costs only for the actual compute time and resources used.

During periods of low traffic, costs would be minimal, while during peak loads, the application would scale up automatically, ensuring optimal performance without the need for manual intervention or over-provisioning. Real-world case studies often show substantial cost savings, sometimes up to 50% or more, when migrating from traditional infrastructure to serverless architectures.

Core Components of Serverless-First Architecture

Serverless-first architecture represents a paradigm shift in application development, emphasizing the utilization of cloud-native services to minimize infrastructure management and maximize developer productivity. This approach relies on a set of core components working in concert to enable scalable, cost-effective, and event-driven applications.

Essential Components of a Typical Serverless Architecture

A serverless architecture comprises several key elements that collectively define its operational characteristics. These components, often provided as managed services by cloud providers, contribute to the overall efficiency and scalability of the system.

- Function-as-a-Service (FaaS): FaaS platforms, such as AWS Lambda, Azure Functions, and Google Cloud Functions, are the fundamental building blocks. They allow developers to execute code in response to events without provisioning or managing servers. This “pay-per-use” model is a core tenet of serverless.

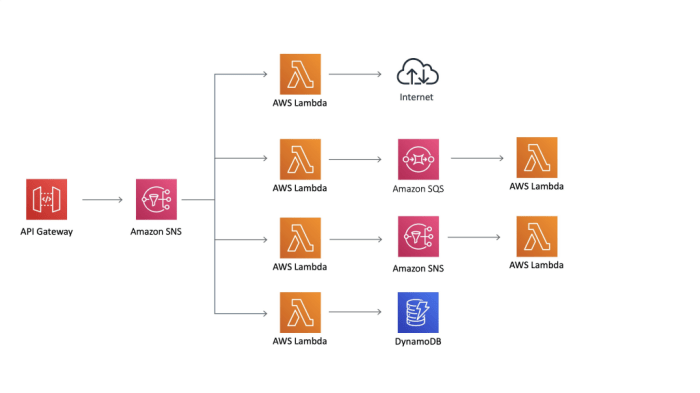

- API Gateway: An API Gateway, such as AWS API Gateway, acts as a front door for all API requests. It handles routing, security, and rate limiting, abstracting the underlying serverless functions from direct client access. This separation enhances security and simplifies API management.

- Event Sources: Serverless applications are inherently event-driven. Event sources, such as HTTP requests, database updates, scheduled tasks, and message queues (e.g., Amazon SQS, Azure Service Bus, Google Cloud Pub/Sub), trigger the execution of FaaS functions. These events initiate the processing workflows.

- Databases and Storage: Serverless applications utilize managed database and storage services. Examples include Amazon DynamoDB, Azure Cosmos DB, Google Cloud Datastore, and cloud storage services like Amazon S3, Azure Blob Storage, and Google Cloud Storage. These services provide scalable and reliable data persistence.

- Authentication and Authorization: Secure serverless applications require robust authentication and authorization mechanisms. Services like AWS Cognito, Azure Active Directory B2C, and Google Cloud Identity Platform handle user identity management and access control.

- Monitoring and Logging: Comprehensive monitoring and logging are essential for troubleshooting and performance optimization. Cloud providers offer services such as AWS CloudWatch, Azure Monitor, and Google Cloud Operations to collect, analyze, and visualize application metrics and logs.

Function-as-a-Service (FaaS) in Serverless-First Applications

FaaS is the cornerstone of serverless-first development. It enables developers to focus solely on writing code that responds to events, abstracting away the complexities of server management. The core benefit of FaaS is its ability to scale automatically based on demand.

- Event-Driven Execution: FaaS functions are triggered by events, such as HTTP requests, database changes, or scheduled tasks. This event-driven architecture enables highly responsive and scalable applications.

- Scalability and Elasticity: FaaS platforms automatically scale the number of function instances based on the incoming event load. This elasticity ensures that applications can handle sudden spikes in traffic without manual intervention.

- Cost Efficiency: The “pay-per-use” pricing model of FaaS means developers only pay for the compute time consumed by their functions. This can significantly reduce operational costs, especially for applications with variable workloads.

- Simplified Deployment: FaaS platforms simplify the deployment process by allowing developers to upload their code packages directly. The platform handles the underlying infrastructure, including server provisioning, scaling, and patching.

- Language Support: FaaS platforms typically support multiple programming languages, such as Python, Node.js, Java, and Go, providing flexibility for developers to choose their preferred tools.

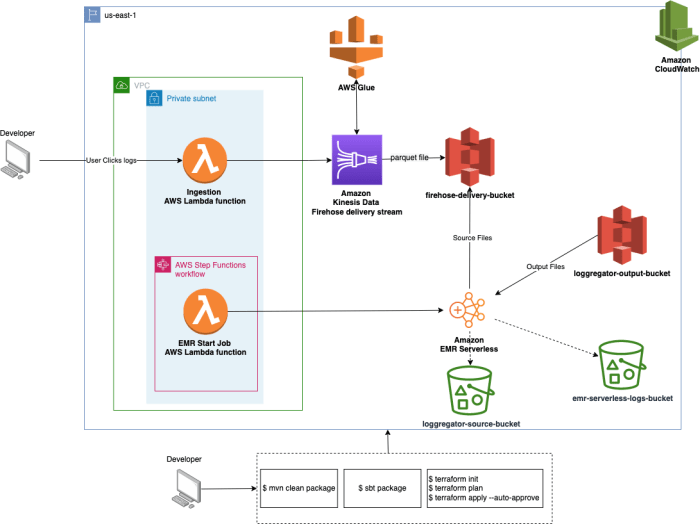

Architecture Diagram of a Serverless-First Application

Consider a serverless application designed to process image uploads. The following diagram illustrates a typical architecture using common cloud services.

The diagram depicts a system where a user uploads an image via a web application. The following components and their interactions are represented:

1. User Interaction

The user interacts with a web application. The web application initiates the process by sending the image upload request.

2. API Gateway

The API Gateway acts as the entry point for all incoming requests. It receives the upload request from the web application.

Cloud Storage (e.g., Amazon S3):

The API Gateway, upon receiving the upload request, triggers a serverless function, which then stores the uploaded image into a cloud storage service like Amazon S3. The storage service provides scalable object storage.

Function-as-a-Service (FaaS) (e.g., AWS Lambda):

A serverless function, such as an AWS Lambda function, is triggered by the image upload event in S3. This function performs image processing tasks. These tasks might include resizing, format conversion, and watermarking. The FaaS platform automatically scales the function instances as needed.

Database (e.g., Amazon DynamoDB):

After processing the image, the serverless function stores metadata about the processed image (e.g., file name, size, processing status) in a database, such as Amazon DynamoDB. This database provides fast and scalable data storage.

Monitoring and Logging (e.g., AWS CloudWatch):

The system utilizes monitoring and logging services, such as AWS CloudWatch, to collect metrics and logs from all components. This data is used for performance monitoring, troubleshooting, and alerting.

This architecture demonstrates the core principles of serverless-first development: event-driven execution, automatic scaling, and pay-per-use pricing. The use of managed services reduces operational overhead and allows developers to focus on application logic. This architecture is a common pattern for handling various tasks, such as file processing, data transformation, and API backends, enabling scalability and cost efficiency.

Benefits of Serverless-First for Businesses

Serverless-first development offers significant advantages for businesses, transforming how applications are built, deployed, and managed. This approach allows organizations to be more agile, reduce operational costs, and accelerate time-to-market, leading to a more competitive and efficient business model. The benefits are multi-faceted, impacting various aspects of the business, from development cycles to resource allocation.

Business Agility and Time-to-Market

Serverless-first architectures inherently promote agility, allowing businesses to respond rapidly to market changes and customer demands. This is achieved through faster development cycles and simplified deployment processes. The ability to iterate quickly is crucial in today’s dynamic business environment.

- Rapid Development and Deployment: Serverless platforms eliminate the need to manage servers, allowing developers to focus solely on writing code. This accelerates the development process significantly. Deployment is often as simple as uploading code, and the platform handles scaling and infrastructure management. For example, a study by the Serverless Framework revealed that teams using serverless can deploy new features up to 50% faster than teams using traditional methods.

- Scalability and Elasticity: Serverless applications automatically scale based on demand. This eliminates the need to provision and manage infrastructure capacity manually. Businesses can handle traffic spikes and fluctuating workloads without worrying about over-provisioning or performance bottlenecks. This dynamic scalability is particularly beneficial for applications with unpredictable user traffic, such as e-commerce sites during peak seasons or applications that respond to real-time events.

- Faster Iteration Cycles: The streamlined development and deployment processes inherent in serverless architectures enable faster iteration cycles. Developers can quickly test new features, gather user feedback, and deploy updates without significant downtime. This rapid feedback loop fosters continuous improvement and allows businesses to adapt to changing market needs more effectively.

Use Cases Providing Significant Advantages

Serverless-first development is particularly advantageous in specific use cases where its core strengths – scalability, cost-efficiency, and agility – are most impactful. Several applications are ideally suited for this architectural approach.

- Web and Mobile Backends: Serverless functions are perfectly suited for building the backend of web and mobile applications. They can handle API requests, process data, and interact with databases without requiring any server management. Platforms like AWS Lambda, Azure Functions, and Google Cloud Functions provide the infrastructure needed. For instance, a mobile application can use serverless functions to handle user authentication, data storage, and push notifications.

- Real-Time Data Processing: Serverless architectures excel at processing real-time data streams from various sources, such as IoT devices, social media feeds, and financial transactions. Serverless functions can be triggered by data events, allowing for immediate processing and analysis. For example, a smart home system can use serverless functions to analyze sensor data and trigger actions, such as adjusting the thermostat or turning on lights.

- Event-Driven Applications: Serverless platforms are designed to handle event-driven architectures. This means that applications can be built to react to events in real-time, such as user actions, database updates, or scheduled tasks. Serverless functions can be triggered by events, allowing for asynchronous processing and improved application responsiveness. For example, an e-commerce site can use serverless functions to send order confirmation emails, update inventory levels, and process payments.

- Chatbots and Conversational Interfaces: Serverless functions can be used to build scalable and cost-effective chatbots and conversational interfaces. These functions can handle user interactions, process natural language, and integrate with external services. For example, a customer service chatbot can use serverless functions to answer customer questions, provide support, and escalate issues to human agents.

- Image and Video Processing: Serverless functions can be used to perform various image and video processing tasks, such as resizing images, generating thumbnails, and transcoding videos. This can be particularly useful for content-rich applications and media platforms. A social media platform can use serverless functions to automatically resize and optimize images uploaded by users.

Reducing Operational Overhead

Serverless-first architectures significantly reduce operational overhead, allowing businesses to focus on their core competencies rather than managing infrastructure. This leads to lower operational costs and improved efficiency.

- Reduced Infrastructure Management: Serverless platforms abstract away the complexities of server management, including provisioning, scaling, and patching. This frees up IT staff to focus on more strategic tasks, such as developing new features and improving application performance. This reduced operational burden can translate into significant cost savings, as businesses no longer need to hire and train specialized staff to manage servers.

- Automated Scaling and Resource Allocation: Serverless platforms automatically scale resources based on demand, eliminating the need for manual scaling and capacity planning. This ensures that applications can handle peak loads without performance degradation and prevents over-provisioning, which can lead to unnecessary costs. This automated scaling capability is particularly beneficial for applications with fluctuating workloads.

- Cost Optimization: Serverless pricing models are typically based on pay-per-use, meaning that businesses only pay for the resources they consume. This can lead to significant cost savings compared to traditional infrastructure-as-a-service (IaaS) models, where businesses pay for provisioned resources regardless of usage. For example, a business that only uses its application during peak hours can significantly reduce its infrastructure costs by using serverless functions that are only active when needed.

- Simplified Monitoring and Maintenance: Serverless platforms often provide built-in monitoring and logging capabilities, making it easier to track application performance and identify issues. Maintenance tasks, such as security patching and software updates, are typically handled by the platform provider. This reduces the time and effort required to maintain applications, freeing up developers to focus on innovation.

Common Serverless Technologies and Platforms

Serverless-first development relies on a suite of technologies and platforms that enable developers to build and deploy applications without managing servers. The choice of platform and technologies has a significant impact on the architecture, cost, and performance of serverless applications. Understanding the landscape of available options is crucial for making informed decisions.

Popular Serverless Platforms and Technologies

The serverless ecosystem offers a variety of platforms and technologies. These platforms typically provide a range of services, including compute, storage, databases, and API management, all designed to be used in a serverless manner.

- AWS Lambda: Amazon Web Services (AWS) Lambda is a compute service that lets you run code without provisioning or managing servers. It executes code in response to events and automatically manages the underlying compute resources. AWS Lambda supports multiple programming languages, including Node.js, Python, Java, Go, and C#.

- AWS API Gateway: Another critical AWS service is the API Gateway, which allows developers to create, publish, maintain, monitor, and secure APIs at any scale. It supports RESTful APIs, WebSocket APIs, and HTTP APIs, making it a versatile solution for serverless application backends.

- Azure Functions: Microsoft Azure Functions is a serverless compute service that enables developers to run event-triggered code without managing infrastructure. It supports multiple programming languages and provides features such as triggers, bindings, and integrations with other Azure services.

- Azure API Management: Azure API Management offers a platform for publishing, managing, and analyzing APIs in the cloud. It allows developers to secure, scale, and monitor APIs, providing a comprehensive solution for API lifecycle management.

- Google Cloud Functions: Google Cloud Functions is a serverless execution environment for building and connecting cloud services. It allows developers to write code in response to events and automatically scales to handle the workload. It integrates seamlessly with other Google Cloud services.

- Google Cloud API Gateway: Google Cloud API Gateway is a fully managed service that helps developers create, secure, and manage APIs at scale. It supports various API types, including RESTful APIs and gRPC APIs.

- Serverless Framework: The Serverless Framework is an open-source framework for building serverless applications. It simplifies the deployment and management of serverless functions and resources across different cloud providers.

Strengths and Weaknesses of Different Serverless Providers

Each serverless provider has its strengths and weaknesses, which developers should consider when choosing a platform. The best choice depends on the specific requirements of the application, the existing infrastructure, and the team’s expertise.

- AWS:

- Strengths: Mature platform with a wide range of services, extensive documentation, large community support, and global infrastructure.

- Weaknesses: Can be complex to navigate due to the vast number of services and can be expensive if not managed carefully.

- Azure:

- Strengths: Strong integration with other Microsoft products, robust enterprise features, and competitive pricing.

- Weaknesses: Smaller community compared to AWS, and some services may not be as mature.

- Google Cloud:

- Strengths: Innovative technologies, strong focus on data analytics and machine learning, and competitive pricing.

- Weaknesses: Smaller market share compared to AWS and Azure, and the user interface can be less intuitive for some users.

The Role of API Gateways in Serverless-First Applications

API gateways are essential components in serverless-first applications, acting as a central point of entry for all API requests. They provide a range of functionalities that enhance the security, scalability, and manageability of serverless backends.

- Request Routing: API gateways route incoming requests to the appropriate serverless functions based on the API endpoint, HTTP method, and other criteria.

- Authentication and Authorization: They handle user authentication and authorization, ensuring that only authorized users can access the APIs. API gateways often integrate with identity providers such as OAuth 2.0 and OpenID Connect.

- Rate Limiting and Throttling: API gateways protect serverless functions from overload by implementing rate limiting and throttling policies. This prevents denial-of-service attacks and ensures fair usage of resources.

- Request Transformation: They can transform incoming requests before forwarding them to the backend functions. This may involve modifying headers, converting data formats, or enriching the request with additional information.

- Response Transformation: Similarly, API gateways can transform responses from the backend functions before sending them to the client. This may involve formatting the response, masking sensitive data, or aggregating data from multiple functions.

- Monitoring and Logging: API gateways provide monitoring and logging capabilities, allowing developers to track API usage, identify performance bottlenecks, and troubleshoot issues. They often integrate with monitoring tools like CloudWatch (AWS) or Azure Monitor.

- Caching: API gateways can cache responses from backend functions to improve performance and reduce the load on the serverless infrastructure.

Serverless-First Development Lifecycle

Serverless-first development necessitates a distinct lifecycle compared to traditional development approaches. This lifecycle emphasizes rapid iteration, automated deployments, and efficient resource utilization. It’s a shift towards a more agile and scalable development methodology, leveraging the inherent benefits of serverless architectures.

Typical Development Lifecycle for Serverless-First Projects

The development lifecycle for serverless-first projects typically follows an iterative process, prioritizing continuous integration and continuous deployment (CI/CD). This approach facilitates faster development cycles and quicker time-to-market. The steps involved, though adaptable, generally encompass the following phases:

- Planning and Design: This initial phase involves defining the application’s requirements, identifying the appropriate serverless services (e.g., functions, databases, API gateways), and designing the overall architecture. Careful consideration is given to event triggers, data flow, and security considerations.

- Development and Coding: Developers write the code for serverless functions, API endpoints, and any necessary infrastructure-as-code (IaC) configurations. The focus is on writing small, independent functions that perform specific tasks.

- Testing: Rigorous testing is crucial. This includes unit tests for individual functions, integration tests to verify interactions between functions and services, and end-to-end tests to validate the complete application flow. Testing is automated as much as possible.

- Deployment: Code and infrastructure configurations are deployed to the serverless platform. This process is automated through CI/CD pipelines.

- Monitoring and Observability: Continuous monitoring of the application’s performance, health, and resource utilization is essential. Metrics are collected, logs are analyzed, and alerts are configured to proactively identify and address issues.

- Iteration and Optimization: Based on monitoring data and user feedback, the application is continuously iterated upon. Code is updated, functions are optimized, and the architecture is refined to improve performance, scalability, and cost efficiency.

Steps Involved in Deploying a Serverless Function

Deploying a serverless function is a streamlined process, typically automated through CI/CD pipelines. The exact steps may vary slightly depending on the serverless platform and chosen tools, but generally involve these core actions:

- Code Compilation and Packaging: The function’s source code, along with any dependencies, is compiled and packaged into a deployment artifact (e.g., a ZIP file).

- Infrastructure Configuration: Infrastructure-as-code (IaC) tools, such as AWS CloudFormation, Terraform, or Serverless Framework, are used to define the necessary infrastructure resources (e.g., API Gateway endpoints, database connections, event triggers).

- Deployment to the Serverless Platform: The deployment artifact and infrastructure configuration are uploaded to the serverless platform (e.g., AWS Lambda, Azure Functions, Google Cloud Functions). The platform handles the provisioning, scaling, and management of the function.

- Configuration and Environment Variables: Environment variables, secrets, and other configuration settings are configured for the function. This ensures the function can access the necessary resources and operate correctly.

- Testing and Validation: After deployment, the function is tested to ensure it’s functioning as expected. This includes triggering the function, verifying its output, and checking logs for any errors.

- Rollback and Versioning: Serverless platforms often provide features for rolling back to previous versions of the function in case of deployment failures or issues. Versioning allows for managing multiple deployments and facilitates the ability to revert to a known-good state.

CI/CD Pipeline for a Serverless Application

A CI/CD pipeline automates the build, test, and deployment processes, enabling rapid and reliable releases. The following flowchart depicts a typical CI/CD pipeline for a serverless application:

Flowchart Description: The flowchart Artikels the steps involved in a CI/CD pipeline for a serverless application. The process begins with a code commit, triggering the pipeline. The pipeline then proceeds through the following stages:

1. Source Code Repository: Code is committed to a source code repository (e.g., Git). This triggers the CI/CD pipeline.

2. Build Stage:

- Code Compilation: The code is compiled.

- Dependency Management: Dependencies are installed.

- Packaging: The code and dependencies are packaged.

3. Test Stage:

- Unit Tests: Unit tests are executed.

- Integration Tests: Integration tests are performed.

- Code Analysis: Code analysis tools are used to check code quality and style.

4. Deploy Stage:

- Infrastructure Provisioning: Infrastructure-as-code (IaC) tools provision the necessary infrastructure.

- Deployment: The application is deployed to the serverless platform.

- Configuration: Application configuration is performed.

5. Monitoring Stage:

- Health Checks: Health checks are performed to monitor application health.

- Performance Monitoring: Application performance is monitored.

- Logging: Logs are collected and analyzed.

6. Feedback Loop: Feedback from monitoring is used to improve the code. This process continuously refines the application.

The CI/CD pipeline enables automated testing and deployment, accelerating the development process and increasing the reliability of serverless applications. A successful build and test sequence triggers the deployment phase. Any failure at any stage halts the pipeline and requires investigation and correction. This continuous feedback loop is crucial for agile development in serverless environments.

Serverless-First Design Patterns

Serverless-first development leverages established design patterns to build scalable, resilient, and cost-effective applications. These patterns guide developers in structuring their applications to best utilize the capabilities of serverless platforms, optimizing for event-driven architectures, and managing data efficiently. Understanding these patterns is crucial for effectively implementing serverless solutions.

Common Serverless-First Design Patterns

Several design patterns are frequently employed in serverless-first development to address various architectural challenges. These patterns facilitate the creation of robust and efficient serverless applications.

- Event-Driven Architecture: This pattern forms the foundation of many serverless applications, enabling loose coupling and asynchronous communication between components.

- API Gateway Pattern: This pattern involves using an API gateway to manage API access, security, and routing, providing a single entry point for client applications.

- Function Chaining: This pattern orchestrates the execution of multiple serverless functions, where the output of one function triggers the next, forming a workflow.

- Fan-Out/Fan-In: The fan-out pattern distributes a single event to multiple consumers, while the fan-in pattern aggregates results from multiple sources.

- CQRS (Command Query Responsibility Segregation): This pattern separates read and write operations, often used with serverless databases to optimize performance and scalability.

- Circuit Breaker: This pattern prevents cascading failures by monitoring the health of external services and temporarily halting requests if a service becomes unavailable.

Event-Driven Architecture Pattern

The event-driven architecture (EDA) pattern is a cornerstone of serverless-first development, enabling applications to react to events in real-time. This architecture promotes loose coupling, scalability, and fault tolerance.

In an EDA, components communicate through events, which are significant occurrences within the system. These events can be anything from user actions to system-generated signals. Events are typically published to an event bus, such as AWS EventBridge or Azure Event Grid, and then consumed by interested subscribers (serverless functions).

The benefits of using EDA in serverless-first applications are numerous:

- Scalability: Individual functions can scale independently based on event volume.

- Resilience: Failures in one component do not necessarily affect other components.

- Loose Coupling: Components are decoupled, making it easier to maintain and update the system.

- Real-time Processing: Events are processed as they occur, enabling real-time applications.

Example: Consider an e-commerce application. When a new order is placed, an “OrderPlaced” event is published. This event might trigger several serverless functions: one to send a confirmation email, another to update inventory, and a third to initiate the shipping process. Each function operates independently, reacting to the event and performing its specific task. If the email function fails, the inventory update and shipping functions can continue to operate without interruption.

Considerations for Data Storage in Serverless-First Applications

Data storage is a critical aspect of serverless-first applications, and the choice of data storage solutions significantly impacts performance, scalability, and cost. Serverless architectures often leverage various data storage options, each with its strengths and weaknesses.

Selecting the appropriate data storage solution involves considering factors such as data type, access patterns, read/write requirements, and cost optimization. The goal is to choose a solution that efficiently stores and retrieves data while minimizing operational overhead.

- Serverless Databases: Serverless databases, such as Amazon DynamoDB, Google Cloud Firestore, and Azure Cosmos DB, are designed to scale automatically and provide pay-per-use pricing. They are well-suited for applications with variable workloads. DynamoDB, for example, excels at handling high-volume, low-latency read/write operations.

- Object Storage: Object storage services, like Amazon S3, Google Cloud Storage, and Azure Blob Storage, are used for storing large amounts of unstructured data, such as images, videos, and documents. They offer high durability and scalability.

- Relational Databases: Serverless relational database options, like Amazon Aurora Serverless, provide a fully managed relational database experience that scales automatically. They are appropriate when a structured database with SQL support is required.

- Data Caching: Caching mechanisms, such as Amazon ElastiCache or Redis, can improve application performance by storing frequently accessed data in memory, reducing the load on the underlying data stores.

Data Access Patterns: Data access patterns significantly influence the choice of data storage. For example, an application that requires frequent reads and writes to small data items may benefit from a NoSQL database like DynamoDB. Conversely, an application requiring complex queries and relational data may be better suited to a serverless relational database.

Data Consistency: Achieving data consistency is vital. Different consistency models, such as eventual consistency and strong consistency, have trade-offs between performance and data accuracy. Developers must carefully consider the appropriate consistency model for their specific application needs.

Example: An image-sharing application might store user profile data in a NoSQL database like DynamoDB for fast access. Images and videos would be stored in object storage (e.g., S3), and thumbnails could be generated using serverless functions. This approach allows for scalable storage, efficient retrieval, and cost optimization.

Security Considerations in Serverless-First

Serverless-first architectures, while offering significant advantages in scalability and cost-efficiency, introduce unique security challenges that demand a proactive and comprehensive approach. The distributed nature of serverless applications, the reliance on third-party services, and the inherent lack of direct control over underlying infrastructure necessitate a shift in security paradigms. This section delves into the specific security considerations critical for serverless-first deployments, emphasizing best practices, responsibility allocation, and practical implementation strategies.

Security Best Practices for Serverless-First Applications

Adopting robust security practices is paramount in serverless-first environments. These practices encompass various aspects, from code development to infrastructure configuration, to mitigate potential vulnerabilities and ensure data integrity. Implementing these practices proactively significantly reduces the attack surface and enhances the overall security posture.

- Least Privilege Principle: Grant functions only the necessary permissions to access resources. Avoid broad, unrestricted access rights. This limits the impact of a compromised function. For example, a function that only needs to read data from a database should not have write or delete permissions.

- Input Validation and Sanitization: Validate and sanitize all user inputs to prevent injection attacks (e.g., SQL injection, cross-site scripting). This involves verifying the data type, length, and format of the input before processing it. For example, if a user enters a phone number, ensure it conforms to the expected format using regular expressions.

- Secure Code Development: Employ secure coding practices, including regular code reviews, static analysis, and dependency management. Regularly update dependencies to patch known vulnerabilities. Using a code analysis tool to detect vulnerabilities before deployment is a crucial step.

- Secrets Management: Store sensitive information (API keys, database credentials) securely. Avoid hardcoding secrets in the code. Utilize dedicated secrets management services (e.g., AWS Secrets Manager, Azure Key Vault, Google Cloud Secret Manager) to store and manage secrets with proper access controls and rotation policies.

- Monitoring and Logging: Implement comprehensive monitoring and logging to detect and respond to security incidents. Collect logs from all serverless components (functions, APIs, databases) and analyze them for suspicious activity. Utilize security information and event management (SIEM) systems to correlate and analyze logs from different sources.

- Regular Security Audits and Penetration Testing: Conduct regular security audits and penetration testing to identify and address vulnerabilities. This helps to proactively uncover weaknesses in the application and infrastructure. Penetration testing should simulate real-world attacks to assess the effectiveness of security controls.

- Infrastructure as Code (IaC): Define infrastructure configurations as code (e.g., using Terraform, CloudFormation). This promotes consistency, version control, and automation, reducing the risk of misconfigurations. IaC allows for repeatable deployments and simplifies security audits.

- API Security: Secure APIs using authentication, authorization, and rate limiting. Implement API gateways (e.g., AWS API Gateway, Azure API Management, Google Cloud API Gateway) to manage and secure API traffic. This can include features like request validation and traffic throttling to protect against denial-of-service attacks.

Comparing and Contrasting Security Responsibilities in Serverless vs. Traditional Development

The security responsibilities in serverless development differ significantly from those in traditional, infrastructure-managed environments. While traditional development places a greater emphasis on infrastructure-level security, serverless shifts the focus towards code, configuration, and the security of managed services. Understanding these differences is crucial for effectively allocating security tasks and responsibilities.

| Aspect | Traditional Development | Serverless Development |

|---|---|---|

| Infrastructure Security | High responsibility (e.g., patching servers, network security, operating system hardening) | Shared responsibility (provider manages infrastructure security; focus on configuration and function security) |

| Application Security | High responsibility (e.g., code security, input validation, authentication/authorization) | High responsibility (emphasis on code security, API security, and integration with managed services) |

| Network Security | High responsibility (firewalls, intrusion detection/prevention systems) | Lower responsibility (network security managed by the provider; focus on API security) |

| Data Security | High responsibility (encryption, access controls, data backups) | Shared responsibility (provider manages underlying storage security; focus on data access controls and encryption within applications) |

| Monitoring and Logging | High responsibility (setting up and maintaining monitoring and logging infrastructure) | Lower responsibility (provider provides monitoring and logging services; focus on configuring and analyzing logs) |

| Patching | High responsibility (patching operating systems, middleware, and applications) | Lower responsibility (provider handles patching of underlying infrastructure; focus on dependency management and application updates) |

The shared responsibility model highlights the importance of understanding the division of labor between the cloud provider and the application developer. While the provider handles infrastructure security, developers are responsible for securing their code, configuring services securely, and implementing robust authentication and authorization mechanisms.

Implementing Authentication and Authorization in a Serverless Environment

Authentication and authorization are fundamental security components in serverless applications, ensuring that only authorized users and applications can access protected resources. The implementation strategies in serverless environments leverage managed services and specialized techniques to provide secure and scalable access control.

- Authentication: The process of verifying the identity of a user or application. Serverless authentication typically involves:

- Identity Providers (IdPs): Utilize managed identity providers (e.g., Amazon Cognito, Azure Active Directory B2C, Google Identity Platform) to manage user identities, handle user registration, and provide authentication services. These services offer features like multi-factor authentication (MFA) and social login integration.

- API Gateways: Configure API gateways to handle authentication requests. The API gateway can integrate with IdPs to validate user credentials before routing requests to backend functions.

- JWT (JSON Web Tokens): Use JWTs to securely transmit user identity information. After successful authentication, the IdP issues a JWT, which the client includes in subsequent requests. The API gateway or backend functions can then validate the JWT to verify the user’s identity.

- Example: A mobile application authenticates a user using Amazon Cognito. Cognito issues a JWT after successful login. The mobile app then includes the JWT in the Authorization header of each API request to an API Gateway. The API Gateway validates the JWT using Cognito’s public keys before routing the request to the backend function.

- Authorization: The process of determining what a user or application is allowed to access. Serverless authorization strategies include:

- Role-Based Access Control (RBAC): Assign users to roles, and grant permissions to roles rather than individual users. This simplifies permission management and ensures consistency. For instance, create roles like “administrator,” “editor,” and “viewer” and assign appropriate permissions to each role.

- Attribute-Based Access Control (ABAC): Define access control rules based on attributes of users, resources, and the environment. ABAC provides fine-grained control and allows for complex access policies.

- API Gateway Authorization: Configure API gateways to perform authorization checks based on user roles or attributes. The API gateway can use custom authorizers or built-in authorization features to control access to API endpoints.

- Backend Function Authorization: Implement authorization logic within backend functions to enforce access control at the resource level. This can involve checking user permissions against a database or authorization service.

- Example: An API Gateway uses a custom authorizer function that validates the user’s JWT and retrieves their role from a database. Based on the role, the authorizer function determines whether the user has permission to access the requested API endpoint. If the user is an administrator, the function allows access; otherwise, it denies access.

By carefully implementing these authentication and authorization strategies, developers can build secure serverless applications that protect sensitive data and resources while maintaining scalability and ease of management.

Monitoring and Observability in Serverless-First

Monitoring and observability are crucial in serverless-first applications, as the ephemeral nature and distributed architecture of serverless environments present unique challenges to understanding system behavior. Unlike traditional monolithic applications, serverless applications often consist of numerous, independently deployed functions that interact with various services. This necessitates a robust monitoring strategy to track performance, identify issues, and ensure the overall health and reliability of the application.

Observability, encompassing monitoring, logging, and tracing, provides the necessary insights to diagnose problems quickly and efficiently, enabling proactive optimization and informed decision-making.

Importance of Monitoring and Observability

The dynamic and distributed nature of serverless architectures amplifies the significance of effective monitoring and observability. Serverless functions execute on-demand, scaling automatically based on incoming traffic. This elasticity introduces complexities in tracking performance metrics and identifying bottlenecks. Observability provides the necessary tools to understand the behavior of individual functions and the interactions between them, ensuring the application operates as intended.* Rapid Issue Identification: Real-time monitoring and comprehensive logging enable quick detection of errors, performance degradations, and security vulnerabilities.

This allows for prompt intervention and minimizes downtime.

Performance Optimization

Detailed metrics on function execution time, resource utilization, and error rates provide insights into performance bottlenecks. This data informs optimization efforts, such as code refactoring, resource allocation adjustments, and infrastructure scaling.

Cost Management

Monitoring resource consumption, including function invocations, memory usage, and data transfer, helps optimize costs. Analyzing these metrics allows for identifying inefficient code or unnecessary resource allocation, leading to cost savings.

Improved User Experience

By proactively monitoring application performance and identifying potential issues, developers can ensure a smooth and responsive user experience. Rapidly addressing performance problems and errors enhances user satisfaction.

Compliance and Security

Monitoring and logging are critical for meeting compliance requirements and ensuring the security of serverless applications. Auditing logs, tracking user activity, and detecting suspicious behavior are essential for maintaining a secure environment.

Examples of Monitoring Tools for Serverless Environments

Several tools are specifically designed or well-suited for monitoring serverless environments. These tools offer features such as automated instrumentation, real-time dashboards, alerting, and distributed tracing, providing comprehensive visibility into application behavior. The choice of tools depends on the specific cloud provider, application requirements, and team preferences.* Cloud Provider Native Tools:

AWS CloudWatch (AWS)

CloudWatch provides a comprehensive suite of monitoring and logging capabilities for AWS services, including Lambda functions, API Gateway, and DynamoDB. It offers metrics, logs, and dashboards for visualizing application performance and health. CloudWatch also supports custom metrics, enabling developers to track application-specific data.

Azure Monitor (Azure)

Azure Monitor provides monitoring and logging services for Azure resources, including Azure Functions, API Management, and Cosmos DB. It offers a unified view of application performance, health, and availability, along with alerting and diagnostic capabilities.

Google Cloud Monitoring (GCP)

Google Cloud Monitoring, formerly Stackdriver, provides monitoring and logging for Google Cloud services, including Cloud Functions, Cloud Run, and Cloud Storage. It offers real-time metrics, logs, dashboards, and alerting for comprehensive observability.* Third-Party Tools:

Datadog

Datadog offers a unified monitoring and analytics platform that integrates with various cloud providers and serverless technologies. It provides real-time dashboards, alerting, distributed tracing, and log management capabilities for serverless applications.

New Relic

New Relic provides application performance monitoring (APM) and observability solutions, including support for serverless functions. It offers distributed tracing, real-time dashboards, and alerting for identifying and resolving performance issues.

Honeycomb

Honeycomb is a observability platform focused on providing real-time insights into distributed systems. It is well-suited for serverless applications due to its emphasis on distributed tracing and detailed event analysis.

Log Structure Design for a Serverless Function

Designing a well-structured log format is essential for effective monitoring and troubleshooting in serverless environments. The log structure should include relevant data points that provide context and facilitate analysis. This structured approach enables efficient searching, filtering, and aggregation of log data, allowing developers to quickly identify and resolve issues.* Required Data Points:

Timestamp

The exact time the log event occurred, using a standardized format (e.g., ISO 8601). This is crucial for correlating events and analyzing timelines.

Log Level

Indicates the severity of the log event (e.g., `DEBUG`, `INFO`, `WARN`, `ERROR`). This helps prioritize issues and filter logs based on their importance.

Request ID

A unique identifier associated with each request, allowing for tracing the entire request flow across multiple functions and services. This is essential for distributed tracing.

Function Name

The name of the serverless function that generated the log event. This allows for filtering logs by function.

Invocation ID

A unique identifier for each function invocation, allowing to trace specific execution instances.

Correlation ID

Enables linking related log entries, even across different services or functions, facilitating debugging complex scenarios.

Message

A human-readable description of the log event. This should provide context and details about what happened.

Error Details (for error logs)

Include the error message, stack trace, and any relevant context, such as the input parameters that caused the error.* Optional Data Points:

User ID

The identifier of the user associated with the request, if applicable. This is useful for user-specific troubleshooting.

Client IP Address

The IP address of the client making the request. This can be helpful for security and troubleshooting.

HTTP Status Code

The HTTP status code returned by the function (e.g., 200 OK, 400 Bad Request, 500 Internal Server Error).

Execution Time

The time it took for the function to execute, measured in milliseconds.

Memory Usage

The amount of memory used by the function during execution.

Resource Consumption Metrics

Metrics related to the resources consumed by the function, such as database queries, API calls, or external service interactions.* Log Format Examples:

JSON

A common and versatile format that allows for structured logging and easy parsing. “`json “timestamp”: “2024-07-26T10:00:00.000Z”, “logLevel”: “INFO”, “requestId”: “abc123def456”, “functionName”: “myFunction”, “invocationId”: “inv-12345”, “correlationId”: “corr-7890”, “message”: “Successfully processed request”, “userId”: “user123”, “executionTime”: 150 “`

Structured Text

A format where key-value pairs are separated by delimiters. “`text [2024-07-26T10:00:00.000Z] INFO [abc123def456] myFunction: Successfully processed request. userId=user123, executionTime=150ms “`

Log Aggregation

Log aggregation tools can process the logs and extract the information, such as average execution time or error rates. This information can then be displayed on dashboards.

Serverless-First Cost Optimization

Serverless-first architectures, while offering significant benefits in scalability and agility, necessitate careful cost management to ensure economic viability. The pay-per-use model inherent in serverless deployments demands a proactive approach to optimization. Effective cost control involves a multi-faceted strategy, encompassing function design, resource allocation, and vigilant monitoring.

Strategies for Optimizing Costs in Serverless-First Environments

Optimizing costs in serverless environments requires a proactive and continuous approach. Several strategies can be employed to minimize expenses without sacrificing performance or functionality.

- Right-Sizing Resources: This involves carefully assessing the resource requirements of each serverless function. It means configuring memory allocation, execution time limits, and other parameters to match the actual workload. Over-provisioning leads to unnecessary costs, while under-provisioning can result in performance degradation.

- Efficient Code Optimization: The efficiency of the code directly impacts resource consumption. Optimizing code for speed and memory usage can reduce the execution time of functions, thereby lowering costs. This includes techniques such as minimizing dependencies, using efficient algorithms, and optimizing data structures.

- Leveraging Managed Services: Utilizing managed services offered by cloud providers can reduce operational overhead and potentially lower costs. For example, using a managed database service like Amazon DynamoDB or Google Cloud Firestore can eliminate the need to manage the underlying infrastructure, reducing both operational costs and the potential for over-provisioning.

- Monitoring and Alerting: Implementing robust monitoring and alerting systems is crucial for identifying and addressing cost anomalies. Monitoring function invocations, execution times, and resource consumption allows for early detection of inefficiencies or unexpected spikes in usage. Alerts can be configured to notify administrators of potential cost overruns, enabling timely intervention.

- Implementing Cost Allocation Tags: Tagging resources with appropriate cost allocation tags enables granular cost tracking. This allows for detailed analysis of spending by project, team, or function, making it easier to identify areas where costs can be optimized. This also helps to attribute costs to specific business units or initiatives.

- Using Reserved Instances or Committed Use Discounts (where applicable): While serverless architectures are primarily based on pay-per-use models, some cloud providers offer discounts for committing to a certain level of resource usage. If usage patterns are predictable, leveraging these options can further reduce costs. However, the benefits must be weighed against the potential risks of underutilization if the workload fluctuates.

- Automating Scaling: Employing automated scaling mechanisms is essential. Serverless platforms automatically scale functions based on demand, but it’s crucial to configure scaling policies effectively. Setting appropriate concurrency limits and scaling triggers ensures resources are allocated efficiently, preventing over-provisioning during periods of low activity and ensuring sufficient capacity during peak loads.

Tips for Right-Sizing Serverless Functions

Right-sizing serverless functions is critical for cost efficiency. The goal is to allocate the minimum resources necessary to meet performance requirements. This requires a thorough understanding of function behavior and workload characteristics.

- Analyze Function Metrics: Regularly monitor key metrics, including memory usage, execution time, and the number of invocations. Cloud provider dashboards offer tools for visualizing these metrics. Identify functions that consistently exceed allocated resources or exhibit inefficient performance.

- Experiment with Memory Allocation: Experiment with different memory configurations for each function. Start with a lower allocation and gradually increase it until performance goals are met. Monitor the impact on execution time and cost. Observe how the function behaves under different memory constraints.

- Optimize Execution Time: Identify and address any performance bottlenecks in the function code. This may involve optimizing algorithms, reducing dependencies, or improving data access patterns. Shorter execution times directly translate to lower costs.

- Use Provisioned Concurrency (if applicable): Some cloud providers offer provisioned concurrency, allowing you to pre-warm function instances to reduce cold start times. This can be particularly beneficial for latency-sensitive applications. However, it also incurs a cost, so carefully consider the trade-off between performance and cost.

- Test Under Realistic Load: Conduct thorough testing under realistic load conditions to simulate actual usage patterns. This helps to identify potential performance issues and ensure that the function can handle peak loads without exceeding resource limits. Consider using load testing tools to simulate concurrent requests.

- Review Regularly: Regularly review function configurations and resource allocations. Workloads and application requirements can change over time, so it’s essential to revisit these settings periodically to ensure they remain optimal. Automated processes can be implemented to flag anomalies or suggest optimization opportunities.

Cost Comparison Table: Serverless Database Service

The following table provides a simplified cost comparison for a hypothetical serverless database service across three major cloud providers. This table is for illustrative purposes only, and actual costs will vary based on region, usage patterns, and specific service configurations. Pricing is subject to change. The example service is a key-value store with moderate read/write activity.

| Cloud Provider | Service | Monthly Cost (Estimated) | Key Cost Drivers |

|---|---|---|---|

| Amazon Web Services (AWS) | Amazon DynamoDB | $50 – $200 (Varies with Provisioned Throughput/On-Demand) | Read/Write Capacity Units (RCUs/WCUs), Data Storage, Data Transfer |

| Google Cloud Platform (GCP) | Cloud Firestore | $40 – $180 (Varies with usage) | Read/Write Operations, Data Storage, Network Egress |

| Microsoft Azure | Azure Cosmos DB | $60 – $220 (Varies with Throughput) | Request Units (RUs), Data Storage, Data Transfer |

| Cloud Provider (Hypothetical) | Example Service | $30 – $150 (Varies with Provisioned Throughput) | Read/Write Capacity, Data Storage, Network Usage |

Note: This table presents a simplified comparison. Actual costs will vary based on specific usage patterns, data storage needs, and the selected service tier. It is crucial to consult the official pricing documentation for each cloud provider and to use cost estimation tools to determine the most cost-effective solution for a specific workload. The example service includes various factors such as storage capacity, read/write operations, and network transfer charges.

Challenges and Limitations of Serverless-First

Adopting a serverless-first approach, while offering significant advantages, also presents several challenges and limitations that must be carefully considered. These challenges can impact development, operations, and cost management, potentially making serverless-first unsuitable for certain use cases. A thorough understanding of these limitations is crucial for making informed decisions about architectural choices and mitigating potential risks.

Complexity and Debugging Difficulties

Debugging serverless applications can be more complex than debugging traditional monolithic or microservices architectures. Distributed nature and event-driven characteristics add to this complexity.

- Distributed Tracing Challenges: Serverless functions often interact with various services, making it difficult to trace requests and identify the root cause of errors. Tools like AWS X-Ray or Google Cloud Trace can help, but setup and configuration can be complex.

- Local Development and Testing: Emulating the serverless environment locally for testing can be challenging. Differences between local and cloud environments may lead to discrepancies. Tools like the Serverless Framework or AWS SAM can assist in local testing, but they may not perfectly replicate the production environment.

- Logging and Monitoring Overhead: Effective logging and monitoring are crucial for serverless applications. Setting up and managing these systems can be complex, requiring specialized tools and expertise. Identifying the specific function or service causing an issue can be time-consuming.

Vendor Lock-in Concerns

The reliance on specific cloud provider services can lead to vendor lock-in, making it difficult to migrate to another provider or self-host the application. This dependence can also impact pricing and feature availability.

- Service-Specific APIs and SDKs: Serverless platforms offer unique APIs and SDKs, tying the application to a specific vendor. Migrating to another provider requires significant code changes and refactoring.

- Proprietary Features: Cloud providers often offer proprietary serverless features that are not available on other platforms. Leveraging these features can accelerate development, but it also increases vendor lock-in.

- Cost Implications: Vendor lock-in can limit the ability to negotiate pricing and take advantage of competitive offers from other providers.

Cold Starts and Performance Considerations

Cold starts, the delay experienced when a serverless function is invoked for the first time or after a period of inactivity, can negatively impact performance, especially for latency-sensitive applications.

- Impact of Cold Starts: Cold starts can introduce significant latency, potentially impacting user experience. The duration of a cold start depends on several factors, including the function’s code size, the programming language, and the platform.

- Mitigation Strategies: Strategies to mitigate cold starts include pre-warming functions (keeping them active), optimizing code size, and choosing appropriate runtime environments. However, these strategies can add to operational overhead and cost.

- Use Case Suitability: Serverless-first may not be ideal for applications requiring low-latency responses, such as real-time gaming or high-frequency trading.

Cost Management and Optimization

While serverless can be cost-effective, managing costs requires careful monitoring and optimization. Unexpected spikes in function invocations or inefficient resource utilization can lead to higher-than-expected bills.

- Monitoring and Alerting: Implementing robust monitoring and alerting systems is essential to identify and address cost anomalies. This includes monitoring function invocation counts, execution times, and resource consumption.

- Resource Optimization: Optimizing function code, memory allocation, and other resources can help reduce costs. This requires a deep understanding of the serverless platform and its pricing model.

- Cost Analysis Tools: Utilizing cost analysis tools provided by cloud providers or third-party vendors can help identify cost drivers and areas for optimization.

Limited Control and Customization

Serverless platforms abstract away infrastructure management, offering less control over underlying resources. This limitation can be a disadvantage in certain scenarios.

- Infrastructure Control: Serverless platforms manage the infrastructure, limiting the ability to customize the underlying resources. This can be a limitation for applications requiring specific hardware configurations or low-level control.

- Debugging and Troubleshooting: Limited access to the underlying infrastructure can make debugging and troubleshooting more challenging. Diagnosing performance issues or resource bottlenecks may require reliance on vendor-provided tools.

- Compliance and Security: Meeting specific compliance requirements or implementing advanced security configurations can be more complex in a serverless environment.

Scenarios Where Serverless-First Might Not Be Optimal

Serverless-first is not a one-size-fits-all solution. Several scenarios may make traditional or microservices architectures a better choice.

- High-Performance Computing (HPC): Applications requiring significant computational resources and low-latency processing may not be well-suited for serverless.

- Stateful Applications: Serverless functions are generally stateless. Stateful applications that require persistent storage or in-memory caching may be more complex to implement in a serverless environment.

- Complex Workflows with Tight Integration: Applications with complex workflows and tight integration requirements may benefit from a more traditional architecture.

- Applications with Strict Security Requirements: Applications with highly stringent security requirements may require more granular control over the infrastructure, making serverless less desirable.

Mitigation Strategies for Vendor Lock-in

While complete avoidance of vendor lock-in is often impractical, several strategies can mitigate its impact.

- Abstraction Layers: Employing abstraction layers to isolate the application from vendor-specific APIs and services can facilitate easier migration. This involves creating custom interfaces or wrappers around vendor-specific functionality.

- Open-Source Alternatives: Utilizing open-source alternatives for common services, such as databases or message queues, can reduce reliance on vendor-specific offerings.

- Multi-Cloud Strategy: Designing the application to run on multiple cloud providers can provide flexibility and reduce vendor lock-in. This requires careful planning and consideration of cross-platform compatibility.

- Infrastructure as Code (IaC): Implementing IaC practices allows for defining and managing infrastructure resources in code, making it easier to replicate and migrate the application to another platform.

- Containerization: Containerizing serverless functions can provide a degree of portability and reduce vendor lock-in.

Final Wrap-Up

In conclusion, serverless-first development provides a compelling framework for modern application development. By embracing this approach, businesses can achieve faster time-to-market, enhanced scalability, and reduced operational costs. While challenges exist, the benefits of serverless-first are undeniable. As technology continues to evolve, the serverless-first methodology will likely become increasingly important in shaping the future of software development, and developers must be prepared for this shift.

User Queries

What is the primary advantage of serverless-first development?

The primary advantage is the elimination of server management, allowing developers to focus solely on writing and deploying code without worrying about infrastructure provisioning, scaling, or maintenance.

How does serverless-first impact development costs?

Serverless-first development can significantly reduce costs by providing a pay-per-use model. You only pay for the resources consumed, leading to cost savings compared to traditional models where resources are provisioned regardless of usage.

What are the potential drawbacks of serverless-first development?

Potential drawbacks include vendor lock-in, increased complexity in debugging, and the potential for increased latency due to cold starts. Also, limited control over underlying infrastructure can be a disadvantage.

Is serverless-first suitable for all types of applications?

No, serverless-first is not always the optimal choice. Applications with predictable workloads, high computational demands, or strict latency requirements might not benefit as much as applications with variable workloads and event-driven architectures.

How does serverless-first enhance business agility?

Serverless-first enhances business agility by enabling faster development cycles, quicker deployment times, and the ability to rapidly scale applications to meet changing demands. This leads to faster innovation and quicker time-to-market.