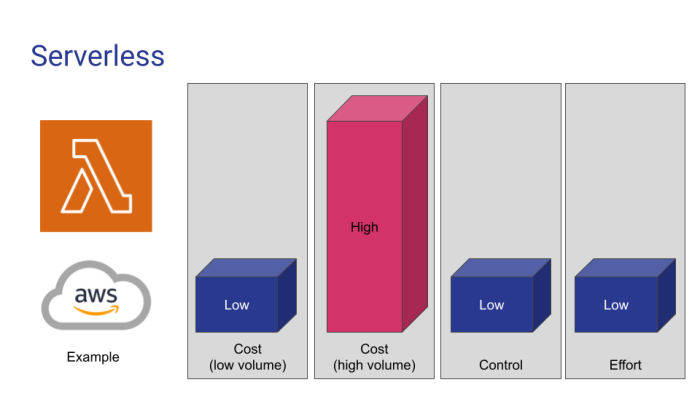

Serverless computing, while offering unparalleled scalability and cost efficiency, introduces complexities in the debugging process. Debugging serverless applications traditionally involved deploying code to the cloud, a time-consuming and often inefficient approach. The need for local debugging tools has thus become paramount, enabling developers to identify and resolve issues quickly within their development environments, mirroring the production environment as closely as possible.

This exploration delves into the critical tools and techniques that facilitate local debugging of serverless applications. We will examine CLI tools, IDE integrations, emulators, and mocking frameworks, providing a detailed understanding of their functionalities and practical applications. Furthermore, the discussion will extend to platform-specific debugging strategies, covering AWS Lambda, Azure Functions, and Google Cloud Functions, equipping developers with the knowledge to effectively debug across different serverless platforms.

Introduction to Serverless Local Debugging

Serverless local debugging is a critical practice for developers working with serverless architectures. It involves the ability to test and debug serverless functions and applications on a local development machine, mimicking the cloud environment as closely as possible. This approach significantly accelerates the development lifecycle and improves the quality of serverless applications.

Defining Serverless Architecture in the Context of Debugging

Serverless architecture, in the context of debugging, refers to a cloud computing execution model where the cloud provider dynamically manages the allocation of machine resources. Developers write and deploy code (functions) without managing servers. The debugging process, therefore, needs to adapt to this model, focusing on the function’s behavior and its interactions with cloud services, rather than the underlying infrastructure.

Benefits of Local Debugging over Cloud Debugging

Debugging serverless applications locally offers several advantages over debugging directly in the cloud. These benefits contribute to faster development cycles, improved code quality, and reduced costs.

- Faster Development Cycles: Local debugging allows developers to iterate and test code changes much faster. Deploying and testing in the cloud can take several minutes, whereas local debugging provides immediate feedback. This speed increase is particularly beneficial when addressing complex logic or intricate integrations. For example, a developer fixing a bug in a function that processes image uploads can rapidly test different scenarios locally, without repeatedly deploying the function to a cloud environment.

- Reduced Cloud Costs: Repeated deployments and testing in the cloud incur costs. Local debugging minimizes these expenses by allowing developers to identify and fix bugs before deploying to the cloud. By simulating cloud services locally, developers can reduce the number of cloud resources consumed during the development phase.

- Improved Debugging Experience: Local debugging tools often provide more comprehensive debugging capabilities. They offer features such as step-by-step execution, breakpoints, and the ability to inspect variables in real-time. This detailed visibility into the code’s execution flow is invaluable for identifying and resolving issues.

- Offline Development: Local debugging enables developers to work on serverless applications even without an active internet connection. This is particularly useful when traveling or working in areas with limited connectivity. The ability to debug offline enhances productivity and reduces dependence on a stable internet connection.

- Enhanced Security: Local debugging keeps sensitive data and code within the developer’s controlled environment. This reduces the risk of data breaches or unauthorized access, especially when dealing with confidential information or proprietary code. By performing debugging locally, developers can minimize the attack surface and maintain a higher level of security during the development process.

Essential Tools for Local Debugging

Debugging serverless functions locally is crucial for rapid development, testing, and ensuring code quality before deployment. The ability to simulate the serverless environment on a developer’s machine significantly accelerates the feedback loop, making it easier to identify and fix bugs. This section focuses on the command-line interface (CLI) tools that are essential for this process. These tools provide direct access to the underlying mechanisms of serverless function execution and allow developers to step through code, inspect variables, and monitor performance, all within a local context.

CLI Tools for Local Debugging

CLI tools offer a powerful and efficient way to debug serverless functions. They provide a direct interface to the underlying serverless platform’s functionalities, allowing developers to interact with their functions in a controlled environment. They also often integrate with Integrated Development Environments (IDEs) to provide a seamless debugging experience.

- Serverless Framework CLI: The Serverless Framework CLI is a comprehensive tool for deploying and managing serverless applications. It simplifies the development process by automating tasks such as packaging code, configuring infrastructure, and deploying to various cloud providers.

- Functionality: The Serverless Framework CLI facilitates local function invocation and debugging. It allows developers to test their functions locally by simulating the cloud environment. Developers can trigger functions with specific events, inspect the function’s execution, and view logs. Each `serverless invoke local` run loads the current code, so changes take effect on the next invocation; a long-running local server that picks up code changes is usually provided by community plugins such as serverless-offline.

- Setup and Usage: To use the Serverless Framework CLI for local debugging, developers typically install the framework globally using npm: `npm install -g serverless`. They then define their serverless functions and configurations in a `serverless.yml` file. To invoke a function locally, developers can use the `serverless invoke local` command, specifying the function name and any event data. For example: `serverless invoke local -f myFunction -p event.json`. The `-f` flag specifies the function name, and the `-p` flag provides the path to an event payload.

- AWS SAM CLI (Serverless Application Model CLI): The AWS SAM CLI is a tool specifically designed for developing and testing serverless applications on AWS. It leverages the AWS SAM specification, a simplified way to define serverless resources.

- Functionality: The AWS SAM CLI provides robust local testing capabilities. It runs functions in Docker containers that emulate the AWS Lambda execution environment and can host a local HTTP endpoint that emulates API Gateway. For other event sources, such as S3, it generates sample event payloads (`sam local generate-event`) rather than emulating the service itself. Developers can build, test, and debug their serverless applications locally before deploying them to AWS. It can also expose a debug port (`--debug-port`) so that an IDE debugger can attach to the locally running function.

- Setup and Usage: The AWS SAM CLI can be installed using a package manager like pip: `pip install aws-sam-cli`. Developers define their serverless applications using AWS SAM templates (YAML or JSON). To test a function locally, developers can use the `sam local invoke` command, specifying the function name and event data. For example: `sam local invoke "MyFunction" -e event.json`. The `sam local start-api` command can be used to simulate an API Gateway and test API endpoints locally.

- Azure Functions Core Tools: The Azure Functions Core Tools are a set of CLI tools designed for developing, testing, and debugging Azure Functions locally.

- Functionality: Azure Functions Core Tools provide a complete local development experience. They allow developers to run and debug Azure Functions locally, simulate triggers, and test function interactions with Azure services. The tools also support hot-reloading and integrated debugging with IDEs. They offer a simplified way to manage dependencies and build Azure Functions projects.

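- Setup and Usage: The Azure Functions Core Tools can be installed using npm: `npm install -g azure-functions-core-tools@4 --unsafe-perm true`. Developers create Azure Functions projects using the `func init` command. To run functions locally, developers use the `func start` command, which starts the Azure Functions runtime on the local machine. HTTP-triggered functions can then be called at the local URLs that `func start` prints. The `func run` command, which invoked a single function directly, is only available in version 1.x of the Core Tools. With version 4, non-HTTP functions are typically triggered by sending a POST request with a JSON body such as `{"input": "test message"}` to the local host's `/admin/functions/<FunctionName>` endpoint.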
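The local-invoke commands above all follow the same pattern: read an event payload from a file and pass it to the function's handler. The sketch below shows that pattern in plain Python, without any CLI involved. The `handler` and `invoke_local` names and the event contents are hypothetical, chosen only for illustration; real CLIs additionally emulate the runtime, environment variables, and context object.

```python
import json
import tempfile
from pathlib import Path


def handler(event, context):
    """Hypothetical Lambda-style handler: greets the name found in the event."""
    name = event.get("name", "world")
    return {"statusCode": 200, "body": json.dumps({"message": f"Hello, {name}!"})}


def invoke_local(fn, event_path, context=None):
    """Load a JSON event file and call the handler in-process."""
    event = json.loads(Path(event_path).read_text(encoding="utf-8"))
    return fn(event, context)


# Write a sample event file, then invoke the handler with it.
with tempfile.TemporaryDirectory() as tmp:
    event_file = Path(tmp) / "event.json"
    event_file.write_text(json.dumps({"name": "serverless"}), encoding="utf-8")
    result = invoke_local(handler, event_file)

print(result)
```

Because the handler runs in the same process as the harness, a breakpoint set inside `handler` is hit by any ordinary Python debugger. This does not replace the CLI tools, since it skips the emulated runtime, but it is often enough to check the function's own logic.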

Essential Tools for Local Debugging

Debugging serverless applications locally is crucial for rapid development and efficient troubleshooting. Integrated Development Environments (IDEs) provide a streamlined experience, offering tools to inspect code, set breakpoints, and analyze application behavior without deploying to a cloud environment. This section delves into the integration of serverless local debugging with popular IDEs, comparing their capabilities and outlining configuration steps.

IDE Integrations

The integration of serverless local debugging with IDEs significantly enhances the developer workflow. IDEs provide a centralized environment for coding, testing, and debugging, eliminating the need to switch between multiple tools and interfaces. This integration typically involves plugins or extensions that connect the IDE to local serverless emulators or frameworks. These plugins enable developers to set breakpoints, inspect variables, and step through code execution, mimicking the behavior of a serverless function running in a cloud environment.

Comparing Debugging Capabilities of Different IDEs

Different IDEs offer varying levels of support and features for serverless local debugging. The choice of IDE often depends on the programming language used, the specific serverless framework employed, and the developer’s preferences.

- Visual Studio Code (VS Code): VS Code, a popular and versatile IDE, supports serverless debugging through extensions. For example, the AWS Toolkit for VS Code provides robust debugging capabilities for AWS Lambda functions locally. This includes the ability to set breakpoints, inspect variables, and step through code execution. The integration leverages the AWS SAM (Serverless Application Model) CLI, which allows developers to run and debug serverless applications locally.

VS Code also supports debugging for other serverless frameworks through appropriate extensions. For instance, with the Serverless Framework extension, developers can debug functions defined using the Serverless Framework directly within VS Code.

- IntelliJ IDEA (and related IDEs like PyCharm, WebStorm): IntelliJ IDEA, and its derivatives, such as PyCharm (for Python), WebStorm (for JavaScript/TypeScript), and others, offer excellent support for serverless debugging. They provide dedicated debugging features and integrations, particularly for Java-based serverless functions. The IDEs often integrate with serverless frameworks and cloud provider tools, enabling developers to debug functions locally with ease. Debugging is typically achieved through the use of debug configurations that specify the function to be debugged, the local environment to run it in, and any necessary parameters.

- Eclipse: Eclipse, an established IDE, also provides support for serverless debugging, often through plugins or extensions. While the level of integration may vary depending on the serverless framework and language used, Eclipse generally offers features such as breakpoint setting, variable inspection, and code stepping. Developers can configure debug launch configurations to specify the function to debug and the environment settings.

Configuring an IDE for Local Serverless Debugging

Configuring an IDE for local serverless debugging typically involves installing the appropriate plugins or extensions, configuring the project, and setting up debug configurations. The specific steps vary depending on the IDE, the serverless framework, and the programming language.

- Install the necessary plugins or extensions: This is usually the first step. For example, in VS Code, you would install the AWS Toolkit or a plugin specific to your serverless framework (e.g., Serverless Framework extension). In IntelliJ IDEA, you would install plugins related to the cloud provider (e.g., AWS Toolkit) or the serverless framework you are using.

- Configure the project: This may involve setting up the project structure, specifying the runtime environment, and configuring any necessary dependencies. The IDE will often guide you through this process, providing templates or wizards to help you get started.

- Create a debug configuration: This is where you define how the debugger should run your serverless function locally. The configuration will typically include the function’s entry point, any environment variables, and any input parameters. The IDE will then use this configuration to launch the function in a local environment and attach the debugger.

- Set breakpoints: Place breakpoints in your code where you want the debugger to pause execution. The debugger will stop at these points, allowing you to inspect variables, step through code, and analyze the application’s behavior.

- Start debugging: Once the configuration is set up and breakpoints are in place, you can start the debugging session. The IDE will launch the function locally, hit the breakpoints, and allow you to debug your code.

Example: VS Code and AWS Lambda

Consider debugging an AWS Lambda function written in Python using VS Code and the AWS Toolkit. The general steps would be as follows:

- Install the AWS Toolkit for VS Code.

- Configure your AWS credentials (usually by setting up an AWS profile).

- Open the project containing your Lambda function.

- Create a `template.yaml` file (or equivalent) that describes your serverless application using AWS SAM.

- In the `template.yaml` file, define your Lambda function.

- Add breakpoints in your Python code.

- Use the AWS Toolkit to start debugging. The toolkit will use AWS SAM to run your function locally, hit the breakpoints, and allow you to step through the code.

Emulators and Mocking Frameworks

Emulators and mocking frameworks are critical components in serverless local debugging, enabling developers to replicate the serverless environment and isolate dependencies. They provide a controlled testing ground, allowing for the observation of code behavior without deploying to a live serverless platform. This leads to faster feedback loops, reduced costs, and improved development efficiency.

Role of Emulators and Mocking Frameworks

Emulators and mocking frameworks serve distinct but complementary roles in the debugging process. Emulators simulate the runtime environment of a serverless platform, such as AWS Lambda, Azure Functions, or Google Cloud Functions. They replicate the services and infrastructure, including event triggers, function execution, and resource access, allowing developers to test their functions locally as if they were running in the cloud.

Mocking frameworks, on the other hand, are used to simulate the behavior of external services that a serverless function interacts with, such as databases, APIs, or message queues. By mocking these services, developers can control the input and output of these interactions, isolate their code, and test specific scenarios without relying on live dependencies. This allows for comprehensive testing of various edge cases and simplifies debugging complex interactions.

Emulators for Serverless Platforms

Several emulators are available for different serverless platforms, each offering specific features and capabilities. These emulators allow developers to mimic the behavior of cloud services on their local machines, streamlining the debugging process.

- AWS Lambda: The AWS SAM CLI (Serverless Application Model Command Line Interface) provides a robust local development and testing experience for AWS Lambda functions. It includes a local Lambda execution environment that simulates the AWS Lambda runtime and function execution. Developers can use the SAM CLI to invoke Lambda functions locally, test API Gateway integrations, and generate sample event payloads for sources such as S3 and DynamoDB. The services themselves are not emulated: a function that calls S3 or DynamoDB still needs real AWS resources or a separate local emulator. For basic testing and debugging of the function code, this eliminates the need to deploy to the cloud.

- Azure Functions: The Azure Functions Core Tools offer a local development environment for Azure Functions. This includes a local runtime that mimics the Azure Functions environment, allowing developers to run and debug their functions locally. The Core Tools also support triggering functions locally, simulating various triggers like HTTP requests, timers, and queues. They provide a streamlined workflow for local development and testing, reducing the time required for debugging.

- Google Cloud Functions: The Google Cloud Functions Framework enables local development and testing of Google Cloud Functions. This framework provides a local server that mimics the Google Cloud Functions runtime, allowing developers to run and debug their functions locally. The framework supports various triggers, including HTTP requests, Cloud Storage events, and Cloud Pub/Sub messages. This provides a convenient way to test functions before deploying them to Google Cloud.
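The emulators above feed functions with synthetic trigger events. The same idea can be sketched by hand: build a dictionary shaped like the provider's event and pass it to the handler. The example below assumes a minimal subset of the S3 "object created" notification format, containing only the fields the handler reads. The bucket name, object key, and `handler` function are hypothetical.

```python
from urllib.parse import unquote_plus


def make_s3_put_event(bucket, key):
    """Build a minimal S3 'ObjectCreated:Put' event with only the fields used below."""
    return {
        "Records": [
            {
                "eventSource": "aws:s3",
                "eventName": "ObjectCreated:Put",
                "s3": {"bucket": {"name": bucket}, "object": {"key": key}},
            }
        ]
    }


def handler(event, context):
    """Hypothetical handler: list the uploaded objects as (bucket, key) pairs."""
    uploads = []
    for record in event["Records"]:
        bucket = record["s3"]["bucket"]["name"]
        # Object keys arrive URL-encoded in S3 notifications.
        key = unquote_plus(record["s3"]["object"]["key"])
        uploads.append((bucket, key))
    return {"uploads": uploads}


event = make_s3_put_event("example-bucket", "photos/cat+picture.png")
result = handler(event, None)
print(result)
```

A hand-built event like this is useful for quick checks. For full fidelity, it is safer to start from a payload generated by the platform's own tooling, since real events carry many more fields.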

Utilizing Mocking Frameworks

Mocking frameworks play a vital role in isolating serverless functions and simulating external service interactions during debugging. By controlling the input and output of these interactions, developers can test their code in various scenarios without relying on live dependencies.

- Benefits of Mocking: Mocking offers several advantages, including faster test execution, improved test reliability, and easier testing of edge cases. Mocking allows developers to test different scenarios, such as error conditions, data validation, and performance optimization. It helps to isolate the code and ensure that it functions correctly, regardless of the external service’s behavior.

- Popular Mocking Frameworks: Several mocking frameworks are available for different programming languages. Some popular options include:

- Mockito (Java): A widely used mocking framework for Java, allowing developers to create mock objects, define behavior, and verify interactions.

- unittest.mock (Python): A built-in mocking library in Python’s unittest module, providing tools for creating mock objects, patching functions, and asserting method calls.

- Jest (JavaScript/TypeScript): A popular testing framework for JavaScript and TypeScript, offering built-in mocking capabilities for creating mock modules, functions, and objects.

- Example Scenario: Consider a serverless function that retrieves data from a database.

To test this function locally, a mocking framework can be used to simulate the database interaction. This involves creating a mock object that mimics the behavior of the database client, defining specific responses for different queries, and verifying that the function interacts with the database client as expected. This allows developers to test the function’s logic and error handling without connecting to a live database.
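The database scenario above can be sketched with Python's `unittest.mock`. This is a minimal illustration under stated assumptions: the handler receives its database client as a parameter, and the client exposes a `get_user` method. Both are hypothetical names, not part of any real database library.

```python
from unittest.mock import Mock


def get_user_handler(event, context, db_client):
    """Hypothetical handler: look up a user through an injected database client."""
    user_id = event["user_id"]
    try:
        user = db_client.get_user(user_id)
    except ConnectionError:
        return {"statusCode": 503, "body": "database unavailable"}
    if user is None:
        return {"statusCode": 404, "body": "not found"}
    return {"statusCode": 200, "body": user["name"]}


# Happy path: the mock returns a canned record.
db = Mock()
db.get_user.return_value = {"id": "42", "name": "Ada"}
ok = get_user_handler({"user_id": "42"}, None, db)
db.get_user.assert_called_once_with("42")  # verify the interaction

# Edge case: the record does not exist.
db_missing = Mock()
db_missing.get_user.return_value = None
missing = get_user_handler({"user_id": "7"}, None, db_missing)

# Error path: the mock raises instead of returning.
db_down = Mock()
db_down.get_user.side_effect = ConnectionError("simulated outage")
failed = get_user_handler({"user_id": "42"}, None, db_down)

print(ok["statusCode"], missing["statusCode"], failed["statusCode"])
```

Passing the client in as an argument is one design choice that keeps the handler easy to mock. When the client is created at module level instead, `unittest.mock.patch` can replace it for the duration of a test.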

Local Debugging with Specific Platforms

Local debugging is critical for efficient serverless development, providing developers the ability to test and troubleshoot their functions before deployment. This section will delve into the specific tools and techniques tailored for debugging AWS Lambda functions locally, offering a practical guide to streamline the development process. Debugging AWS Lambda functions locally involves simulating the AWS environment on a developer’s machine. This allows for testing code execution, inspecting variables, and identifying errors without deploying to the cloud.

The following sections outline the essential tools and a step-by-step process.

Local Debugging with AWS Lambda

Local debugging of AWS Lambda functions is facilitated by a combination of tools and techniques that allow developers to replicate the Lambda execution environment on their local machines. These tools provide capabilities such as function invocation, event simulation, and breakpoint debugging, enabling efficient troubleshooting and code validation.

- AWS SAM CLI: The AWS Serverless Application Model Command Line Interface (SAM CLI) is a crucial tool for local development and testing of serverless applications. It allows developers to build, test, and debug Lambda functions locally, emulating the AWS Lambda execution environment. The SAM CLI uses Docker containers to simulate the Lambda environment, including the runtime and environment variables. The function’s IAM role is not emulated: calls to AWS services made during a local run use the credentials configured on the developer’s machine.

The SAM CLI supports various commands for local debugging, including `sam local invoke` to invoke functions with test events and `sam local start-api` to simulate API Gateway interactions.

- AWS Toolkit for VS Code/IntelliJ: These IDE extensions provide seamless integration with AWS services, including Lambda. They offer features such as code completion, debugging, and deployment directly from the IDE.

The toolkits often include features for configuring and running Lambda functions locally, simplifying the debugging process. They allow setting breakpoints, inspecting variables, and stepping through code within the IDE environment.

- Docker: Docker containers are used by the SAM CLI and other tools to replicate the Lambda runtime environment. Docker provides a consistent and isolated environment for running functions, ensuring that the local execution closely mirrors the production environment. Developers can also create custom Docker images to match specific runtime versions and dependencies.

- IDE Debuggers: Integrated Development Environments (IDEs) like VS Code, IntelliJ IDEA, and others offer built-in debuggers that support debugging Lambda functions. These debuggers allow developers to set breakpoints, step through code, inspect variables, and monitor function execution.

The debugging process typically involves configuring the IDE to connect to a local Lambda execution environment or a remote debugger.

Step-by-Step Guide to Debugging a Lambda Function Locally Using an IDE

Debugging a Lambda function locally using an IDE involves several steps, from setting up the environment to executing and inspecting the code. This guide provides a detailed walkthrough of the process, emphasizing the key configurations and actions required.

- Prerequisites:

- Install the AWS SAM CLI and Docker.

- Install an IDE with AWS Toolkit (e.g., VS Code with AWS Toolkit).

- Ensure you have an existing Lambda function or create a new one.

- Configure the IDE for Local Debugging:

- Open your Lambda function’s code in the IDE.

- Configure the IDE’s AWS Toolkit to connect to your AWS account (if necessary).

- Create a debugging configuration (e.g., a launch configuration in VS Code) that specifies the function to debug, the event payload, and the local debugging port. The configuration might use the SAM CLI or other debugging tools.

- Set Breakpoints:

- Open the Lambda function’s code file in the IDE.

- Click in the gutter next to the line numbers to set breakpoints at the points where you want to pause execution.

- Breakpoints allow you to inspect variables and step through the code line by line.

- Start the Local Debugging Session:

- Start the debugging session using the debugging configuration you created. This typically involves clicking a “Debug” button or using a keyboard shortcut.

- The IDE will launch the local Lambda execution environment, which may involve starting a Docker container.

- The Lambda function will be invoked with the specified event payload.

- Inspect Variables and Step Through Code:

- When the execution reaches a breakpoint, the IDE will pause execution.

- Use the IDE’s debugging tools to inspect the values of variables, step through the code line by line, and evaluate expressions.

- The IDE will provide a visual representation of the call stack and the current state of the function.

- Troubleshoot and Iterate:

- Analyze the variables and code execution to identify the root cause of any issues.

- Modify the code and restart the debugging session to test your changes.

- Continue to set breakpoints and step through the code until the function behaves as expected.

- Stop the Debugging Session:

- When you have finished debugging, stop the debugging session in the IDE.

- Close any related debugging tools and Docker containers.
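The debugging configuration described in the steps above ultimately boils down to a `sam local invoke` call that names the function, the event payload, and the debug port. The sketch below assembles that command in Python without running it. The function ID, file names, and port number are hypothetical placeholders.

```python
import shlex


def sam_debug_command(function_id, event_path, debug_port, template="template.yaml"):
    """Build the argument list for a local SAM invocation that waits for a debugger."""
    return [
        "sam", "local", "invoke", function_id,
        "--template", template,          # SAM template that defines the function
        "--event", event_path,           # JSON file with the event payload
        "--debug-port", str(debug_port), # port the IDE debugger attaches to
    ]


argv = sam_debug_command("MyFunction", "event.json", 5858)
print(shlex.join(argv))
```

With the SAM CLI and Docker installed, the list can be passed to `subprocess.run(argv, check=True)`. Attaching a debugger usually needs additional runtime-specific setup (for example, a debug adapter inside the container), which IDE toolkits typically handle for you.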

Visual Representation of the Debugging Process

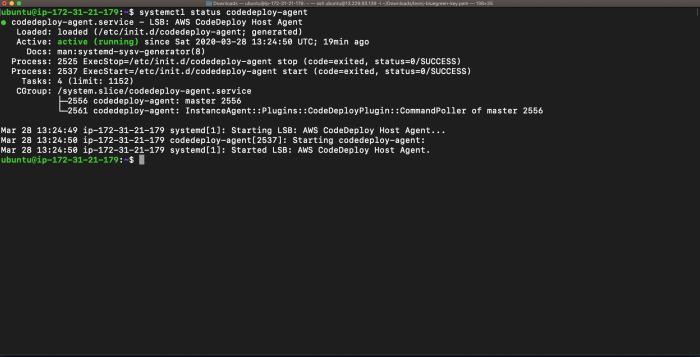

The debugging process involves several steps, from event generation to function execution and inspection. The following diagram illustrates the data flow and execution flow during local debugging of an AWS Lambda function.

Diagram Description:

The diagram depicts the flow of data and execution during the local debugging of an AWS Lambda function.

At the top, there’s an “Event Source” (e.g., API Gateway, S3, or other AWS services) which triggers the Lambda function.

This event source generates an event payload.

The event payload is then passed to the “IDE Debugger” which utilizes “SAM CLI” or similar tools.

“SAM CLI” uses “Docker Container” to create a container that simulates the Lambda execution environment.

Within the container, the “Lambda Function” is executed.

During execution, the IDE Debugger can interact with the running Lambda Function to inspect variables, set breakpoints, and step through code.

After the execution completes, the output of the Lambda function is passed back to the IDE Debugger.

The IDE Debugger then displays the output and debugging information to the developer.

The diagram illustrates a complete debugging cycle, starting from event trigger, the function execution, and finally, the display of the debugging information.

Local Debugging with Specific Platforms

Local debugging is critical for efficient development and testing of serverless applications, allowing developers to identify and resolve issues before deployment. Focusing on specific platforms, such as Azure Functions, reveals the tailored tools and techniques required for effective local debugging. This section delves into the nuances of debugging Azure Functions locally, examining the necessary tools, setup processes, and simulation of Azure service triggers.

Local Debugging with Azure Functions Tools and Methods

Azure Functions provides a robust set of tools and methods for local debugging, leveraging the power of the Azure Functions Core Tools and the integration capabilities of popular IDEs like Visual Studio Code. This allows developers to step through code, inspect variables, and identify errors within the local environment.

- Azure Functions Core Tools: The Azure Functions Core Tools are essential for local development. They provide a command-line interface (CLI) for creating, building, and running Azure Functions locally. They also emulate the Azure Functions runtime, enabling developers to test their functions in an environment that closely mirrors the production environment. This tool can be installed via npm (Node Package Manager) or chocolatey (for Windows).

Installation via npm can be done with the command: `npm install -g azure-functions-core-tools@4 --unsafe-perm true`. The `--unsafe-perm true` flag is sometimes necessary on certain operating systems to ensure the correct permissions for the tools.

- Integrated Development Environments (IDEs): Popular IDEs, such as Visual Studio Code, offer seamless integration with Azure Functions Core Tools. This integration allows developers to set breakpoints, step through code, and inspect variables directly within the IDE. The Azure Functions extension for Visual Studio Code enhances the debugging experience, providing features like function creation, deployment, and monitoring.

- Debugging Configuration Files: The `launch.json` file in Visual Studio Code is used to configure debugging sessions. This file specifies the debugger, the function to debug, and any necessary environment variables. Properly configuring this file is crucial for initiating a debugging session and connecting the debugger to the running Azure Functions process.

- .NET Debugger (for .NET Functions): For Azure Functions written in .NET, the .NET debugger is used. This debugger is integrated within Visual Studio and Visual Studio Code, providing the standard debugging features such as breakpoints, stepping, and variable inspection.

- Node.js Debugger (for JavaScript/TypeScript Functions): For JavaScript and TypeScript Azure Functions, the Node.js debugger is employed. This debugger is also integrated within Visual Studio Code, allowing developers to debug their functions using familiar debugging techniques.

Setting Up Local Debugging for Azure Functions

Setting up local debugging for Azure Functions involves a structured process that ensures a smooth debugging experience. This process includes installing necessary tools, configuring the development environment, and verifying the setup.

- Install Azure Functions Core Tools: As mentioned earlier, install the Azure Functions Core Tools using npm or chocolatey. Verify the installation by running the command `func --version` in the terminal. This command should display the installed version of the Core Tools.

- Create or Open an Azure Functions Project: Create a new Azure Functions project or open an existing one. This project should contain the function code, function.json files (configuration files), and other necessary dependencies.

- Configure the Debugging Environment in your IDE:

- Visual Studio Code:

- Install the Azure Functions extension.

- Create a `launch.json` file in the `.vscode` directory (if it doesn’t already exist).

- Configure the `launch.json` file with the appropriate settings for your function, including the function name, port, and any necessary environment variables.

- Visual Studio:

- Ensure that the Azure development workload is installed.

- Right-click on the Azure Functions project in the Solution Explorer and select “Start Debugging.”

- Run the Function Locally: Start the Azure Functions host locally using the command `func start` in the terminal (from the project’s root directory). This will start the Azure Functions runtime and make your functions available for local testing.

- Set Breakpoints: Set breakpoints in your function code where you want to pause execution and inspect the state of the variables.

- Start Debugging: Start the debugging session from your IDE (e.g., by clicking the “Start Debugging” button in Visual Studio Code). The debugger will attach to the running Azure Functions process.

- Trigger the Function: Trigger the function by sending a request to the function’s endpoint or by simulating an event trigger (see the next section).

- Inspect and Debug: The debugger will hit the breakpoints you set. You can then step through the code, inspect variables, and identify and fix any issues.
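For the `launch.json` step above, the sketch below generates a minimal attach configuration from Python. It is modeled on what the Azure Functions extension for Visual Studio Code typically creates for a Node.js project. The configuration name, the debugger port, and the `func: host start` task label are assumptions that should be checked against the files the extension generates for your project.

```python
import json


def attach_config(name, debugger_type, port):
    """Build one VS Code attach configuration for a locally running Functions host."""
    return {
        "name": name,
        "type": debugger_type,   # e.g. "node" for JavaScript/TypeScript functions
        "request": "attach",     # attach to a running process instead of launching one
        "port": port,            # port the language worker's debugger listens on
        # Assumed task label: starts the local host before attaching.
        "preLaunchTask": "func: host start",
    }


launch = {
    "version": "0.2.0",
    "configurations": [attach_config("Attach to Node Functions", "node", 9229)],
}
launch_json = json.dumps(launch, indent=2)
print(launch_json)
```

The printed JSON would be saved as `.vscode/launch.json`. Other languages use a different `type` and port, so treat the values here as placeholders.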

Simulating Azure Service Triggers during Local Debugging

Simulating Azure service triggers is crucial for testing Azure Functions that are triggered by events from services like Azure Blob Storage, Azure Queue Storage, or Azure Event Hubs. This simulation allows developers to test these functions locally without needing to deploy the functions or interact with the actual Azure services.

- Azure Functions Core Tools Triggers: The Azure Functions Core Tools provide built-in support for simulating some trigger types, such as HTTP triggers. For other triggers, you may need to configure your debugging environment.

- HTTP Triggers: For HTTP-triggered functions, you can use tools like `curl` or Postman to send HTTP requests to the function’s local endpoint. The endpoint is usually displayed in the console when you start the Azure Functions host locally.

- Queue Storage Triggers: To simulate an Azure Queue Storage trigger, you can use tools like Azure Storage Explorer or Azure CLI to add a message to the local storage emulator queue. The Azure Functions runtime will then process this message, triggering the function.

- Blob Storage Triggers: For Blob Storage triggers, you can upload a file to the local storage emulator’s container. The Azure Functions runtime will detect the new file and trigger the function.

- Event Hubs Triggers: Simulating Event Hubs triggers requires a more complex setup. You can use tools like the Azure Event Hubs Explorer or Azure CLI to send events to the local Event Hubs emulator. Your Azure Function will then receive the events.

- Local Storage Emulator: Local development typically relies on a storage emulator, such as Azurite, to simulate Azure Storage services. The emulator runs alongside the Azure Functions Core Tools and mimics the behavior of Azure Blob Storage and Azure Queue Storage.

- Environment Variables for Local Emulation: Set the connection strings for the local storage emulator in the `local.settings.json` file, which stores app settings and environment variables for local development. For example:

      {
        "IsEncrypted": false,
        "Values": {
          "FUNCTIONS_WORKER_RUNTIME": "dotnet",
          "AzureWebJobsStorage": "UseDevelopmentStorage=true"
        }
      }

- Simulating Triggers Programmatically: For complex scenarios, you can write a small script or test harness that simulates trigger events. For example, for a Blob Storage triggered function, the script can create a blob in the local storage emulator, which triggers the function. This approach provides fine-grained control over the trigger simulation.

Local Debugging with Specific Platforms

Debugging serverless functions locally is crucial for efficient development and testing. It allows developers to identify and resolve issues before deploying to the cloud, saving time and resources. This section focuses on local debugging techniques for Google Cloud Functions, providing a comprehensive guide to streamline the development process.

Local Debugging with Specific Platforms: Google Cloud Functions

Google Cloud Functions, a serverless compute service, lets developers execute code in response to events. Local debugging helps verify that these functions behave correctly before they are deployed to the cloud, and several tools support it:

- Cloud Functions Framework: The Cloud Functions Framework provides a local development environment. It allows developers to run and test their functions locally, simulating the Google Cloud Functions environment. The framework supports various languages, including Node.js, Python, Go, and Java.

- Google Cloud SDK (gcloud CLI): The gcloud CLI offers debugging capabilities. It allows developers to deploy functions to a local emulator, which simulates the Google Cloud environment. Developers can then debug these functions using their preferred IDEs or debuggers.

- IDE Integrations: Many Integrated Development Environments (IDEs), such as VS Code and IntelliJ IDEA, offer extensions and plugins specifically designed for Google Cloud Functions development. These integrations often provide features like debugging, code completion, and deployment directly from the IDE.

Configuration for local debugging Google Cloud Functions involves setting up the necessary environment and tools. This ensures that the functions can be executed and debugged locally.

- Installation of Cloud Functions Framework: The Cloud Functions Framework must be installed to enable local execution. This typically involves using a package manager such as npm for Node.js, pip for Python, or go get for Go.

- Setting up the gcloud CLI: The Google Cloud SDK (gcloud CLI) must be installed and configured. This includes authenticating with a Google Cloud project and selecting the appropriate project for deployment and debugging.

- IDE Configuration: Developers must configure their IDEs with the necessary extensions or plugins for Google Cloud Functions. This configuration often involves specifying the project, function name, and entry point.

- Emulator Setup (if applicable): If using the gcloud CLI with a local emulator, the emulator needs to be started and configured to simulate the Google Cloud environment. This involves setting up the necessary services, such as Cloud Storage and Cloud Pub/Sub, if the function interacts with them.

Setting up a local development environment for Google Cloud Functions involves several steps to ensure a smooth debugging experience. This guide provides a detailed overview of the setup process.

- Project Setup: Create a new Google Cloud project or use an existing one. Enable the Cloud Functions API within the Google Cloud Console.

- Environment Setup: Install the required tools, including the gcloud CLI, the Cloud Functions Framework (for your chosen language), and any necessary language-specific tools (e.g., Node.js and npm for JavaScript/TypeScript).

- Function Code: Create the function code. This code will be executed when the function is triggered. Ensure the code adheres to the Google Cloud Functions specifications for the chosen runtime.

- Local Testing with Framework: Use the Cloud Functions Framework to run and test the function locally. This involves running the framework command, specifying the function’s entry point, and providing any necessary input data.

- Debugging with IDE: Configure the IDE to debug the function. This usually involves setting breakpoints in the code and attaching the debugger to the local process.

- Deployment and Testing (Cloud): Once the function is tested locally, deploy it to Google Cloud. Test the function by triggering it through the specified triggers (e.g., HTTP requests, Cloud Storage events). Monitor the logs in the Google Cloud Console for any errors or issues.

- Emulator Usage (Optional): If the function interacts with other Google Cloud services, utilize the local emulator. Configure the emulator to simulate these services and test the function’s interactions locally.

Example: Consider a simple HTTP function written in Node.js. The function is designed to respond to HTTP requests.

    // index.js
    exports.helloWorld = (req, res) => {
      res.send('Hello, World!');
    };

To debug this function locally using the Cloud Functions Framework, the following steps can be taken:

1. Install the Cloud Functions Framework:

```bash
npm install @google-cloud/functions-framework --save
```

2. Run the function locally:

```bash
npx functions-framework --target=helloWorld --signature-type=http
```

This command starts the framework, making the function accessible at `http://localhost:8080`.

3. Test the function using `curl` or a browser:

```bash
curl http://localhost:8080
```

This should return “Hello, World!”.

4. Debug with IDE (e.g., VS Code):

- Install the Cloud Code extension in VS Code.

- Start the framework with the Node.js inspector enabled, for example `node --inspect node_modules/.bin/functions-framework --target=helloWorld`, and create a launch configuration that attaches to that Node.js process.

- Set breakpoints in the `index.js` file.

- Send an HTTP request to the local function and observe the debugger hitting the breakpoints.

This example demonstrates the basic setup and debugging process for a simple HTTP function. More complex functions, involving interactions with other Google Cloud services, would require additional configuration, such as setting up local emulators for those services. This approach allows developers to thoroughly test their functions before deployment, significantly improving the development cycle and reducing the likelihood of errors in production.
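The manual `curl` check in step 3 can also be scripted so that it is easy to repeat after each change. The sketch below uses only the Python standard library and assumes the function is already listening on `http://localhost:8080`. The helper name `invoke_local_function` is hypothetical.

```python
import json
import urllib.request


def invoke_local_function(url="http://localhost:8080", payload=None, timeout=5):
    """Send a request to a locally running function; return (status, body text).

    With no payload a GET is sent; otherwise the payload is POSTed as JSON.
    """
    data = None
    headers = {}
    if payload is not None:
        data = json.dumps(payload).encode("utf-8")
        headers["Content-Type"] = "application/json"
    request = urllib.request.Request(url, data=data, headers=headers)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.status, response.read().decode("utf-8")
```

With the framework running, `invoke_local_function()` sends the same GET request as the `curl` command, and `invoke_local_function(payload={"name": "test"})` exercises a JSON request body while breakpoints are active.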

Debugging Techniques and Strategies

Debugging serverless applications locally requires a multifaceted approach. The ephemeral nature of serverless functions, combined with the distributed architecture, introduces unique challenges. Effective debugging strategies combine established techniques with serverless-specific tools and methodologies to pinpoint and resolve issues efficiently. A comprehensive understanding of these techniques is crucial for developing and maintaining robust serverless applications.

Advanced Debugging Techniques

Debugging serverless applications benefits from advanced techniques that extend beyond basic troubleshooting. These techniques enhance the ability to isolate problems, understand application behavior, and improve overall code quality.

- Remote Debugging: Allows developers to connect a local debugger to a serverless function running in a local emulator or a deployed environment. This enables stepping through code, inspecting variables, and setting breakpoints, providing real-time insights into function execution. This technique is particularly valuable for complex logic or interactions with external services. For example, AWS Lambda provides the ability to connect a debugger (e.g., VS Code) to a locally running function through the SAM CLI, facilitating step-by-step debugging.

- Distributed Tracing: Essential for understanding the flow of requests across multiple serverless functions and services. Tools like AWS X-Ray or OpenTelemetry capture and visualize the execution path, latency, and errors for each component involved in a transaction. This provides a holistic view of the application, enabling developers to identify bottlenecks and pinpoint the source of performance issues. A practical example involves tracing an API Gateway request that triggers several Lambda functions, each performing a specific task. Distributed tracing tools would show the time spent in each function, any errors encountered, and the overall latency of the request.

- Performance Profiling: Involves analyzing the performance characteristics of serverless functions to identify areas for optimization. Tools like profiling agents, integrated into the local development environment or cloud providers’ debugging tools, collect data on CPU usage, memory allocation, and function execution time. This information helps developers pinpoint inefficient code sections or resource bottlenecks. For instance, profiling a Lambda function might reveal that a database query is taking an excessive amount of time, leading to optimization efforts.

- Snapshot Debugging: Captures the state of a running function at a specific point in time, including variables, call stacks, and environment details. This technique is particularly useful for diagnosing intermittent issues or complex scenarios that are difficult to reproduce. Tools like those offered by serverless platform providers can automatically create snapshots upon encountering errors or when specific conditions are met. This provides detailed context for debugging, helping to understand the state of the application at the time of the issue.
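As an illustration of the performance profiling technique, a handler can be run under Python's built-in `cProfile` module on the local machine. This is a minimal sketch rather than a platform feature: `profile_handler` and `example_handler` are hypothetical names, and local timings will not match those of a deployed function.

```python
import cProfile
import io
import pstats


def profile_handler(handler, event, context=None, top=10):
    """Run handler(event, context) under cProfile.

    Returns the handler's result and a text report of the `top` entries
    sorted by cumulative time.
    """
    profiler = cProfile.Profile()
    result = profiler.runcall(handler, event, context)
    report = io.StringIO()
    stats = pstats.Stats(profiler, stream=report)
    stats.sort_stats("cumulative").print_stats(top)
    return result, report.getvalue()


def example_handler(event, context):
    # Stand-in for real function logic; hypothetical handler for illustration.
    total = sum(i * i for i in range(event["n"]))
    return {"statusCode": 200, "body": str(total)}
```

Printing the report returned by `profile_handler(example_handler, {"n": 100000})` lists the calls that account for most of the execution time, which is where optimization effort is usually best spent.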

Common Debugging Challenges and Solutions

Serverless environments present specific debugging challenges that necessitate targeted solutions. The following table summarizes common issues and their recommended approaches:

| Challenge | Description | Solution |
|---|---|---|
| Function Cold Starts | The initial delay in function invocation when the function’s container needs to be provisioned. | Use local emulators, provisioned concurrency (for deployed environments), and optimize function code to reduce startup time. |
| Idempotency Issues | Ensuring that repeated executions of a function produce the same result, critical for handling retries. | Implement idempotency mechanisms, such as unique request IDs and state management, to prevent unintended side effects. |
| Asynchronous Operations | Debugging the interactions between functions and services that are not directly connected. | Use distributed tracing, logging with correlation IDs, and local emulators that simulate asynchronous event processing. |
| Resource Limits | Encountering the limits of resources such as memory, execution time, or network connections. | Optimize function code, adjust resource configurations, and monitor resource usage metrics. |
| Complex Dependencies | Managing and resolving dependencies within the function’s environment. | Use package managers, local build tools, and containerization for consistent dependency management. |
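The idempotency row can be made concrete with a small wrapper that remembers results by request ID. This is a sketch under stated assumptions: the event carries a `requestId` field, and an in-memory dictionary is enough for local experiments. Both are illustrative choices, and a deployed function would need a durable store shared across invocations.

```python
class IdempotentHandler:
    """Wrap a handler so that a repeated request ID returns the stored result.

    The in-memory dict is an assumption for local experiments; a deployed
    function would need a durable store shared across invocations.
    """

    def __init__(self, handler, id_key="requestId"):
        self._handler = handler
        self._id_key = id_key
        self._results = {}

    def __call__(self, event, context=None):
        request_id = event[self._id_key]
        if request_id not in self._results:
            self._results[request_id] = self._handler(event, context)
        return self._results[request_id]


charges = []


def charge_customer(event, context):
    # Hypothetical side effect that must not happen twice for one request.
    charges.append(event["amount"])
    return {"statusCode": 200, "charged": event["amount"]}
```

Wrapping the function as `IdempotentHandler(charge_customer)` and invoking it twice with the same event simulates a retry locally: the second call returns the stored response without repeating the side effect.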

Logging and Tracing for Effective Local Debugging

Logging and tracing are indispensable for effective local debugging in serverless environments. They provide crucial insights into function behavior, request flow, and potential issues.

- Logging: Involves recording events, errors, and other relevant information during function execution. Structured logging, using formats like JSON, makes it easier to analyze logs and filter for specific events. Consider the following:

  - Log Levels: Utilize different log levels (e.g., DEBUG, INFO, WARN, ERROR) to categorize log messages based on their severity.

  - Contextual Information: Include contextual information, such as request IDs, timestamps, and function names, in log messages to aid in debugging.

  - Example: In a Python Lambda function, you can use the `logging` module to write log messages. For example:

        import logging

        logger = logging.getLogger()
        logger.setLevel(logging.INFO)

        def lambda_handler(event, context):
            logger.info("Received event: %s", event)
            # ... function logic ...
            return {"statusCode": 200, "body": "Success"}

- Tracing: Enables the tracking of requests across multiple functions and services, providing a comprehensive view of the application’s execution path.

  - Distributed Tracing Tools: Use tools like AWS X-Ray or OpenTelemetry to visualize the flow of requests and identify bottlenecks.

  - Span Context Propagation: Ensure that the trace context (e.g., trace IDs and span IDs) is propagated across function invocations and service interactions.

  - Example: Integrating AWS X-Ray into a Node.js Lambda function involves the `aws-xray-sdk-core` package (part of `aws-xray-sdk`). Wrapping the AWS SDK with `captureAWS` records calls made through the SDK so that they appear in the trace sent to X-Ray for analysis.

        const AWSXRay = require('aws-xray-sdk-core');
        const AWS = AWSXRay.captureAWS(require('aws-sdk'));

        exports.handler = async (event, context) => {
          // ... function logic ...
        };
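The advice on structured logging and contextual information can be combined in one small setup. The sketch below emits one JSON object per log line and attaches a request ID to each record, using only the standard library. The names `JsonFormatter` and `build_logger` and the `requestId` event key are assumptions made for illustration.

```python
import json
import logging


class JsonFormatter(logging.Formatter):
    """Render each record as one JSON object per line."""

    def format(self, record):
        entry = {
            "timestamp": self.formatTime(record),
            "level": record.levelname,
            "message": record.getMessage(),
            # request_id is attached by the caller through `extra`.
            "request_id": getattr(record, "request_id", None),
        }
        return json.dumps(entry)


def build_logger(stream=None, name="local-debug"):
    """Return a logger that writes JSON lines to `stream` (stderr by default)."""
    logger = logging.getLogger(name)
    logger.setLevel(logging.DEBUG)
    logger.handlers.clear()
    logger.propagate = False
    handler = logging.StreamHandler(stream)
    handler.setFormatter(JsonFormatter())
    logger.addHandler(handler)
    return logger


def lambda_handler(event, context, logger=None):
    if logger is None:
        logger = build_logger()
    # Assumes the event carries a "requestId" field; this key is hypothetical.
    extra = {"request_id": event.get("requestId")}
    logger.info("Received event with %d keys", len(event), extra=extra)
    return {"statusCode": 200, "body": "Success"}
```

Because every line is valid JSON with a `request_id` field, local output can be filtered for a single request with ordinary text tools, and the same format carries over to cloud log queries.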

Best Practices for Local Debugging

Effective local debugging is crucial for rapid development and efficient troubleshooting in serverless applications. Adhering to best practices streamlines the debugging process, minimizes wasted time, and enhances the overall quality of the deployed code. Implementing these strategies helps developers quickly identify and resolve issues before they impact production environments.

Setting Up and Maintaining a Local Debugging Environment

Properly configuring and maintaining the local debugging environment is foundational for effective serverless development. This involves selecting the right tools, configuring them correctly, and establishing consistent practices for managing the environment.

- Version Control: Utilize version control systems (e.g., Git) to track changes to code and configuration files. This allows for easy rollback to previous states if debugging introduces unintended side effects. The ability to revert to a known working state significantly reduces debugging time.

- Environment Isolation: Employ containerization (e.g., Docker) or virtual environments to isolate the local debugging environment from the host system. This prevents conflicts between dependencies and ensures that the debugging environment accurately mirrors the production environment’s dependencies. For example, a Dockerfile can specify the exact versions of Node.js, Python, or other runtimes required by the serverless functions, ensuring consistency.

- Configuration Management: Centralize and manage configuration settings using environment variables or configuration files. This simplifies the process of switching between different environments (local, staging, production) and allows for easy modification of debugging parameters without changing the code. Tools like `dotenv` for Node.js or similar libraries in other languages can load environment variables from a `.env` file.

- Dependency Management: Carefully manage project dependencies. Use a package manager (e.g., npm, pip) to specify and install all required libraries and their versions. Regularly update dependencies to incorporate security patches and bug fixes. Pinning dependency versions in a `package-lock.json` or `requirements.txt` file ensures reproducible builds.

- Automated Testing: Integrate unit tests and integration tests into the development workflow. These tests should be executed locally as part of the debugging process. Automated tests provide immediate feedback on code correctness and help identify issues early. Consider tools like Jest for JavaScript or pytest for Python.

- Logging and Monitoring: Implement comprehensive logging and monitoring. Use logging statements within the code to track the execution flow and the values of variables. Configure monitoring tools to collect and analyze logs, metrics, and traces. Tools like CloudWatch (AWS), Cloud Logging and Cloud Monitoring (GCP, formerly Stackdriver), or Azure Monitor can be utilized.

Optimizing the Debugging Process

Optimizing the debugging process involves employing techniques that reduce the time required to identify and resolve issues. This includes utilizing effective debugging tools, employing strategic debugging techniques, and streamlining the overall workflow.

- Use Debuggers Effectively: Leverage the features of debuggers to step through code, inspect variables, and set breakpoints. Learn to use features like conditional breakpoints (breakpoints that are only hit when a specific condition is met) and watch expressions (to monitor the value of variables over time). Debuggers can be integrated into IDEs (e.g., VS Code, IntelliJ) or used as standalone tools (e.g., Node.js debugger).

- Isolate the Problem: Employ the divide-and-conquer strategy. When encountering a bug, attempt to isolate the problem by commenting out sections of code, removing dependencies, or simplifying the logic until the issue disappears. This helps pinpoint the exact location of the bug.

- Reproduce the Bug: Ensure the bug can be consistently reproduced. This involves documenting the steps required to trigger the bug and creating a test case to replicate it. This allows for easier debugging and prevents the bug from reoccurring after a fix.

- Use Profiling Tools: Utilize profiling tools to identify performance bottlenecks. Profilers can analyze the execution time of different code sections, helping to identify areas for optimization. Tools like the Chrome DevTools Profiler (for JavaScript) or profilers integrated into IDEs can be used.

- Leverage Test-Driven Development (TDD): Write tests before writing the code. This approach forces developers to think about the expected behavior of the code and can help prevent bugs from occurring in the first place. TDD also makes it easier to verify that the code is working correctly.

- Refactor Code Regularly: Regularly refactor code to improve readability and maintainability. Clean code is easier to debug. Refactoring can involve renaming variables, extracting methods, and simplifying complex logic.
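The "Reproduce the Bug" and test-driven points can be combined by turning a captured event into a regression test. The sketch below uses `unittest` from the standard library. The handler and the event are hypothetical stand-ins, and in practice the event would usually be loaded from a JSON file stored next to the tests.

```python
import json
import unittest


def handler(event, context=None):
    """Hypothetical function under test: greets the name in the request body."""
    body = json.loads(event.get("body") or "{}")
    name = body.get("name", "World")
    return {"statusCode": 200, "body": f"Hello, {name}!"}


# A captured event that once triggered the bug: "body" is null, not a string.
FAILING_EVENT = json.loads('{"httpMethod": "POST", "body": null}')


class ReplayCapturedEvent(unittest.TestCase):
    def test_null_body_is_handled(self):
        response = handler(FAILING_EVENT)
        self.assertEqual(response["statusCode"], 200)
        self.assertEqual(response["body"], "Hello, World!")


def run_replay_tests():
    """Run the regression test and report whether it passed."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(ReplayCapturedEvent)
    result = unittest.TextTestRunner(verbosity=0).run(suite)
    return result.wasSuccessful()
```

Keeping the failing event in the test suite means the bug is reproduced with one command, and the test guards against the same regression after the fix.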

Checklist for Efficient Local Debugging

Implementing a checklist ensures a structured approach to local debugging, leading to more efficient troubleshooting and improved code quality. This checklist serves as a guide to follow during the debugging process.

- Reproducibility: Can the bug be reliably reproduced in the local environment? If not, ensure the local environment mirrors the production environment.

- Environment Setup: Is the local environment correctly configured with the necessary tools, dependencies, and environment variables? Verify containerization and version control setup.

- Logging and Monitoring: Are comprehensive logs and monitoring enabled? Examine the logs for error messages, warnings, and debugging information.

- Breakpoints: Are breakpoints strategically placed to inspect the execution flow and variable values? Utilize conditional breakpoints.

- Code Inspection: Is the code reviewed for potential errors and logical flaws? Use a debugger to step through the code and inspect variable values.

- Test Execution: Have unit tests and integration tests been executed to verify the code’s behavior? Analyze test results to identify areas of failure.

- Profiling: If performance is an issue, have profiling tools been used to identify bottlenecks? Optimize the code based on the profiling results.

- Problem Isolation: Has the problem been isolated using the divide-and-conquer strategy? Simplify the code to identify the root cause.

- Solution Verification: Does the fix resolve the bug, and does it introduce any new issues? Retest the code thoroughly.

- Documentation: Have the debugging steps and the solution been documented? Document the debugging process for future reference.

Future Trends in Serverless Local Debugging

The serverless landscape is constantly evolving, and with it, the tools and techniques for local debugging are also undergoing significant transformations. Future advancements promise to enhance developer productivity, streamline debugging workflows, and provide more comprehensive insights into serverless application behavior. These trends are driven by the need for faster development cycles, improved error detection, and the ability to debug complex, distributed systems efficiently.

Advanced Observability and Monitoring Integration

Current local debugging tools often provide limited visibility into the internal workings of serverless functions and their interactions with external services. Future trends will emphasize deep integration with advanced observability and monitoring platforms.

- Real-time Metrics and Tracing: Tools will provide real-time metrics on function performance, resource utilization, and error rates, directly within the local debugging environment. This will enable developers to identify performance bottlenecks and troubleshoot issues quickly. Distributed tracing capabilities will allow for end-to-end visibility into the execution flow across multiple functions and services, even when debugging locally.

- Automated Error Detection and Root Cause Analysis: Advanced debugging tools will leverage machine learning and artificial intelligence to automatically detect errors, identify their root causes, and suggest potential fixes. This will reduce the time spent manually analyzing logs and debugging complex issues. For example, the tool could analyze the patterns of logs and errors and suggest that a database connection timeout is the cause, providing information to quickly identify and resolve the problem.

- Integration with Existing Observability Platforms: Seamless integration with popular observability platforms such as Datadog, New Relic, and Prometheus will become standard. This will allow developers to leverage their existing monitoring infrastructure for local debugging, reducing the need to learn new tools and workflows. The debugging tools will transmit local debugging data to the observability platforms to give a holistic view of the system.

Enhanced Local Environment Simulation

The accuracy and completeness of local environment simulation are crucial for effective debugging. Future trends will focus on improving the fidelity of these simulations.

- More Accurate Service Emulation: Emulators will evolve to provide more accurate representations of cloud services, including databases, message queues, and API gateways. This will reduce the discrepancies between local and cloud environments, minimizing the risk of unexpected behavior when deploying to production. The goal is to make the local environment as similar to the production environment as possible.

- Dynamic Resource Provisioning: Tools will automatically provision and configure resources such as databases and message queues within the local debugging environment. This will simplify the setup process and allow developers to focus on debugging their code rather than managing infrastructure. This can be achieved by integrating with tools such as Docker Compose or Kubernetes to orchestrate local services.

- Improved Event Simulation: Debugging tools will provide more sophisticated event simulation capabilities, allowing developers to test their functions under various scenarios, including different event payloads, triggers, and timing conditions. This will help developers identify and fix edge cases and ensure their functions behave as expected. For example, the tool might allow the user to simulate a specific sequence of events coming from an API Gateway.

AI-Powered Debugging Assistance

Artificial intelligence and machine learning are poised to play a significant role in the future of serverless local debugging.

- Intelligent Code Analysis: Debugging tools will employ AI to analyze code in real-time, identifying potential errors, performance issues, and security vulnerabilities. The tool could suggest code optimizations or highlight areas where the code might be prone to bugs.

- Automated Test Generation: AI will be used to automatically generate test cases based on the code and its dependencies. This will reduce the burden on developers to write comprehensive tests and improve code coverage.

- Personalized Debugging Recommendations: Debugging tools will learn from developers’ debugging patterns and provide personalized recommendations for resolving issues. This could include suggestions for specific debugging techniques, code snippets, or relevant documentation.

Increased Emphasis on Security and Compliance

Security and compliance are becoming increasingly important in serverless development. Future debugging tools will address these concerns directly.

- Security Vulnerability Scanning: Debugging tools will integrate security vulnerability scanning capabilities to identify potential security flaws in code and dependencies. This will help developers address security issues early in the development cycle. The tool will be able to scan the code for common vulnerabilities such as SQL injection or cross-site scripting.

- Compliance Checks: Tools will incorporate compliance checks to ensure that serverless functions adhere to relevant regulations and standards. This will help organizations meet their compliance requirements.

- Secure Debugging Environments: Debugging tools will prioritize the security of the local debugging environment, protecting sensitive data and preventing unauthorized access.

Closing Summary

In conclusion, the landscape of serverless local debugging is evolving rapidly, with continuous advancements in tools and methodologies. From CLI tools and IDE integrations to emulators and mocking frameworks, the availability of resources for efficient local debugging has dramatically improved. By embracing best practices and staying informed about emerging trends, developers can significantly streamline their debugging workflows, ultimately leading to more robust and reliable serverless applications.

The ability to debug locally is no longer a luxury, but a necessity for effective serverless development.

FAQ Guide

What is the primary advantage of local debugging over cloud-based debugging?

Local debugging offers faster iteration cycles by eliminating the deployment step, allowing developers to rapidly test changes and identify issues without incurring cloud costs for each test run.

Are there any performance limitations associated with local debugging tools?

While local debugging tools strive to replicate cloud environments, they may not perfectly mirror the performance characteristics of the production environment. Resource constraints on the developer’s machine can sometimes impact the simulation.

How do emulators differ from mocking frameworks in the context of serverless debugging?

Emulators mimic the behavior of entire serverless platforms or services, providing a close approximation of the cloud environment. Mocking frameworks, on the other hand, simulate the responses of external services, allowing developers to isolate and test specific components of their applications.
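To illustrate the mocking side of this distinction, the sketch below replaces an external table client with a `unittest.mock.Mock`, so the function logic is tested without any emulator or network access. The function `save_order` and its `table` parameter are hypothetical; the only assumption about the client is that it offers a `put_item(Item=...)` method.

```python
from unittest import mock


def save_order(order, table):
    """Hypothetical function logic: store an order through a table client.

    `table` is any object with a put_item(Item=...) method, for example a
    real table client in production or a mock in tests.
    """
    if order["quantity"] <= 0:
        raise ValueError("quantity must be positive")
    table.put_item(Item=order)
    return {"statusCode": 201, "body": order["id"]}


def check_save_order_with_mock():
    # The mock stands in for the external service: no emulator, no network.
    fake_table = mock.Mock()
    response = save_order({"id": "o-1", "quantity": 2}, fake_table)
    fake_table.put_item.assert_called_once_with(Item={"id": "o-1", "quantity": 2})
    return response
```

An emulator would instead run a local stand-in for the whole service and let the unmodified client talk to it, which tests more of the integration at the cost of extra setup.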

What are the security implications of local debugging?

Developers should ensure that sensitive information, such as API keys and database credentials, is handled securely within the local debugging environment. Avoid hardcoding sensitive data directly into the code.

How can I choose the right debugging tool for my serverless project?

The choice of debugging tools depends on the serverless platform being used, the IDE preferences of the development team, and the specific debugging needs of the project. Evaluate tools based on features, ease of use, and compatibility with the existing development workflow.