Idle and underutilized cloud resources are a significant source of wasted cloud spend. This guide, “Strategies for Minimizing Idle Cloud Resources,” covers the practices needed to optimize your cloud infrastructure: techniques to identify, address, and minimize the waste associated with idle resources, so that your cloud spending is aligned with actual usage and business needs.

The strategies range from pinpointing underutilized compute instances and storage volumes to implementing automation and cost monitoring solutions. We’ll cover right-sizing techniques, automated scaling, and the benefits of containerization and serverless computing. The goal is to equip you with the knowledge and tools to make informed decisions that reduce costs and improve operational efficiency in your cloud environment.

Identifying Idle Cloud Resources

Identifying idle cloud resources is a crucial step in optimizing cloud spending and improving resource utilization. Unused or underutilized resources represent wasted expenditure and can negatively impact overall cloud efficiency. The following sections detail methods for pinpointing these resources, focusing on compute instances, storage volumes, and network components.

Identifying Compute Instances with Low CPU Utilization

Pinpointing underutilized compute instances is vital for cost optimization. Several methods and metrics can be used to detect instances with low CPU utilization, enabling informed decisions about resizing or terminating them. The identification process usually starts with the CPU utilization metrics provided by cloud providers; for example, Amazon CloudWatch, Azure Monitor, and Google Cloud Monitoring offer detailed insights into instance performance.

Analyzing these metrics allows administrators to identify instances consistently operating below a certain threshold. This threshold is often defined based on the specific workload requirements; however, a general guideline suggests that instances consistently below 10-20% CPU utilization over an extended period (e.g., a week or a month) might be considered underutilized.
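The "consistently below a threshold" test above can be made concrete by checking a high percentile of the CPU samples rather than their mean, since an average can hide regular busy periods. The following is a minimal sketch under stated assumptions: the samples are hourly averages already exported from a monitoring tool, and the function names, the 20% threshold, and the 95th-percentile cutoff are illustrative choices, not a provider API.

```python
import math
from typing import Sequence


def percentile(values: Sequence[float], pct: float) -> float:
    """Nearest-rank percentile (pct between 0 and 100) of a non-empty sequence."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def is_underutilized(
    cpu_samples: Sequence[float], threshold: float = 20.0, pct: float = 95.0
) -> bool:
    """Flag an instance whose CPU stays below `threshold` percent for
    roughly `pct` percent of the observation window (hypothetical helper)."""
    if not cpu_samples:
        raise ValueError("no CPU samples for this instance")
    return percentile(cpu_samples, pct) < threshold


# One week of hourly average CPU samples (168 values) per hypothetical instance.
mostly_idle = [5.0] * 160 + [60.0] * 8     # busy for 8 hours of the week
regular_peaks = [5.0] * 150 + [60.0] * 18  # busy for 18 hours of the week

print(is_underutilized(mostly_idle))    # True
print(is_underutilized(regular_peaks))  # False, although its mean is about 10.9%
```

The second instance would pass a mean-based check, but it spends more than 5% of the week busy, so the percentile test keeps it off the candidate list.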

- Monitoring CPU Utilization Metrics: Continuously track CPU utilization percentage. Look for instances where the CPU usage consistently remains low. Most cloud providers offer tools like CloudWatch (AWS), Azure Monitor (Azure), and Cloud Monitoring (GCP) for this purpose.

- Analyzing CPU Credits (for burstable instances): Burstable instance types, such as AWS T-series instances, earn CPU credits while running below their baseline CPU performance and spend them when usage rises above the baseline. Monitoring the credit balance can help identify underutilized burstable instances: a balance that stays consistently high (for example, at or near its maximum) indicates the instance rarely needs more than its baseline.

- Utilizing Cloud Provider Tools: Many cloud providers offer built-in tools or services to identify underutilized resources. For example, AWS Compute Optimizer analyzes utilization history and classifies EC2 instances as over-provisioned, under-provisioned, or optimized, with recommendations for better-fitting instance types.

- Implementing Automated Alerting: Set up alerts based on CPU utilization thresholds. When CPU usage falls below a defined level for a sustained period, trigger an alert to notify administrators. This allows for proactive identification and remediation.

- Reviewing Instance Logs: Examine instance logs for any signs of inactivity or low workload. Logs can provide valuable insights into the applications running on the instance and their resource consumption patterns.
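The automated-alerting practice above hinges on "a sustained period": a single quiet datapoint should not trigger anything. CloudWatch alarms express this with evaluation periods; the sketch below models the same idea in plain Python so it runs without cloud access. The function name, the 10% threshold, and the 24-period window are hypothetical examples.

```python
from typing import Sequence


def sustained_low(datapoints: Sequence[float], threshold: float, periods: int) -> bool:
    """Return True when the most recent `periods` datapoints are all below
    `threshold`, i.e. the condition has held for the whole evaluation window."""
    if periods <= 0:
        raise ValueError("periods must be a positive integer")
    if len(datapoints) < periods:
        return False  # not enough history yet; avoid alerting on partial data
    return all(value < threshold for value in datapoints[-periods:])


# Hourly CPU averages for a hypothetical instance; the last 24 hours are all below 10%.
hourly_cpu = [35.0, 12.0] + [4.0] * 24

if sustained_low(hourly_cpu, threshold=10.0, periods=24):
    # In a real setup this would publish to a notification channel.
    print("ALERT: CPU below 10% for 24 consecutive hours")
```

Requiring every datapoint in the window to qualify trades some detection speed for far fewer false alarms on instances that are only briefly quiet.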

For instance, a web server running on an AWS EC2 instance might average only 5% CPU utilization during off-peak hours. If monitoring data shows that utilization stays similarly low throughout the day, not just off-peak, the instance is underutilized and is a candidate for resizing or termination. AWS Compute Optimizer might further confirm this by recommending a smaller instance type.

Detecting Underutilized Storage Volumes

Identifying underutilized storage volumes is essential for cost savings, because provisioned storage incurs charges whether or not it is used. Techniques for detecting underutilized volumes involve analyzing storage capacity, I/O performance, and access patterns to find volumes that are not actively used or not fully utilized. The specific metrics and tools vary by cloud provider and storage type, but the underlying principles remain consistent.

- Monitoring Storage Capacity Utilization: Track the percentage of storage capacity being used. Look for volumes where a significant portion of the allocated storage is unused. For example, if a 1 TB volume has only 100 GB of data stored, it is likely underutilized.

- Analyzing I/O Performance: Monitor I/O operations per second (IOPS) and throughput (MB/s) to assess storage volume activity. Low IOPS and throughput indicate minimal data access, suggesting the volume might be underutilized.

- Reviewing Access Patterns: Analyze the frequency and timing of data access. If data is rarely accessed, or if access occurs only during specific periods, the volume might be underutilized.

- Utilizing Cloud Provider Tools: Cloud providers offer tools to monitor storage volumes and identify underutilization. For example, AWS offers Amazon CloudWatch for monitoring storage metrics, and AWS Cost Explorer can help analyze storage costs.

- Implementing Lifecycle Policies: Utilize storage lifecycle policies to automatically move infrequently accessed data to cheaper storage tiers, such as archive storage. This can help optimize costs for data that is not frequently accessed.

- Analyzing Storage Volume Snapshots: Assess the size and frequency of snapshots. When snapshots are incremental (as Amazon EBS snapshots are), consistently small snapshots indicate that little data on the volume is changing, which can be a sign that the volume is underutilized.
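The capacity and I/O checks above can be combined into a simple per-volume report. This is a sketch under stated assumptions: the `VolumeStats` record and `storage_findings` function are hypothetical, used capacity is assumed to come from an in-guest agent (block-storage metrics generally report I/O, not filesystem usage), and the 25% and 1 IOPS cutoffs are illustrative defaults to tune for your workloads.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class VolumeStats:
    volume_id: str
    size_gb: float   # provisioned (billed) capacity
    used_gb: float   # filesystem usage, typically reported by an in-guest agent
    avg_iops: float  # average read+write operations per second over the window


def storage_findings(
    v: VolumeStats, min_used_ratio: float = 0.25, min_iops: float = 1.0
) -> List[str]:
    """Return human-readable reasons why a volume looks underutilized."""
    findings = []
    used_ratio = v.used_gb / v.size_gb
    if used_ratio < min_used_ratio:
        findings.append(f"only {used_ratio:.0%} of capacity used")
    if v.avg_iops < min_iops:
        findings.append("little or no I/O activity")
    return findings


# The 1 TB / 100 GB example from the text (volume ID is hypothetical).
print(storage_findings(VolumeStats("vol-example", 1000, 100, 0.2)))
# ['only 10% of capacity used', 'little or no I/O activity']
```

Returning reasons rather than a bare boolean makes the output usable directly in a review ticket.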

For example, consider an Azure managed disk with a capacity of 500 GB. If the disk consistently uses only 50 GB and exhibits low I/O activity, it is likely underutilized. Azure Monitor can be used to track these metrics. Because Azure managed disks cannot be shrunk in place, reducing the size means migrating the data to a new, smaller disk. Data that can be moved off the disk into Azure Blob Storage can additionally be governed by lifecycle management policies that move older data to cheaper tiers.

Identifying Network Resources Not Actively Processing Traffic

Identifying network resources that are not actively processing traffic is essential for cost optimization. Network resources such as load balancers, virtual private cloud (VPC) endpoints, and network address translation (NAT) gateways can incur significant costs even when idle, because many of them are billed per hour in addition to any per-GB data processing charges. Detecting idle network resources involves monitoring network traffic, connection counts, and the resource utilization metrics provided by the cloud provider, then pinpointing the resources that are not actively processing traffic.

- Monitoring Load Balancer Traffic: Monitor the number of requests processed by load balancers. Low request counts, or zero requests over an extended period, indicate that the load balancer is not actively processing traffic.

- Analyzing Connection Counts: Track the number of active connections to network resources. Low or zero connection counts suggest that the resource is not being utilized.

- Monitoring Network Traffic Metrics: Monitor network traffic in and out of network resources, such as NAT gateways and VPC endpoints. Low traffic volumes indicate that the resources are not actively being used.

- Utilizing Cloud Provider Tools: Cloud providers offer tools to monitor network resources and identify underutilization. For example, AWS CloudWatch can be used to monitor load balancer metrics, and Azure Monitor can be used to monitor network traffic.

- Reviewing Resource Logs: Examine resource logs for any signs of activity or inactivity. Logs can provide valuable insights into the applications or services using the network resources and their traffic patterns.

- Checking Health Checks: Ensure health checks are configured correctly and are passing. If health checks are failing, the load balancer might not be directing traffic to the backend instances.
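The traffic checks above reduce to one question per resource: did its request or byte count over the observation window stay at or near zero? The sketch below assumes the per-period metric values have already been exported into a dictionary; the resource IDs and the `find_idle` helper are hypothetical. An empty series is treated as idle because some services publish no datapoints at all when there is no traffic.

```python
from typing import Dict, List, Sequence


def find_idle(series: Dict[str, Sequence[float]], max_total: float = 0.0) -> List[str]:
    """Return IDs of resources whose metric total over the window is at most
    `max_total`. An empty series counts as idle (sum of nothing is 0)."""
    return sorted(rid for rid, values in series.items() if sum(values) <= max_total)


# Hypothetical per-hour request counts for three load balancers.
lb_requests = {"lb-web": [120, 98, 143], "lb-legacy": [0, 0, 0], "lb-old": []}
print(find_idle(lb_requests))  # ['lb-legacy', 'lb-old']

# Hypothetical bytes processed per hour by two NAT gateways;
# treat a window total of at most 1 MB as idle.
nat_bytes = {"nat-a": [5e9, 4e9, 6e9], "nat-b": [2e5, 1e5, 3e5]}
print(find_idle(nat_bytes, max_total=1e6))  # ['nat-b']
```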

For instance, a load balancer that has served zero requests per minute for an extended period can be considered idle. An administrator should first investigate why there is no traffic: the cause might be an application misconfiguration, or the load balancer may simply no longer be needed. If it is not needed, deleting it eliminates its ongoing charges.

Another example involves a NAT gateway. If a NAT gateway consistently processes very little traffic, it may be underutilized. Because managed NAT gateways scale automatically and cannot be resized, the administrator's options are to consolidate traffic through fewer gateways or to remove the gateway if it is no longer needed.

Right-Sizing Compute Instances

Optimizing the size of your compute instances is a critical strategy for minimizing cloud resource waste. This involves ensuring that your instances have the appropriate resources (CPU, memory, storage) to meet the demands of your workloads without being over-provisioned. Right-sizing not only reduces costs but also improves performance by preventing resource bottlenecks.

This section outlines procedures for determining optimal instance sizes, methods for automating resizing, and a comparison of manual and automated approaches.

Determining Optimal Instance Size Based on Historical Usage Data

Analyzing historical usage data is fundamental to determining the optimal size for your compute instances. This involves collecting and interpreting metrics related to CPU utilization, memory consumption, disk I/O, and network traffic over a defined period. This data provides a baseline understanding of resource requirements.

- Data Collection: Implement comprehensive monitoring tools to gather relevant metrics from your compute instances. Examples include:

- CloudWatch (AWS)

- Azure Monitor (Azure)

- Cloud Monitoring (Google Cloud, formerly Stackdriver)

- Third-party monitoring solutions like Datadog or New Relic

Collect data at a granular level (e.g., every 5 minutes) to capture fluctuations in resource usage.

- Data Analysis: Analyze the collected data to identify patterns, trends, and peaks in resource utilization.

- CPU Utilization: Determine the average and peak CPU utilization percentages. Instances consistently operating below 40% CPU utilization might be candidates for downsizing.

- Memory Utilization: Monitor memory usage to identify potential memory bottlenecks. If instances consistently have low memory utilization, they may be over-provisioned.

- Disk I/O: Analyze disk read/write operations to identify instances with storage performance issues. Consistently high disk I/O could indicate the need for faster storage (for example, provisioned IOPS volumes) or a storage-optimized instance type.

- Network Traffic: Track network traffic to ensure instances have sufficient network bandwidth.

- Threshold Definition: Define thresholds for each metric to identify instances that are either underutilized or overutilized. For example:

- Underutilized: Instances consistently using less than 40% CPU or memory.

- Overutilized: Instances consistently using more than 80% CPU or experiencing frequent memory swapping.

- Instance Sizing Recommendations: Based on the analysis, provide recommendations for instance sizing. For example:

- If an instance consistently uses 30% CPU and 40% memory, suggest downsizing to a smaller instance type.

- If an instance frequently experiences CPU spikes above 90%, recommend upgrading to a larger instance type.

- Iteration and Refinement: Continuously monitor and analyze the data. Adjust instance sizes and thresholds as needed. Cloud environments are dynamic, and resource requirements can change over time.
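The thresholds and recommendations above can be expressed as a small decision function. This is a sketch with assumptions called out: the `recommend` function is hypothetical; it treats an instance as a downsizing candidate only when both CPU and memory are at or below 40% (a conservative reading that matches the 30% CPU / 40% memory example); and it approximates "frequent spikes above 90%" with a 95th-percentile CPU above 90%.

```python
def recommend(
    avg_cpu: float,
    p95_cpu: float,
    avg_mem: float,
    swapping: bool = False,
    low: float = 40.0,
    high: float = 80.0,
    spike: float = 90.0,
) -> str:
    """Map utilization statistics (percentages) to a sizing recommendation.

    Assumption: "frequent spikes above 90%" is approximated as a 95th-percentile
    CPU above `spike`, i.e. roughly 5% or more of the time spent there.
    """
    if avg_cpu > high or p95_cpu > spike or swapping:
        return "upsize"
    if avg_cpu <= low and avg_mem <= low:
        return "downsize"
    return "keep"


print(recommend(avg_cpu=30, p95_cpu=45, avg_mem=40))  # downsize
print(recommend(avg_cpu=55, p95_cpu=95, avg_mem=50))  # upsize
```

Checking the upsize conditions first means a starved instance is never recommended for downsizing, even if its average CPU looks low.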

Consider an example: A web application hosted on AWS EC2 instances experiences periods of low traffic during off-peak hours. Historical data analysis reveals that CPU utilization consistently stays below 20% during these times. Based on this analysis, the organization can reduce capacity during off-peak hours to cut costs and scale it back up when traffic increases during peak hours to maintain performance. Because changing an EC2 instance's type requires stopping the instance, this is usually done by running fewer instances off-peak rather than by resizing running ones.

Automating Instance Resizing Based on Real-Time Resource Demands

Automating instance resizing provides a dynamic approach to optimizing resource utilization. Automation tools and scaling services adjust capacity, most often the number of running instances, in response to real-time resource demands. This reduces the need for manual intervention and keeps resource allocation close to actual need.

- Implementation of Auto Scaling: Implement auto-scaling features available in most cloud platforms.

- AWS Auto Scaling: Provides automatic scaling for EC2 instances.

- Azure Virtual Machine Scale Sets: Enables automatic scaling for Azure VMs.

- Compute Engine autoscaler: Automatically adds or removes instances in Google Compute Engine managed instance groups.

Auto-scaling groups can be configured to automatically add or remove instances based on defined metrics, such as CPU utilization or network traffic.

- Configuration of Scaling Policies: Define scaling policies based on real-time metrics. For example:

- Scale Out: Add more instances when CPU utilization exceeds a threshold (e.g., 80%).

- Scale In: Remove instances when CPU utilization falls below a threshold (e.g., 40%).

Scaling policies can also be based on other metrics, such as memory utilization, disk I/O, or custom metrics.

- Scheduling: Implement scheduled scaling to proactively adjust capacity based on predictable traffic patterns. For example:

- Scale up instances before peak hours.

- Scale down instances during off-peak hours.

- Monitoring and Alerting: Continuously monitor the performance of auto-scaling groups and set up alerts to notify administrators of any issues.

- Monitor scaling events to ensure instances are scaling as expected.

- Set up alerts for scaling failures or other anomalies.

- Testing and Validation: Test scaling policies and configurations to ensure they function correctly.

- Simulate load to verify that instances scale up and down as expected.

- Monitor instance performance after scaling events.

Consider a real-world example: An e-commerce website experiences a surge in traffic during a flash sale. Using auto-scaling, the system automatically detects the increased load and launches additional instances to handle the demand. Once the sale ends and traffic decreases, the auto-scaling system automatically removes the extra instances, reducing costs. This dynamic adjustment ensures optimal performance and cost efficiency.

Comparison of Manual vs. Automated Right-Sizing Approaches

Both manual and automated approaches to right-sizing have their advantages and disadvantages. Understanding these differences can help you choose the best approach for your specific needs.

| Feature | Manual Right-Sizing | Automated Right-Sizing | Key Advantage | Key Drawback |
|---|---|---|---|---|
| Responsiveness | Slow; requires manual intervention. | Fast; responds to real-time changes. | Manual: provides control and allows for human judgment. | Manual: can lead to delays and potential performance issues. |
| Efficiency | Can be inefficient if not performed regularly. | Highly efficient; continuously optimizes resource usage. | Manual: allows for fine-tuning based on expert knowledge. | Automated: requires careful configuration and monitoring to avoid unexpected behavior. |
| Cost Optimization | Can be cost-effective if done frequently and correctly. | Highly cost-effective; reduces idle resources automatically. | Manual: can be adapted to specific requirements and allows for customization. | Automated: may require specialized skills for configuration and maintenance. |
| Complexity | Simple to understand and implement initially. | More complex to set up initially. | Manual: simple to set up and manage initially. | Automated: can be more challenging to troubleshoot and maintain. |

Automated Scaling Strategies

Automated scaling is a crucial approach to minimizing idle cloud resources by dynamically adjusting the resources allocated to an application based on its real-time needs. This proactive management strategy prevents over-provisioning during periods of low demand and ensures sufficient resources during peak loads, optimizing both performance and cost. Implementing automated scaling involves setting up rules and policies that trigger the addition or removal of instances based on specific metrics.

Auto-Scaling Groups for Dynamic Instance Adjustment

Auto-scaling groups (ASGs) are fundamental components of automated scaling in cloud environments. They automatically adjust the number of instances running for an application based on predefined policies. ASGs continuously monitor the health and performance of their instances, and launch new instances or terminate existing ones to maintain the desired performance levels and resource utilization. To use ASGs effectively, you define:

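- Desired Capacity: The number of instances the ASG tries to maintain at any given time. You set it when creating the group, and scaling policies then adjust it within the minimum and maximum.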

- Minimum Capacity: The smallest number of instances the ASG will keep running.

- Maximum Capacity: The largest number of instances the ASG is allowed to launch.

- Launch Template: Specifies how new instances are launched, including the Amazon Machine Image (AMI), instance type, security groups, and other settings. AWS recommends launch templates over the older launch configurations.

- Scaling Policies: Define the conditions under which the ASG should scale out (add instances) or scale in (remove instances).

For example, an ASG can be configured to maintain a minimum of two instances to ensure high availability. During a traffic spike, the scaling policy might add more instances, up to a maximum of ten, to handle the increased load. When the traffic subsides, the ASG can automatically remove instances, reducing costs.

Different Scaling Policies

Scaling policies are the heart of automated scaling, dictating how an ASG responds to changes in resource demand. These policies are based on various metrics that reflect the performance and utilization of the instances. Several common scaling policies are available:

- CPU Utilization: This is a common metric. If the average CPU utilization of the instances in the ASG exceeds a predefined threshold (e.g., 70%), the ASG will scale out by launching additional instances. Conversely, if the CPU utilization drops below another threshold (e.g., 30%), the ASG will scale in by terminating instances.

- Network Traffic: Network traffic, both inbound and outbound, can be used to trigger scaling actions. For example, if the average network in/out bytes per instance exceeds a certain threshold, the ASG can scale out to handle increased network load.

- Request Count: For web applications, the number of requests per second or minute can be a useful metric. If the number of requests exceeds a threshold, the ASG can add instances.

- Custom Metrics: Cloud providers allow the use of custom metrics, enabling scaling based on application-specific performance indicators. This could include the number of database queries, the size of a processing queue, or any other relevant metric.

Scaling policies can also incorporate cool-down periods, which prevent the ASG from reacting too quickly to short-term fluctuations.
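To make the interplay of thresholds, cool-down periods, and minimum/maximum capacity concrete, here is a simplified model in plain Python. It is an illustrative sketch, not how any provider implements scaling: the `ScalingGroup` class and `evaluate` function are hypothetical, and the 70%/30% thresholds, 300-second cool-down, and 2-to-10 instance range are taken from or modeled on the examples above.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ScalingGroup:
    desired: int
    minimum: int
    maximum: int
    cooldown_s: float = 300.0
    last_action_at: Optional[float] = None  # timestamp (seconds) of the last change


def evaluate(
    group: ScalingGroup,
    avg_cpu: float,
    now: float,
    scale_out_at: float = 70.0,
    scale_in_at: float = 30.0,
    step: int = 1,
) -> int:
    """Apply a simple threshold policy and return the new desired capacity."""
    if group.last_action_at is not None and now - group.last_action_at < group.cooldown_s:
        return group.desired  # still cooling down; let the last change take effect
    if avg_cpu > scale_out_at:
        target = group.desired + step
    elif avg_cpu < scale_in_at:
        target = group.desired - step
    else:
        return group.desired
    target = max(group.minimum, min(group.maximum, target))  # respect min/max
    if target != group.desired:
        group.desired = target
        group.last_action_at = now
    return group.desired


group = ScalingGroup(desired=2, minimum=2, maximum=10)
for t, cpu in [(0, 85), (60, 90), (400, 90), (800, 10), (1200, 10), (1600, 10)]:
    print(t, evaluate(group, cpu, now=t))
```

In this run the second high-CPU reading at t=60 is ignored because the group is still cooling down, and the final scale-in is blocked by the minimum of two instances.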

Configuring Auto-Scaling Based on Scheduled Events

Scheduled scaling allows for the automation of scaling actions based on a predefined schedule. This is particularly useful for applications with predictable traffic patterns, such as those with peak hours or known periods of high demand. Here’s how scheduled scaling works:

- Define the Schedule: Specify the time and date when the scaling action should occur.

- Define the Action: Determine the desired number of instances at the scheduled time. This can involve setting the desired capacity, or adding or removing a specific number of instances.

- Set the Recurrence: Optionally, define how often the schedule should repeat (e.g., daily, weekly).

For example, an e-commerce website might anticipate a surge in traffic during evening hours. A scheduled scaling action could be configured to increase the desired capacity of the ASG at 6 PM every day and then reduce it at midnight, ensuring sufficient resources during peak hours and minimizing costs during off-peak times. The following table provides an example of a scheduled scaling configuration:

| Schedule Name | Start Time (UTC) | End Time (UTC) | Recurrence | Action |
|---|---|---|---|---|
| Peak Hours | 6:00 PM | 12:00 AM | Daily | Set desired capacity to 10 instances |
| Off-Peak | 12:00 AM | 6:00 PM | Daily | Set desired capacity to 2 instances |

Scheduled actions like these can be combined with dynamic scaling policies, such as CPU utilization thresholds: the schedule sets the baseline capacity for each period, and the dynamic policy handles unexpected deviations from it.
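The schedule in the table can be modeled as UTC time windows, including the peak window that ends at midnight. In practice, providers implement scheduled scaling as point-in-time actions rather than windows, so this is a sketch that only shows which capacity each period calls for; the `SCHEDULE` list and function names are hypothetical.

```python
from datetime import datetime, time, timezone
from typing import List, Tuple

# The two scheduled actions from the table: (start, end, desired capacity), in UTC.
# `end` is exclusive; a window whose end is earlier than its start wraps past midnight.
SCHEDULE: List[Tuple[time, time, int]] = [
    (time(18, 0), time(0, 0), 10),  # Peak Hours
    (time(0, 0), time(18, 0), 2),   # Off-Peak
]


def in_window(t: time, start: time, end: time) -> bool:
    if start < end:
        return start <= t < end
    return t >= start or t < end  # window wraps past midnight


def desired_capacity(now: datetime) -> int:
    t = now.astimezone(timezone.utc).time()
    for start, end, desired in SCHEDULE:
        if in_window(t, start, end):
            return desired
    raise ValueError(f"no scheduled window covers {t}")


print(desired_capacity(datetime(2024, 1, 15, 19, 30, tzinfo=timezone.utc)))  # 10
print(desired_capacity(datetime(2024, 1, 15, 9, 0, tzinfo=timezone.utc)))    # 2
```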

Implementing Scheduling and Automation

Scheduling and automation are critical for optimizing cloud resource utilization and minimizing idle time. By strategically controlling the start and stop times of instances, and automating resource deallocation, organizations can significantly reduce costs and improve efficiency. This section explores the tools, best practices, and considerations for implementing effective scheduling and automation strategies.

Tools and Services for Scheduling Instance Start and Stop Times

Several cloud providers offer native services and tools designed to schedule instance start and stop times. These services enable automated management of compute resources, aligning resource availability with actual demand.

- AWS: AWS provides several options for scheduling, including:

- Instance Scheduler on AWS: An open-source AWS solution, deployed with AWS CloudFormation templates, that starts and stops EC2 instances (and Amazon RDS instances) at predefined times, supporting various time zones and instance types. The solution itself is free to use, but the AWS resources it deploys incur standard charges.

- AWS Systems Manager Automation: Systems Manager allows users to create automation runbooks to start and stop EC2 instances based on schedules. These runbooks can include custom logic and integrate with other AWS services.

- AWS CloudWatch Events (now Amazon EventBridge): EventBridge can trigger actions based on schedules. For instance, you can set up a cron expression to start or stop an EC2 instance at specific times.

- Microsoft Azure: Azure offers several scheduling capabilities:

- Azure Automation: Azure Automation allows users to create runbooks (using PowerShell or Python) to automate the start and stop of virtual machines. These runbooks can be scheduled to run at specific times.

- Azure Logic Apps: Logic Apps can be used to create workflows that include scheduling triggers to start and stop virtual machines.

- Azure Resource Manager (ARM) templates: ARM templates can be used to deploy resources with scheduled start and stop times, using tags and automation runbooks.

- Google Cloud Platform (GCP): GCP provides scheduling options through:

- Cloud Scheduler: Cloud Scheduler is a fully managed cron job scheduler that allows you to schedule tasks, including starting and stopping Compute Engine instances, at specific times.

- Cloud Functions: Cloud Functions can be triggered by Cloud Scheduler to perform actions like starting or stopping instances.

- Compute Engine API: Using the Compute Engine API and scripting, you can create custom scheduling solutions.

- Third-party Tools: In addition to native cloud provider services, various third-party tools integrate with multiple cloud platforms to provide advanced scheduling capabilities. These tools often offer features such as centralized management, reporting, and integration with other IT systems.

Best Practices for Automating Resource Deallocation During Off-Peak Hours

Automating resource deallocation during off-peak hours is a key strategy for minimizing costs. Implementing these best practices ensures resources are only active when needed.

- Identify Off-Peak Hours: Analyze resource usage patterns to determine the periods when resources are least utilized. Use monitoring tools to gather data on CPU utilization, network traffic, and other relevant metrics.

- Implement Scheduling: Use the scheduling tools and services discussed above to automatically stop instances during off-peak hours.

- Automate Graceful Shutdown: Ensure instances are shut down gracefully rather than abruptly. This can involve sending shutdown signals to the operating system and saving any necessary data before the instance is stopped.

- Consider Data Persistence: Decide how to handle data stored on instances. For example, you might:

- Use persistent storage: Store data on persistent storage volumes (e.g., Amazon EBS, Azure Managed Disks, Google Persistent Disk) so that it is retained when instances are stopped. Note that these volumes continue to incur storage charges while the instance is stopped.

- Back up data: Regularly back up data to a separate storage location (e.g., Amazon S3, Azure Blob Storage, Google Cloud Storage) to ensure data recovery.

- Delete temporary data: If the data is temporary, it can be deleted when the instance is stopped.

- Test and Monitor: Thoroughly test the scheduling and automation processes to ensure they function correctly. Continuously monitor resource utilization and adjust schedules as needed.

- Use Tags and Metadata: Tag instances with metadata to categorize them and facilitate automated management. For example, you can use tags to identify instances that should be scheduled or to specify the appropriate backup strategy.
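Tag-driven scheduling, as described above, usually comes down to a function that reads an instance's tags and the current time and decides whether the instance should be running. The sketch below assumes a hypothetical `Schedule` tag whose value names an entry in a hypothetical `SCHEDULES` table; a real scheduler would then call the provider's start or stop API based on the result.

```python
from datetime import datetime, timezone
from typing import Dict, Tuple

# Hypothetical tag values mapped to (weekdays, start hour, end hour) in UTC.
# weekday(): Monday == 0 ... Sunday == 6; the end hour is exclusive.
SCHEDULES: Dict[str, Tuple[range, int, int]] = {
    "office-hours": (range(0, 5), 8, 18),  # Monday-Friday, 08:00-18:00 UTC
}


def should_run(tags: Dict[str, str], now: datetime) -> bool:
    """Decide whether an instance should be running, based on its Schedule tag."""
    window = SCHEDULES.get(tags.get("Schedule", ""))
    if window is None:
        return True  # untagged or unknown schedule: fail safe and leave it running
    days, start_hour, end_hour = window
    now_utc = now.astimezone(timezone.utc)
    return now_utc.weekday() in days and start_hour <= now_utc.hour < end_hour


monday_9am = datetime(2024, 1, 15, 9, 0, tzinfo=timezone.utc)
monday_8pm = datetime(2024, 1, 15, 20, 0, tzinfo=timezone.utc)
print(should_run({"Schedule": "office-hours"}, monday_9am))  # True
print(should_run({"Schedule": "office-hours"}, monday_8pm))  # False
print(should_run({}, monday_8pm))                            # True
```

Defaulting to "keep running" for untagged or misspelled tags means a tagging mistake costs money rather than causing an outage, which is usually the safer failure mode.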

Considerations for Scheduling Resources in Different Time Zones

When managing resources across multiple time zones, it’s essential to account for the differences in local time to ensure instances start and stop at the intended times.

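- Use UTC: Whenever possible, use Coordinated Universal Time (UTC) as the basis for scheduling. UTC does not observe daylight saving time, so UTC schedules never shift and time zone conversions stay simple. Keep in mind, however, that a fixed UTC schedule shifts by an hour relative to local business hours in regions that observe DST.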

- Time Zone Conversions: If schedules must follow local business hours, let the scheduling tool perform the conversion by specifying a time zone for each schedule rather than hard-coding UTC offsets. For example, Instance Scheduler on AWS allows you to specify the time zone for each schedule.

- Account for Daylight Saving Time (DST): DST can shift the start and stop times of instances. Ensure your scheduling tools are configured to handle DST changes correctly. Some tools automatically adjust for DST.

- Define Schedules per Time Zone: For applications spanning multiple time zones, consider creating separate schedules for each time zone. This allows you to tailor the resource availability to the specific needs of each location.

- Test Thoroughly: Test scheduling configurations across different time zones to verify that instances start and stop at the correct local times.

- Monitor and Adjust: Continuously monitor the performance and resource utilization in each time zone. Make adjustments to the schedules as needed to optimize resource allocation and minimize costs.
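The DST issue above is easy to see by converting the same local start time to UTC in winter and in summer. This sketch uses Python's standard `zoneinfo` module (Python 3.9+, which needs the system time zone database or the `tzdata` package); the `local_to_utc` helper and the 08:00 New York start time are hypothetical examples.

```python
from datetime import date, datetime, time, timezone
from zoneinfo import ZoneInfo


def local_to_utc(day: date, local_time: time, tz_name: str) -> datetime:
    """Convert a local wall-clock time on a given day to UTC, honoring DST."""
    local = datetime.combine(day, local_time, tzinfo=ZoneInfo(tz_name))
    return local.astimezone(timezone.utc)


start = time(8, 0)  # hypothetical 08:00 local start time
print(local_to_utc(date(2024, 1, 15), start, "America/New_York"))  # 2024-01-15 13:00:00+00:00
print(local_to_utc(date(2024, 7, 15), start, "America/New_York"))  # 2024-07-15 12:00:00+00:00
```

A schedule hard-coded to 13:00 UTC would start these instances at 8 AM local time in winter but at 9 AM in summer, which is why specifying an IANA time zone per schedule is usually the more robust choice for local business hours.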

Optimizing Storage Utilization

Efficiently managing cloud storage is critical for controlling costs. Underutilized storage resources represent wasted expenditure, while inefficient storage configurations can impact performance. This section focuses on strategies for identifying, archiving, and optimizing cloud storage to minimize expenses and maximize efficiency.

Identifying and Removing Unused or Orphaned Storage Volumes

Identifying and removing unused or orphaned storage volumes is essential for preventing unnecessary storage costs. These volumes consume resources without providing any value. The process involves several steps:

- Inventory and Analysis: Begin by creating an inventory of all storage volumes. Analyze volume usage patterns, including last access times and data transfer rates. Cloud providers offer tools to facilitate this, such as AWS CloudWatch, Azure Monitor, and Google Cloud Monitoring.

- Identification of Unused Volumes: Identify volumes that have not been accessed for an extended period. Define a threshold based on your data retention policies and business requirements. A common threshold is 30, 60, or 90 days of inactivity.

- Identification of Orphaned Volumes: Orphaned volumes are those that are no longer attached to any compute instance. This can occur due to instance termination or misconfigurations. These volumes should be identified and reviewed for potential data retention requirements.

- Data Validation and Backup: Before deleting any volume, validate the data contained within it. Ensure that any critical data is backed up. Cloud providers offer snapshot and backup services for this purpose.

- Deletion: Once the data is validated and backed up (if necessary), the unused or orphaned volumes can be safely deleted.
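The identification steps above can be sketched as a function that sorts volumes into "orphaned" (not attached to any instance) and "inactive" (attached but idle past the retention threshold). The `Volume` record and `review_candidates` function are hypothetical; in AWS, for example, an unattached EBS volume reports the state `available`. The output is a review list, not a delete list: the validation and backup steps still apply before anything is removed.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Dict, List, Optional


@dataclass
class Volume:
    volume_id: str
    attached_to: Optional[str]  # instance ID, or None if not attached
    last_activity: datetime     # last observed read/write activity


def review_candidates(
    volumes: List[Volume], now: datetime, idle_days: int = 90
) -> Dict[str, str]:
    """Map volume IDs to the reason they need review (not deletion yet)."""
    cutoff = now - timedelta(days=idle_days)
    result: Dict[str, str] = {}
    for v in volumes:
        if v.attached_to is None:
            result[v.volume_id] = "orphaned"
        elif v.last_activity < cutoff:
            result[v.volume_id] = f"inactive for more than {idle_days} days"
    return result


utc = timezone.utc
now = datetime(2024, 6, 1, tzinfo=utc)
volumes = [  # hypothetical volume and instance IDs
    Volume("vol-1", "i-aaa", datetime(2024, 5, 30, tzinfo=utc)),
    Volume("vol-2", None, datetime(2024, 5, 1, tzinfo=utc)),
    Volume("vol-3", "i-bbb", datetime(2024, 1, 1, tzinfo=utc)),
]
print(review_candidates(volumes, now))
```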

For example, a company using AWS might find several EBS volumes that have not been accessed for over three months. After validating that the data is no longer needed and backing up any essential information, these volumes can be deleted, immediately reducing storage costs. The same process can be replicated in other cloud environments like Azure or Google Cloud Platform using their respective storage services and monitoring tools.

Methods for Archiving Infrequently Accessed Data to Lower-Cost Storage Tiers

Archiving infrequently accessed data to lower-cost storage tiers significantly reduces storage expenses. Cloud providers offer various storage tiers, with varying performance and cost characteristics. The following methods can be employed:

- Data Classification: Classify data based on its access frequency and business value. Data accessed frequently should remain in high-performance storage, while infrequently accessed data is a candidate for archiving.

- Tiering Policies: Implement policies to automatically move data between storage tiers based on access patterns. For instance, data that has not been accessed for a specific period (e.g., 60 days) can be automatically moved to a lower-cost archive tier.

- Lifecycle Management: Utilize lifecycle management features offered by cloud providers. AWS offers S3 Lifecycle policies, Azure provides Blob Storage lifecycle management, and Google Cloud offers Cloud Storage lifecycle management. These features automate the transition of data between storage tiers.

- Object Storage for Archiving: Use object storage services, such as AWS S3 Glacier, Azure Archive Storage, or Google Cloud Storage Archive, for archiving. These tiers are designed for long-term data storage at a significantly lower cost.
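As one concrete instance of the lifecycle management described above, an Amazon S3 lifecycle rule can be written as a plain Python dictionary in the shape boto3 expects. Note that S3 lifecycle transitions are based on object age (days since creation), not last access; access-based tiering is what S3 Intelligent-Tiering provides. The `archive_rule` helper, the `logs/` prefix, and the bucket name are hypothetical, and the API call is left commented out so the sketch runs without AWS credentials.

```python
from typing import Any, Dict


def archive_rule(prefix: str, days: int, storage_class: str = "GLACIER") -> Dict[str, Any]:
    """Build one S3 lifecycle rule that transitions objects under `prefix`
    to `storage_class` once they are `days` days old (age, not last access)."""
    return {
        "ID": f"archive-{prefix.strip('/') or 'all'}-after-{days}d",
        "Filter": {"Prefix": prefix},
        "Status": "Enabled",
        "Transitions": [{"Days": days, "StorageClass": storage_class}],
    }


lifecycle = {"Rules": [archive_rule("logs/", 60)]}
print(lifecycle)

# With boto3 installed and credentials configured, the rule could be applied with:
# import boto3
# boto3.client("s3").put_bucket_lifecycle_configuration(
#     Bucket="example-bucket", LifecycleConfiguration=lifecycle)
```

Be aware that `put_bucket_lifecycle_configuration` replaces the bucket's entire existing lifecycle configuration, so any existing rules must be included in the same call.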

Consider a healthcare provider storing patient records. Active records might reside in a high-performance storage tier, while older records, required for compliance but rarely accessed, are moved to an archive tier. This approach reduces storage costs while maintaining compliance. A retail company might use similar methods for its sales logs. Current sales data would be kept in a higher-performance tier, while historical sales data would be archived.

This practice of moving cold data to cold storage is a fundamental concept in cloud cost optimization.

Creating a Guide to Optimizing Storage Performance While Minimizing Costs

Optimizing storage performance while minimizing costs involves a combination of selecting appropriate storage types, right-sizing volumes, and implementing performance-enhancing techniques. Here is a guide to achieving this:

- Storage Type Selection: Choose the appropriate storage type based on workload requirements.

  - For high-performance workloads (e.g., databases): Use SSD-backed storage (e.g., AWS EBS Provisioned IOPS, Azure Premium SSD, Google Persistent Disk SSD).

  - For general-purpose workloads: Utilize general-purpose SSD storage (e.g., AWS EBS General Purpose SSD, Azure Standard SSD, Google Persistent Disk Balanced).

  - For archival storage: Leverage object storage services with archive tiers (e.g., AWS S3 Glacier, Azure Archive Storage, Google Cloud Storage Archive).

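- Right-Sizing Storage Volumes: Provision storage volumes that match the actual storage requirements, because over-provisioning leads to unnecessary costs. Monitor storage utilization regularly and adjust volume sizes as needed. Most block storage services let you grow a volume in place but not shrink it, so reducing a volume's size typically means migrating the data to a new, smaller volume.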

- Data Compression: Implement data compression techniques to reduce the amount of storage required. This can be done at the application level or through storage-specific features.

- Data Deduplication: Use data deduplication to eliminate redundant data blocks, reducing storage space. This is particularly beneficial for storing backups and large datasets.

- Caching Strategies: Implement caching mechanisms to improve read performance. Use caching layers (e.g., Redis, Memcached) to store frequently accessed data in memory.

- Performance Monitoring: Continuously monitor storage performance metrics, such as IOPS, throughput, and latency. Use these metrics to identify performance bottlenecks and optimize storage configurations.

- Storage Tiering: Implement automated storage tiering policies to move data between different storage tiers based on access frequency and performance needs.

For instance, a company running a web application might start with general-purpose SSD storage for its application data. As the application grows, they can monitor storage performance and move frequently accessed data to a higher-performance tier. Additionally, they could implement data compression to reduce storage costs. A media streaming service could use a tiered approach. The active videos would be stored on a high-performance tier, while older videos would be archived.
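The right-sizing step can be sketched as a small calculation. Given the space actually used on each volume, it recommends a size that leaves some free headroom and flags volumes provisioned far beyond that size. The 25% headroom, the 1.5x slack ratio, and the volume IDs are illustrative assumptions. The usage figures would come from your own monitoring, for example filesystem usage reported by an agent on the instance.

```python
import math
from typing import Iterable, Iterator, Tuple


def recommend_volume_gib(used_gib: float, headroom: float = 0.25, min_gib: int = 1) -> int:
    """Smallest whole-GiB size that keeps `headroom` (a fraction) of the volume free."""
    if not 0 <= headroom < 1:
        raise ValueError("headroom must be in [0, 1)")
    return max(min_gib, math.ceil(used_gib / (1 - headroom)))


def flag_overprovisioned(
    volumes: Iterable[Tuple[str, int, float]],
    headroom: float = 0.25,
    slack_ratio: float = 1.5,
) -> Iterator[Tuple[str, int, int]]:
    """Yield (volume_id, provisioned_gib, recommended_gib) for volumes sized well above need."""
    for volume_id, provisioned_gib, used_gib in volumes:
        recommended = recommend_volume_gib(used_gib, headroom)
        if provisioned_gib > recommended * slack_ratio:
            yield volume_id, provisioned_gib, recommended


# Hypothetical inventory: (volume ID, provisioned GiB, used GiB)
inventory = [("vol-a", 500, 60.0), ("vol-b", 100, 70.0)]
for vol_id, provisioned, recommended in flag_overprovisioned(inventory):
    print(f"{vol_id}: provisioned {provisioned} GiB, about {recommended} GiB would do")
```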

Network Resource Optimization

Optimizing network resources is critical for minimizing cloud costs and ensuring application performance. Inefficient network configurations can lead to unnecessary expenses related to data transfer, load balancing, and network infrastructure. Effective optimization strategies involve careful analysis of network traffic patterns, resource utilization, and the implementation of best practices. This section explores various techniques to streamline network resource usage, leading to significant cost savings and improved operational efficiency.

Optimizing Load Balancer Configurations

Load balancers are essential for distributing traffic across multiple compute instances, enhancing application availability and scalability. Properly configured load balancers are crucial for handling varying traffic loads efficiently, minimizing latency, and preventing resource exhaustion. To optimize load balancer configurations, consider these strategies:

- Traffic Analysis and Pattern Identification: Regularly analyze traffic patterns to understand peak loads, average traffic, and the distribution of traffic over time. This analysis informs decisions about scaling and resource allocation. For instance, a retail website might experience peak traffic during sales events, requiring the load balancer to handle a significantly higher volume of requests compared to off-peak hours.

- Auto-Scaling Load Balancers: Managed load balancing services from AWS, Azure, and Google Cloud scale their own capacity as traffic changes, so sudden spikes need no manual intervention at the load balancer itself. Pair them with auto-scaling backend groups so that the pool of instances behind the load balancer also grows and shrinks with demand.

- Health Checks and Monitoring: Configure robust health checks to monitor the health of backend instances. These checks ensure that traffic is only directed to healthy instances, preventing performance degradation. Define health check intervals and thresholds to identify failing instances promptly.

- Connection Pooling and Session Management: Optimize connection pooling and session management settings to reduce the overhead of establishing and maintaining connections. Proper configuration of connection timeouts and session affinity can significantly improve performance.

- Caching and Content Delivery Networks (CDNs): Utilize caching mechanisms and CDNs to reduce the load on the load balancer and backend servers. Caching static content closer to the users improves response times and reduces the number of requests handled by the load balancer. For example, a news website can use a CDN to cache images and videos, decreasing the load on the origin servers.

- Protocol Optimization: Enable newer protocols such as HTTP/2 and HTTP/3 where your load balancer and clients support them. These protocols improve on HTTP/1.1, for example by multiplexing multiple requests over a single connection. Support for them on the connection between the load balancer and backend instances varies by provider, so check what is available on each side.

- Load Balancing Algorithms: Choose the load balancing algorithm that best suits application requirements. Common algorithms include round robin, least connections, and IP hash. For example, if maintaining user sessions is critical, the IP hash algorithm routes requests from the same client IP address to the same backend instance, as long as the pool of backends does not change.
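To make the three algorithms concrete, here is a minimal in-process sketch of how each one picks a backend. It is an illustration, not how any particular cloud load balancer is implemented, and the backend names are hypothetical. The IP hash uses SHA-256 because Python's built-in `hash()` for strings is randomized per process and would not give stable affinity.

```python
import hashlib
import itertools
from typing import Dict, List, Optional


class RoundRobin:
    """Hand out backends in a fixed rotation."""

    def __init__(self, backends: List[str]) -> None:
        self._cycle = itertools.cycle(backends)

    def pick(self, client_ip: Optional[str] = None) -> str:
        return next(self._cycle)


class LeastConnections:
    """Send each request to the backend with the fewest active connections."""

    def __init__(self, backends: List[str]) -> None:
        self.active: Dict[str, int] = {b: 0 for b in backends}

    def pick(self, client_ip: Optional[str] = None) -> str:
        # min() returns the first backend among ties, i.e. the earliest listed.
        backend = min(self.active, key=lambda b: self.active[b])
        self.active[backend] += 1
        return backend

    def release(self, backend: str) -> None:
        """Call when a connection to `backend` closes."""
        self.active[backend] -= 1


class IpHash:
    """Map each client IP to a backend deterministically (session affinity)."""

    def __init__(self, backends: List[str]) -> None:
        self.backends = list(backends)

    def pick(self, client_ip: str) -> str:
        digest = hashlib.sha256(client_ip.encode()).digest()
        return self.backends[int.from_bytes(digest[:8], "big") % len(self.backends)]


backends = ["app-1", "app-2", "app-3"]
rr = RoundRobin(backends)
print([rr.pick() for _ in range(4)])
```

The IP hash takes its result modulo the number of backends, so adding or removing a backend remaps many clients to different instances. Affinity based on IP hash therefore holds only while the pool is stable.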

Identifying and Removing Unused Network Interfaces and VPCs

Unused network interfaces and virtual private clouds (VPCs) add clutter and can carry ongoing costs through attached resources such as NAT gateways, public IP addresses, and VPN connections. Identifying and removing these idle resources is a straightforward way to optimize network resource utilization. To identify and remove unused network interfaces and VPCs, use these techniques:

- Regular Audits: Conduct regular audits of network interfaces and VPCs to identify those that are not actively in use. Utilize cloud provider tools or third-party monitoring solutions to gather data on resource utilization.

- Utilization Metrics Analysis: Analyze network interface utilization metrics such as data transfer, packet count, and connection counts. Identify interfaces with consistently low or zero activity over an extended period.

- VPC Flow Logs: Review VPC flow logs to determine whether a VPC is sending or receiving any traffic. These logs record the source, destination, ports, and packet and byte counts of IP traffic through the network interfaces in a VPC.

- Tagging and Organization: Implement a consistent tagging strategy to categorize network resources. This allows you to easily identify the purpose and ownership of each resource, simplifying the identification of unused resources.

- Automation: Automate the process of identifying and removing unused resources. This can be achieved through scripts or cloud provider automation services. For instance, a script can be created to automatically detect unused network interfaces and delete them after a specified period.

- Alerting: Set up alerts to notify you when network interfaces or VPCs exhibit low utilization or inactivity. This proactive approach enables you to quickly address potential resource waste.

- Resource Grouping: Organize network resources into logical groups to facilitate easier management and identification of unused components.
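On AWS, an elastic network interface (ENI) whose `Status` is `available` is not attached to any instance, which makes it a natural candidate for the automation step described above. The sketch below filters records shaped like the `NetworkInterfaces` entries returned by EC2's `DescribeNetworkInterfaces` API. The `keep` retention tag is a hypothetical convention, and the sample data stands in for a real API response.

```python
from typing import Dict, List


def unattached_enis(network_interfaces: List[Dict], keep_tag: str = "keep") -> List[str]:
    """Return IDs of ENIs that are detached and not tagged for retention."""
    candidates = []
    for eni in network_interfaces:
        tags = {t["Key"]: t["Value"] for t in eni.get("TagSet", [])}
        if eni["Status"] == "available" and keep_tag not in tags:
            candidates.append(eni["NetworkInterfaceId"])
    return candidates


# Sample records in the DescribeNetworkInterfaces response shape (IDs are made up).
sample = [
    {"NetworkInterfaceId": "eni-0aaa", "Status": "in-use", "TagSet": []},
    {"NetworkInterfaceId": "eni-0bbb", "Status": "available", "TagSet": []},
    {"NetworkInterfaceId": "eni-0ccc", "Status": "available",
     "TagSet": [{"Key": "keep", "Value": "true"}]},
]
print(unattached_enis(sample))
```

In practice the records could come from boto3's `describe_network_interfaces` paginator with the filter `{"Name": "status", "Values": ["available"]}`, and cleanup could call `delete_network_interface`. The response does not include a detach timestamp. To enforce a waiting period, you would need to record when each ENI was first seen unattached, for example in a tag or a small database.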

Visual Representation of Network Resource Utilization and Optimization

Visual representations of network resource utilization and optimization provide insights into network performance, resource allocation, and potential areas for improvement. These visualizations help stakeholders understand network behavior and make informed decisions. The following are key elements to consider when designing a visual representation:

- Network Topology Diagram: A diagram illustrating the network infrastructure, including VPCs, subnets, load balancers, and instances. This diagram should show the relationships between different network components.

- Traffic Flow Visualization: A visualization showing the flow of traffic between different network components, including the volume of data transferred and the latency experienced.

- Resource Utilization Charts: Charts displaying the utilization of network resources, such as bandwidth usage, CPU utilization of network appliances, and connection counts.

- Alerts and Notifications: Integrate alerts and notifications to highlight potential issues or anomalies in network performance or resource utilization.

- Key Performance Indicators (KPIs): Display relevant KPIs, such as average latency, error rates, and data transfer costs, to provide a clear overview of network performance.

- Interactive Dashboards: Design interactive dashboards that allow users to drill down into specific areas of the network and analyze data in more detail.

For example, a dashboard could include:

- A map showing the geographical distribution of users accessing the application.

- Charts displaying real-time bandwidth usage for each VPC.

- A table listing the top consumers of network bandwidth.

- Alerts for unusually high latency or error rates.
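A table of top bandwidth consumers, like the one suggested above, can be derived from flow logs. The sketch below assumes records in the default (version 2) space-separated format of AWS VPC Flow Logs and totals bytes by source address. It skips records that carry no traffic data. The sample lines and addresses are made up.

```python
from collections import Counter
from typing import Iterable, List, Tuple

# Field order of the default (version 2) AWS VPC Flow Logs record format.
FIELDS = ["version", "account_id", "interface_id", "srcaddr", "dstaddr", "srcport",
          "dstport", "protocol", "packets", "bytes", "start", "end", "action", "log_status"]


def top_talkers(lines: Iterable[str], n: int = 3) -> List[Tuple[str, int]]:
    """Return the n source addresses that sent the most bytes."""
    totals: Counter = Counter()
    for line in lines:
        parts = line.split()
        if len(parts) != len(FIELDS):
            continue  # custom formats or malformed lines are skipped
        record = dict(zip(FIELDS, parts))
        if record["log_status"] != "OK":
            continue  # NODATA / SKIPDATA records have no traffic counts
        totals[record["srcaddr"]] += int(record["bytes"])
    return totals.most_common(n)


sample_logs = [
    "2 123456789012 eni-0abc 10.0.1.5 10.0.2.9 443 49152 6 20 4000 1700000000 1700000060 ACCEPT OK",
    "2 123456789012 eni-0abc 10.0.1.7 10.0.2.9 443 49153 6 8 1500 1700000000 1700000060 ACCEPT OK",
    "2 123456789012 eni-0abc 10.0.1.5 10.0.2.9 443 49154 6 5 1000 1700000060 1700000120 ACCEPT OK",
    "2 123456789012 eni-0abc - - - - - - - 1700000120 1700000180 - NODATA",
]
print(top_talkers(sample_logs))
```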

Leveraging Cloud Provider Features

Cloud providers offer a plethora of features designed to optimize resource utilization and minimize costs. Understanding and utilizing these features is crucial for effective cloud cost management. This section explores the specific offerings from major cloud providers, comparing their tools and providing guidance on implementing cost-saving strategies.

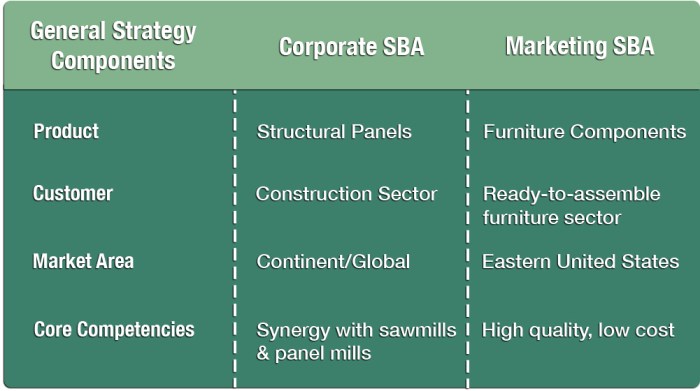

Cloud Provider Resource Optimization Features

Major cloud providers, including Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP), each offer a suite of tools and services to help users optimize their cloud resources. These features address various aspects of cloud resource management, from instance selection and storage optimization to automated scaling and cost analysis.

- AWS: AWS provides a comprehensive set of tools, including AWS Cost Explorer for detailed cost analysis, AWS Compute Optimizer for instance right-sizing recommendations, and Savings Plans for discounted compute usage. AWS also offers features like Auto Scaling for automatic resource adjustments and S3 lifecycle policies for storage optimization.

- Azure: Azure offers Azure Cost Management + Billing for cost monitoring and analysis, Azure Advisor for personalized recommendations, and Azure Reserved Instances for discounted compute capacity. Azure also provides features like Azure Automation for task automation and Azure Blob Storage lifecycle management for storage optimization.

- GCP: GCP provides Cloud Billing for cost management and analysis, Recommender (part of Active Assist) for resource optimization suggestions, and Committed Use Discounts for discounted compute usage. GCP also offers features like Cloud Functions for serverless computing and Cloud Storage lifecycle management for storage optimization.

Cost Optimization Tools Comparison

Different cloud providers offer distinct cost optimization tools, each with its own strengths and weaknesses. A comparative analysis helps in selecting the most suitable tools based on specific needs and requirements.

| Feature | AWS | Azure | GCP |
|---|---|---|---|
| Cost Analysis | AWS Cost Explorer | Azure Cost Management + Billing | Cloud Billing |
| Resource Recommendations | AWS Compute Optimizer | Azure Advisor | Recommender (Active Assist) |
| Discount Programs | Savings Plans, Reserved Instances | Reserved Instances | Committed Use Discounts |
| Automated Scaling | Amazon EC2 Auto Scaling | Virtual Machine Scale Sets | Managed instance group autoscaling |
| Storage Optimization | S3 Lifecycle Policies | Blob Storage Lifecycle Management | Cloud Storage Lifecycle Management |

Each provider’s cost analysis tools offer detailed insights into spending patterns, allowing users to identify areas where costs can be reduced. Resource recommendation tools provide tailored suggestions for right-sizing instances and optimizing resource allocation. Discount programs, such as Savings Plans, Reserved Instances, and Committed Use Discounts, offer significant cost savings for committed usage. Automated scaling features ensure resources are dynamically adjusted to meet demand, preventing over-provisioning and minimizing idle resources.

Implementing Cost-Saving Features in AWS (Example)

Implementing cost-saving features in AWS involves a series of steps to leverage the available tools and services effectively. This guide outlines a practical approach, using AWS as an example.

- Utilize AWS Cost Explorer: Regularly monitor spending in AWS Cost Explorer to identify cost trends and potential areas for optimization. Save custom reports there, and use AWS Budgets to set alerts that fire when spending approaches predefined thresholds.

- Implement AWS Compute Optimizer: Use AWS Compute Optimizer to analyze instance usage and receive recommendations for right-sizing compute instances. This helps in eliminating over-provisioning and choosing the most cost-effective instance types.

- Leverage Savings Plans: AWS Savings Plans offer discounted rates in exchange for a commitment to a consistent amount of compute usage, measured in dollars per hour, over a one- or three-year term. This can significantly reduce costs for steady-state workloads. For example, a company might commit to about $1.37 per hour (roughly $1,000 per month) and, depending on plan type and term, receive discounts of up to 72% compared to On-Demand prices.

- Configure Auto Scaling: Implement Auto Scaling to automatically adjust the number of compute instances based on demand. This ensures that resources are scaled up during peak hours and scaled down during off-peak hours, minimizing idle capacity. Configure scaling policies based on metrics like CPU utilization, network traffic, or memory usage. Memory metrics require the CloudWatch agent, because EC2 does not publish them by default.

- Optimize Storage with S3 Lifecycle Policies: Use S3 Lifecycle policies to automate the management of data stored in Amazon S3. Configure rules to transition data to cheaper storage classes such as S3 Glacier, or to delete old data automatically. Lifecycle transitions are based on object age rather than access. For example, a rule could move objects to S3 Glacier 30 days after creation. For access-based tiering, S3 Intelligent-Tiering moves objects between tiers automatically.
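With Savings Plans, the commitment is billed every hour whether or not it is used. An oversized commitment therefore becomes its own kind of idle spend. The sketch below uses a deliberately simplified model with a single uniform discount rate. Real Savings Plans rates vary by instance family, Region, and term. The dollar figures are hypothetical.

```python
def hourly_bill(on_demand_spend: float, commitment: float, discount: float) -> float:
    """Simplified hourly bill under a Savings Plan.

    on_demand_spend: what this hour's usage would cost at On-Demand rates ($/hour)
    commitment: Savings Plan commitment ($/hour), charged even if unused
    discount: assumed uniform discount of Savings Plan rates vs On-Demand (0 to 1)
    """
    if not 0 <= discount < 1:
        raise ValueError("discount must be in [0, 1)")
    covered = commitment / (1 - discount)            # On-Demand value the commitment absorbs
    overflow = max(0.0, on_demand_spend - covered)   # usage beyond it is billed On-Demand
    return commitment + overflow


# Hypothetical: $0.75/hour commitment at an assumed 25% discount.
for usage in (2.00, 1.00, 0.40):
    print(f"on-demand value ${usage:.2f}/h -> bill ${hourly_bill(usage, 0.75, 0.25):.2f}/h")
```

In the last case the bill ($0.75) exceeds what On-Demand would have cost ($0.40). For this reason, commitments are usually sized to the steady baseline rather than to peak usage.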

Containerization and Serverless Computing

Containerization and serverless computing represent significant advancements in cloud computing, offering powerful strategies for optimizing resource utilization and minimizing idle cloud resources. These technologies allow for more efficient allocation of computing power, leading to cost savings and improved application performance. By understanding the nuances of these approaches, organizations can significantly reduce waste and enhance their cloud infrastructure’s overall efficiency.

Improving Resource Utilization with Containerization

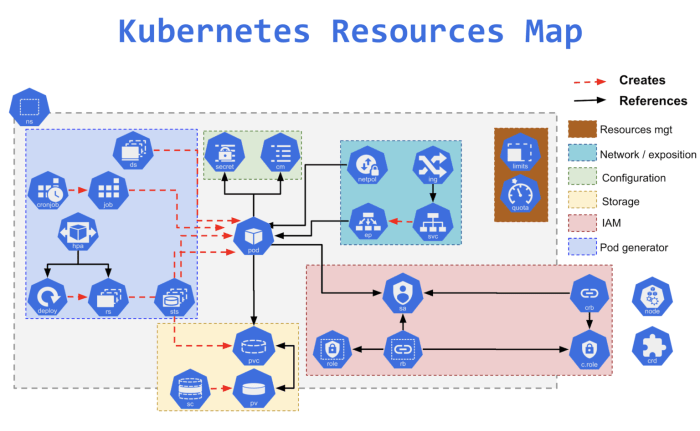

Containerization, utilizing technologies like Docker and Kubernetes, fundamentally changes how applications are packaged and deployed. This approach leads to several key benefits in terms of resource efficiency. Containers package applications with all their dependencies into isolated units. This isolation allows multiple containers to run on the same host operating system without interfering with each other.

- Resource Sharing: Containers share the host operating system’s kernel, reducing the overhead associated with running multiple virtual machines. This shared kernel model results in a smaller footprint and faster startup times compared to virtual machines.

- Density: Containerization enables higher density deployments. More applications can run on the same hardware, leading to improved utilization of CPU, memory, and other resources.

- Portability: Containers are designed to be portable across different environments. This portability allows applications to run consistently on various infrastructure, reducing the need for resource-intensive environment-specific configurations.

- Microservices Architecture: Containerization facilitates the adoption of microservices architectures. Breaking down applications into smaller, independent services allows for independent scaling and resource allocation for each service based on its specific needs.
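The density benefit can be illustrated with a bin-packing sketch. Given each service's CPU request, it compares the number of nodes needed when containers share nodes against one VM per service. First-fit decreasing serves here as a simple stand-in; it is not the algorithm the Kubernetes scheduler uses. The requests and node size are hypothetical.

```python
from typing import List


def nodes_needed(requests_mcpu: List[int], node_capacity_mcpu: int) -> int:
    """Pack CPU requests (millicores) onto nodes with first-fit decreasing; return node count."""
    free: List[int] = []  # remaining capacity of each node opened so far
    for req in sorted(requests_mcpu, reverse=True):
        if req > node_capacity_mcpu:
            raise ValueError(f"request of {req}m exceeds node capacity")
        for i, remaining in enumerate(free):
            if req <= remaining:
                free[i] = remaining - req
                break
        else:
            free.append(node_capacity_mcpu - req)  # no existing node fits; open a new one
    return len(free)


# Eight hypothetical services and 2-vCPU (2000m) nodes.
services = [500, 250, 250, 1000, 750, 500, 250, 500]
print(f"one VM per service: {len(services)} VMs")
print(f"packed containers: {nodes_needed(services, 2000)} nodes")
```

Real schedulers also account for memory, per-node overhead, and spreading replicas for availability. Actual density will therefore be lower than this idealized count.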

Reducing Idle Resources with Serverless Computing

Serverless computing, exemplified by platforms like AWS Lambda, Azure Functions, and Google Cloud Functions, takes resource efficiency to the next level. Serverless platforms automatically manage the underlying infrastructure, allowing developers to focus on writing code without worrying about server provisioning, scaling, or management. This approach inherently minimizes idle resources. Serverless functions are only executed when triggered by an event, such as an HTTP request or a database update. This event-driven nature eliminates the need for continuously running servers, significantly reducing idle time and associated costs.

- Pay-per-use Model: Serverless platforms typically offer a pay-per-use pricing model. You are only charged for the actual compute time consumed by your functions, eliminating the cost of idle resources.

- Automatic Scaling: Serverless platforms automatically scale the resources allocated to your functions based on demand. This dynamic scaling ensures that resources are only provisioned when needed, preventing over-provisioning and waste.

- Event-Driven Architecture: Serverless computing thrives on event-driven architectures. Functions are triggered by specific events, ensuring that resources are only activated when necessary, leading to significant cost savings.

- Reduced Operational Overhead: Serverless platforms abstract away infrastructure management tasks, freeing developers to focus on building and deploying applications rather than managing servers. This also removes a common source of idle capacity: servers that are over-provisioned or left running because of inefficient configuration.
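A back-of-the-envelope calculation can compare the pay-per-use model with an always-on VM. The prices below are placeholders chosen for easy arithmetic, not current list prices, and free tiers are ignored. Substitute your provider's published rates.

```python
def serverless_monthly_cost(invocations: int, avg_duration_s: float, memory_gb: float,
                            price_per_gb_second: float,
                            price_per_million_requests: float) -> float:
    """Request charge plus compute charge billed in GB-seconds (duration x memory)."""
    gb_seconds = invocations * avg_duration_s * memory_gb
    return gb_seconds * price_per_gb_second + invocations / 1_000_000 * price_per_million_requests


def always_on_monthly_cost(hourly_price: float, hours: float = 730.0) -> float:
    """An instance that runs all month is billed for every hour, busy or idle."""
    return hourly_price * hours


# Placeholder workload and prices (assumptions, not quotes).
fn = serverless_monthly_cost(1_000_000, 0.2, 0.5, price_per_gb_second=0.00001,
                             price_per_million_requests=0.20)
vm = always_on_monthly_cost(0.05)
print(f"serverless: ${fn:.2f}/month, always-on VM: ${vm:.2f}/month")
```

With these placeholder numbers, the function costs about $1.20 a month, compared with $36.50 for a VM that sits idle most of the time. The comparison can flip for sustained high-volume traffic, where a continuously busy instance may be cheaper per request.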

Resource Efficiency Comparison: Virtual Machines, Containers, and Serverless Functions

The following table compares the resource efficiency characteristics of traditional virtual machines, containerized applications, and serverless functions. The comparison highlights the advantages of each approach in terms of resource utilization and management.

| Feature | Virtual Machines | Containers | Serverless Functions |
|---|---|---|---|
| Resource Utilization | Lower, due to guest OS overhead and frequent underutilization. | Higher, due to the shared OS kernel and improved density. | Highest, due to the pay-per-use model and automatic scaling. |
| Startup Time | Tens of seconds to minutes (OS boot) | Seconds (no OS boot) | Milliseconds when warm; cold starts can add hundreds of milliseconds or more |
| Scaling | Manual or with auto-scaling groups. | Automated, but requires container orchestration (e.g., Kubernetes). | Automatic and dynamic, managed by the platform. |
| Cost Model | Pay for provisioned resources, regardless of usage. | Pay for provisioned nodes, but higher density spreads that cost across more workloads. | Pay per request and per unit of execution time and memory. |

Governance and Policy Enforcement

Establishing robust governance and enforcing policies are crucial for proactively minimizing idle cloud resources and ensuring cost-effective cloud operations. This involves setting clear guidelines, implementing automated mechanisms, and continuously monitoring resource usage to prevent waste and maintain optimal performance. Effective governance frameworks empower organizations to control cloud spending, improve resource utilization, and align cloud operations with business objectives.

Best Practices for Establishing Policies to Prevent Idle Resource Creation

Creating policies to prevent idle resource creation involves several key best practices. These policies should be well-defined, easily understood, and consistently applied across the organization. Implementing these practices helps to proactively address potential waste and optimize cloud resource utilization.

- Define Clear Resource Naming Conventions: Implement a standardized naming convention for all cloud resources. This allows for easy identification and tracking of resources, making it simpler to identify and manage potentially idle instances. For example, a naming convention could include the application name, environment (e.g., dev, prod), and resource type.

- Establish Resource Tagging Standards: Require comprehensive tagging of all cloud resources. Tags should include information such as the owner, application, cost center, and environment. This enables effective resource categorization, cost allocation, and identification of unused resources. For instance, tagging an EC2 instance with “Owner: JohnDoe,” “Application: WebApp,” and “Environment: Production” provides clear context.

- Implement Approval Workflows: Introduce approval processes for resource provisioning. This ensures that all resource requests are reviewed and approved by the appropriate personnel before being deployed. This step helps to prevent the creation of unnecessary resources.

- Set Resource Quotas and Limits: Establish resource quotas and limits at the account or project level to prevent over-provisioning. This can limit the number of instances, storage, or other resources that can be created, reducing the likelihood of idle resources. For example, set a limit of 10 EC2 instances per project.

- Automate Resource De-provisioning: Implement automated processes to de-provision resources after they are no longer needed. This includes setting up automatic shutdowns for development environments outside of working hours or terminating resources after a specific period of inactivity.

- Regularly Review and Update Policies: Policies should be regularly reviewed and updated to adapt to changing business needs and cloud environments. This ensures that the policies remain effective in preventing idle resource creation.
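Naming and tagging rules are easiest to enforce when they are checked automatically. The sketch below validates a resource name against a hypothetical `<application>-<environment>-<type>` convention and checks for the owner, application, cost center, and environment tags listed above. The pattern, environment list, and exact tag keys are assumptions to adapt to your own standards.

```python
import re
from typing import Dict, List

# Hypothetical convention: lowercase <application>-<environment>-<resource type>, e.g. "webapp-prod-vm".
NAME_PATTERN = re.compile(r"[a-z0-9]+-(dev|test|prod)-[a-z0-9]+")
REQUIRED_TAGS = {"Owner", "Application", "CostCenter", "Environment"}


def policy_violations(name: str, tags: Dict[str, str]) -> List[str]:
    """Return human-readable violations; an empty list means the resource complies."""
    problems = []
    if not NAME_PATTERN.fullmatch(name):
        problems.append(f"name {name!r} does not follow <app>-<env>-<type>")
    missing = REQUIRED_TAGS - set(tags)
    if missing:
        problems.append("missing tags: " + ", ".join(sorted(missing)))
    return problems


print(policy_violations("webapp-prod-vm", {"Owner": "JohnDoe", "Application": "WebApp",
                                           "CostCenter": "1234", "Environment": "Production"}))
print(policy_violations("test box", {"Owner": "JohnDoe"}))
```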

Implementing Governance Frameworks to Enforce Cost Optimization Strategies

Implementing governance frameworks is essential for enforcing cost optimization strategies and ensuring adherence to established policies. These frameworks provide the mechanisms and controls needed to monitor, manage, and optimize cloud resource usage effectively.

- Centralized Policy Management: Utilize a centralized policy management system to define, deploy, and enforce policies across all cloud resources. This provides a single point of control and ensures consistency. Examples include using AWS Organizations with Service Control Policies (SCPs) or Azure Policy.

- Automated Compliance Checks: Implement automated compliance checks to regularly assess resources against established policies. This helps to identify non-compliant resources and trigger corrective actions. Tools like AWS Config or Azure Policy can be used for this purpose.

- Cost Monitoring and Reporting: Integrate robust cost monitoring and reporting tools to track cloud spending and identify areas for optimization. These tools should provide detailed insights into resource usage and costs, enabling proactive cost management. Cloud provider dashboards and third-party cost management solutions are valuable in this regard.

- Role-Based Access Control (RBAC): Implement RBAC to restrict access to cloud resources based on roles and responsibilities. This limits the potential for unauthorized resource creation and ensures that only authorized personnel can make changes to cloud infrastructure.

- Incident Response and Remediation: Establish an incident response plan to address policy violations and other issues. This plan should define the steps to be taken when non-compliant resources are identified, including automated remediation actions.

- Continuous Monitoring and Feedback: Implement continuous monitoring of resource usage and costs, and provide regular feedback to stakeholders. This helps to ensure that policies are effective and that cost optimization strategies are delivering the desired results.
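As one example of centralized enforcement, an AWS Organizations service control policy (SCP) can deny launching EC2 instances that lack a required tag. This blocks untagged, hard-to-attribute resources at creation time. The sketch builds such a policy document in Python, and the `CostCenter` tag key is an assumption. The policy relies on the IAM `Null` condition operator, which matches when the given key is absent from the request.

```python
import json


def require_tag_on_launch(tag_key: str) -> dict:
    """SCP that denies ec2:RunInstances when the request does not include `tag_key`."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": f"DenyRunInstancesWithout{tag_key}",
                "Effect": "Deny",
                "Action": "ec2:RunInstances",
                "Resource": "arn:aws:ec2:*:*:instance/*",
                # Null == "true" matches requests where the tag key is absent.
                "Condition": {"Null": {f"aws:RequestTag/{tag_key}": "true"}},
            }
        ],
    }


print(json.dumps(require_tag_on_launch("CostCenter"), indent=2))
```

You could paste the printed JSON into the AWS Organizations console, or pass it to `aws organizations create-policy` with the `--content`, `--name`, and `--type SERVICE_CONTROL_POLICY` options.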

Steps for Creating and Enforcing a Policy that Automatically Terminates Idle Resources

Creating and enforcing a policy that automatically terminates idle resources involves several key steps. This process ensures that resources are automatically decommissioned when they are no longer in use, leading to significant cost savings.

- Define Idle Resource Criteria: Clearly define the criteria for identifying idle resources. This could be based on CPU utilization, network traffic, disk I/O, or a combination of these metrics. For example, an EC2 instance might be considered idle if its CPU utilization is below 5% for a continuous period of 30 minutes.

- Choose a Monitoring and Automation Tool: Select a monitoring and automation tool that supports policy enforcement and automated actions. Examples include Amazon CloudWatch with AWS Lambda, Azure Monitor with Azure Automation, or third-party solutions like CloudHealth or Flexera.

- Create the Policy Definition: Define the policy that will identify and terminate idle resources. This involves configuring the monitoring tool to monitor the defined criteria and trigger an action when the criteria are met. The policy should specify the resources to be monitored, the idle thresholds, and the termination action.

- Implement Automated Termination Action: Configure the automation tool to take action when an idle resource is detected. This typically involves sending a notification and then terminating the resource. The termination action should include safeguards, such as sending a warning notification before termination and allowing a grace period.

- Test and Validate the Policy: Thoroughly test the policy in a non-production environment to ensure that it functions as expected. This involves simulating idle resource conditions and verifying that the automated termination action is triggered correctly.

- Deploy and Monitor the Policy: Deploy the policy in the production environment and continuously monitor its effectiveness. This includes tracking the number of resources terminated, the cost savings achieved, and any potential issues.

- Refine and Optimize the Policy: Regularly review and refine the policy based on monitoring data and feedback. This ensures that the policy remains effective and adapts to changing resource usage patterns. Adjust thresholds, add exceptions, or modify the termination action as needed.
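The core decision logic of such a policy, including the warning and grace-period safeguards, can be sketched independently of any provider. The function below assumes CPU samples are 5-minute averages, so six samples cover the 30-minute example above. The `DoNotTerminate` exemption tag and the 24-hour grace period are hypothetical defaults. Connecting the function to real metrics and termination APIs is the job of your automation tool.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Sequence


@dataclass
class Decision:
    action: str  # "none", "warn", or "terminate"
    reason: str


def decide(cpu_samples: Sequence[float], tags: Dict[str, str], warned_at: Optional[float],
           now: float, threshold: float = 5.0, window: int = 6,
           grace_seconds: float = 24 * 3600, exempt_tag: str = "DoNotTerminate") -> Decision:
    """Decide what to do with one instance; timestamps are seconds since the epoch."""
    if exempt_tag in tags:
        return Decision("none", "exempt by tag")
    if len(cpu_samples) < window:
        return Decision("none", "not enough data")
    if any(sample >= threshold for sample in cpu_samples[-window:]):
        return Decision("none", "active")  # callers should also clear any earlier warning
    if warned_at is None:
        return Decision("warn", f"CPU below {threshold}% for last {window} samples")
    if now - warned_at >= grace_seconds:
        return Decision("terminate", "still idle after grace period")
    return Decision("none", "within grace period")


print(decide([2.0] * 6, {}, warned_at=None, now=0.0))
```

For simple cases on AWS, a CloudWatch alarm can also stop or terminate an EC2 instance directly through an EC2 alarm action, without custom code.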

Closure

In conclusion, the pursuit of minimizing idle cloud resources is not merely a cost-saving exercise; it’s a fundamental aspect of modern cloud management. By embracing the strategies outlined in this guide, you can transform your cloud infrastructure into a lean, efficient, and highly responsive environment. From identifying and removing waste to implementing automated scaling and governance policies, the path to optimal cloud resource utilization is paved with careful planning, continuous monitoring, and a proactive approach to cost optimization.

The benefits (reduced expenses, improved performance, and increased agility) are well worth the effort and lay the groundwork for a more sustainable and competitive future in the cloud.

FAQ Summary

What are the primary benefits of minimizing idle cloud resources?

Minimizing idle resources directly translates to reduced cloud costs, improved performance through efficient resource allocation, and enhanced scalability, allowing businesses to adapt quickly to changing demands.

How can I determine if my compute instances are underutilized?

Monitor CPU utilization, memory usage, and network I/O. Cloud providers offer monitoring tools that provide detailed metrics, allowing you to identify instances consistently operating below their capacity.

What is the difference between horizontal and vertical scaling?

Vertical scaling increases the resources (CPU, RAM) of a single instance, while horizontal scaling adds more instances to handle the workload. Horizontal scaling is often more resilient and lets capacity follow demand in smaller steps, but it requires an application that can run across multiple instances.

What are the best practices for scheduling instance start and stop times?

Analyze your workload patterns to identify peak and off-peak hours. Schedule instances to start before peak demand and shut down during periods of low activity to minimize idle time and costs.

How does containerization help reduce idle resources?

Containerization tools such as Docker package applications with their dependencies into lightweight units that share the host kernel. Because containers start quickly and many can run on one host, workloads can be packed densely and scaled down when demand falls. Traditional virtual machines, by contrast, each carry the overhead of a full guest operating system.