Deploying serverless applications efficiently is a critical aspect of modern cloud infrastructure management. This discussion centers on leveraging Terraform, an infrastructure-as-code tool, to automate the deployment of AWS Lambda functions. Terraform’s declarative approach enables the definition, versioning, and management of infrastructure resources in a repeatable and consistent manner, significantly reducing manual effort and potential errors.

We will delve into the advantages of using Terraform for Lambda deployments, covering setup, configuration, deployment, and management. This approach allows for greater control over infrastructure, improved collaboration, and the ability to treat infrastructure as code, thus enhancing the agility and scalability of your applications. We will explore essential aspects, including environment setup, code packaging, dependency management, and integration with other AWS services, providing a solid foundation for anyone looking to adopt this practice.

Introduction to Terraform and Lambda Functions

Terraform, an Infrastructure as Code (IaC) tool, enables the definition and provisioning of infrastructure resources in a declarative manner. AWS Lambda, a serverless compute service, runs your code without requiring you to provision or manage servers. Combining these technologies offers significant advantages for deploying and managing serverless applications.

Benefits of Terraform for Infrastructure as Code

Terraform provides a structured and automated approach to infrastructure management, leading to several key benefits. These advantages contribute to improved efficiency, reliability, and scalability in cloud deployments.

- Automation: Terraform automates the entire infrastructure lifecycle, from provisioning to de-provisioning. This reduces manual intervention, minimizing human error and accelerating deployment times. For example, instead of manually configuring an AWS S3 bucket, Terraform allows defining the bucket’s configuration in code, and then automatically creates and manages it.

- Version Control: Infrastructure configurations are stored as code, enabling version control using tools like Git. This facilitates tracking changes, rolling back to previous states, and collaborating effectively within teams. Changes can be reviewed and approved before being applied, ensuring consistency and preventing unintended consequences.

- Consistency: Terraform helps keep infrastructure consistent across environments (development, staging, production). Because every environment is provisioned from the same code, with differences limited to explicit inputs such as variables, the environments stay aligned. This minimizes configuration drift and reduces the risk of environment-specific issues.

- Modularity and Reusability: Terraform supports modular design through the use of modules. Modules encapsulate reusable infrastructure components, such as networking configurations or database setups. This promotes code reuse, reduces redundancy, and simplifies complex deployments.

- State Management: Terraform maintains a state file that records the resources it manages and their current attributes. Terraform compares this state with the configuration to determine what must change. It then uses the dependency graph built from references between resources to apply updates in the correct order. When the state is stored in a remote backend that supports locking, concurrent runs are prevented from conflicting with each other.

Overview of AWS Lambda Functions

AWS Lambda is a serverless compute service that allows you to run code without provisioning or managing servers. Lambda automatically manages the underlying infrastructure, including compute resources, operating systems, and scaling.

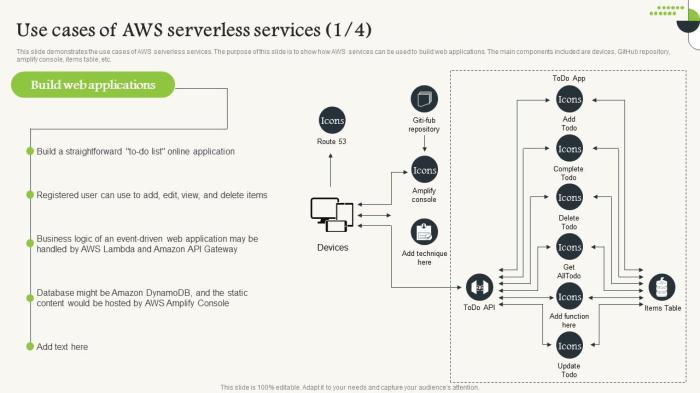

- Event-Driven Execution: Lambda functions are triggered by events from various AWS services, such as Amazon S3, Amazon DynamoDB, and Amazon API Gateway. This event-driven architecture allows you to build applications that respond to changes in real-time.

- Automatic Scaling: Lambda automatically scales your functions based on the number of incoming requests, up to your account’s concurrency limits. You don’t need to provision or manage servers to handle peak loads. Lambda scales up and down as needed, which keeps both performance and cost in line with demand.

- Pay-per-Use Pricing: You are charged for the number of requests and for the compute time your function consumes. Compute time is measured in GB-seconds: allocated memory multiplied by execution duration. This pay-per-use model can significantly reduce costs compared to traditional server-based architectures, especially for applications with variable workloads.

- Supported Languages: Lambda provides managed runtimes for Node.js, Python, Java, .NET (C#), and Ruby. Languages such as Go and Rust can run on its OS-only runtimes. This flexibility allows you to choose the language that best suits your needs and expertise.

- Integration with Other AWS Services: Lambda seamlessly integrates with other AWS services, such as Amazon API Gateway, Amazon DynamoDB, and Amazon SQS. This integration simplifies the development of complex applications that leverage multiple AWS services.
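To make the pay-per-use model concrete, the sketch below computes the billable compute for a workload in GB-seconds (memory in GB × duration in seconds × invocations) and adds a per-request charge.

It makes some simplifying assumptions:

- Both prices are parameters you supply, not real AWS rates. Take current figures from the Lambda pricing page for your Region and architecture.
- The free tier is ignored.
- The `Workload` class is a hypothetical helper.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    memory_mb: int          # configured memory_size in MB
    avg_duration_ms: float  # average billed duration per invocation, in ms
    invocations: int        # number of requests in the billing period


def gb_seconds(w: Workload) -> float:
    """Compute charge basis: memory (GB) x duration (s) x number of invocations."""
    return (w.memory_mb / 1024) * (w.avg_duration_ms / 1000) * w.invocations


def estimate_cost(w: Workload, price_per_gb_second: float,
                  price_per_million_requests: float) -> float:
    """Duration charge plus request charge. Prices are caller-supplied, not real AWS rates."""
    duration_cost = gb_seconds(w) * price_per_gb_second
    request_cost = (w.invocations / 1_000_000) * price_per_million_requests
    return duration_cost + request_cost


if __name__ == "__main__":
    w = Workload(memory_mb=512, avg_duration_ms=200, invocations=1_000_000)
    print(gb_seconds(w))  # 0.5 GB x 0.2 s x 1,000,000 invocations -> 100000.0
```

Doubling `memory_mb` doubles the GB-seconds for the same duration. This is why right-sizing memory matters, although more memory can also shorten the duration.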

Advantages of Deploying Lambda Functions with Terraform

Deploying Lambda functions with Terraform combines the benefits of IaC with the power of serverless computing, resulting in a streamlined and efficient deployment process.

- Declarative Configuration: Terraform allows you to define your Lambda function’s configuration (code, runtime, memory, timeout, etc.) in a declarative manner. This ensures that the function’s configuration is consistently applied across different environments.

- Automated Deployment: Terraform automates the deployment of Lambda functions, including the creation of IAM roles, S3 buckets (for code storage), and function configuration. This reduces manual effort and the potential for errors.

- Infrastructure as Code for Serverless: Terraform treats Lambda functions as infrastructure components, enabling you to manage them alongside other resources, such as API Gateways and DynamoDB tables. This promotes a unified approach to infrastructure management.

- Version Control and Rollbacks: Infrastructure configurations are stored as code, enabling version control. This allows you to track changes to your Lambda functions and roll back to previous versions if necessary.

- Reproducibility: Terraform ensures that Lambda functions can be consistently deployed across different environments. This reproducibility is crucial for testing, staging, and production deployments.

Setting up the Development Environment

To effectively deploy AWS Lambda functions using Terraform, a well-configured development environment is crucial. This involves installing and configuring the necessary software and tools, and establishing a structured project directory. This structured approach streamlines the deployment process and enhances maintainability.

Necessary Software and Tools for Terraform Deployment

Several software components are essential for a successful Terraform deployment of Lambda functions. These tools provide the functionality needed to define infrastructure as code, interact with AWS services, and manage the deployment lifecycle.

- Terraform: The core tool for defining and managing infrastructure. It translates configuration files into API calls to provision and manage resources. It’s crucial to have the correct version installed for compatibility with the AWS provider and the desired features.

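- AWS CLI (Command Line Interface): Provides a command-line interface to interact with AWS services, including Lambda. It’s used for authentication, configuration, and managing resources. Configure it with appropriate AWS credentials (e.g., an access key ID and secret access key).

  Terraform’s AWS provider does not depend on the CLI, but it reads the same credential sources. These include the shared credentials file written by `aws configure` and the standard AWS environment variables, so configuring the CLI also lets Terraform authenticate to your AWS account.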

- An Integrated Development Environment (IDE) or Text Editor: For writing and editing Terraform configuration files (e.g., `.tf` files). Popular choices include Visual Studio Code, Sublime Text, and IntelliJ IDEA. Features like syntax highlighting, code completion, and linting significantly improve the development experience.

- A Version Control System (e.g., Git): For managing the Terraform configuration files. Version control allows for tracking changes, collaborating with others, and rolling back to previous states if necessary. Git is widely used and integrates seamlessly with platforms like GitHub, GitLab, and Bitbucket.

- A Terraform Extension for Your Editor: Extensions such as HashiCorp’s Terraform extension for Visual Studio Code add syntax highlighting, autocompletion, and error checking tailored to Terraform files. This improves the efficiency and accuracy of writing configurations.

Designing the Directory Structure for a Terraform Project Deploying Lambda

A well-organized directory structure is vital for managing Terraform projects, especially those involving Lambda functions. This structure promotes clarity, maintainability, and scalability. The following directory structure is a recommended approach.

```
my-lambda-project/
├── main.tf                   # Main configuration file: defines resources.
├── variables.tf              # Defines input variables for the project.
├── outputs.tf                # Defines output values for the project.
├── providers.tf              # Specifies the providers used (e.g., AWS).
├── lambda/                   # Directory for Lambda function code (e.g., Python, Node.js).
│   └── my_function/
│       ├── index.py          # Lambda function code (Python example).
│       └── requirements.txt  # Dependencies (Python example).
├── terraform.tfvars          # (Optional) Contains variable values.
└── README.md                 # Project documentation.
```

This structure provides a clear separation of concerns:

- `main.tf`: This file contains the primary resource definitions, such as the Lambda function, IAM role, and any associated resources (e.g., API Gateway, S3 bucket).

- `variables.tf`: This file defines input variables that can be customized for different environments or configurations. This allows for reusability and flexibility.

- `outputs.tf`: This file defines output values, such as the Lambda function’s ARN or API Gateway endpoint, which can be used by other resources or for external consumption.

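- `providers.tf`: This file specifies the providers that Terraform will use, such as the AWS provider, and configures them, typically with the target region. Credentials are best supplied through environment variables or a shared credentials profile rather than hardcoded in this file.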

- `lambda/`: This directory contains the source code for the Lambda functions. Each Lambda function should ideally reside in its own subdirectory for better organization. The example shows `my_function` as a directory that encapsulates the code for a specific Lambda function.

- `terraform.tfvars`: This optional file stores variable values. It keeps environment-specific values out of the configuration files, which improves maintainability. Because it may hold sensitive values, it is often excluded from version control.

- `README.md`: Provides documentation for the project, including instructions on how to deploy, configure, and manage the infrastructure.

Organizing the Installation Process for Terraform and AWS CLI

The installation process for Terraform and the AWS CLI involves downloading and installing the necessary binaries, and then configuring them for use. The steps are outlined below for common operating systems.

- Terraform Installation:

- Download: Download the appropriate Terraform binary for your operating system from the official HashiCorp website (https://www.terraform.io/downloads).

- Unzip: Unzip the downloaded archive.

- Install: Move the Terraform executable to a directory included in your system’s `PATH` environment variable (e.g., `/usr/local/bin` on macOS/Linux). This allows you to run Terraform commands from any directory.

- Verify: Verify the installation by running `terraform version` in your terminal. This should display the installed Terraform version.

- AWS CLI Installation:

- Download: Download the appropriate AWS CLI installer for your operating system from the AWS documentation. For example, on Linux (x86_64), you can use `curl "https://awscli.amazonaws.com/awscli-exe-linux-x86_64.zip" -o "awscliv2.zip"`.

- Unzip and Install: Unzip the downloaded archive and run the installer. On Linux, for example, this means running `unzip awscliv2.zip` followed by `sudo ./aws/install`. On macOS and Windows, a graphical installer walks you through the steps.

- Configure: Configure the AWS CLI with your AWS credentials using the `aws configure` command. This command prompts you for your access key ID, secret access key, default region, and output format. For example:

aws configure

AWS Access Key ID [None]: AKIA...

AWS Secret Access Key [None]: ...

Default region name [None]: us-east-1

Default output format [None]: json

- Verify: Verify the installation and configuration by running `aws sts get-caller-identity`. This command should return information about your IAM user or role, confirming that the AWS CLI is correctly authenticated.

These steps, when followed meticulously, will set up a functional development environment that is crucial for a smooth Terraform deployment process.

Writing the Terraform Configuration for Lambda

Terraform’s declarative approach simplifies the deployment and management of infrastructure, including AWS Lambda functions. This section details the creation of a Terraform configuration to deploy a basic Lambda function, emphasizing the specification of runtime, handler, and the crucial configuration of IAM roles and permissions for execution. This approach ensures that the function can operate securely and efficiently within the AWS environment.

Creating a Terraform Configuration File for a Basic Lambda Function

A Terraform configuration file defines the infrastructure to be created. For a Lambda function, this involves specifying the function’s code, runtime environment, handler function, and other related configurations. This configuration is typically written in a file named `main.tf` or a similar descriptive name.

```terraform
resource "aws_lambda_function" "example" {
  function_name    = "my-lambda-function"
  filename         = "lambda_function_payload.zip"                   # Replace with the path to your deployment package
  source_code_hash = filebase64sha256("lambda_function_payload.zip") # Changes with the package, forcing a new deployment
  handler          = "index.handler"                                 # format: <file name without extension>.<function name>
  runtime          = "nodejs18.x"
  role             = aws_iam_role.lambda_role.arn                    # IAM role defined in the IAM section
  timeout          = 10                                              # seconds (example value)
  memory_size      = 128                                             # MB (example value)
}
```

The key attributes are:

- `function_name`: A unique name for the Lambda function.

- `filename`: The path to the deployment package (a zip file containing the function code).

- `source_code_hash`: A computed hash of the deployment package to trigger updates when the code changes. This is essential for ensuring that Terraform correctly detects changes to the function code.

- `handler`: Specifies the entry point for the function (e.g., `index.handler` for a JavaScript function where `index.js` is the filename and `handler` is the function name).

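- `runtime`: Specifies the runtime environment (e.g., `nodejs18.x`, `python3.9`, `java17`). The value must be a runtime identifier that AWS Lambda currently supports. Older runtime versions are deprecated over time, so check the current list before choosing one.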

- `role`: The ARN (Amazon Resource Name) of the IAM role that grants the Lambda function the necessary permissions to access other AWS resources.

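- `timeout`: The maximum execution time in seconds. Lambda’s default is 3 seconds and the maximum is 900 seconds.

- `memory_size`: The amount of memory allocated to the function in MB, from 128 MB (the default) up to 10,240 MB.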

Specifying the Lambda Function’s Runtime and Handler

The `runtime` and `handler` attributes are crucial for Lambda function execution. Together they tell AWS Lambda how to run the code in the deployment package, and a wrong value for either prevents the function from being invoked successfully.

The `runtime` attribute selects the execution environment, including the programming language version and its associated libraries. AWS Lambda supports various runtimes, each with its own characteristics and versioning.

Choosing the appropriate runtime is critical for compatibility and performance. For example:

- `nodejs18.x`: For JavaScript and Node.js applications.

- `python3.9`: For Python 3.9 applications.

- `java17`: For Java 17 applications.

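The `handler` attribute specifies the entry point of the Lambda function. For Node.js and Python runtimes, it consists of the file name (without the extension) and the name of the function within that file, separated by a dot. For instance, if the deployment package contains a file named `index.js` that exports a function called `myHandler`, the handler would be `index.myHandler`. Other runtimes use different formats; Java, for example, uses `package.Class::method`.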

Configuring IAM Roles and Permissions for Lambda Execution

IAM roles are essential for controlling the permissions of Lambda functions. They define what AWS resources the function can access and what actions it can perform. Properly configured IAM roles enhance security by enforcing the principle of least privilege, granting only the necessary permissions.

To create an IAM role for a Lambda function in Terraform, you define an `aws_iam_role` resource. This role is then associated with the Lambda function using the `role` attribute.

The role must include a trust relationship that allows the Lambda service to assume the role on behalf of the function. Additionally, the role needs policies that grant the function permissions to access other AWS resources, such as:

- CloudWatch Logs: To write logs for monitoring and debugging.

- Other AWS services: Depending on the function’s requirements (e.g., access to S3 buckets, DynamoDB tables, etc.).

Example IAM role configuration:

```terraform
resource "aws_iam_role" "lambda_role" {
  name = "lambda_basic_execution"

  assume_role_policy = jsonencode({
    Version = "2012-10-17"
    Statement = [
      {
        Action = "sts:AssumeRole"
        Principal = {
          Service = "lambda.amazonaws.com"
        }
        Effect = "Allow"
        Sid    = ""
      },
    ]
  })
}

resource "aws_iam_role_policy" "lambda_policy" {
  name = "lambda_basic_policy"
  role = aws_iam_role.lambda_role.id

  policy = jsonencode({
    Version = "2012-10-17"
    Statement = [
      {
        Action = [
          "logs:CreateLogGroup",
          "logs:CreateLogStream",
          "logs:PutLogEvents",
        ]
        Effect   = "Allow"
        Resource = "arn:aws:logs:*:*:*"
      },
    ]
  })
}
```

In this example:

- The `assume_role_policy` allows the Lambda service to assume the role.

- The `aws_iam_role_policy` resource defines a policy that grants the function permissions to write logs to CloudWatch. This is a fundamental permission for Lambda functions to enable monitoring and troubleshooting.

The IAM role and associated policies must be carefully designed to ensure that the Lambda function has the necessary permissions without granting excessive access. This security best practice minimizes the potential impact of any security breaches.
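The example policy above grants logging access on `arn:aws:logs:*:*:*`, which covers every log group in every Region and account. The sketch below builds a tighter version as a Python dictionary:

- `logs:CreateLogGroup` is limited to one Region and account.
- Stream and event writes are limited to the function’s own `/aws/lambda/<function name>` log group.
- `is_broad` is a hypothetical helper that flags resources whose Region or account segment is a wildcard.
- The Region, account ID, and function name in the usage block are placeholders.

```python
import json


def scoped_logging_policy(region: str, account_id: str, function_name: str) -> dict:
    """Logging permissions limited to a single function's log group."""
    log_group_arn = (
        f"arn:aws:logs:{region}:{account_id}:log-group:/aws/lambda/{function_name}:*"
    )
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": "logs:CreateLogGroup",
                "Resource": f"arn:aws:logs:{region}:{account_id}:*",
            },
            {
                "Effect": "Allow",
                "Action": ["logs:CreateLogStream", "logs:PutLogEvents"],
                "Resource": log_group_arn,
            },
        ],
    }


def is_broad(resource: str) -> bool:
    """True for '*' or an ARN whose region or account segment is a wildcard."""
    if resource == "*":
        return True
    parts = resource.split(":")
    return len(parts) >= 5 and "*" in (parts[3], parts[4])


if __name__ == "__main__":
    # Hypothetical region, account ID, and function name.
    policy = scoped_logging_policy("us-east-1", "123456789012", "my-lambda-function")
    print(json.dumps(policy, indent=2))
```

In Terraform, the same document would normally be written with `jsonencode`. The `aws_region` and `aws_caller_identity` data sources can supply the Region and account ID instead of hardcoded values.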

Configuring the Lambda Function Code

Configuring the Lambda function code is a crucial step in the deployment process. This involves writing the code that will execute within the Lambda environment, packaging it appropriately, and ensuring its seamless integration with other AWS services. The efficiency, scalability, and security of the entire solution hinge on the careful configuration of this code.

Examples of Lambda Function Code in Different Programming Languages

Lambda functions support multiple programming languages, providing developers with flexibility. The choice of language often depends on the existing skillset, project requirements, and performance considerations. Below are examples demonstrating fundamental Lambda function implementations in Python and Node.js.

Python Example:

This Python example demonstrates a simple Lambda function that processes an event and returns a greeting. It uses the `json` library for handling JSON data and the `os` library to access environment variables.

```python
import json
import os


def lambda_handler(event, context):
    """
    Lambda function handler.

    Args:
        event (dict): Event data passed to the function.
        context (object): Lambda context object.

    Returns:
        dict: A dictionary containing the greeting.
    """
    name = event.get('name', 'World')
    greeting = f"Hello, {name}!"
    api_key = os.environ.get('API_KEY')
    if api_key:
        # Echoing a secret back to the caller is for illustration only.
        greeting += f" API Key: {api_key}"
    return {
        'statusCode': 200,
        'body': json.dumps({'message': greeting})
    }
```

Node.js Example:

This Node.js example demonstrates a similar function, using JavaScript’s syntax. It retrieves a name from the event and returns a greeting. It also utilizes environment variables for configuration.

```javascript
exports.handler = async (event) => {
  const name = event.name || 'World';
  const greeting = `Hello, ${name}!`;
  const apiKey = process.env.API_KEY;
  const response = {
    statusCode: 200,
    body: JSON.stringify({
      // Echoing a secret back to the caller is for illustration only.
      message: greeting + (apiKey ? ` API Key: ${apiKey}` : ''),
    }),
  };
  return response;
};
```

Both examples showcase the basic structure of a Lambda function, including the handler function that receives the event and context objects. The examples also demonstrate accessing event data and utilizing environment variables for configuration.

Best Practices for Packaging Lambda Function Code

Proper packaging is essential for efficient deployment and execution of Lambda functions. It ensures that all dependencies are included and that the code is optimized for the Lambda environment.

Here are key best practices:

- Use a deployment package: A deployment package is a ZIP archive containing the function code and its dependencies. For Python, this typically includes the Python source files and any necessary libraries. For Node.js, it includes the JavaScript files and the `node_modules` directory.

- Minimize package size: Smaller package sizes lead to faster deployment times and reduced cold start times. Only include necessary dependencies. Remove any unused files or libraries.

- Use a build process: Implement a build process to manage dependencies and create the deployment package. Tools like `pip` for Python and `npm` for Node.js facilitate dependency management.

- Use Layers: AWS Lambda layers let you package dependencies separately from your function code. This promotes code reuse, reduces the size of each function’s deployment package, and simplifies updates. For example, you can create a Python layer containing common libraries such as `requests` and attach it to several functions. Layers work with any runtime, not only Python.

- Use container images (for any language): For more complex deployments, or if you want to package your Lambda functions as containers, container images offer more control and flexibility. They also allow much larger packages than ZIP archives.

Detailing How to Integrate the Lambda Function Code with AWS Services

Lambda functions are most effective when integrated with other AWS services. This integration allows functions to react to events, process data, and interact with various AWS resources. The main integration points are:

- Event Sources: Lambda functions can be triggered by various AWS services, acting as event sources.

- API Gateway: Allows Lambda functions to be invoked via HTTP requests. You define API endpoints and map them to Lambda functions.

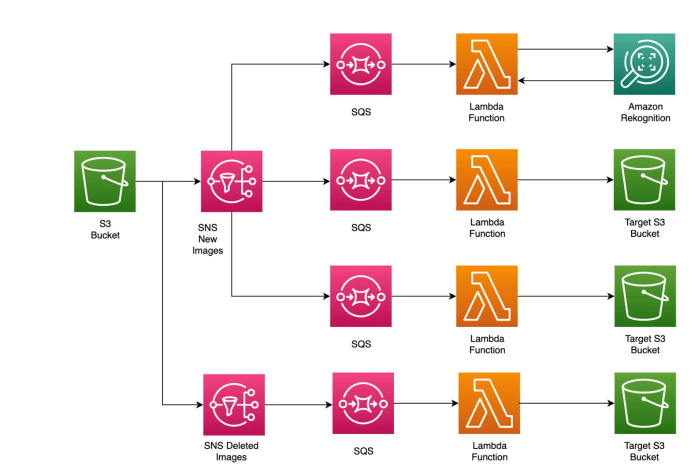

- S3: Triggers Lambda functions when objects are created, updated, or deleted in an S3 bucket. This enables serverless data processing and object transformation.

- DynamoDB: Triggers Lambda functions when items are created, updated, or deleted in a DynamoDB table, through the table’s DynamoDB Streams. This facilitates real-time data processing and event-driven architectures.

- SNS: Allows Lambda functions to subscribe to SNS topics and receive messages. This enables building notification systems and event-driven applications.

- SQS: Allows Lambda functions to consume messages from SQS queues. This enables asynchronous processing and decoupling of services.

- CloudWatch Events/EventBridge: Allows scheduling or reacting to events from other AWS services. This facilitates automating tasks and reacting to system events.

- IAM Roles and Permissions: Lambda functions require an IAM role with appropriate permissions to access other AWS services. The role defines the actions the function is authorized to perform. For example, if a Lambda function needs to write to an S3 bucket, the IAM role must have `s3:PutObject` permissions.

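- Environment Variables: Environment variables store configuration data, such as service endpoints, feature flags, and database connection strings. They allow you to configure your Lambda function without modifying the code. For secrets such as API keys, AWS Secrets Manager or Systems Manager Parameter Store is generally a safer choice. Environment variables are visible to anyone who can read the function’s configuration.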

- SDK Integration: AWS SDKs (Software Development Kits) for various programming languages provide APIs for interacting with AWS services within your Lambda function code. For example, the AWS SDK for Python (Boto3) enables you to interact with S3, DynamoDB, and other services.

- Example: S3 Integration: A Lambda function can be triggered by an S3 event (e.g., object upload). The function code can then process the uploaded object. For example, it can resize an image, extract text from a document, or perform data validation. The Lambda function needs the `s3:GetObject` and `s3:PutObject` permissions to read from and write to the S3 bucket, respectively. The S3 event data provides information about the uploaded object, such as its key (filename) and bucket name.
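The S3 integration can be sketched as a Python handler that pulls the bucket name and object key out of each record in the event. S3 URL-encodes object keys in event notifications (a space arrives as `+`), so the sketch decodes them with `urllib.parse.unquote_plus` before use.

The sketch makes these assumptions:

- The event is trimmed to the fields used here.
- `extract_s3_objects` is a hypothetical helper name.
- The actual object processing is left as a comment, since it would need the AWS SDK and the `s3:GetObject` permission.

```python
import json
import urllib.parse
from typing import List, Tuple


def extract_s3_objects(event: dict) -> List[Tuple[str, str]]:
    """Return (bucket, key) pairs from an S3 event notification.

    S3 URL-encodes object keys in event notifications (a space arrives as '+'),
    so keys are decoded with unquote_plus before use.
    """
    objects = []
    for record in event.get("Records", []):
        s3 = record.get("s3", {})
        bucket = s3.get("bucket", {}).get("name")
        key = s3.get("object", {}).get("key")
        if bucket and key:
            objects.append((bucket, urllib.parse.unquote_plus(key)))
    return objects


def lambda_handler(event, context):
    objects = extract_s3_objects(event)
    # A real function would read each object here with the AWS SDK, e.g.
    # boto3.client("s3").get_object(Bucket=bucket, Key=key), which requires s3:GetObject.
    return {
        "statusCode": 200,
        "body": json.dumps({"objects": [[bucket, key] for bucket, key in objects]}),
    }
```

An event whose key is `uploads/my+photo%281%29.jpg` yields the key `uploads/my photo(1).jpg`. That decoded form is the one to pass to the SDK.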

Deploying and Testing the Lambda Function

Deploying and testing a Lambda function are critical steps in the development lifecycle. This phase verifies that the function operates as intended within the AWS environment. It involves initializing the Terraform configuration, planning the infrastructure changes, applying those changes, and then validating the function’s behavior through various testing methods. Together, these steps confirm that the Lambda function is integrated correctly and works as expected.

Initializing, Planning, and Applying the Terraform Configuration

The deployment process using Terraform involves a sequence of commands designed to manage the infrastructure. These commands are essential for provisioning and updating the Lambda function within the AWS cloud.

The core commands are:

- `terraform init`: This command initializes a new or existing Terraform working directory. It downloads the necessary provider plugins, such as the AWS provider, which allows Terraform to interact with AWS resources. Initialization is the first step and must be performed before any other Terraform commands.

- `terraform plan`: This command creates an execution plan. It analyzes the current state of the infrastructure and compares it to the desired state defined in the Terraform configuration files. The plan outlines the changes that Terraform will make to achieve the desired state, such as creating, updating, or deleting resources. This is a crucial step for understanding the impact of the changes before applying them.

- `terraform apply`: This command applies changes to the infrastructure, creating, updating, or deleting resources as defined in the Terraform configuration. After `terraform plan` has been executed and reviewed, `terraform apply` is used to deploy the infrastructure. Unless it is given a saved plan file, `terraform apply` computes a fresh plan, displays it, and prompts the user to confirm the actions before proceeding.

Here’s a practical example illustrating these commands:

- Navigate to the directory containing your Terraform configuration files.

- Run `terraform init`. This will download the necessary AWS provider. The output will confirm the successful initialization, indicating the provider has been installed.

- Run `terraform plan`. This will display the execution plan, showing the resources that will be created (e.g., the Lambda function, the IAM role, etc.). Review the plan to ensure it reflects the expected changes.

- Run `terraform apply`. Terraform will prompt you to confirm the actions. Type `yes` and press Enter to proceed. Terraform will then create the resources as defined in your configuration. The output will display the progress and the final state of the resources.
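In a CI pipeline, the same three steps usually run non-interactively. The sketch below wraps them with Python’s `subprocess` module:

- `-input=false` stops Terraform from prompting for input.
- The plan is saved with `-out` and `terraform apply` is given that file, so the apply executes exactly the plan that was produced and does not ask for confirmation.
- The plan file name `tfplan` is an arbitrary choice.
- The function runs as a dry run by default, printing the commands instead of executing them.

```python
import subprocess
from pathlib import Path
from typing import List

PLAN_FILE = "tfplan"  # arbitrary name for the saved plan


def terraform_commands(plan_file: str = PLAN_FILE) -> List[List[str]]:
    """The init -> plan -> apply sequence for a non-interactive run.

    The plan is saved with -out and apply is given that file, so apply executes
    exactly the reviewed plan; Terraform does not prompt when applying a saved plan.
    """
    return [
        ["terraform", "init", "-input=false"],
        ["terraform", "plan", "-input=false", f"-out={plan_file}"],
        ["terraform", "apply", "-input=false", plan_file],
    ]


def run(workdir: Path, dry_run: bool = True) -> List[str]:
    """Print (dry run) or execute each command in workdir; check=True stops on the first failure."""
    lines = []
    for cmd in terraform_commands():
        lines.append(" ".join(cmd))
        if dry_run:
            print(lines[-1])
        else:
            subprocess.run(cmd, cwd=workdir, check=True)
    return lines
```

In practice, a review step usually sits between plan and apply, for example a human approval that inspects the output of `terraform show tfplan`.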

Demonstrating the Process of Testing the Deployed Lambda Function

Testing a deployed Lambda function is essential to verify its functionality and ensure it meets the defined requirements. This process involves invoking the function and examining its output. Different testing methods can be employed, ranging from simple console-based invocations to more sophisticated integration tests.

The testing process typically includes:

- Direct Invocation: Invoking the function directly using the AWS console, CLI, or SDK. This is the most basic form of testing and is useful for verifying the function’s core logic.

- Input and Output Validation: Providing various inputs to the function and validating the output against the expected results. This ensures the function handles different scenarios correctly.

- Error Handling Verification: Testing the function’s ability to handle errors gracefully. This includes verifying that the function logs errors and returns appropriate error messages.

- Integration Testing: Testing the function in conjunction with other AWS services, such as API Gateway, S3, or DynamoDB. This ensures the function integrates correctly with the broader system.

An example of testing involves:

- Accessing the AWS Console: Log in to the AWS console and navigate to the Lambda service.

- Selecting the Function: Choose the Lambda function you deployed using Terraform.

- Testing the Function: Click the “Test” tab. You can configure test events with various inputs. For example, if your function expects a JSON payload, you can create a test event with a sample JSON payload.

- Invoking the Function: Click the “Test” button to invoke the function with the configured test event.

- Viewing the Results: The console displays the execution results, including the function’s output, logs, and any errors. Verify the output against the expected results to confirm the function’s behavior.

Providing Examples of How to Invoke the Lambda Function Through the AWS Console or CLI

Invoking a Lambda function through the AWS console or CLI provides a straightforward way to test and interact with the function. These methods allow for easy invocation with various input payloads, enabling comprehensive testing and debugging.

Invoking via the AWS Console:

- Access the AWS Console: Navigate to the AWS console and sign in.

- Navigate to Lambda: Go to the Lambda service.

- Select the Function: Choose the Lambda function that you want to invoke.

- Configure Test Event: Click the “Test” tab. You can configure test events, which are JSON payloads that the Lambda function will receive as input. Create a new test event or select an existing one.

- Invoke the Function: Click the “Test” button. This will invoke the function with the selected test event.

- View Results: Examine the execution results, including the output, logs, and any errors. The “Details” section will show the function’s response, execution time, and other metrics.

Invoking via the AWS CLI:

The AWS CLI offers a programmatic way to invoke Lambda functions. The `aws lambda invoke` command is used for this purpose.

- Install and Configure the AWS CLI: Ensure the AWS CLI is installed and configured with the appropriate AWS credentials and region.

- Prepare the Input Payload (Optional): If the function requires input, create a JSON file containing the input data. For example, create a file named `input.json` with the following content:

`{"key1": "value1", "key2": "value2"}`

- Invoke the Function: Use the `aws lambda invoke` command. It takes the function name, an optional invocation type (`RequestResponse`, the default, is synchronous), an optional input payload, and the path of the file where the function’s response is written.

Example using an input file:

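`aws lambda invoke --function-name my-lambda-function --cli-binary-format raw-in-base64-out --payload file://input.json output.txt`

With AWS CLI version 2, `--cli-binary-format raw-in-base64-out` tells the CLI that the payload is raw JSON rather than Base64.

Example without an input file (passing an empty payload):

`aws lambda invoke --function-name my-lambda-function output.txt`

- Examine the Output: The command writes the function’s response to the output file (e.g., `output.txt`). Inspect this file to verify the function’s output. The CLI also prints a short summary that includes the `StatusCode` and `ExecutedVersion`.

  Adding `--log-type Tail` also returns the last 4 KB of the execution log, Base64-encoded in `LogResult`. That log’s `REPORT` line shows the billed duration and memory used.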

Managing Dependencies and Modules

Efficient management of dependencies and modularization are crucial for creating maintainable and scalable infrastructure as code (IaC) solutions, particularly when deploying Lambda functions. Terraform’s module system facilitates code reuse, reduces redundancy, and promotes best practices. Properly handling dependencies, both within Terraform configurations and within the Lambda function’s code, ensures consistent and reliable deployments.

Creating a Custom Terraform Module for Reusable Lambda Function Deployments

Creating a custom Terraform module for Lambda functions encapsulates the common configuration elements, simplifying deployment and promoting consistency across multiple Lambda functions. This approach improves maintainability by centralizing changes and updates to the Lambda function’s infrastructure.

To create a reusable Lambda module, the following components are essential:

- Module Structure: A well-defined module structure organizes the configuration files logically. A typical structure includes a `main.tf` file for resource definitions, a `variables.tf` file for input variables, and an `outputs.tf` file for exposing values from the module.

- Input Variables: Define variables to customize the Lambda function’s configuration. Examples include:

- `function_name`: The name of the Lambda function.

- `handler`: The entry point for the Lambda function (e.g., `index.handler`).

- `runtime`: The Lambda function’s runtime environment (e.g., `nodejs18.x`).

- `source_path`: The path to the Lambda function’s deployment package (a ZIP file containing the code).

- `memory_size`: The memory allocated to the Lambda function.

- `timeout`: The timeout duration for the Lambda function.

- `environment_variables`: A map of environment variables to set for the Lambda function.

- Resource Definitions: Within `main.tf`, define the `aws_lambda_function` resource, utilizing the input variables for configuration. Also, consider including other related resources such as `aws_iam_role` for execution permissions and `aws_cloudwatch_log_group` for logging.

- Output Values: Define output values in `outputs.tf` to expose relevant information about the deployed Lambda function, such as the function’s ARN (Amazon Resource Name).

Example of `main.tf` within a `lambda` module:

```terraform
# IAM role assumed by the Lambda service when it runs the function
resource "aws_iam_role" "lambda_role" {
  name = "${var.function_name}-role"

  assume_role_policy = jsonencode({
    Version = "2012-10-17"
    Statement = [{
      Action    = "sts:AssumeRole"
      Effect    = "Allow"
      Principal = { Service = "lambda.amazonaws.com" }
    }]
  })
}

resource "aws_lambda_function" "this" {
  function_name    = var.function_name
  filename         = var.source_path
  handler          = var.handler
  runtime          = var.runtime
  role             = aws_iam_role.lambda_role.arn
  memory_size      = var.memory_size
  timeout          = var.timeout
  source_code_hash = filebase64sha256(var.source_path) # redeploy when the ZIP changes

  # Emit the environment block only when variables are supplied
  dynamic "environment" {
    for_each = length(var.environment_variables) > 0 ? [1] : []
    content {
      variables = var.environment_variables
    }
  }

  # Create the log group and logging permissions before the function
  depends_on = [
    aws_cloudwatch_log_group.log_group,
    aws_iam_role_policy_attachment.lambda_basic_execution,
  ]
}

resource "aws_cloudwatch_log_group" "log_group" {
  name              = "/aws/lambda/${var.function_name}"
  retention_in_days = 30
}

# Managed policy that allows writing logs to CloudWatch Logs
resource "aws_iam_role_policy_attachment" "lambda_basic_execution" {
  role       = aws_iam_role.lambda_role.name
  policy_arn = "arn:aws:iam::aws:policy/service-role/AWSLambdaBasicExecutionRole"
}
```

Example of `variables.tf` within a `lambda` module:

```terraform
variable "function_name" {
  type        = string
  description = "The name of the Lambda function."
}

variable "handler" {
  type        = string
  description = "The entry point for the Lambda function (e.g., index.handler)."
}

variable "runtime" {
  type        = string
  description = "The Lambda function's runtime environment (e.g., nodejs18.x)."
}

variable "source_path" {
  type        = string
  description = "The path to the Lambda function's deployment package (ZIP file)."
}

variable "memory_size" {
  type        = number
  default     = 128
  description = "The memory allocated to the Lambda function (in MB)."
}

variable "timeout" {
  type        = number
  default     = 30
  description = "The timeout duration for the Lambda function (in seconds)."
}

variable "environment_variables" {
  type        = map(string)
  default     = {}
  description = "Environment variables to set for the Lambda function."
}
```

Example of `outputs.tf` within a `lambda` module:

```terraform
output "function_arn" {
  value       = aws_lambda_function.this.arn
  description = "The ARN of the Lambda function."
}
```

To use this module in a Terraform configuration, reference it using the `module` block:

```terraform
module "my_lambda" {
  source        = "./modules/lambda" # Path to the lambda module
  function_name = "my-example-function"
  handler       = "index.handler"
  runtime       = "nodejs18.x"
  source_path   = "lambda_function.zip" # Assuming a zip file containing the code

  environment_variables = {
    EXAMPLE_VARIABLE = "example_value"
  }
}
```

This modular approach streamlines deployments, improves consistency, and simplifies updates by allowing modifications to be made in one place and propagated across all instances of the module.
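Later sections wire functions to API Gateway and CloudWatch alarms, which need the function’s name and invoke ARN rather than its ARN. Below is a minimal sketch of two additional outputs the `lambda` module could expose. The output names are illustrative and not part of the module above.

```terraform
# Hypothetical additional outputs for modules/lambda/outputs.tf

output "function_name" {
  value       = aws_lambda_function.this.function_name
  description = "The name of the Lambda function (used by alarms and permissions)."
}

output "invoke_arn" {
  value       = aws_lambda_function.this.invoke_arn
  description = "ARN used by API Gateway integrations to invoke the function."
}
```

A caller could then reference `module.my_lambda.function_name` or `module.my_lambda.invoke_arn` from other resources, without knowing the module’s internals.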

Organizing the Use of External Modules to Manage Dependencies

Terraform’s ecosystem provides numerous external modules that simplify the management of infrastructure dependencies, such as networking, security groups, and databases. Leveraging these modules promotes code reuse, reduces the need to write custom configurations for common infrastructure components, and adheres to best practices. To effectively use external modules:

- Module Selection: Choose modules from trusted sources, such as the Terraform Registry, or from reputable providers. Verify the module’s documentation, examples, and versioning to ensure compatibility and proper usage.

- Module Configuration: Configure the external module by providing the necessary input variables. Refer to the module’s documentation for the required and optional variables.

- Versioning: Pin the module’s version to maintain stability and control over changes. Use the `version` argument in the `module` block to specify the desired version.

- Dependency Management: Terraform automatically handles dependencies between resources defined within the external module and other resources in the configuration. Ensure that the module’s outputs are used as inputs to other resources when required.

Example of using the community `terraform-aws-modules/vpc/aws` module from the Terraform Registry to create a VPC:

```terraform
module "vpc" {
  source  = "terraform-aws-modules/vpc/aws"
  version = "5.1.0"

  name = "my-vpc"
  cidr = "10.0.0.0/16"

  azs             = ["us-east-1a", "us-east-1b", "us-east-1c"]
  private_subnets = ["10.0.1.0/24", "10.0.2.0/24", "10.0.3.0/24"]
  public_subnets  = ["10.0.101.0/24", "10.0.102.0/24", "10.0.103.0/24"]

  tags = {
    Terraform = "true"
  }
}
```

In this example, the module manages the creation of a VPC, subnets, and other networking components.

The module’s outputs, such as the VPC ID and subnet IDs, can be used as inputs to other resources, such as Lambda functions, to connect them to the network. By leveraging external modules, the configuration is simplified, and the focus shifts from infrastructure provisioning to application deployment. This approach accelerates development cycles and reduces the risk of errors.
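The following sketch shows that wiring by attaching a function to the VPC module’s private subnets. It assumes the `module "vpc"` block above and an IAM role named `aws_iam_role.lambda_role` in the same configuration (as in the module’s `main.tf`). The security group, function name, and package path are hypothetical.

```terraform
# Hypothetical security group for the function's network interfaces
resource "aws_security_group" "lambda_sg" {
  name   = "my-lambda-sg"
  vpc_id = module.vpc.vpc_id

  # Outbound access only; Lambda needs no inbound rules
  egress {
    from_port   = 0
    to_port     = 0
    protocol    = "-1"
    cidr_blocks = ["0.0.0.0/0"]
  }
}

# Lets Lambda create and delete the network interfaces it uses in the VPC
resource "aws_iam_role_policy_attachment" "vpc_access" {
  role       = aws_iam_role.lambda_role.name
  policy_arn = "arn:aws:iam::aws:policy/service-role/AWSLambdaVPCAccessExecutionRole"
}

resource "aws_lambda_function" "in_vpc" {
  function_name    = "my-vpc-function"
  filename         = "lambda_function.zip"
  source_code_hash = filebase64sha256("lambda_function.zip")
  handler          = "index.handler"
  runtime          = "nodejs18.x"
  role             = aws_iam_role.lambda_role.arn

  # Module outputs become inputs: subnet IDs from the VPC module
  vpc_config {
    subnet_ids         = module.vpc.private_subnets
    security_group_ids = [aws_security_group.lambda_sg.id]
  }
}
```

Terraform infers the dependency on `module.vpc` from these references, so the VPC is created before the function.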

Handling Dependencies in the Lambda Function’s Code

Dependencies within the Lambda function’s code, such as libraries and frameworks, must be managed to ensure that the function executes correctly. The method for handling dependencies depends on the programming language and the deployment package format.

- Node.js:

- Use `npm` to manage dependencies.

- Include the `node_modules` directory in the deployment package (e.g., a ZIP file).

- Consider using a build process (e.g., Webpack) to bundle the code and dependencies.

- Python:

- Use `pip` to manage dependencies.

- Create a `requirements.txt` file to list the dependencies.

- Include the dependencies in the deployment package itself, or move them into a Lambda Layer to keep them separate from the function code.

- Java:

- Use a build tool like Maven or Gradle to manage dependencies.

- Package the function and its dependencies into a single uber JAR (for example, with the Maven Shade plugin), or into a ZIP file with the dependency JARs in a `lib` directory.

For example, using Python and `pip`:

1. Create `requirements.txt`

List the required Python packages and their versions:

```text
requests==2.28.1
```

2. Package the Code and Dependencies

Create a deployment package (e.g., a ZIP file) that contains the Lambda function’s code (e.g., `main.py`) and the installed dependencies at the root of the archive. One way to do this is to install the dependencies into a separate staging directory with `pip install --target`, copy the code into it, and zip that directory’s contents:

```bash
# Install dependencies into a staging directory, not the project root
pip install -r requirements.txt --target ./package
# Place the handler module next to its dependencies
cp main.py ./package/
# Zip the directory contents so main.py sits at the root of the archive
cd package && zip -r ../lambda_function.zip . && cd ..
```

The `lambda_function.zip` file is then used in the Terraform configuration. This approach ensures that the Lambda function has access to the libraries and frameworks it needs to execute its logic. Alternative methods, such as Lambda Layers, offer more flexible dependency management by separating the code from its dependencies.

Lambda Layers promote code reuse and reduce the size of the function’s own deployment package, which can speed up deployments when only the function code changes.
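The following is a minimal sketch of the layer approach. It makes these assumptions, all of which are illustrative:

- The `hashicorp/archive` provider is available.
- A `layer/python/` directory was populated beforehand with `pip install -r requirements.txt --target ./layer/python`.
- `main.py` defines a `handler` function.
- An execution role `aws_iam_role.lambda_role` exists.

For Python runtimes, Lambda adds the layer’s `python/` directory to the import path, which is why that prefix matters.

```terraform
# Zip the pre-built dependency tree; paths are hypothetical
data "archive_file" "deps_layer" {
  type        = "zip"
  source_dir  = "${path.module}/layer" # contains python/<packages>
  output_path = "${path.module}/build/deps_layer.zip"
}

resource "aws_lambda_layer_version" "deps" {
  layer_name          = "python-deps"
  filename            = data.archive_file.deps_layer.output_path
  source_code_hash    = data.archive_file.deps_layer.output_base64sha256
  compatible_runtimes = ["python3.11"]
}

# The function package now holds only the application code
data "archive_file" "function_code" {
  type        = "zip"
  source_file = "${path.module}/main.py"
  output_path = "${path.module}/build/function.zip"
}

resource "aws_lambda_function" "with_layer" {
  function_name    = "my-python-function"
  filename         = data.archive_file.function_code.output_path
  source_code_hash = data.archive_file.function_code.output_base64sha256
  handler          = "main.handler"
  runtime          = "python3.11"
  role             = aws_iam_role.lambda_role.arn
  layers           = [aws_lambda_layer_version.deps.arn] # versioned layer ARN
}
```

When `requirements.txt` changes, only the layer is republished. A code-only change touches just the small function package.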

Configuring Triggers and Events

Terraform facilitates the configuration of event triggers for AWS Lambda functions, enabling these functions to respond to various events within the AWS ecosystem. This capability is crucial for building event-driven architectures, where actions are automatically initiated in response to specific occurrences. By defining these triggers, you can seamlessly integrate Lambda functions with other AWS services, creating automated workflows and responsive applications.

Configuring Lambda Function Triggers

Lambda functions can be triggered by a multitude of event sources, and Terraform provides resources to configure these triggers. The choice of trigger depends on the desired functionality and the service that should initiate the function execution. There are two wiring patterns:

- Poll-based sources (DynamoDB streams, Kinesis, Amazon SQS): Lambda reads from the source through an event source mapping.
- Push-based sources (API Gateway, S3, SNS, EventBridge): the service invokes the function directly and needs a resource-based permission that allows it to do so.

- API Gateway: API Gateway enables the creation of REST APIs that trigger Lambda functions. This configuration involves defining the API endpoint, HTTP method (e.g., GET, POST), and integration with the Lambda function. When a request is received at the endpoint, API Gateway invokes the specified Lambda function.

- Amazon S3: S3 can trigger Lambda functions when objects are created (including overwrites) or deleted. This is achieved by configuring an S3 bucket notification to send events to the Lambda function. The function receives information about the event, such as the object key and bucket name, allowing it to process the data.

- Amazon CloudWatch Events (EventBridge): CloudWatch Events (now EventBridge) enables the creation of rules that trigger Lambda functions based on time schedules or specific events within other AWS services. This is particularly useful for scheduled tasks or responding to operational events. The rule defines the event pattern that matches the events of interest, and the target is the Lambda function to be invoked.

- Amazon DynamoDB: DynamoDB streams can trigger Lambda functions upon changes to DynamoDB table items. This is achieved by enabling streams on the DynamoDB table and configuring a Lambda function to process the stream records. The function receives information about the changes, such as the item before and after the modification, depending on the stream view type configured on the table.

- Amazon SNS: Amazon SNS (Simple Notification Service) can trigger Lambda functions when a message is published to an SNS topic. This is useful for building fan-out architectures, where a single message can trigger multiple functions. The function receives the message payload from the SNS topic.

- Amazon Kinesis: Amazon Kinesis streams can trigger Lambda functions to process real-time data streams. This involves configuring a Kinesis stream as an event source for the Lambda function. The function receives batches of records from the stream.
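For a poll-based source, the wiring is an event source mapping. The sketch below enables a stream on a hypothetical `orders` table and maps it to a function. It assumes the function already exists as `aws_lambda_function.example` and that its execution role is `aws_iam_role.lambda_role`. The table name, key, and batch size are illustrative.

```terraform
# Hypothetical table with streams enabled
resource "aws_dynamodb_table" "orders" {
  name             = "orders"
  billing_mode     = "PAY_PER_REQUEST"
  hash_key         = "id"
  stream_enabled   = true
  stream_view_type = "NEW_AND_OLD_IMAGES" # records carry the item before and after the change

  attribute {
    name = "id"
    type = "S"
  }
}

# Managed policy that lets the function's role read from DynamoDB streams
resource "aws_iam_role_policy_attachment" "dynamodb_stream_read" {
  role       = aws_iam_role.lambda_role.name
  policy_arn = "arn:aws:iam::aws:policy/service-role/AWSLambdaDynamoDBExecutionRole"
}

# Lambda polls the stream and invokes the function with batches of records
resource "aws_lambda_event_source_mapping" "orders_stream" {
  event_source_arn  = aws_dynamodb_table.orders.stream_arn
  function_name     = aws_lambda_function.example.arn
  starting_position = "LATEST"
  batch_size        = 100

  depends_on = [aws_iam_role_policy_attachment.dynamodb_stream_read]
}
```

A Kinesis trigger uses the same `aws_lambda_event_source_mapping` resource with the stream’s ARN as `event_source_arn`. The role then needs the `AWSLambdaKinesisExecutionRole` managed policy instead.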

Event Source Mappings for Different AWS Services

Each AWS service is connected to a Lambda function through its own set of Terraform resources, tailored to how that service delivers events. Strictly speaking, an event source mapping (`aws_lambda_event_source_mapping`) applies only to poll-based sources such as DynamoDB streams and Kinesis. Push-based services such as S3, EventBridge, and SNS invoke the function directly and require an `aws_lambda_permission`. Understanding these differences is crucial for designing efficient and scalable event-driven architectures.

- S3 Event Source Mapping: Configuring an S3 trigger involves defining the bucket name, the event types that trigger the function (e.g., object creation, object deletion), and any optional filtering criteria. The `aws_s3_bucket_notification` resource is used for this, together with an `aws_lambda_permission` that allows `s3.amazonaws.com` to invoke the function. For instance, a configuration might specify that a Lambda function should be triggered whenever a new object is uploaded to an S3 bucket with a specific prefix.

- CloudWatch Events (EventBridge) Event Source Mapping: Configuring a CloudWatch Events (EventBridge) trigger involves defining an event rule that specifies the event pattern or schedule to match, with the Lambda function as the target. The `aws_cloudwatch_event_rule` and `aws_cloudwatch_event_target` resources are used for this, plus an `aws_lambda_permission` for `events.amazonaws.com`. An example configuration could trigger a Lambda function every hour with the schedule expression `rate(1 hour)`.

- DynamoDB Event Source Mapping: DynamoDB event source mappings involve enabling streams on a DynamoDB table and configuring the Lambda function to process the stream records. The `aws_lambda_event_source_mapping` resource is used for this. The function will be invoked whenever items are created, updated, or deleted in the DynamoDB table.

- Kinesis Event Source Mapping: Kinesis event source mappings involve configuring a Kinesis stream as an event source for the Lambda function. The `aws_lambda_event_source_mapping` resource is used for this. The function receives batches of records from the Kinesis stream, enabling real-time data processing.

- SNS Event Source Mapping: SNS triggers involve subscribing a Lambda function to an SNS topic with an `aws_sns_topic_subscription` resource whose protocol is `lambda`. An `aws_lambda_permission` resource must also grant SNS permission to invoke the function. The function is triggered whenever a message is published to the SNS topic.
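The push-based triggers above share a pattern: one resource routes events to the function, and an `aws_lambda_permission` lets the service invoke it. The sketch below assumes that `aws_lambda_function.example` already exists. The bucket name, prefix, rule name, and topic name are hypothetical.

```terraform
# --- S3: invoke on new objects under a prefix ---
resource "aws_s3_bucket" "uploads" {
  bucket = "my-example-upload-bucket" # bucket names are globally unique
}

resource "aws_lambda_permission" "allow_s3" {
  statement_id  = "AllowS3Invoke"
  action        = "lambda:InvokeFunction"
  function_name = aws_lambda_function.example.function_name
  principal     = "s3.amazonaws.com"
  source_arn    = aws_s3_bucket.uploads.arn
}

resource "aws_s3_bucket_notification" "uploads" {
  bucket = aws_s3_bucket.uploads.id

  lambda_function {
    lambda_function_arn = aws_lambda_function.example.arn
    events              = ["s3:ObjectCreated:*"]
    filter_prefix       = "incoming/"
  }

  # S3 validates the destination, so the permission must exist first
  depends_on = [aws_lambda_permission.allow_s3]
}

# --- EventBridge: invoke once an hour ---
resource "aws_cloudwatch_event_rule" "hourly" {
  name                = "hourly-task"
  schedule_expression = "rate(1 hour)"
}

resource "aws_cloudwatch_event_target" "hourly" {
  rule = aws_cloudwatch_event_rule.hourly.name
  arn  = aws_lambda_function.example.arn
}

resource "aws_lambda_permission" "allow_eventbridge" {
  statement_id  = "AllowEventBridgeInvoke"
  action        = "lambda:InvokeFunction"
  function_name = aws_lambda_function.example.function_name
  principal     = "events.amazonaws.com"
  source_arn    = aws_cloudwatch_event_rule.hourly.arn
}

# --- SNS: invoke for every message published to a topic ---
resource "aws_sns_topic" "events" {
  name = "app-events"
}

resource "aws_sns_topic_subscription" "lambda" {
  topic_arn = aws_sns_topic.events.arn
  protocol  = "lambda"
  endpoint  = aws_lambda_function.example.arn
}

resource "aws_lambda_permission" "allow_sns" {
  statement_id  = "AllowSNSInvoke"
  action        = "lambda:InvokeFunction"
  function_name = aws_lambda_function.example.function_name
  principal     = "sns.amazonaws.com"
  source_arn    = aws_sns_topic.events.arn
}
```

Scoping each permission with `source_arn` restricts invocation to that specific bucket, rule, or topic rather than to any resource of that service.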

Configuring an API Gateway Endpoint that Triggers a Lambda Function

Configuring an API Gateway endpoint to trigger a Lambda function is a common pattern for building serverless APIs. Terraform facilitates this configuration by defining the API Gateway resources and integrating them with the Lambda function. This setup allows the Lambda function to handle HTTP requests and return responses.

The process involves several steps:

- Define the API Gateway: Create an API Gateway resource using the `aws_api_gateway_rest_api` resource. This resource defines the overall API.

- Create a Resource: Define a resource within the API Gateway, such as `/hello`, using the `aws_api_gateway_resource` resource. This resource represents a path in the API.

- Create a Method: Define an HTTP method (e.g., GET, POST) for the resource using the `aws_api_gateway_method` resource. This resource defines the HTTP verb and the authorization type.

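- Integrate with the Lambda Function: Configure the method to integrate with the Lambda function using the `aws_api_gateway_integration` resource. This resource specifies the function’s invoke ARN and the integration type. With the `AWS_PROXY` (Lambda proxy) type, API Gateway passes the whole request to the function and returns its response, without separate request/response mappings.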

- Grant Permissions: Grant API Gateway permission to invoke the Lambda function using the `aws_lambda_permission` resource.

- Deploy the API: Make the API accessible by deploying it to a stage with the `aws_api_gateway_deployment` and `aws_api_gateway_stage` resources.

Example:

Assume a simple Lambda function named `hello_function` that returns a greeting. The Terraform configuration would include:

1. The `aws_api_gateway_rest_api` resource, defining the API name (e.g., “MyAPI”).

2. The `aws_api_gateway_resource` resource, creating a resource path (e.g., “/hello”).

3. The `aws_api_gateway_method` resource, configuring a GET method for the “/hello” resource.

4. The `aws_api_gateway_integration` resource, integrating the GET method with the `hello_function` Lambda function.

5. The `aws_lambda_permission` resource, granting API Gateway permission to invoke the Lambda function.

6. The `aws_api_gateway_deployment` and `aws_api_gateway_stage` resources, deploying the API and making it accessible.

After deployment, accessing the API endpoint (e.g., `https://your-api-id.execute-api.your-region.amazonaws.com/stage/hello`) would trigger the Lambda function and return the greeting.
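The six resources listed above might look like the following sketch. It assumes that `hello_function` is defined elsewhere as `aws_lambda_function.hello_function` and that the Lambda proxy integration is used. The stage name `dev` is illustrative.

```terraform
resource "aws_api_gateway_rest_api" "my_api" {
  name = "MyAPI"
}

resource "aws_api_gateway_resource" "hello" {
  rest_api_id = aws_api_gateway_rest_api.my_api.id
  parent_id   = aws_api_gateway_rest_api.my_api.root_resource_id
  path_part   = "hello"
}

resource "aws_api_gateway_method" "get_hello" {
  rest_api_id   = aws_api_gateway_rest_api.my_api.id
  resource_id   = aws_api_gateway_resource.hello.id
  http_method   = "GET"
  authorization = "NONE"
}

resource "aws_api_gateway_integration" "get_hello" {
  rest_api_id             = aws_api_gateway_rest_api.my_api.id
  resource_id             = aws_api_gateway_resource.hello.id
  http_method             = aws_api_gateway_method.get_hello.http_method
  integration_http_method = "POST" # Lambda is always invoked with POST
  type                    = "AWS_PROXY"
  uri                     = aws_lambda_function.hello_function.invoke_arn
}

resource "aws_lambda_permission" "apigw" {
  statement_id  = "AllowAPIGatewayInvoke"
  action        = "lambda:InvokeFunction"
  function_name = aws_lambda_function.hello_function.function_name
  principal     = "apigateway.amazonaws.com"
  source_arn    = "${aws_api_gateway_rest_api.my_api.execution_arn}/*/*"
}

resource "aws_api_gateway_deployment" "this" {
  rest_api_id = aws_api_gateway_rest_api.my_api.id

  # Redeploy whenever the route or integration changes
  triggers = {
    redeployment = sha1(jsonencode([
      aws_api_gateway_resource.hello.id,
      aws_api_gateway_method.get_hello.id,
      aws_api_gateway_integration.get_hello.id,
    ]))
  }

  lifecycle {
    create_before_destroy = true
  }
}

resource "aws_api_gateway_stage" "dev" {
  rest_api_id   = aws_api_gateway_rest_api.my_api.id
  deployment_id = aws_api_gateway_deployment.this.id
  stage_name    = "dev"
}

output "hello_url" {
  value = "${aws_api_gateway_stage.dev.invoke_url}/hello"
}
```

The `triggers` hash exists because API Gateway does not apply changes to an existing deployment. With the proxy integration, the function is expected to return an object containing `statusCode` and `body`.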

Monitoring and Logging

Effective monitoring and logging are critical for maintaining the health, performance, and security of Lambda functions. By collecting and analyzing logs, developers can gain valuable insights into function behavior, identify and resolve issues, and optimize resource utilization. Implementing comprehensive monitoring allows for proactive detection of anomalies and potential problems, enabling timely intervention and minimizing downtime.

Configuring CloudWatch Logging for Lambda Functions

CloudWatch is the primary logging and monitoring service for AWS. Lambda functions integrate with CloudWatch automatically, and their logs are stored in CloudWatch Logs. This works without any configuration, but Terraform lets you customize log group names, retention policies, and other settings.

In Terraform, these settings live mainly in two places. The `aws_cloudwatch_log_group` resource holds the log group name and retention period. In recent AWS provider versions, the `logging_config` block of the `aws_lambda_function` resource holds the log format and target log group.

- Log Group Naming: By default, Lambda writes to a log group named `/aws/lambda/<function-name>`. Managing that log group explicitly with an `aws_cloudwatch_log_group` resource of the same name lets Terraform control its settings. To send logs to a differently named group, for example one following a convention like `/aws/lambda/my-application-production-lambda`, recent AWS provider versions let you set the `log_group` argument in the function’s `logging_config` block. Consistent naming makes logs easier to organize and identify.

- Log Retention: The `retention_in_days` attribute in the `aws_cloudwatch_log_group` resource determines how long logs are stored. CloudWatch Logs accepts only specific values between 1 and 3653 days (approximately 10 years), such as 1, 7, 14, 30, 90, or 365. Setting it to `0` keeps logs indefinitely, which is not recommended because storage costs keep growing. The choice of retention period depends on compliance requirements, debugging needs, and storage budget. For example, a shorter retention period (e.g., 7 days) might be suitable for frequently updated development environments, while a longer period (e.g., 365 days) might be necessary for production environments subject to regulatory requirements.

- Log Format: Lambda functions automatically log information about each invocation, including the function’s execution time, memory usage, and any errors. By default, these logs are formatted as plain text. However, the function code itself can also generate logs using standard output (e.g., `console.log` in Node.js or `print` in Python), which will be captured and stored in CloudWatch Logs. The format of these application-specific logs depends on the application code.

Consider structuring log messages with key-value pairs for easier parsing and analysis.

The following Terraform code snippet illustrates how to manage the function’s log group explicitly with a 30-day retention policy:

```terraform
resource "aws_cloudwatch_log_group" "example" {
  name              = "/aws/lambda/my-lambda-function"
  retention_in_days = 30
}

resource "aws_lambda_function" "example" {
  # ... other configuration ...
  function_name = "my-lambda-function"
  # ...

  # Create the log group first so Lambda does not create one without retention
  depends_on = [aws_cloudwatch_log_group.example]
}

# Ensure the Lambda function's IAM role has permissions to write logs to CloudWatch.
# The log group is tied to the function by its name: /aws/lambda/<function_name>.
```
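For the custom log group name and structured logs mentioned above, recent versions of the AWS provider (5.x) expose a `logging_config` block on `aws_lambda_function`. The sketch below assumes an existing `aws_iam_role.lambda_role` and a package named `lambda_function.zip`. The function and log group names are illustrative.

```terraform
# Custom-named log group with a longer retention period
resource "aws_cloudwatch_log_group" "app" {
  name              = "/aws/lambda/my-application-production-lambda"
  retention_in_days = 365
}

resource "aws_lambda_function" "structured_logs" {
  function_name    = "my-structured-logging-function"
  filename         = "lambda_function.zip"
  source_code_hash = filebase64sha256("lambda_function.zip")
  handler          = "index.handler"
  runtime          = "nodejs18.x"
  role             = aws_iam_role.lambda_role.arn

  # Requires an AWS provider version that supports Lambda advanced logging controls
  logging_config {
    log_format = "JSON"                            # "Text" is the default
    log_group  = aws_cloudwatch_log_group.app.name # instead of /aws/lambda/<function-name>
  }
}
```

With `log_format = "JSON"`, Lambda writes its log lines as JSON objects. This complements the key-value logging suggested above when logs are parsed downstream.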

Demonstrating the Process of Setting Up CloudWatch Alarms for Monitoring Lambda Performance

CloudWatch Alarms allow for automated monitoring of Lambda function metrics. Alarms trigger notifications or automated actions based on predefined thresholds for metrics like invocation errors, function duration, and concurrent executions. Properly configured alarms are essential for proactively detecting and responding to performance issues. The process involves several steps, including selecting metrics, defining thresholds, and configuring actions.

- Metric Selection: Choose the relevant metrics to monitor. Key metrics for Lambda functions include:

- Invocations: The number of times the function is executed.

- Errors: The number of function invocations that result in errors.

- Throttles: The number of times the function is throttled due to concurrency limits.

- Duration: The execution time of the function.

- ConcurrentExecutions: The number of concurrent function executions.

- Threshold Definition: Define the conditions that trigger the alarm. This involves setting:
  - the threshold value;
  - the comparison operator (e.g., greater than, less than);
  - the period (the length of each evaluation window);
  - the number of evaluation periods (how many consecutive periods must breach the threshold before the alarm fires).

  For example, you might set an alarm to trigger if the `Errors` metric exceeds 10 errors within a 5-minute period.

- Action Configuration: Specify the actions to be taken when the alarm state changes. Common actions include:

- Sending Notifications: Using SNS (Simple Notification Service) to send email, SMS, or other notifications.

- Automated Remediation: Triggering an Application Auto Scaling policy, for example one that adjusts the provisioned concurrency of a function alias.

The following Terraform code demonstrates the configuration of a CloudWatch alarm that triggers when the number of function errors exceeds a threshold:

```terraform
resource "aws_cloudwatch_metric_alarm" "lambda_errors" {
  alarm_name          = "lambda-errors-alarm"
  comparison_operator = "GreaterThanThreshold"
  evaluation_periods  = 2
  metric_name         = "Errors"
  namespace           = "AWS/Lambda"
  period              = 300 # 5 minutes
  statistic           = "Sum"
  threshold           = 10
  alarm_description   = "Alarm when Lambda function errors exceed 10 in two consecutive 5-minute periods."

  dimensions = {
    FunctionName = aws_lambda_function.example.function_name
  }

  # Add actions here, like sending notifications via SNS
  # alarm_actions = [aws_sns_topic.example.arn]
}
```

In this example, the alarm monitors the `Errors` metric for the specified Lambda function (`aws_lambda_function.example`).

The alarm moves to the `ALARM` state only when the sum of errors exceeds 10 in each of two consecutive 5-minute periods (`period = 300` seconds, `evaluation_periods = 2`). At that point, any configured actions, such as sending a notification, are triggered.
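To act on an alarm, its `alarm_actions` need a target. The sketch below creates an SNS topic with an email subscription, plus a second alarm on 99th-percentile `Duration`. The topic name, address, and 5-second threshold are hypothetical, and `aws_lambda_function.example` is assumed as in the snippet above.

```terraform
resource "aws_sns_topic" "alerts" {
  name = "lambda-alerts"
}

resource "aws_sns_topic_subscription" "ops_email" {
  topic_arn = aws_sns_topic.alerts.arn
  protocol  = "email"
  endpoint  = "ops@example.com" # hypothetical address
}

# Fires when p99 duration stays above 5 s for three consecutive minutes
resource "aws_cloudwatch_metric_alarm" "lambda_duration_p99" {
  alarm_name          = "lambda-duration-p99-alarm"
  comparison_operator = "GreaterThanThreshold"
  evaluation_periods  = 3
  metric_name         = "Duration"
  namespace           = "AWS/Lambda"
  period              = 60
  extended_statistic  = "p99"
  threshold           = 5000           # Duration is reported in milliseconds
  treat_missing_data  = "notBreaching" # no invocations is not an incident

  dimensions = {
    FunctionName = aws_lambda_function.example.function_name
  }

  alarm_actions = [aws_sns_topic.alerts.arn]
  ok_actions    = [aws_sns_topic.alerts.arn] # also notify on recovery
}
```

Email subscriptions stay pending until the recipient confirms them, so no notifications arrive before then.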

Creating a Configuration for Exporting Lambda Logs to a Central Logging Service

Centralized logging is crucial for aggregating logs from multiple sources, including Lambda functions, for easier analysis, troubleshooting, and security auditing. This often involves exporting logs to a dedicated logging service like Amazon Elasticsearch Service (now Amazon OpenSearch Service), Splunk, or Datadog. Terraform can be used to configure the necessary resources for log export. The configuration process generally involves these key steps:

- Setting up a Destination: This is the service where the logs will be stored. For example, this could be an Amazon ES domain, a Splunk instance, or a Datadog account.

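- Creating a Log Subscription Filter: This filter determines which log events CloudWatch Logs forwards, and where. It specifies the log group to monitor, an optional filter pattern, and a destination. The destination must be a Kinesis data stream, a Kinesis Data Firehose delivery stream, or a Lambda function, which can in turn deliver to the final service.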

- Configuring IAM Permissions: For Kinesis and Firehose destinations, an IAM role is required that allows CloudWatch Logs to deliver log events to the destination. A Lambda destination instead needs a resource-based permission for CloudWatch Logs.

The specific configuration details depend on the target logging service. A subscription filter cannot point at an Amazon ES domain directly. The following example therefore streams logs through a Kinesis Data Firehose delivery stream that is assumed to deliver into the domain:

```terraform
# Assumes an existing Kinesis Data Firehose delivery stream
# (aws_kinesis_firehose_delivery_stream.example) that delivers into the
# Elasticsearch/OpenSearch domain.
resource "aws_cloudwatch_log_subscription_filter" "example" {
  name            = "lambda-log-export"
  log_group_name  = aws_cloudwatch_log_group.example.name
  filter_pattern  = "" # Empty pattern: export every log event
  destination_arn = aws_kinesis_firehose_delivery_stream.example.arn
  role_arn        = aws_iam_role.example.arn
}

# Role assumed by CloudWatch Logs to deliver events to Firehose
resource "aws_iam_role" "example" {
  name = "lambda-log-export-role"

  assume_role_policy = jsonencode({
    Version = "2012-10-17"
    Statement = [{
      Action    = "sts:AssumeRole"
      Effect    = "Allow"
      Principal = { Service = "logs.amazonaws.com" }
    }]
  })
}

resource "aws_iam_role_policy" "example" {
  name = "lambda-log-export-policy"
  role = aws_iam_role.example.id

  policy = jsonencode({
    Version = "2012-10-17"
    Statement = [{
      Action   = ["firehose:PutRecord", "firehose:PutRecordBatch"]
      Effect   = "Allow"
      Resource = aws_kinesis_firehose_delivery_stream.example.arn
    }]
  })
}
```

In this example, the `aws_cloudwatch_log_subscription_filter` resource sets up a filter to export logs from the specified log group (`aws_cloudwatch_log_group.example.name`) to the delivery stream (`aws_kinesis_firehose_delivery_stream.example.arn`). The delivery stream then writes them to the Elasticsearch domain.

The `filter_pattern` is set to an empty string, which means all logs are exported. The IAM role (`aws_iam_role.example`) lets the CloudWatch Logs service put records into the delivery stream.
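Services such as Splunk or Datadog are often fed by a forwarder Lambda function subscribed to the log group. The sketch below shows that variant. It assumes a forwarder already defined as `aws_lambda_function.log_forwarder` (hypothetical) and the `aws_cloudwatch_log_group.example` log group. A Lambda destination needs a resource-based permission rather than `role_arn`. The filter pattern here forwards only events containing `ERROR` or `WARN`.

```terraform
# Allow CloudWatch Logs to invoke the (hypothetical) forwarder function
resource "aws_lambda_permission" "allow_cloudwatch_logs" {
  statement_id  = "AllowCloudWatchLogsInvoke"
  action        = "lambda:InvokeFunction"
  function_name = aws_lambda_function.log_forwarder.function_name
  principal     = "logs.amazonaws.com"
  source_arn    = "${aws_cloudwatch_log_group.example.arn}:*"
}

resource "aws_cloudwatch_log_subscription_filter" "to_forwarder" {
  name            = "lambda-log-forwarder"
  log_group_name  = aws_cloudwatch_log_group.example.name
  filter_pattern  = "?ERROR ?WARN" # events containing ERROR or WARN
  destination_arn = aws_lambda_function.log_forwarder.arn
  # No role_arn: Lambda destinations rely on the permission above

  depends_on = [aws_lambda_permission.allow_cloudwatch_logs]
}
```

Filtering at the subscription reduces both forwarder invocations and the volume billed by the downstream service.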

Advanced Topics and Best Practices

This section delves into advanced strategies for managing and optimizing Lambda function deployments using Terraform. It explores versioning and aliases, performance optimization techniques, and the implementation of CI/CD pipelines. These practices are crucial for building robust, scalable, and maintainable serverless applications.

Handling Lambda Function Versioning and Aliases

Versioning and aliases are fundamental to managing Lambda function updates and deployments safely. They provide a mechanism to roll back to previous function versions and to deploy different function states to different environments.

- Versioning: A version is an immutable snapshot of a Lambda function’s code and configuration, which preserves past function states. Versions are created only when they are published; the unpublished `$LATEST` version always reflects the most recent code. With Terraform, setting `publish = true` on `aws_lambda_function` publishes a new version when Terraform updates the function, for example after `source_code_hash` changes. Each new version number is incremented.

- Aliases: Aliases act as pointers to specific function versions. They enable the use of a stable endpoint for a function, regardless of the underlying version. This is particularly useful for deployments to different environments like development, staging, and production. For example, the ‘dev’ alias could point to version 1, while the ‘prod’ alias points to version 3. This allows developers to test new versions without impacting production traffic.

- Terraform Configuration for Versioning and Aliases: Terraform can be used to manage both versions and aliases. With `publish = true`, the `aws_lambda_function` resource publishes a new version when the `filename` or `source_code_hash` attributes are updated. Its `version` attribute then exposes the latest published version number. The `aws_lambda_alias` resource is used to create and manage aliases, pointing them to specific function versions.

- Example: Consider a scenario where a Lambda function processes image uploads. After a new version is deployed, it may be necessary to roll back to the previous version due to an error. Versioning allows the easy reversion of the function to its previous state, avoiding disruption. The following code snippet illustrates how to create a Lambda function and an alias in Terraform:

    resource "aws_lambda_function" "example" {
      function_name    = "my-image-processor"
      handler          = "index.handler"
      runtime          = "nodejs18.x"
      filename         = "lambda_function_payload.zip"
      source_code_hash = filebase64sha256("lambda_function_payload.zip")
      role             = aws_iam_role.iam_for_lambda.arn
      publish          = true # publish a new version when the code changes
    }
    resource "aws_lambda_alias" "prod" {
      name             = "prod"
      function_name    = aws_lambda_function.example.function_name
      function_version = aws_lambda_function.example.version # latest published version
    }

In this example, `aws_lambda_function` creates the function and publishes a numbered version.

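The `aws_lambda_alias` resource creates an alias named ‘prod’ that points to a published version. To roll back, set `function_version` to an earlier version number (for example, `"2"`) and apply again. Callers that invoke the alias switch to that version without any change on their side.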
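Aliases can also split traffic between two versions, which supports gradual rollouts. The sketch below uses the alias `routing_config` block. It is intended to replace the `prod` alias above rather than sit beside it, since both would manage the same alias. It assumes the function is created with `publish = true`, and `var.stable_version` is a hypothetical variable holding the version that currently serves production.

```terraform
# Hypothetical variable: the version currently serving production traffic
variable "stable_version" {
  type        = string
  description = "Published version number that receives most prod traffic."
}

resource "aws_lambda_alias" "prod" {
  name             = "prod"
  function_name    = aws_lambda_function.example.function_name
  function_version = var.stable_version

  # Send 10% of invocations to the newest published version
  routing_config {
    additional_version_weights = {
      (aws_lambda_function.example.version) = 0.1
    }
  }
}
```

To complete the rollout, point `stable_version` at the new version and remove the `routing_config` block. An alias cannot list the same version both as its main target and as an additional weighted version.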

Sharing Best Practices for Optimizing Lambda Function Performance

Optimizing Lambda function performance is essential for minimizing costs, improving response times, and enhancing the overall user experience. Several strategies can be employed to achieve this goal.

- Code Optimization: Optimize the function’s code for performance. This includes minimizing dependencies, reducing code size, and using efficient algorithms. Profiling tools can help identify bottlenecks in the code. Using libraries specifically designed for serverless environments can also significantly improve performance.

- Memory and CPU Allocation: Configure the appropriate memory allocation for the function. Lambda has no separate CPU setting; CPU power is allocated in proportion to the configured memory, so raising the memory size also increases available CPU. The right amount of memory depends on the function’s workload. Monitor the function’s performance metrics to determine the optimal memory allocation.

- Execution Environment: Choose the right runtime environment for the function. The performance can vary between runtimes. Consider factors such as startup time and the availability of optimized libraries.

- Caching: Implement caching strategies to reduce the load on the function and backend services. Caching can be applied to data and responses to minimize latency.

- Concurrency Limits: Use reserved concurrency to cap the number of function instances that run simultaneously. This protects downstream resources from being overwhelmed and also guarantees that capacity to the function.

- Cold Start Mitigation: Cold starts can significantly impact the performance of Lambda functions. Strategies to mitigate cold starts include:

- Provisioned Concurrency: AWS Lambda provisioned concurrency provides pre-warmed execution environments for functions, minimizing cold starts.

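- Keep-alive Pings: Regularly invoke the function to keep it warm, for example with a scheduled CloudWatch Events (EventBridge) rule or another scheduling service. A single scheduled ping usually keeps only one execution environment warm, so this helps mostly with low, steady traffic.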

- Reduce Package Size: Smaller package sizes lead to faster deployment and cold start times.

- Monitoring and Tuning: Continuously monitor the function’s performance metrics, such as execution time, memory usage, and error rates. Use these metrics to identify areas for improvement and fine-tune the function’s configuration.
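Several of these settings map directly to Terraform arguments. The sketch below assumes an existing `aws_iam_role.lambda_role` and `lambda_function.zip`. The memory, timeout, and concurrency numbers are placeholders to tune from your own metrics, not recommendations.

```terraform
resource "aws_lambda_function" "tuned" {
  function_name                  = "my-tuned-function"
  filename                       = "lambda_function.zip"
  source_code_hash               = filebase64sha256("lambda_function.zip")
  handler                        = "index.handler"
  runtime                        = "nodejs18.x"
  role                           = aws_iam_role.lambda_role.arn
  memory_size                    = 1024 # more memory also means proportionally more CPU
  timeout                        = 10
  reserved_concurrent_executions = 50   # cap on simultaneous executions
  publish                        = true # provisioned concurrency needs a version or alias
}

resource "aws_lambda_alias" "live" {
  name             = "live"
  function_name    = aws_lambda_function.tuned.function_name
  function_version = aws_lambda_function.tuned.version
}

# Keep 5 execution environments initialized for the alias to avoid cold starts
resource "aws_lambda_provisioned_concurrency_config" "live" {
  function_name                     = aws_lambda_function.tuned.function_name
  qualifier                         = aws_lambda_alias.live.name
  provisioned_concurrent_executions = 5
}
```

Provisioned concurrency is billed while it is configured. Reserving concurrency fails if it would leave the account’s unreserved pool below the required minimum, so check the account’s concurrency quota first.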

Detailing How to Implement CI/CD Pipelines for Lambda Function Deployments Using Terraform

Implementing a CI/CD pipeline for Lambda function deployments automates the build, testing, and deployment process, leading to faster release cycles and improved code quality. Terraform integrates well with various CI/CD tools.

- Choosing a CI/CD Tool: Select a CI/CD tool such as Jenkins, GitLab CI, AWS CodePipeline, or GitHub Actions. The choice depends on factors such as the existing infrastructure, team expertise, and cost considerations.

- Defining the Pipeline Stages: The CI/CD pipeline typically consists of the following stages:

- Code Commit: Developers commit code changes to a version control system (e.g., Git).

- Build: The build stage packages the function code, creates a deployment package (e.g., a ZIP file), and performs any necessary build steps.

- Testing: The testing stage runs unit tests and integration tests to ensure the code functions correctly.

- Terraform Plan: The Terraform plan stage generates an execution plan, showing the changes Terraform will make to the infrastructure.

- Terraform Apply: The Terraform apply stage applies the changes to the infrastructure, deploying the Lambda function and any associated resources.

- Verification/Validation: After deployment, verification steps validate that the function is working as expected. This might involve invoking the function and checking the response.

- Terraform Configuration for CI/CD: The Terraform configuration should be structured to support the CI/CD process. This includes:

- Separate Terraform Environments: Maintain separate Terraform configurations for different environments (e.g., development, staging, production).

- Use of Variables: Use variables to configure the environment-specific settings, such as the function name, memory allocation, and environment variables.

- State Management: Store the Terraform state file in a secure, shared location, such as an AWS S3 bucket with encryption and state locking enabled.

- CI/CD Tool Configuration: Configure the CI/CD tool to:

- Trigger the Pipeline: Trigger the pipeline automatically when code changes are pushed to the repository.

- Authenticate with AWS: Configure the CI/CD tool to authenticate with AWS using an IAM role with the necessary permissions to deploy Lambda functions and manage related resources.

- Run Terraform Commands: Execute the Terraform commands (e.g., `terraform init`, `terraform plan`, `terraform apply`) in the correct order.

- Example with GitHub Actions: The following YAML file illustrates a basic CI/CD pipeline using GitHub Actions:

    name: Deploy Lambda Function
    on:
      push:
        branches:
          - main
    jobs:
      deploy:
        runs-on: ubuntu-latest
        steps:
          - uses: actions/checkout@v4
          - name: Configure AWS credentials
            uses: aws-actions/configure-aws-credentials@v4
            with:
              aws-access-key-id: ${{ secrets.AWS_ACCESS_KEY_ID }}
              aws-secret-access-key: ${{ secrets.AWS_SECRET_ACCESS_KEY }}
              aws-region: us-east-1
          - name: Setup Terraform
            uses: hashicorp/setup-terraform@v3
          - name: Terraform Init
            run: terraform init
          - name: Terraform Plan
            run: terraform plan -out=tfplan
          - name: Terraform Apply
            run: terraform apply tfplan

This example checks out the code, configures AWS credentials, sets up Terraform, initializes Terraform, creates a plan, and applies the changes.
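The Terraform configuration practices listed above can be sketched as follows, assuming Terraform 1.5 or later (for the `check` block) and version 5 of the AWS provider. The S3 backend is left partially configured so that each environment can point at its own state file at `terraform init` time, and environment-specific settings are ordinary input variables that the `aws_lambda_function` resource (not shown) would consume, e.g. `memory_size = var.memory_size`. The `my-function-` naming scheme, the test payload, and the assumption that the function returns a JSON object with a `statusCode` field are all hypothetical. Note that the smoke-test data source invokes the function on every plan and apply.

```hcl
terraform {
  required_version = ">= 1.5"

  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = "~> 5.0"
    }
  }

  # Partial S3 backend: bucket, key, and region are supplied per
  # environment at init time via -backend-config.
  backend "s3" {
    encrypt = true
  }
}

provider "aws" {
  region = var.aws_region
}

variable "aws_region" {
  type    = string
  default = "us-east-1"
}

variable "environment" {
  description = "Target environment"
  type        = string

  validation {
    condition     = contains(["dev", "staging", "prod"], var.environment)
    error_message = "Environment must be one of dev, staging, or prod."
  }
}

variable "memory_size" {
  description = "Lambda memory allocation in MB"
  type        = number
  default     = 128
}

variable "function_env" {
  description = "Environment variables passed to the Lambda function"
  type        = map(string)
  default     = {}
}

locals {
  # Hypothetical naming scheme: one function per environment.
  function_name = "my-function-${var.environment}"
}

# Post-deployment smoke test. A failing assertion is reported as a
# warning; it does not make terraform apply exit with an error.
check "lambda_smoke_test" {
  data "aws_lambda_invocation" "smoke" {
    function_name = local.function_name
    input         = jsonencode({ source = "terraform-check" })
  }

  assert {
    condition     = try(jsondecode(data.aws_lambda_invocation.smoke.result).statusCode, null) == 200
    error_message = "Smoke-test invocation of ${local.function_name} did not return statusCode 200."
  }
}
```

Each environment then gets its own backend file and variable file, for example `terraform init -backend-config=backends/staging.hcl` followed by `terraform plan -var-file=envs/staging.tfvars -out=tfplan`, where the hypothetical `backends/staging.hcl` sets `bucket`, `key`, and `region`. Because `check` failures are only warnings, a pipeline that must stop on a failed smoke test needs a separate step, such as one that runs `aws lambda invoke` and inspects the response.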

Epilogue

In conclusion, employing Terraform for Lambda function deployments offers a robust and scalable solution for managing serverless architectures. This methodology provides a structured approach to defining, deploying, and maintaining Lambda functions, encompassing crucial elements like infrastructure as code, dependency management, and event triggering. By adopting these practices, developers can streamline their workflows, reduce operational overhead, and focus on developing innovative solutions.

The integration of Terraform with Lambda functions empowers teams to build and deploy serverless applications with greater efficiency and confidence.

Answers to Common Questions

What is the difference between Terraform and AWS CloudFormation?

Terraform is a cloud-agnostic infrastructure-as-code tool that supports multiple cloud providers and on-premises infrastructure. CloudFormation is an AWS service designed specifically for managing AWS resources. While both tools achieve similar goals, Terraform's broader compatibility can be advantageous in multi-cloud environments.

How do I handle sensitive information, such as API keys, in my Terraform configuration?

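Sensitive information should never be hardcoded in your Terraform configuration files. Instead, pass it in through environment variables (for example, `TF_VAR_api_key` for an input variable named `api_key`), declare the corresponding input variables with `sensitive = true`, or retrieve it from an external secret management service such as AWS Secrets Manager or HashiCorp Vault. Keep in mind that `sensitive = true` only redacts values from Terraform's output: Terraform still writes them to the state file in plain text, so the state itself must be stored securely.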
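A minimal sketch of both approaches, assuming the AWS provider and a secret that already exists in AWS Secrets Manager under the hypothetical name `my-app/api-key`. Rather than copying the secret's value into the function's configuration, the second approach passes only the secret's ARN and grants read access, so the function fetches the value at runtime. The variable, policy, and environment-variable names are illustrative, and the policy still has to be attached to the function's execution role (for example with `aws_iam_role_policy_attachment`).

```hcl
# Option 1: a sensitive input variable. Supply it from the CI/CD system,
# e.g. as the TF_VAR_api_key environment variable, never in a .tf file.
# Terraform redacts it in plan/apply output, but it is still stored in state.
variable "api_key" {
  description = "Third-party API key (hypothetical)"
  type        = string
  sensitive   = true
}

# Option 2: reference a secret managed in AWS Secrets Manager.
data "aws_secretsmanager_secret" "api_key" {
  name = "my-app/api-key" # hypothetical secret name
}

# Policy allowing the function's execution role to read only this secret.
data "aws_iam_policy_document" "read_api_key" {
  statement {
    actions   = ["secretsmanager:GetSecretValue"]
    resources = [data.aws_secretsmanager_secret.api_key.arn]
  }
}

resource "aws_iam_policy" "read_api_key" {
  name   = "read-my-app-api-key" # hypothetical policy name
  policy = data.aws_iam_policy_document.read_api_key.json
}

# Non-secret setting to merge into the function's environment block:
# the function receives the ARN and reads the value at runtime.
locals {
  api_key_env = {
    API_KEY_SECRET_ARN = data.aws_secretsmanager_secret.api_key.arn
  }
}
```

Passing the ARN instead of the value keeps the secret out of the Lambda configuration, where environment variables are visible to anyone who can read the function's settings.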

Can I use Terraform to manage Lambda function versions and aliases?

Yes. Setting `publish = true` on an `aws_lambda_function` resource publishes a new numbered version whenever the function's code or configuration changes, and an `aws_lambda_alias` resource gives callers a stable name (such as `live`) that points to a specific version. Repointing the alias to an earlier version provides a quick rollback without redeploying code.
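A minimal sketch of versions and an alias, assuming a deployment package already built at the hypothetical path `build/function.zip` and an existing execution role whose ARN is passed in through the hypothetical `lambda_role_arn` variable. The function name, handler, and runtime are illustrative. By default the alias follows the newest published version; setting the hypothetical `live_version` variable pins it to an earlier one.

```hcl
variable "lambda_role_arn" {
  description = "ARN of an existing Lambda execution role (hypothetical)"
  type        = string
}

variable "live_version" {
  description = "Pin the live alias to this version for a rollback; null tracks the latest"
  type        = string
  default     = null
}

resource "aws_lambda_function" "example" {
  function_name    = "example-function" # hypothetical name
  role             = var.lambda_role_arn
  handler          = "app.handler"
  runtime          = "python3.12"
  filename         = "${path.module}/build/function.zip"
  source_code_hash = filebase64sha256("${path.module}/build/function.zip")

  # Publish an immutable, numbered version whenever code or config changes.
  publish = true
}

# Stable name that callers invoke (the function ARN followed by ":live").
resource "aws_lambda_alias" "live" {
  name             = "live"
  function_name    = aws_lambda_function.example.function_name
  function_version = coalesce(var.live_version, aws_lambda_function.example.version)
}
```

A rollback is then `terraform apply -var='live_version=3'`, which repoints the alias without touching the code. For gradual rollouts, `aws_lambda_alias` also accepts a `routing_config` block that splits traffic between two versions by weight.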

How do I update a Lambda function deployed with Terraform?

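To update a Lambda function, change its code or its settings in your Terraform configuration, then run `terraform plan` to preview the changes and `terraform apply` to apply them. Terraform compares the configuration with its recorded state and updates only the resources whose attributes differ. Code changes are detected only if the function resource tracks a hash of the deployment package, typically through the `source_code_hash` argument; otherwise, a rebuilt package with the same filename can go unnoticed.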
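Whether code changes are picked up depends on Terraform comparing attribute values rather than file contents, so the function should reference a hash of its deployment package. A minimal sketch using the `archive_file` data source from the `hashicorp/archive` provider, with a hypothetical `src/` directory, handler, and execution-role variable: any edit under `src/` changes `output_base64sha256`, so the next `terraform plan` shows an in-place update of the function's code.

```hcl
terraform {
  required_providers {
    archive = {
      source = "hashicorp/archive"
    }
    aws = {
      source = "hashicorp/aws"
    }
  }
}

variable "lambda_role_arn" {
  description = "ARN of an existing Lambda execution role (hypothetical)"
  type        = string
}

# Re-zip the source directory on every plan.
data "archive_file" "lambda_zip" {
  type        = "zip"
  source_dir  = "${path.module}/src"
  output_path = "${path.module}/build/function.zip"
}

resource "aws_lambda_function" "example" {
  function_name = "example-function" # hypothetical name
  role          = var.lambda_role_arn
  handler       = "app.handler"
  runtime       = "python3.12"
  filename      = data.archive_file.lambda_zip.output_path

  # Changes whenever any file under src/ changes; without it, a code-only
  # edit leaves every tracked attribute unchanged and no update is planned.
  source_code_hash = data.archive_file.lambda_zip.output_base64sha256
}
```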