The advent of serverless computing has revolutionized software development, promising increased agility and reduced operational overhead. However, this paradigm shift necessitates a corresponding evolution in testing methodologies. Testing serverless applications locally is paramount to ensuring functionality, performance, and security before deployment. This guide delves into the intricacies of this crucial practice, providing a structured approach to effectively test serverless applications in a local development environment.

This document will systematically dissect the various facets of local serverless application testing, beginning with environment setup and progressing through unit, integration, and performance testing. Furthermore, it will explore debugging techniques, monitoring strategies, and security considerations. The aim is to equip developers with the knowledge and tools necessary to confidently validate serverless applications locally, thereby minimizing the risk of production issues and maximizing the benefits of serverless architectures.

Setting Up the Local Development Environment

Local testing is crucial for efficient serverless application development, allowing developers to rapidly iterate, debug, and validate code changes without deploying to a cloud environment. This approach accelerates the development cycle, reduces costs associated with cloud resources during testing, and improves the overall developer experience. The following sections detail the setup of a local development environment for serverless applications.

Installing Dependencies and Tools

Setting up a local environment requires specific tools and dependencies. These components simulate the cloud environment locally and let you execute serverless functions on your own machine. To begin, install the necessary dependencies:

- Programming Language Runtime: Choose the appropriate runtime environment based on your serverless functions’ language (e.g., Node.js, Python, Java, Go). Install the necessary version using a version manager like Node Version Manager (nvm) for Node.js, pyenv for Python, or sdkman for Java/Groovy/Kotlin.

- Package Manager: Use a package manager specific to your chosen runtime to manage project dependencies. Examples include npm or yarn for Node.js, pip for Python, and Maven or Gradle for Java.

- Serverless Framework or SAM CLI: Install either the Serverless Framework or the AWS SAM CLI (Serverless Application Model Command Line Interface), depending on your preference and the cloud provider you’re targeting. Both tools provide functionalities for local testing, deployment, and management of serverless applications.

- Local Development Tools: Consider installing tools like a code editor (e.g., Visual Studio Code, Sublime Text, IntelliJ IDEA), a debugger, and any relevant IDE plugins that support serverless development and your chosen programming language.

- Docker (optional for the Serverless Framework, required for SAM CLI local testing): Docker helps emulate cloud environments and keeps runtimes consistent across development, staging, and production. SAM CLI's local commands (`sam local invoke`, `sam local start-api`) run functions inside Docker containers. Docker can also be helpful with the Serverless Framework for certain plugin configurations.

For instance, using `npm` to install the Serverless Framework:

```bash
npm install -g serverless
```

Or, installing SAM CLI using `pip`:

```bash
pip install aws-sam-cli
```

Configuring a Local Testing Framework

The choice of framework influences the configuration steps. Both SAM CLI and the Serverless Framework offer mechanisms for local testing, involving specific configurations to simulate cloud environments.

SAM CLI Setup:

- Installation: Ensure SAM CLI is installed as described above.

- AWS Credentials: Configure AWS credentials using the AWS CLI. Use `aws configure` to set up your access key ID, secret access key, region, and output format. SAM CLI makes these credentials available to the locally running function, which matters whenever the function calls real AWS services. Note that SAM CLI emulates Lambda and API Gateway, not downstream services such as S3 or DynamoDB.

- Project Initialization: Create a new serverless project or navigate to an existing one. SAM CLI typically operates on a project directory containing your application’s code and a `template.yaml` file.

- Template Configuration: The `template.yaml` file defines your serverless application’s resources. This file describes the functions, APIs, event sources, and other components. Configure it to reflect the resources your application requires. For example, specify the function’s handler, runtime, and any environment variables.

- Local Invocation: Use the `sam local invoke` command to test your functions locally. It runs a function once inside a Docker container and passes it an event, which you can supply as a JSON file with the `--event` option.

- Local API Gateway: Utilize the `sam local start-api` command to simulate an API Gateway, allowing you to test API endpoints locally.
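As a minimal sketch of what these commands exercise, the Python handler below reads a query-string parameter from an API Gateway proxy event, the kind of event `sam local start-api` passes to a function. The handler name `lambda_handler`, the `name` parameter, and the response text are illustrative assumptions, and `sample_event` is trimmed to the fields the handler reads (a real event also carries headers, `requestContext`, and more). Calling the handler directly with the same dictionary is a quick check before running it under SAM CLI.

```python
import json


def lambda_handler(event, context):
    """Hypothetical handler that greets the caller named in the query string."""
    # API Gateway proxy events set queryStringParameters to None when the
    # request has no query string, so fall back to an empty dict.
    params = event.get("queryStringParameters") or {}
    name = params.get("name", "world")
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"hello, {name}"}),
    }


# Trimmed API Gateway (REST, proxy integration) style event.
sample_event = {
    "httpMethod": "GET",
    "path": "/hello",
    "queryStringParameters": {"name": "sam"},
    "body": None,
}

if __name__ == "__main__":
    # Direct call: no Docker or SAM CLI involved.
    print(lambda_handler(sample_event, None))
```

Saved as a JSON file, `sample_event` can also be passed to `sam local invoke <FunctionLogicalId> --event event.json`, where `<FunctionLogicalId>` is the function's logical ID from `template.yaml`.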

Serverless Framework Setup:

- Installation: Install the Serverless Framework globally using `npm install -g serverless`.

- Project Initialization: Create a new serverless project or navigate to an existing one. The Serverless Framework relies on a `serverless.yml` file.

- Provider Configuration: Within `serverless.yml`, configure the cloud provider (e.g., AWS), runtime, and region. Credentials are normally picked up from your AWS CLI profile or environment variables rather than written into the file.

- Function Definitions: Define your serverless functions in `serverless.yml`, specifying the handler, runtime, and event triggers.

- Local Testing Plugins: Install and configure plugins for local testing, such as `serverless-offline`, which emulates AWS Lambda and API Gateway locally.

- Local Invocation: Use the `serverless invoke local` command to test your functions locally.

- Local API Gateway: Run `serverless offline start` to start a local API Gateway that mimics the behavior of AWS API Gateway.
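Once `serverless offline` or `sam local start-api` is running, a short script can serve as a smoke test against the local endpoint. The sketch below uses only the Python standard library. Both tools commonly listen on port 3000 by default, but treat the base URL, the `/hello` path, and the JSON response shape as assumptions to adjust; depending on the plugin version and its settings, serverless-offline may also include the stage name in the path (for example `/dev/hello`).

```python
import json
import urllib.request


def smoke_test(base_url: str, path: str = "/hello", timeout: float = 5.0) -> dict:
    """GET base_url + path and return the decoded JSON body.

    urlopen raises urllib.error.HTTPError for 4xx/5xx responses; the assert
    additionally rejects any other non-200 success code.
    """
    with urllib.request.urlopen(base_url + path, timeout=timeout) as resp:
        assert resp.status == 200, f"unexpected status {resp.status}"
        return json.loads(resp.read().decode("utf-8"))
```

With the emulator running, a call such as `smoke_test("http://localhost:3000")` either returns the parsed body or fails loudly, which makes it easy to wire into a pre-commit hook or CI step.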

Comparing Local Testing Tools

The selection of a local testing tool depends on project requirements and personal preference. Each tool offers different strengths and weaknesses, as outlined in the table below.

| Feature | SAM CLI | Serverless Framework |
|---|---|---|
| Ease of Use | Generally easier to set up for AWS-centric applications. It has strong integration with AWS services. | More flexible and supports multiple cloud providers (AWS, Azure, Google Cloud, etc.). However, initial setup can be more complex. |
| Cloud Provider Support | Primarily focused on AWS. Deep integration with AWS services. | Supports multiple cloud providers. Good for cross-platform development. |
| Community and Ecosystem | Strong community support within the AWS ecosystem. | Large community with a vast ecosystem of plugins for extended functionalities. |
| Debugging and Monitoring | Provides debugging capabilities through integration with IDEs and local invocation commands. | Offers debugging through IDE plugins and integration with various monitoring tools via plugins. |

Simulating Cloud Services Locally

Simulating cloud services locally is crucial for effective serverless application development and testing. This approach allows developers to iterate quickly, identify and resolve issues early in the development lifecycle, and reduce the reliance on expensive cloud resources during the testing phase. By replicating the behavior of cloud services like S3, DynamoDB, and API Gateway within a local environment, developers can create a more realistic and efficient testing process.

Emulating AWS Services

Emulating AWS services locally provides a controlled environment for testing serverless applications without incurring costs or relying on an active internet connection. This process involves setting up local instances of the AWS services that the application interacts with. Several tools and techniques are available for this purpose, each with its strengths and weaknesses.

Tools for Simulating Cloud Services

LocalStack and Moto are two prominent tools used to simulate AWS services locally. Each offers a distinct approach to mirroring cloud service functionality, catering to different development needs.

- LocalStack: LocalStack emulates a broad set of AWS services on a local machine, including S3, DynamoDB, API Gateway, Lambda, and more. This allows developers to test the interactions between different services in a simulated cloud environment. LocalStack runs inside Docker and by default exposes the emulated services through a single edge endpoint on port 4566; its state is kept in memory unless persistence is configured, which gives a clean, reproducible setup on every restart.

For example, a developer can create an S3 bucket within LocalStack, upload files, and then test a Lambda function that triggers on object creation within that bucket.

- Moto: Moto is a Python library for mocking AWS services. Rather than running a full local cloud environment like LocalStack, it intercepts AWS API calls made through boto3 inside Python tests and serves them from an in-memory fake backend. This is particularly useful for unit testing individual components that interact with AWS services: a test can create resources, seed data, and exercise scenarios such as successful operations, errors, or specific data states.

For instance, a developer can use Moto to mock a DynamoDB table and test a function that retrieves data from it without connecting to an actual DynamoDB instance.
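Both tools are easier to adopt when the code under test does not hard-code where AWS lives. One common pattern, sketched below as an assumption rather than a prescribed API, is to build the keyword arguments for a boto3 client from environment variables. When the hypothetical `LOCALSTACK_ENDPOINT` variable is set, calls go to that endpoint (LocalStack listens on `http://localhost:4566` by default) with placeholder credentials; otherwise the normal AWS credential chain and endpoints apply. Moto, by contrast, needs no endpoint change because it intercepts calls inside the test process.

```python
import os
from typing import Dict, Mapping, Optional


def client_kwargs(env: Optional[Mapping[str, str]] = None) -> Dict[str, str]:
    """Return keyword arguments for boto3.client(...).

    LOCALSTACK_ENDPOINT is a hypothetical variable name used for this sketch.
    """
    env = os.environ if env is None else env
    kwargs = {"region_name": env.get("AWS_REGION", "us-east-1")}
    endpoint = env.get("LOCALSTACK_ENDPOINT")
    if endpoint:
        # Route calls to the local emulator; the dummy credentials are
        # placeholders, not real keys.
        kwargs.update(
            endpoint_url=endpoint,
            aws_access_key_id="test",
            aws_secret_access_key="test",
        )
    return kwargs
```

A caller would then write `boto3.client("s3", **client_kwargs())`, so the same function works against LocalStack during development and against AWS when the variable is unset.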

Common Issues and Solutions

Simulating cloud services locally is not without its challenges. Developers often encounter specific issues that can hinder the testing process. Addressing these challenges requires a combination of careful configuration, understanding of the limitations of the chosen tools, and a proactive approach to debugging.

- Service Compatibility: Not all AWS services are fully supported by local simulation tools. LocalStack, for example, offers broad support, but some newer or more specialized services might not be available or may have limited functionality.

- Solution: Verify the supported services and their features within the chosen tool's documentation. If a required service is not fully supported, consider using mocks (like Moto) or integrating tests that use real cloud services for those specific components. Prioritize testing the core functionalities that are critical for the application's behavior.


- Configuration Complexity: Setting up and configuring local environments can be complex, particularly when dealing with multiple services and dependencies. Incorrect configurations can lead to unexpected behavior and testing failures.

- Solution: Use infrastructure-as-code tools, such as Terraform or AWS CloudFormation, to define and manage the local environment. This ensures consistency, reproducibility, and ease of setup. Carefully review the configuration settings and documentation for each service being simulated. Employ environment variables to manage configuration details and avoid hardcoding sensitive information.


- Data Persistence: Data persistence in local simulation environments can be an issue. In-memory solutions, like those used by some simulation tools, lose data when the environment is restarted.

- Solution: Choose tools that offer data persistence options. For example, LocalStack allows you to persist data using volumes. Consider using dedicated databases or file storage within the local environment to ensure data is retained across test runs. Implement strategies to seed the local environment with test data to facilitate consistent testing.


- Network Connectivity: Issues can arise with network connectivity, especially when applications need to interact with services outside the local environment. Firewall rules, proxy settings, and incorrect DNS configurations can cause connectivity problems.

- Solution: Verify network settings and ensure that the local environment can access the required external resources. Check firewall rules to confirm that traffic to and from the local environment is allowed. If a proxy is used, ensure it is configured correctly for both the application and the simulation tools.


- Version Compatibility: Compatibility issues can occur between the application code, the AWS SDK, and the local simulation tools. Using different versions can lead to unexpected behavior or errors.

- Solution: Maintain consistent versions across all components. Specify the AWS SDK version in the project's dependencies. Ensure that the local simulation tool supports the AWS service versions used by the application. Regularly update the simulation tools to take advantage of the latest features and bug fixes, while also testing thoroughly after each update to identify any potential regressions.

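The network connectivity advice above can be automated as a pre-flight check that fails fast with a readable message when the emulator is not reachable, instead of surfacing later as a timeout deep inside a test. The sketch uses only the standard `socket` module; `localhost` and port `4566` (LocalStack's default edge port) are example values, and `require_emulator` is a hypothetical helper name.

```python
import socket


def port_is_open(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        # create_connection resolves the host and tries each address in turn.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and DNS resolution failures.
        return False


def require_emulator(host: str = "localhost", port: int = 4566) -> None:
    """Raise a descriptive error if the local emulator is not reachable."""
    if not port_is_open(host, port):
        raise RuntimeError(
            f"Cannot reach {host}:{port}; is the emulator running, and do "
            "firewall and proxy settings allow local traffic?"
        )
```

Calling `require_emulator()` once at the start of an integration test session (for example from a test fixture) separates "the environment is down" from "the code is broken".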

Writing Unit Tests for Serverless Functions

Unit testing is a critical practice in software development, and it is equally important for serverless applications. Serverless functions are typically designed as small, independent units of code, which makes them natural candidates for unit testing: each function's behavior can be verified in isolation. Effective unit tests improve the reliability and maintainability of a serverless application.

They enable developers to identify and fix bugs early in the development lifecycle, reducing the risk of production issues.

Best Practices for Writing Unit Tests for Individual Serverless Functions

Writing effective unit tests for serverless functions involves adhering to several best practices. These practices ensure that tests are reliable, maintainable, and provide meaningful coverage of the function’s behavior.

- Isolate the Function: Unit tests should focus on testing a single function in isolation. This means mocking or stubbing any external dependencies, such as database connections, API calls, or other serverless functions. The goal is to verify the function’s logic based on its inputs and expected outputs, independent of external factors.

- Mock Dependencies: Use mocking frameworks to simulate the behavior of external services. For example, if a function interacts with a database, mock the database client to return predefined data or simulate specific error conditions. This prevents tests from relying on the availability or state of external services.

- Test Different Scenarios: Create tests to cover a variety of scenarios, including positive and negative test cases. Positive tests verify that the function behaves as expected under normal conditions. Negative tests validate the function’s behavior when given invalid inputs, unexpected data, or when external services fail.

- Assert Correct Outputs: Assert that the function returns the correct output for a given input. This might involve verifying the returned value, the data written to a database, or the messages sent to an external service.

- Use a Test Framework: Utilize a testing framework appropriate for the programming language used by the serverless function. Popular frameworks include Jest for JavaScript/TypeScript, JUnit for Java, and pytest for Python. These frameworks provide tools for writing, running, and reporting on tests.

- Keep Tests Concise and Readable: Write tests that are easy to understand and maintain. Use descriptive test names and comments to explain the purpose of each test. Avoid overly complex test logic.

- Automate Test Execution: Integrate unit tests into the continuous integration/continuous delivery (CI/CD) pipeline. This ensures that tests are automatically executed whenever code changes are made, providing immediate feedback on the impact of those changes.
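As a sketch of the isolate-and-mock practices above, the handler below receives its DynamoDB table as a parameter, so a test can substitute a `unittest.mock.Mock` for it. The `get_user` handler, the `id` key, and the response shapes are hypothetical. The mock mirrors the behavior of boto3's `Table.get_item`, whose response contains an `Item` key only when a matching item exists. The example uses the standard library's `unittest`; pytest can collect and run these test classes as well.

```python
import json
import unittest
from unittest.mock import Mock


def get_user(event, table):
    """Hypothetical handler: look up a user by id in an injected table."""
    user_id = (event.get("pathParameters") or {}).get("id")
    if not user_id:
        return {"statusCode": 400, "body": json.dumps({"error": "missing id"})}
    response = table.get_item(Key={"id": user_id})
    item = response.get("Item")  # absent when no item matches the key
    if item is None:
        return {"statusCode": 404, "body": json.dumps({"error": "not found"})}
    return {"statusCode": 200, "body": json.dumps(item)}


class GetUserTest(unittest.TestCase):
    def test_found(self):
        table = Mock()
        table.get_item.return_value = {"Item": {"id": "42", "name": "Ada"}}
        result = get_user({"pathParameters": {"id": "42"}}, table)
        self.assertEqual(result["statusCode"], 200)
        self.assertEqual(json.loads(result["body"])["name"], "Ada")
        # Verify the handler queried the table with the expected key.
        table.get_item.assert_called_once_with(Key={"id": "42"})

    def test_not_found(self):
        table = Mock()
        table.get_item.return_value = {}
        result = get_user({"pathParameters": {"id": "7"}}, table)
        self.assertEqual(result["statusCode"], 404)

    def test_missing_id_skips_lookup(self):
        table = Mock()
        result = get_user({}, table)
        self.assertEqual(result["statusCode"], 400)
        table.get_item.assert_not_called()
```

In a deployed function, a thin `lambda_handler(event, context)` wrapper would create the real table with `boto3.resource("dynamodb").Table(...)` and pass it to `get_user`, keeping the AWS wiring out of the tested logic.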

Code Examples Demonstrating Unit Tests in Different Programming Languages Commonly Used in Serverless Development

The following code examples illustrate how to write unit tests for serverless functions in different programming languages, showcasing common testing scenarios and techniques.

JavaScript (with Jest)

Consider a simple JavaScript function that converts Celsius to Fahrenheit:

```javascript
// celsiusToFahrenheit.js
exports.handler = async (event) => {
  const celsius = parseFloat(event.celsius);
  if (isNaN(celsius)) {
    return {
      statusCode: 400,
      body: JSON.stringify({ error: 'Invalid input: Celsius must be a number.' }),
    };
  }
  const fahrenheit = (celsius * 9) / 5 + 32;
  return { statusCode: 200, body: JSON.stringify({ fahrenheit: fahrenheit }) };
};
```

Here's a unit test for this function using Jest:

```javascript
// celsiusToFahrenheit.test.js
// The module exports `handler` as a named property, so destructure it.
const { handler } = require('./celsiusToFahrenheit');

describe('celsiusToFahrenheit', () => {
  it('should convert 0 Celsius to 32 Fahrenheit', async () => {
    const event = { celsius: '0' };
    const result = await handler(event);
    expect(result.statusCode).toBe(200);
    const body = JSON.parse(result.body);
    expect(body.fahrenheit).toBe(32);
  });

  it('should convert 100 Celsius to 212 Fahrenheit', async () => {
    const event = { celsius: '100' };
    const result = await handler(event);
    expect(result.statusCode).toBe(200);
    const body = JSON.parse(result.body);
    expect(body.fahrenheit).toBe(212);
  });

  it('should return an error for invalid input', async () => {
    const event = { celsius: 'abc' };
    const result = await handler(event);
    expect(result.statusCode).toBe(400);
    const body = JSON.parse(result.body);
    expect(body.error).toBe('Invalid input: Celsius must be a number.');
  });
});
```

Python (with pytest)

Consider a Python function that calculates the area of a rectangle:

```python
# area_calculator.py
import json


def handler(event, context):
    try:
        width = float(event.get('width', 0))
        height = float(event.get('height', 0))
        if width <= 0 or height <= 0:
            return {'statusCode': 400,
                    'body': json.dumps({'error': 'Width and height must be positive numbers.'})}
        area = width * height
        return {'statusCode': 200, 'body': json.dumps({'area': area})}
    except ValueError:
        # float() raises ValueError for non-numeric strings such as 'abc'.
        return {'statusCode': 400,
                'body': json.dumps({'error': 'Invalid input: Width and height must be numbers.'})}
```

Here's a unit test for this function using pytest:

```python
# test_area_calculator.py
import json

from area_calculator import handler


def test_area_calculator_valid_input():
    event = {'width': '10', 'height': '5'}
    result = handler(event, None)  # the context object is unused here
    assert result['statusCode'] == 200
    body = json.loads(result['body'])
    assert body['area'] == 50


def test_area_calculator_invalid_input():
    event = {'width': 'abc', 'height': '5'}
    result = handler(event, None)
    assert result['statusCode'] == 400
    body = json.loads(result['body'])
    assert body['error'] == 'Invalid input: Width and height must be numbers.'


def test_area_calculator_zero_input():
    event = {'width': '0', 'height': '5'}
    result = handler(event, None)
    assert result['statusCode'] == 400
    body = json.loads(result['body'])
    assert body['error'] == 'Width and height must be positive numbers.'
```

Java (with JUnit)

Consider a Java function that concatenates two strings:

```java
// StringConcatenator.java
import com.amazonaws.services.lambda.runtime.Context;
import com.amazonaws.services.lambda.runtime.RequestHandler;

public class StringConcatenator implements RequestHandler<String, String> {
    @Override
    public String handleRequest(String input, Context context) {
        String[] parts = input.split(",");
        if (parts.length != 2) {
            return "Error: Please provide two strings separated by a comma.";
        }
        return parts[0] + parts[1];
    }
}
```

Here's a unit test for this function using JUnit:

```java
// StringConcatenatorTest.java (same package as StringConcatenator, so no import is needed)
import static org.junit.jupiter.api.Assertions.assertEquals;

import org.junit.jupiter.api.Test;

public class StringConcatenatorTest {
    @Test
    public void testConcatenateStrings() {
        StringConcatenator stringConcatenator = new StringConcatenator();
        String input = "Hello,World!";
        // The Lambda Context is not used by the handler, so null is acceptable here.
        String result = stringConcatenator.handleRequest(input, null);
        assertEquals("HelloWorld!", result);
    }

    @Test
    public void testInvalidInput() {
        StringConcatenator stringConcatenator = new StringConcatenator();
        String input = "Hello";
        String result = stringConcatenator.handleRequest(input, null);
        assertEquals("Error: Please provide two strings separated by a comma.", result);
    }
}
```

Steps Involved in Writing Effective Unit Tests for Serverless Functions

Writing effective unit tests for serverless functions can be broken down into a series of well-defined steps. Following these steps ensures that tests are comprehensive and cover all critical aspects of the function's behavior.

- Identify the Function's Purpose and Scope: Begin by clearly understanding what the function is supposed to do. Define the expected inputs, outputs, and any external dependencies.

- Choose a Testing Framework: Select a suitable testing framework for the programming language used by the serverless function. This framework will provide the necessary tools for writing and running tests.

- Create a Test Suite: Create a test suite for the function. This will contain all the individual test cases. Organize tests logically based on different scenarios.

- Write Test Cases for Different Scenarios: Develop test cases to cover various scenarios, including:

  - Positive Tests: Verify the function's behavior with valid inputs.

  - Negative Tests: Test the function's response to invalid inputs or error conditions.

  - Boundary Tests: Test the function with inputs at the boundaries of acceptable values (e.g., minimum and maximum values).

  - Edge Case Tests: Test the function with unusual or unexpected inputs.

- Mock External Dependencies: If the function interacts with external services, mock those services to isolate the function. Use mocking frameworks to simulate the behavior of these dependencies.

- Define Assertions: For each test case, define assertions to verify the function's output. Assertions check that the actual output matches the expected output.

- Run and Analyze Tests: Execute the test suite and analyze the results. Identify and fix any failing tests.

- Refactor and Improve Tests: Continuously review and improve the tests. Refactor tests to make them more readable and maintainable. Add new tests as the function evolves.

- Integrate with CI/CD: Integrate the unit tests into the CI/CD pipeline to automate test execution and ensure that tests are run whenever code changes are made.
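The scenario, assertion, and run steps above often collapse into a single table-driven test in which each row is one scenario and its expected result. The sketch below ports the Celsius conversion from the JavaScript example to Python purely for illustration; the `celsius_to_fahrenheit` name and the absolute-zero lower bound are assumptions added to create a boundary to test, not part of the original function. `unittest`'s `subTest` reports each failing row separately.

```python
import json
import math
import unittest

ABSOLUTE_ZERO_C = -273.15  # assumed lower bound for this illustration


def celsius_to_fahrenheit(event):
    """Python port of the Celsius handler, with an added lower bound."""
    try:
        celsius = float(event.get("celsius"))
    except (TypeError, ValueError):  # TypeError covers a missing (None) field
        return {"statusCode": 400, "body": json.dumps({"error": "not a number"})}
    if math.isnan(celsius) or celsius < ABSOLUTE_ZERO_C:
        return {"statusCode": 400, "body": json.dumps({"error": "out of range"})}
    return {"statusCode": 200, "body": json.dumps({"fahrenheit": celsius * 9 / 5 + 32})}


class ConversionCases(unittest.TestCase):
    # (description, event, expected status, expected Fahrenheit or None)
    CASES = [
        ("positive: freezing point", {"celsius": "0"}, 200, 32.0),
        ("positive: boiling point", {"celsius": "100"}, 200, 212.0),
        ("boundary: absolute zero", {"celsius": "-273.15"}, 200, -459.67),
        ("boundary: just below absolute zero", {"celsius": "-273.16"}, 400, None),
        ("negative: non-numeric", {"celsius": "abc"}, 400, None),
        ("edge: missing field", {}, 400, None),
        ("edge: NaN string", {"celsius": "nan"}, 400, None),
    ]

    def test_cases(self):
        for description, event, status, fahrenheit in self.CASES:
            with self.subTest(description):
                result = celsius_to_fahrenheit(event)
                self.assertEqual(result["statusCode"], status)
                if fahrenheit is not None:
                    body = json.loads(result["body"])
                    self.assertAlmostEqual(body["fahrenheit"], fahrenheit)
```

Adding a scenario becomes a one-line change to `CASES`, which keeps the suite easy to extend as the function evolves.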

Testing Event Triggers and Integrations

Testing event triggers and integrations is crucial for ensuring the reliable operation of serverless applications. Serverless architectures heavily rely on event-driven interactions, where functions are triggered by events from various sources like object storage, databases, or message queues. Thorough testing of these triggers is essential to validate the application's behavior under different event scenarios, ensuring that functions are invoked correctly and respond as expected.

This section focuses on methodologies for effectively testing these event-driven components in a local development environment.

Simulating Event Sources and Triggering Functions

To test event triggers, a mechanism to simulate events from different sources is necessary. This typically involves creating mock implementations of the event sources or using tools that can inject simulated events into the local development environment. The goal is to mimic the behavior of the cloud services as closely as possible, allowing developers to test function responses without deploying to the cloud.

- S3 Event Simulation: Simulating S3 events involves mimicking object creation and deletion events (overwriting an object arrives as a creation event). Local S3 emulators such as LocalStack or MinIO provide an API compatible with AWS S3, allowing developers to upload, download, and manipulate objects locally. With LocalStack, a bucket notification can invoke a Lambda function deployed into the emulator, so uploading a mock object triggers the function configured for object creation. MinIO offers S3-compatible storage but does not invoke Lambda functions, so with MinIO you would typically call the handler yourself with an S3-style event.

The function's response can then be verified. For instance, if the function is designed to resize images uploaded to S3, the test would involve uploading a test image and verifying that the resized image is created in the expected location.

- DynamoDB Event Simulation: DynamoDB triggers, delivered through DynamoDB Streams and often used for processing data changes, can be simulated using local DynamoDB emulators. Similar to S3 emulators, these tools provide a local instance of DynamoDB in which developers can create tables and insert, update, and delete items, then observe the function's response. The testing process involves setting up a local table with a stream enabled, inserting test data, and confirming that the Lambda function configured to trigger on those stream records is executed and processes the data correctly. Emulators differ here: LocalStack can connect a stream to a Lambda function, whereas with a standalone emulator such as DynamoDB Local you may need to pass captured stream records to the handler yourself.

This might involve verifying that the function correctly updates related data in another database or triggers a notification.

- API Gateway Event Simulation: API Gateway triggers, which allow functions to be invoked via HTTP requests, can be tested by running a local API Gateway emulator (such as `sam local start-api` or `serverless offline`) and sending it HTTP requests with tools like Postman or curl. The emulator translates each request into an API Gateway-style event and routes it to the appropriate function. For example, if a function is triggered by a POST request to a specific endpoint, the test would involve sending a POST request to that endpoint with the expected payload and verifying the function's response.

- Other Event Source Simulation: Other event sources, such as SNS (Simple Notification Service) or SQS (Simple Queue Service), can be simulated using local emulators or mock implementations. The process involves sending simulated messages or notifications to these services and verifying that the function is triggered and processes the messages correctly. For example, to test an SNS trigger, a developer would publish a mock message to the local SNS emulator and then verify that the function, subscribed to the SNS topic, is invoked and processes the message as expected.
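When the emulator cannot wire an event source to your function, or when faster feedback is wanted, you can build the event payload yourself and call the handler directly. The sketch below constructs a trimmed S3 `ObjectCreated:Put` notification containing only the fields the handler reads; `sam local generate-event s3 put` can produce a fuller sample payload. The `make_s3_event` helper and the `handler` are hypothetical. Object keys arrive URL-encoded in S3 notifications (a space becomes `+`), which is why the handler decodes them with `unquote_plus`.

```python
import urllib.parse


def make_s3_event(bucket, key, size=1024, event_name="ObjectCreated:Put"):
    """Build a trimmed S3 notification event with a single record (test helper)."""
    return {
        "Records": [
            {
                "eventSource": "aws:s3",
                "eventName": event_name,
                "s3": {
                    "bucket": {"name": bucket},
                    # Mimic S3's URL encoding of keys, keeping "/" as-is.
                    "object": {"key": urllib.parse.quote_plus(key, safe="/"), "size": size},
                },
            }
        ]
    }


def handler(event, context):
    """Hypothetical handler: collect the (bucket, key) pairs it should process."""
    targets = []
    for record in event.get("Records", []):
        if not record.get("eventName", "").startswith("ObjectCreated"):
            continue  # ignore deletions and other event types
        bucket = record["s3"]["bucket"]["name"]
        key = urllib.parse.unquote_plus(record["s3"]["object"]["key"])
        targets.append((bucket, key))
    return {"processed": targets}
```

Negative scenarios come almost for free: passing `event_name="ObjectRemoved:Delete"` checks that the handler ignores events it should not act on.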

Designing Event Scenario Simulations

Designing effective event scenario simulations involves identifying the various event types, data formats, and error conditions that the function might encounter in a production environment. These scenarios should cover both positive and negative test cases to ensure the function's robustness.

- Positive Test Cases: These tests verify that the function behaves as expected under normal conditions. For example, when testing an S3 trigger, positive tests would involve uploading valid image files to the S3 bucket and verifying that the image processing function correctly resizes and stores the images.

- Negative Test Cases: These tests check how the function handles errors and unexpected input. For example, when testing an S3 trigger, negative tests would involve uploading corrupted image files or files with incorrect formats to the S3 bucket and verifying that the function handles these errors gracefully, perhaps by logging the error or sending a notification.

- Boundary Conditions: These tests focus on the edges of acceptable input. For example, if a function is designed to process files of a specific size, boundary condition tests would involve uploading files that are just below and just above the size limit to verify the function's behavior.

- Data Validation: Data validation is critical for preventing errors and ensuring data integrity. Test scenarios should include validating input data against expected formats, types, and ranges. For example, if a function expects a numeric value, tests should include non-numeric inputs and values outside the expected range.

- Rate Limiting and Concurrency: Serverless applications may face rate limits or concurrency constraints. Testing should include simulating high volumes of events to verify that the function handles these constraints gracefully, for instance, by implementing retries or queuing mechanisms.
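The rate-limiting scenario above is usually handled by retrying throttled calls with exponential backoff. The sketch below is a minimal, deterministic version: `ThrottledError` is a hypothetical stand-in for whatever throttling error your SDK or emulator raises, `retry_with_backoff` is an illustrative helper name, and `sleep` is injectable so a local test can record the delays instead of waiting for them.

```python
import time


class ThrottledError(Exception):
    """Hypothetical stand-in for a throttling error from a downstream service."""


def retry_with_backoff(fn, max_attempts=4, base_delay=0.1, sleep=time.sleep):
    """Call fn(), retrying on ThrottledError with exponential backoff.

    Delays grow as base_delay * 2**attempt (0.1 s, 0.2 s, 0.4 s, ...).
    """
    for attempt in range(max_attempts):
        try:
            return fn()
        except ThrottledError:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the error to the caller
            sleep(base_delay * 2 ** attempt)
```

A local test can simulate a throttled emulator with a function that fails a fixed number of times before succeeding. Production implementations commonly add random jitter to each delay so that many concurrent clients do not retry in lockstep; it is left out here to keep the behavior easy to assert.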

Illustrating Event Flow with a Diagram

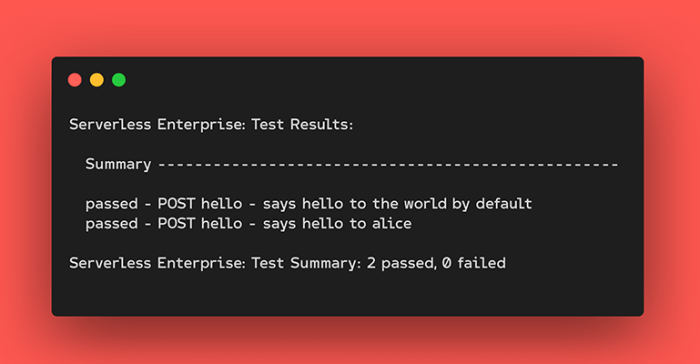

The following diagram illustrates the flow of events through a serverless application with an S3 event trigger, depicting the interaction between Amazon S3, an AWS Lambda function, and a hypothetical external system (e.g., a database or another service).

```
+--------------------+
|        User        |
+---------+----------+
          |
          | Upload Object
          |
+---------v----------+
|     Amazon S3      |
+---------+----------+
          |
          | Object Created Event
          |
+---------v----------+
|     AWS Lambda     |
|     (Function:     |
|   ImageProcessor)  |
+---------+----------+
          | 1. Event Trigger
          | 2. Retrieve Object Metadata
          | 3. Process Image (Resize, etc.)
          | 4. Store Processed Image
          | 5. Log Results/Errors
          |
+---------v----------+
|     Amazon S3      |
| (Processed Images) |
+---------+----------+
          |
          | (Optional) Trigger
          | (e.g., SNS Notification)
          |
+---------v----------+
|  External System   |
|  (e.g., Database)  |
+--------------------+
```

Description of the Diagram Steps:

- User Uploads Object: A user initiates an upload of an object (e.g., an image) to an Amazon S3 bucket.

- Object Created Event: Amazon S3 detects the object creation event. This event includes information about the uploaded object, such as its key, size, and storage location.

- Lambda Function Triggered: The S3 event triggers the AWS Lambda function (ImageProcessor). The function is configured to be triggered by the `ObjectCreated` event type in the S3 bucket.

- Event Processing:

  - The Lambda function receives the event data.

  - The function retrieves object metadata from the S3 bucket.

  - The function processes the image.

  - The function stores the processed image.

  - The function logs the results or any errors that occur during processing.

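- Processed Image Stored: The processed image is stored in S3, in a separate bucket or under a different key prefix. If it is written back to the bucket that triggers the function, restrict the trigger to the input prefix; otherwise each stored result fires a new event and the function can invoke itself recursively.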

- (Optional) Trigger: Optionally, another service or function may be triggered based on the result. For example, SNS may be used to send a notification.

- External System Interaction: The Lambda function may interact with external systems such as a database to store metadata or update records related to the image processing.
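The numbered steps inside the Lambda box can be sketched as a handler whose storage is passed in, which lets the flow run locally without S3. Everything here is hypothetical: `InMemoryStorage` stands in for the input and output buckets, `shrink` is a placeholder for real image resizing (which would normally use an imaging library), and the `processed/` prefix is an assumed naming convention. Missing objects are logged and collected instead of aborting the whole batch.

```python
import logging
import urllib.parse

logger = logging.getLogger("image_processor")


class InMemoryStorage:
    """Hypothetical stand-in for S3: maps (bucket, key) to bytes."""

    def __init__(self):
        self.objects = {}

    def get(self, bucket, key):
        return self.objects[(bucket, key)]  # KeyError if the object is missing

    def put(self, bucket, key, data):
        self.objects[(bucket, key)] = data


def shrink(data: bytes) -> bytes:
    """Placeholder for real resizing: keep every other byte."""
    return data[::2]


def process_event(event, storage, output_bucket):
    """Walk the diagram's steps for each record in an S3 event."""
    results = {"stored": [], "errors": []}
    for record in event.get("Records", []):  # 1. event received
        bucket = record["s3"]["bucket"]["name"]
        key = urllib.parse.unquote_plus(record["s3"]["object"]["key"])
        try:
            original = storage.get(bucket, key)  # 2. retrieve the object
            resized = shrink(original)  # 3. process it
            out_key = f"processed/{key}"
            storage.put(output_bucket, out_key, resized)  # 4. store the result
            logger.info("stored %s/%s", output_bucket, out_key)  # 5. log success
            results["stored"].append(out_key)
        except KeyError:
            logger.error("object not found: %s/%s", bucket, key)  # 5. log the error
            results["errors"].append(key)
    return results
```

Swapping `InMemoryStorage` for a thin wrapper around a boto3 S3 client (pointed at LocalStack or AWS) would exercise the same logic against real storage.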

Integration Testing Strategies

Integration testing in serverless applications is crucial for validating the interactions between different serverless functions and the services they utilize. It ensures that components work together as expected, mimicking the behavior of the application in a production-like environment. This testing phase goes beyond unit tests, focusing on the interplay of various elements to confirm the overall system's integrity and functional correctness.

Several strategies can be employed for effective integration testing of serverless architectures, each with its advantages and disadvantages. The choice of strategy depends on factors like the complexity of the application, the number of services involved, and the desired level of test coverage.

Strategies for Integration Testing

A robust integration testing strategy is fundamental to the reliability of serverless applications. The following strategies are commonly employed to achieve comprehensive testing:

- Contract Testing: This strategy focuses on verifying that the communication between services adheres to predefined contracts. It ensures that data exchanged between functions and services meets the expected formats and structures. This approach helps to isolate the impact of changes and ensures that services remain compatible after updates.

- End-to-End Testing: This strategy simulates user interactions from start to finish, traversing multiple functions and services. It verifies the entire application flow, including event triggers, data transformations, and responses. This approach provides a holistic view of the application's functionality but can be more complex to set up and maintain.

- Component Testing: This strategy isolates and tests specific components or groups of components. It verifies the interactions within a defined scope, such as a set of functions and their dependencies. This approach offers a balance between isolation and coverage, allowing for focused testing of specific functionalities.

- Mocking and Stubbing: This technique involves replacing external dependencies, such as databases or external APIs, with mock objects or stubs. This allows for controlled testing of function interactions without relying on the actual external services. This approach helps to speed up tests and isolate failures.
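As a sketch of mocking, the handler below receives its DynamoDB table as a parameter, so a test can pass a `unittest.mock.MagicMock` instead of a real boto3 `Table` resource and then assert on the `put_item` call. The `make_handler` factory, the form fields, and the status codes are hypothetical choices for illustration.

```python
import json
from unittest.mock import MagicMock


def make_handler(table):
    """Build a handler bound to a table-like object (hypothetical injection pattern)."""
    def handler(event, context=None):
        body = json.loads(event.get("body") or "{}")
        if "email" not in body:
            return {"statusCode": 400, "body": json.dumps({"error": "email is required"})}
        # Same call shape as boto3's Table.put_item(Item=...).
        table.put_item(Item={"email": body["email"], "name": body.get("name", "")})
        return {"statusCode": 201, "body": json.dumps({"stored": body["email"]})}
    return handler


def test_stores_submission():
    table = MagicMock()  # stands in for a boto3 DynamoDB Table resource
    handler = make_handler(table)
    event = {"body": json.dumps({"email": "a@example.com", "name": "Ada"})}

    response = handler(event)

    assert response["statusCode"] == 201
    table.put_item.assert_called_once_with(Item={"email": "a@example.com", "name": "Ada"})


def test_rejects_missing_email():
    table = MagicMock()
    response = make_handler(table)({"body": "{}"})
    assert response["statusCode"] == 400
    table.put_item.assert_not_called()  # invalid input never reaches the database


if __name__ == "__main__":
    test_stores_submission()
    test_rejects_missing_email()
    print("ok")
```

Injecting the dependency keeps the handler unaware of whether it is talking to a mock, a local emulator, or the real service.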

Examples of Integration Tests

Effective integration tests validate the interaction between various serverless components. Here are examples demonstrating how to verify interactions within a serverless application:

- Scenario: A user uploads an image to an S3 bucket, triggering a Lambda function to resize the image and store it in another bucket.

- Test Case: Upload a sample image to the designated S3 bucket. Verify that the Lambda function is triggered, the image is resized, and the resized image is stored in the target S3 bucket.

- Verification: Check the logs of the Lambda function for successful execution. Compare the original image size with the resized image size. Confirm the presence of the resized image in the target S3 bucket.

- Scenario: An API Gateway endpoint receives a request, which triggers a Lambda function to retrieve data from a DynamoDB table and return it.

- Test Case: Send a request to the API Gateway endpoint. Verify that the Lambda function is triggered, retrieves the correct data from DynamoDB, and returns the data in the expected format.

- Verification: Inspect the API Gateway response for the retrieved data. Verify that the data matches the expected values stored in the DynamoDB table. Check the Lambda function logs for successful execution.
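The API Gateway scenario can be turned into an automated check along the lines below. The sketch assumes an API Gateway proxy-style event with an `id` path parameter and swaps DynamoDB for a small in-memory `FakeTable` class (hypothetical) whose `get_item` mimics boto3's response shape, including omitting `Item` when no record matches.

```python
import json


class FakeTable:
    """In-memory stand-in for a boto3 DynamoDB Table with a single partition key."""

    def __init__(self, key_name, items=()):
        self.key_name = key_name
        self._items = {item[key_name]: dict(item) for item in items}

    def get_item(self, Key):
        item = self._items.get(Key[self.key_name])
        # boto3 omits "Item" from the response when no record matches.
        return {"Item": dict(item)} if item is not None else {}


def make_get_user_handler(table):
    """Hypothetical GET /users/{id} handler for an API Gateway proxy integration."""
    def handler(event, context=None):
        user_id = (event.get("pathParameters") or {}).get("id")
        if not user_id:
            return {"statusCode": 400, "body": json.dumps({"error": "missing id"})}
        item = table.get_item(Key={"id": user_id}).get("Item")
        if item is None:
            return {"statusCode": 404, "body": json.dumps({"error": "not found"})}
        return {
            "statusCode": 200,
            "headers": {"Content-Type": "application/json"},
            "body": json.dumps(item),
        }
    return handler


def run_integration_check():
    """Exercise the request -> handler -> table -> response path and verify each step."""
    table = FakeTable("id", [{"id": "42", "name": "Ada"}])
    handler = make_get_user_handler(table)

    found = handler({"pathParameters": {"id": "42"}})
    assert found["statusCode"] == 200
    assert json.loads(found["body"]) == {"id": "42", "name": "Ada"}

    missing = handler({"pathParameters": {"id": "7"}})
    assert missing["statusCode"] == 404
    return "passed"


if __name__ == "__main__":
    print(run_integration_check())
```

Because the handler only depends on a `get_item(Key=...)` method, the same check can later be pointed at a real table in DynamoDB Local or LocalStack by passing a boto3 `Table` resource instead of the fake.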

Integration Testing Scenarios

The following table presents common integration testing scenarios for serverless applications, along with test cases and expected outcomes.

| Scenario | Test Case | Expected Outcome | Services Involved |
|---|---|---|---|
| User submits a form via API Gateway, triggering a Lambda function to store data in DynamoDB. | Submit a test form with valid data through the API Gateway. | Data is successfully stored in the DynamoDB table; a success response is returned. | API Gateway, Lambda, DynamoDB |
| An object is uploaded to an S3 bucket, triggering a Lambda function to process it and send a notification via SNS. | Upload a test object to the S3 bucket. | Lambda function is triggered, object is processed, and a notification is sent to the SNS topic. | S3, Lambda, SNS |
| A message is published to an SQS queue, triggering a Lambda function to process the message and update a database. | Publish a test message to the SQS queue. | Lambda function is triggered, message is processed, and the database is updated with the correct information. | SQS, Lambda, Database (e.g., DynamoDB, RDS) |
| An event occurs in a Kinesis stream, triggering a Lambda function to analyze the event and store the results in a data warehouse (e.g., Redshift). | Inject a test event into the Kinesis stream. | Lambda function is triggered, the event is analyzed, and the results are successfully stored in the data warehouse. | Kinesis, Lambda, Data Warehouse (e.g., Redshift) |
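For the SQS scenario, a common pattern is to process each message independently and report failures per message. The sketch returns a `batchItemFailures` list, which Lambda honors only when `ReportBatchItemFailures` is enabled on the SQS event source mapping; the `save` callback stands in for the database write and is hypothetical.

```python
import json


def make_sqs_handler(save):
    """Build a handler that decodes each SQS message body and passes it to `save`."""
    def handler(event, context=None):
        failures = []
        for record in event.get("Records", []):
            try:
                message = json.loads(record["body"])
                save(message)  # e.g. a DynamoDB put_item or an RDS INSERT
            except Exception:
                # Mark only this message as failed so only it is retried.
                failures.append({"itemIdentifier": record["messageId"]})
        return {"batchItemFailures": failures}
    return handler


def test_partial_batch_failure():
    stored = []
    handler = make_sqs_handler(stored.append)
    event = {"Records": [
        {"messageId": "m1", "body": json.dumps({"order_id": 1})},
        {"messageId": "m2", "body": "not-json"},
    ]}
    result = handler(event)
    assert stored == [{"order_id": 1}]
    assert result == {"batchItemFailures": [{"itemIdentifier": "m2"}]}


if __name__ == "__main__":
    test_partial_batch_failure()
    print("ok")
```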

Debugging Serverless Applications Locally

Debugging serverless applications locally is crucial for identifying and resolving issues during development. Effective debugging allows developers to understand the behavior of their functions, pinpoint the root causes of errors, and ensure the application functions as expected before deployment. This section outlines techniques and tools for effectively debugging serverless functions within a local development environment.

Techniques for Debugging Serverless Functions Locally

Debugging serverless functions locally involves a combination of techniques to gain insights into the function's execution flow and identify potential problems. This typically involves setting breakpoints, examining variable values, and analyzing logs.

- Setting Breakpoints: Breakpoints allow the developer to pause the execution of the function at specific lines of code. This enables the inspection of variable values, the execution path, and the overall state of the function at that point in time. The specific method for setting breakpoints depends on the IDE and the programming language being used. For example, in Visual Studio Code, you can click in the gutter next to a line of code to set a breakpoint.

- Logging: Implementing logging is fundamental for understanding the function's behavior. Developers can use logging statements to record information such as the input parameters, the values of key variables, and any errors or exceptions that occur during execution. Logging provides valuable insights into the function's execution flow and can help diagnose problems that are not immediately apparent. Different logging levels (e.g., DEBUG, INFO, WARN, ERROR) can be used to control the verbosity of the logs.

- Inspecting Variable Values: When the execution of a function is paused at a breakpoint, developers can inspect the values of variables in the current scope. This allows them to verify that the variables contain the expected values and identify any unexpected behavior. Most IDEs provide tools for viewing the values of variables, such as a "watch" window or the ability to hover over a variable name.

- Stepping Through Code: Developers can step through the code line by line, executing each statement and observing its effect. This allows them to understand the exact sequence of operations performed by the function and identify the source of any errors. Stepping through code is typically done using the "step over," "step into," and "step out" commands provided by the IDE.
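These techniques are easiest to apply when the handler can be started in-process. A minimal sketch, assuming a Python function: a small local entry point calls the handler directly with a sample event, so an IDE debugger (or Python's built-in `breakpoint()`) can pause inside it. The handler logic, its log messages, and the `invoke_locally` helper are hypothetical.

```python
import json
import logging

logger = logging.getLogger("myFunction")

# Sample event; a realistic payload could instead be loaded from a JSON file,
# for example one produced by `sam local generate-event`.
SAMPLE_EVENT = {"key": "value"}


def handler(event, context=None):
    """Hypothetical handler used to demonstrate local debugging."""
    logger.info("Event received: %s", json.dumps(event))
    value = event.get("key")
    logger.debug("Processing input: %s", value)  # a good spot for a breakpoint
    if not isinstance(value, str):
        logger.error("Error processing data: Invalid data format")
        raise ValueError("Invalid data format")
    return {"result": value.upper()}


def invoke_locally(event):
    """Call the handler in the current process so IDE breakpoints inside it are hit."""
    return handler(event, context=None)


if __name__ == "__main__":
    logging.basicConfig(level=logging.DEBUG)
    # breakpoint()  # uncomment to drop into pdb without an IDE
    print(invoke_locally(SAMPLE_EVENT))
```

Running this file under the IDE's debugger lets you set breakpoints in `handler`, inspect `event` and `value`, and step over or into each call.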

Utilizing IDE Debugging Tools for Serverless Applications

Integrated Development Environments (IDEs) provide powerful debugging tools that significantly enhance the debugging process. The specific configuration process varies depending on the IDE and the serverless framework being used.

- IDE Selection: Choose an IDE that supports debugging for the programming language and serverless framework you are using. Popular choices include Visual Studio Code, IntelliJ IDEA, and PyCharm, each offering extensive debugging features.

- Framework Integration: Ensure the IDE is properly configured to work with your serverless framework (e.g., AWS SAM, Serverless Framework). This often involves installing necessary extensions or plugins within the IDE.

- Configuration Files: The debugging configuration is typically defined in a configuration file (e.g., `launch.json` in VS Code). This file specifies how the debugger should launch and connect to the serverless function. Key settings include the function's handler, the runtime environment, and any environment variables.

- Attaching the Debugger: The debugger needs to be attached to the locally running serverless function. This can be done by specifying the appropriate configuration in the IDE and then starting the debugging session. The IDE will then connect to the function and allow you to set breakpoints, inspect variables, and step through the code.

- Example Configuration (VS Code with AWS SAM): To debug an AWS Lambda function locally using VS Code and AWS SAM, you would typically add a debug configuration to the `launch.json` file in your project's `.vscode` directory (the `.aws-sam` directory holds SAM build output, not editor settings). This configuration specifies the `runtime`, the `handler` (e.g., `index.handler`), and the `template` file (e.g., `template.yaml`) that defines your serverless application. When the session starts, the function is run locally through the SAM CLI (`sam local invoke`) and the debugger attaches to it.

Example Debugging Logs and Interpretation

Debugging logs provide valuable insights into the function's behavior. Analyzing these logs allows developers to understand the execution flow, identify errors, and diagnose performance issues.

Example Log Snippet:

2023-10-27T10:00:00.000Z INFO [myFunction] - Event received: "key": "value"

2023-10-27T10:00:00.001Z DEBUG [myFunction] - Processing input: value

2023-10-27T10:00:00.002Z ERROR [myFunction] - Error processing data: Invalid data format

2023-10-27T10:00:00.003Z INFO [myFunction] - Function completed with error

Interpretation:

- The function `myFunction` received an event containing the key-value pair `"key": "value"`.

- The function attempted to process the value "value".

- An error occurred during data processing, indicating an invalid data format.

- The final entry confirms that the invocation ended in an error state rather than completing successfully.
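When logs grow beyond a few lines, a small script can perform the same interpretation automatically. This sketch assumes each entry sits on one line in the layout of the snippet above (timestamp, level, bracketed function name, optional dash, message); real Lambda, SAM CLI, or logging-library output will need its own pattern.

```python
import re
from collections import Counter

# Assumed layout: "<timestamp> <LEVEL> [<function>] - <message>" (the dash is optional).
LOG_PATTERN = re.compile(
    r"^(?P<timestamp>\S+)\s+(?P<level>DEBUG|INFO|WARNING|WARN|ERROR)\s+"
    r"\[(?P<function>[^\]]+)\]\s*-?\s*(?P<message>.*)$"
)


def parse_log(lines):
    """Parse matching lines into dicts; lines in other formats are skipped."""
    entries = []
    for line in lines:
        match = LOG_PATTERN.match(line.strip())
        if match:
            entries.append(match.groupdict())
    return entries


def summarize(entries):
    """Count entries per level and collect ERROR messages with their timestamps."""
    levels = Counter(entry["level"] for entry in entries)
    errors = [f'{e["timestamp"]} {e["function"]}: {e["message"]}'
              for e in entries if e["level"] == "ERROR"]
    return levels, errors


if __name__ == "__main__":
    sample = [
        '2023-10-27T10:00:00.000Z INFO [myFunction] - Event received: "key": "value"',
        "2023-10-27T10:00:00.002Z ERROR [myFunction] - Error processing data: Invalid data format",
    ]
    levels, errors = summarize(parse_log(sample))
    print(dict(levels))
    print(errors)
```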

Monitoring and Logging in Local Testing

Monitoring and logging are crucial components of a robust serverless application development lifecycle, even within a local testing environment. While local testing allows for rapid iteration and verification of code changes, it's essential to simulate the production environment's observability aspects. This includes capturing and analyzing logs, and monitoring application behavior to identify and address potential issues before deployment. Effective local monitoring and logging provide insights into function execution, error identification, performance bottlenecks, and the overall health of the application.

Importance of Monitoring and Logging in Local Testing Environments

Monitoring and logging in local testing provide several key benefits, mirroring the advantages observed in production environments. These benefits are crucial for efficient debugging, performance optimization, and overall application stability.

- Early Error Detection: Local logging allows developers to immediately identify errors, exceptions, and warnings that might occur during function execution. This early detection facilitates quicker debugging and resolution of issues.

- Performance Profiling: Monitoring tools can track function execution times, resource utilization (e.g., memory, CPU), and other performance metrics. This data helps pinpoint performance bottlenecks and optimize code for efficiency.

- Debugging Support: Detailed logs provide context for debugging, including timestamps, function names, input parameters, and error messages. This information is invaluable for understanding the flow of execution and diagnosing complex problems.

- Behavioral Analysis: Monitoring tools enable developers to observe how their functions interact with other services and resources. This can reveal unexpected behavior, such as excessive resource consumption or incorrect data handling.

- Simulating Production Observability: By implementing monitoring and logging locally, developers can gain experience with the tools and techniques used in production environments. This prepares them for deploying and managing serverless applications in real-world scenarios.

Setting Up Logging and Monitoring Tools for Serverless Applications in a Local Environment

Setting up logging and monitoring in a local environment involves choosing appropriate tools, configuring them to capture relevant data, and visualizing the collected information. The specific tools and configurations will depend on the chosen programming language, the serverless platform (e.g., AWS Lambda, Azure Functions, Google Cloud Functions), and the desired level of detail. However, the general approach remains consistent.

- Choosing Logging Tools: Select a logging library or framework suitable for the programming language used in the serverless functions. Popular options include:

- Node.js: `winston`, `pino`, `bunyan`

- Python: `logging` (built-in), `structlog`

- Java: `java.util.logging`, `logback`, `log4j`

These libraries allow developers to write log messages with different severity levels (e.g., DEBUG, INFO, WARNING, ERROR) and formats.

- Choosing Monitoring Tools: For local monitoring, developers can utilize:

- Local Log Viewers: Tools like `jq` (for parsing JSON logs), `grep`, and `less` can be used to filter and analyze log files.

- Local Monitoring Agents: Some monitoring tools offer agents that can be installed locally to collect metrics and visualize them. Examples include Prometheus with Grafana, or tools that integrate with the local serverless framework being used (e.g., the Serverless Framework).

- IDE Integration: Some Integrated Development Environments (IDEs) offer built-in logging and debugging features that can be used to inspect logs and monitor function execution.

- Configuring Logging: Configure the chosen logging library to:

- Set Logging Levels: Determine the appropriate logging levels for different types of messages. For example, use DEBUG for detailed information, INFO for general operational information, WARNING for potential issues, and ERROR for critical errors.

- Define Log Format: Specify the format of the log messages. JSON format is often preferred for structured data, making it easier to parse and analyze logs.

- Choose Output Destination: Direct log output to the console (for immediate visibility) and/or to a log file (for persistent storage).

- Configuring Monitoring: Configure the chosen monitoring tools to:

- Collect Metrics: Identify key metrics to track, such as function execution time, memory usage, number of invocations, and error rates.

- Instrument Code: Add instrumentation to the serverless functions to collect these metrics. This may involve using the monitoring tool's API or library to record start and end times, measure resource consumption, and track error occurrences.

- Visualize Data: Set up dashboards or visualizations to display the collected metrics. This can help identify trends, anomalies, and performance bottlenecks.
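The logging configuration described above (levels, a JSON format, console and file destinations) can be sketched with Python's built-in `logging` module. The `JsonFormatter` class, the logger name `serverless.local`, and the `context` field passed via `extra` are assumptions for illustration; libraries such as `structlog` provide comparable structured output.

```python
import json
import logging
import sys


class JsonFormatter(logging.Formatter):
    """Render each log record as a single-line JSON object."""

    def format(self, record):
        payload = {
            "timestamp": self.formatTime(record, "%Y-%m-%dT%H:%M:%S"),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        # Structured fields passed as logger.info(..., extra={"context": {...}}).
        context = getattr(record, "context", None)
        if context:
            payload["context"] = context
        if record.exc_info:
            payload["exception"] = self.formatException(record.exc_info)
        return json.dumps(payload, default=str)


def configure_logging(level=logging.DEBUG, logfile=None):
    """Send JSON logs to stdout and, optionally, to a file."""
    logger = logging.getLogger("serverless.local")
    logger.setLevel(level)
    logger.propagate = False  # avoid duplicate lines via the root logger
    logger.handlers.clear()
    destinations = [logging.StreamHandler(sys.stdout)]
    if logfile:
        destinations.append(logging.FileHandler(logfile))
    for handler in destinations:
        handler.setFormatter(JsonFormatter())
        logger.addHandler(handler)
    return logger


if __name__ == "__main__":
    log = configure_logging(logging.INFO)
    log.debug("Suppressed at INFO level")
    log.info("Image processed", extra={"context": {"key": "photos/cat.jpg"}})
```

Because each line is a JSON object, the output can be filtered with `jq`, for example `python app.py | jq 'select(.level == "ERROR")'`.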

Steps Required to Implement Logging and Monitoring in a Local Environment

Implementing logging and monitoring in a local serverless environment involves a series of well-defined steps, ensuring that relevant data is captured, analyzed, and visualized effectively. The following steps provide a structured approach:

- Choose a Logging Library: Select a logging library suitable for the programming language used in the serverless functions. For example, in Python, use the built-in `logging` module or a third-party library like `structlog`.

- Configure the Logging Library: Configure the logging library to format log messages in a structured format (e.g., JSON) and direct output to the console and/or a log file. Set the desired logging levels (DEBUG, INFO, WARNING, ERROR) to control the verbosity of the logs.

- Choose a Monitoring Tool: Select a monitoring tool that is appropriate for local development. This could be a local log viewer (like `jq` for JSON logs), a local monitoring agent (like Prometheus with Grafana), or IDE integrated features.

- Instrument Serverless Functions: Add instrumentation to the serverless functions to collect relevant metrics. This may involve using the monitoring tool's API or library to measure execution time, track resource consumption, and count errors. For example, in Python, record start and end times with `time.perf_counter()`; the `timeit` module is better suited to benchmarking isolated snippets than to instrumenting a running function.

- Integrate with a Local Serverless Framework (Optional): If using a local serverless framework (e.g., Serverless Framework, AWS SAM CLI, Azure Functions Core Tools, Google Cloud Functions Framework), leverage its built-in logging and monitoring capabilities, and configure the framework to route logs and metrics to the chosen tools.

- Run and Test Functions Locally: Execute the serverless functions locally and observe the logs and metrics. Verify that the logs contain the expected information and that the monitoring tools are correctly capturing the relevant metrics.

- Analyze Logs and Metrics: Analyze the logs to identify errors, warnings, and other issues. Examine the metrics to identify performance bottlenecks and optimize function execution. Use the log viewer, the monitoring dashboards, or IDE features to visualize the data.

- Iterate and Refine: Based on the analysis, refine the logging and monitoring configurations as needed. Adjust logging levels, add or remove metrics, and modify the visualizations to improve the clarity and usefulness of the data.
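For the instrumentation step, the sketch below uses a hypothetical `instrumented` decorator that counts invocations and errors and records durations with `time.perf_counter()`. It keeps metrics in an in-process dictionary so it runs with the standard library alone; with Prometheus, the same values would typically be recorded through a client library's counter and histogram types instead.

```python
import functools
import time
from collections import defaultdict

# In-process metrics store: function name -> counters and raw durations.
METRICS = defaultdict(lambda: {"invocations": 0, "errors": 0, "durations_ms": []})


def instrumented(func):
    """Record invocation count, error count, and wall-clock duration of `func`."""
    @functools.wraps(func)
    def wrapper(*args, **kwargs):
        stats = METRICS[func.__name__]
        stats["invocations"] += 1
        start = time.perf_counter()
        try:
            return func(*args, **kwargs)
        except Exception:
            stats["errors"] += 1
            raise
        finally:
            stats["durations_ms"].append((time.perf_counter() - start) * 1000)
    return wrapper


def summary(name):
    """Aggregate the recorded metrics for one function."""
    stats = METRICS[name]
    durations = stats["durations_ms"]
    calls = stats["invocations"]
    return {
        "invocations": calls,
        "errors": stats["errors"],
        "error_rate": stats["errors"] / calls if calls else 0.0,
        "avg_ms": sum(durations) / len(durations) if durations else 0.0,
        "max_ms": max(durations, default=0.0),
    }


@instrumented
def resize_handler(event, context=None):
    """Hypothetical handler used to exercise the decorator."""
    if "key" not in event:
        raise KeyError("key")
    time.sleep(0.01)  # stand-in for real work
    return {"ok": True}


if __name__ == "__main__":
    resize_handler({"key": "a.jpg"})
    try:
        resize_handler({})
    except KeyError:
        pass
    print(summary("resize_handler"))
```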

Performance Testing Locally

Performance testing is crucial for serverless applications to ensure they can handle expected workloads and maintain acceptable response times. While cloud-based testing provides the most realistic environment, local performance testing offers a valuable, cost-effective, and rapid feedback loop during development. This approach allows developers to identify performance bottlenecks early in the development cycle, preventing costly issues in production.

Measuring Execution Time and Resource Consumption

Accurately measuring the execution time and resource consumption of serverless functions in a local environment requires careful consideration of available tools and techniques. The goal is to gather data that reflects function behavior under various simulated load conditions.

- Execution Time Measurement: Execution time is a fundamental metric for serverless function performance. Accurate measurements are crucial to identifying performance issues.

- Tools and Techniques:

- Code Profiling: Utilize code profiling tools, such as those integrated into your IDE (e.g., VS Code, IntelliJ) or language-specific profilers (e.g., `cProfile` for Python, `perf` for Linux). These tools provide detailed breakdowns of function execution, identifying time-consuming operations.

- Timing Decorators/Wrappers: Implement custom decorators or wrappers around your function code to record start and end times, calculating the elapsed time. This approach is straightforward and can be easily integrated into your testing framework.

- Logging with Timestamps: Integrate detailed logging with timestamps at the beginning and end of function execution, as well as at key points within the function's logic. This allows for precise timing analysis, especially when dealing with asynchronous operations or complex workflows.

- Resource Consumption Measurement: Monitoring resource usage, such as memory and CPU, provides valuable insights into function efficiency and potential scaling limitations.

- Tools and Techniques:

- Operating System Monitoring Tools: Leverage operating system monitoring tools like `top`, `htop` (Linux), or Task Manager (Windows) to monitor CPU and memory usage of the process running your local serverless environment.

- Containerization Tools: If using containerization (e.g., Docker) for local development, use container monitoring tools (e.g., `docker stats`) to track resource consumption of the container running your function.

- Custom Metrics: Implement custom metrics within your function code to track specific resource usage, such as the number of database queries, the size of data processed, or the number of external API calls. These metrics can be logged and analyzed to pinpoint resource-intensive operations.

- Load Generation: Simulate different load conditions to evaluate function performance under varying workloads. This involves generating a series of requests to the function with different parameters, concurrency levels, and request rates.

- Tools and Techniques:

- Command-Line Tools: Utilize command-line tools such as `curl`, `wrk`, or `siege` to generate HTTP requests to your locally running function.

- Scripting Languages: Use scripting languages like Python or Node.js to write custom load testing scripts that generate requests and measure response times.

- Load Testing Frameworks: Consider using load testing frameworks such as JMeter or Gatling. These frameworks offer advanced features, including simulating complex user behavior, managing concurrent users, and analyzing performance metrics.
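Combining the timing, resource, and load-generation techniques above, a short standard-library Python script can drive a function at several concurrency levels and summarize latency. The sketch assumes `invoke` is any zero-argument callable; the nearest-rank p95, the thread-based concurrency, and the use of `tracemalloc` (which sees only Python allocations in the load-generating process, not a container's memory) are illustrative choices rather than a prescribed method.

```python
import math
import statistics
import time
import tracemalloc
from concurrent.futures import ThreadPoolExecutor


def percentile(sorted_values, pct):
    """Nearest-rank percentile of an already sorted, non-empty list."""
    if not sorted_values:
        raise ValueError("no samples")
    rank = max(1, math.ceil(pct * len(sorted_values) / 100))
    return sorted_values[rank - 1]


def run_load(invoke, total_requests=100, concurrency=10):
    """Call `invoke()` total_requests times from `concurrency` threads and summarize."""
    def timed_call(_):
        start = time.perf_counter()
        try:
            invoke()
            ok = True
        except Exception:
            ok = False
        return (time.perf_counter() - start) * 1000, ok

    tracemalloc.start()  # tracks Python allocations in this process only
    wall_start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        results = list(pool.map(timed_call, range(total_requests)))
    wall_seconds = time.perf_counter() - wall_start
    _, peak_bytes = tracemalloc.get_traced_memory()
    tracemalloc.stop()

    latencies = sorted(ms for ms, _ in results)
    return {
        "requests": total_requests,
        "errors": sum(1 for _, ok in results if not ok),
        "throughput_rps": total_requests / wall_seconds,
        "avg_ms": statistics.mean(latencies),
        "p95_ms": percentile(latencies, 95),
        "max_ms": latencies[-1],
        "peak_memory_kb": peak_bytes / 1024,
    }


if __name__ == "__main__":
    # Stand-in workload; replace with a call to your locally running function.
    def fake_invocation():
        time.sleep(0.005)

    for concurrency in (1, 5, 10):
        report = run_load(fake_invocation, total_requests=50, concurrency=concurrency)
        print(concurrency, {k: round(v, 2) for k, v in report.items()})
```

To target an API started with `sam local start-api` (which listens on `http://127.0.0.1:3000` by default), `invoke` could wrap `urllib.request.urlopen` against one of your routes; container memory is then better observed with `docker stats`. Local emulators do not reproduce Lambda's scaling behavior, so treat the numbers as relative comparisons between code versions rather than production estimates.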

Performance Metrics Chart

The following table describes a hypothetical chart illustrating the performance metrics of a serverless function under various load conditions. The chart aims to visualize the relationship between request load (measured in requests per second, RPS), average response time (in milliseconds, ms), and function resource consumption (in terms of memory usage, MB).

| Metric | Description |
|---|---|
| X-Axis: Load (RPS) | Represents the rate at which requests are sent to the serverless function, from 1 RPS up to 100 RPS in steps of roughly 10 RPS (1, 10, 20, ..., 100). This simulates increasing traffic load on the function. |
| Primary Y-Axis: Average Response Time (ms) | Measures the average time taken for the serverless function to process and return a response for each request. This is a key indicator of function performance. The scale ranges from 0 ms to 500 ms, with appropriate increments. |
| Secondary Y-Axis: Memory Usage (MB) | Indicates the amount of memory the function consumes during execution. This metric is crucial for identifying memory leaks or inefficiencies. The scale ranges from 0 MB to 256 MB, with appropriate increments. |
| Data Series: Response Time | A line graph illustrating the average response time at each load level. Initially, the response time is expected to be low (e.g., under 50 ms) at low RPS. As the load increases, the response time might start to increase gradually. If the function is not properly optimized, the response time could increase dramatically at higher RPS levels, indicating performance degradation. |
| Data Series: Memory Usage | A line graph depicting the memory usage at each load level. At low RPS, the memory usage should be relatively constant. As the load increases, the memory usage might increase slightly, depending on the function's logic. If the function has memory leaks or inefficient memory management, the memory usage might increase steadily, potentially leading to out-of-memory errors at higher RPS levels. |
| Observations | This chart would visually demonstrate how the serverless function's performance changes under different load conditions, highlighting potential bottlenecks and areas for optimization. The data allows for a clear understanding of the function's scalability and resource efficiency. |

Security Testing in Local Environments

Incorporating security testing into the local development workflow is crucial for identifying vulnerabilities early in the development lifecycle, reducing the risk of deploying insecure serverless applications. Performing security tests locally allows developers to rapidly iterate and fix issues without incurring the costs and delays associated with deploying to a staging or production environment. This proactive approach helps ensure a more secure and robust application from the outset.

Security testing in a local serverless environment involves simulating potential attacks and verifying that the application responds as expected. This includes testing for common vulnerabilities like injection flaws, authentication and authorization issues, and data exposure. The goal is to identify and mitigate security risks before the application is deployed.

Simulating Security Vulnerabilities

Simulating security vulnerabilities in a local serverless setup requires a deliberate approach: intentionally introducing weaknesses into the function code or configuration, then using tools and techniques to detect them and assess their impact. This shows developers how attackers might exploit such flaws and helps them implement effective countermeasures.

For example, to simulate an SQL injection vulnerability, modify a function that queries a database so that it places unsanitized user input directly into the SQL statement. Similarly, to simulate cross-site scripting (XSS), introduce code that reflects user-provided data without proper encoding. Here's a breakdown of how to simulate and test for specific vulnerabilities:

- SQL Injection: Modify functions that interact with databases to accept user input directly within SQL queries. Use tools like SQLMap or manual injection techniques to test if the application is vulnerable. For instance, consider a function that retrieves user data based on a user ID. If the function doesn't properly sanitize the user ID input, an attacker could inject malicious SQL code to access or modify other user data.

- Cross-Site Scripting (XSS): Introduce code that reflects user input without proper encoding. Test by injecting malicious JavaScript into input fields and observing whether it executes in the browser. If the application fails to sanitize or encode the input, the injected script runs in the context of the user's browser, potentially allowing an attacker to steal cookies, redirect the user, or perform other malicious actions.

- Authentication and Authorization Issues: Simulate scenarios where authentication or authorization mechanisms are bypassed or improperly implemented, for instance by manipulating tokens, bypassing access controls, or exploiting weaknesses in the authentication process. For example, consider a function that checks user roles: if its authorization logic is flawed, an attacker could bypass the role check and access restricted resources.

- Server-Side Request Forgery (SSRF): Configure functions to make HTTP requests to external resources. Test by injecting malicious URLs to see if the application can be tricked into making requests to internal or restricted resources.

- Insecure Dependencies: Introduce outdated or vulnerable dependencies into the project. Use `npm audit` or the equivalent tool for your package manager to verify that these vulnerabilities are detected.
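To make the SQL injection and XSS simulations concrete, the sketch below uses an in-memory SQLite database as a stand-in for the function's data store and contrasts deliberately vulnerable helpers with safe ones (a parameterized query and `html.escape`). All function names are hypothetical, and the vulnerable versions are meant only for local experiments.

```python
import html
import sqlite3


def setup_db():
    """Create a throwaway in-memory database with two users."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id TEXT PRIMARY KEY, name TEXT)")
    conn.executemany("INSERT INTO users VALUES (?, ?)", [("1", "Ada"), ("2", "Grace")])
    return conn


def get_user_vulnerable(conn, user_id):
    # INTENTIONALLY VULNERABLE: user input is concatenated into the SQL text.
    query = f"SELECT id, name FROM users WHERE id = '{user_id}'"
    return conn.execute(query).fetchall()


def get_user_safe(conn, user_id):
    # Parameterized query: the driver treats user_id strictly as data.
    return conn.execute("SELECT id, name FROM users WHERE id = ?", (user_id,)).fetchall()


def render_greeting_vulnerable(name):
    # INTENTIONALLY VULNERABLE: reflects input into HTML without encoding.
    return f"<p>Hello, {name}</p>"


def render_greeting_safe(name):
    # Encodes <, >, &, and quotes so injected markup is displayed, not executed.
    return f"<p>Hello, {html.escape(name)}</p>"


if __name__ == "__main__":
    conn = setup_db()
    payload = "' OR '1'='1"
    print(get_user_vulnerable(conn, payload))  # matches every row
    print(get_user_safe(conn, payload))        # []
    print(render_greeting_safe("<script>alert(1)</script>"))
```

Running a scanner such as SQLMap against a local endpoint backed by the vulnerable helper should flag the injection, while the parameterized version should not.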

Common Security Testing Techniques for Serverless Applications

Employing various security testing techniques is essential to identify and mitigate vulnerabilities in serverless applications. These techniques range from static code analysis to penetration testing, each offering unique insights into the application's security posture. A comprehensive approach combines multiple techniques to ensure a thorough assessment. The following table outlines common security testing techniques, along with the tools used and best practices:

| Test Type | Tools | Best Practices | Local Implementation Considerations |
|---|---|---|---|
| Static Code Analysis | SonarQube, Bandit, ESLint with security plugins | Regularly scan code for vulnerabilities, enforce secure coding standards, and address identified issues promptly. | Integrate static analysis tools into the local development environment, run scans on every code change, and ensure that any identified vulnerabilities are fixed before committing code. |
| Dynamic Application Security Testing (DAST) | OWASP ZAP, Burp Suite, Nuclei | Automated scanning of running applications, simulating attacks and identifying vulnerabilities like XSS, SQL injection, and broken authentication. | Set up a local testing environment that mimics the production environment. Configure the testing tools to interact with the locally running serverless functions and API endpoints. |
| Penetration Testing | Manual testing, Metasploit, custom scripts | Simulate real-world attacks to identify vulnerabilities that automated tools might miss. Focus on business logic flaws and complex attack scenarios. | Conduct penetration tests on the locally deployed application. Use a variety of attack techniques to simulate potential threats, and then carefully document and address any vulnerabilities found. |
| Dependency Scanning | npm audit, Snyk, OWASP Dependency-Check | Identify and mitigate vulnerabilities in project dependencies, including libraries and frameworks. | Run dependency scans regularly as part of the build process and before deployments. Update dependencies to the latest secure versions. Ensure local development environments are configured to flag vulnerable dependencies. |

Final Thoughts

In conclusion, mastering the art of testing serverless applications locally is indispensable for successful serverless development. By embracing the strategies outlined in this guide, developers can create robust, reliable, and secure serverless applications. From setting up a local development environment to simulating cloud services, writing unit tests, and conducting comprehensive integration tests, each aspect contributes to a more efficient and dependable development workflow.

As serverless technologies continue to evolve, the importance of rigorous local testing will only amplify, solidifying its role as a cornerstone of modern software engineering practices.

Questions Often Asked

What are the primary benefits of testing serverless applications locally?

Local testing allows for rapid iteration, faster feedback loops, reduced cloud costs during development, and the ability to work offline, all of which improve developer productivity.

What tools are commonly used for simulating AWS services locally?

Tools like LocalStack and Moto are widely used. LocalStack provides a comprehensive set of AWS service emulations, while Moto focuses on mocking specific AWS SDK calls for Python testing.

How can I debug serverless functions running locally?

You can use IDE debuggers with breakpoints, logging statements, and tools like the Serverless Framework or AWS SAM CLI to enable local debugging, allowing you to step through code and inspect variables.

Is it possible to test event triggers locally?

Yes, you can simulate events from services like S3, DynamoDB, and API Gateway by crafting specific event payloads and feeding them to your local function invocations, allowing you to test event-driven architectures.