Testing disaster recovery scenarios in the cloud is critical for ensuring business continuity in the event of an outage or disaster. Cloud-based disaster recovery offers several advantages over traditional on-premise solutions, including scalability, cost-effectiveness, and geographic redundancy. However, the effectiveness of any disaster recovery strategy hinges on rigorous and consistent testing.

This document provides a detailed examination of the testing process, covering all aspects from initial planning and preparation to execution, verification, and failback. We will delve into the core principles of cloud disaster recovery, explore different recovery models, and offer practical guidance on defining recovery objectives, selecting appropriate tools, and automating key processes. The ultimate goal is to equip readers with the knowledge and strategies needed to build a resilient and reliable disaster recovery plan for their cloud environments.

Understanding Disaster Recovery in the Cloud

Cloud-based disaster recovery (DR) has become increasingly vital for organizations seeking to maintain business continuity in the face of unforeseen events. It represents a strategic approach to data protection and system availability, leveraging the inherent scalability and flexibility of cloud computing. This section delves into the core principles, models, and comparative advantages of cloud DR.

Core Principles of Cloud-Based Disaster Recovery

Cloud-based disaster recovery operates on several fundamental principles, ensuring data and application availability even during disruptive events. These principles prioritize data protection, rapid recovery, and cost-effectiveness.

- Data Replication: Data is replicated from the primary environment to a secondary, geographically diverse location within the cloud provider’s infrastructure. This redundancy ensures that a copy of the data is always available, minimizing data loss in case of a disaster. The replication strategy depends on the Recovery Point Objective (RPO) and Recovery Time Objective (RTO) requirements, with options ranging from near real-time replication to periodic backups.

- Automation: Automation is key to simplifying and accelerating the recovery process. Cloud platforms offer tools to automate failover procedures, system provisioning, and data synchronization. This reduces the manual effort required during a disaster, decreasing the RTO.

- Scalability and Elasticity: Cloud DR solutions leverage the inherent scalability of cloud infrastructure. During a disaster, resources can be rapidly scaled up to meet the demands of the recovered applications, ensuring performance and availability. After the disaster, resources can be scaled down to optimize costs.

- Cost Optimization: Cloud DR models offer various cost optimization strategies. Pay-as-you-go pricing, the ability to only pay for resources when needed, and the use of less expensive storage tiers for backup data can significantly reduce the total cost of ownership (TCO) compared to on-premise solutions.

- Security and Compliance: Cloud providers offer robust security features and compliance certifications, ensuring that DR solutions meet stringent security and regulatory requirements. Data encryption, access controls, and regular security audits are standard practices.

Cloud Disaster Recovery Models

Different cloud DR models offer varying levels of protection, cost, and complexity. The choice of a specific model depends on the organization’s RPO and RTO requirements, budget, and technical capabilities.

- Backup and Restore: This is the simplest and most cost-effective model. Data is backed up regularly to the cloud. In the event of a disaster, the data is restored from the backups.

  - RPO: Determined by the backup frequency.

  - RTO: Can be relatively long, as the entire system needs to be restored.

  - Cost: Lowest cost, primarily involving storage costs.

  - Suitability: Suitable for non-critical applications with longer acceptable downtime.

- Pilot Light: A minimal version of the core infrastructure is always running in the cloud. This includes essential components like database servers. In a disaster, the remaining infrastructure is provisioned and scaled up.

  - RPO: Typically short, as data is replicated to the always-on core components.

  - RTO: Moderate, as some infrastructure is already running.

  - Cost: Moderate, with some ongoing infrastructure costs.

  - Suitability: Suitable for applications with moderate RTO requirements.

- Warm Standby: A fully functional, but scaled-down, environment is always running in the cloud. The environment mirrors the production environment but with fewer resources. Data is synchronized continuously.

  - RPO: Very short, often near real-time.

  - RTO: Relatively short, as the environment is already prepared.

  - Cost: Higher than Pilot Light, due to the ongoing infrastructure costs.

  - Suitability: Suitable for applications with stricter RTO requirements.

- Hot Standby: An exact replica of the production environment is always running in the cloud, ready to take over immediately. All data and applications are synchronized in real-time.

  - RPO: Near zero, as data is continuously synchronized.

  - RTO: Very short, often minutes.

  - Cost: Highest cost, due to the full duplication of resources.

  - Suitability: Suitable for critical applications requiring minimal downtime.
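
The trade-off between the four models can be sketched as a simple lookup: walk the models from cheapest to most expensive and pick the first one whose recovery characteristics satisfy the requirement. The sketch below is illustrative only. The durations attached to each model are assumptions chosen for the example, not figures from any provider, and the names `DrModel`, `MODELS`, and `cheapest_model` are hypothetical.

```python
from dataclasses import dataclass
from datetime import timedelta
from typing import Optional


@dataclass(frozen=True)
class DrModel:
    name: str
    typical_rto: timedelta  # assumed, illustrative value
    typical_rpo: timedelta  # assumed, illustrative value


# Ordered from lowest to highest cost, following the descriptions above.
# The durations are placeholder assumptions; replace them with values
# measured in your own DR tests.
MODELS = [
    DrModel("Backup and Restore", timedelta(hours=24), timedelta(hours=24)),
    DrModel("Pilot Light", timedelta(hours=4), timedelta(hours=1)),
    DrModel("Warm Standby", timedelta(hours=1), timedelta(minutes=5)),
    DrModel("Hot Standby", timedelta(minutes=5), timedelta(seconds=30)),
]


def cheapest_model(required_rto: timedelta, required_rpo: timedelta) -> Optional[DrModel]:
    """Return the cheapest model whose assumed RTO and RPO meet the requirement."""
    for model in MODELS:
        if model.typical_rto <= required_rto and model.typical_rpo <= required_rpo:
            return model
    return None  # no listed model is fast enough


# Example: an application that tolerates 2 hours of downtime
# and 15 minutes of data loss.
choice = cheapest_model(timedelta(hours=2), timedelta(minutes=15))
```

With these assumed figures, `choice` is the Warm Standby model: Pilot Light is cheaper, but its assumed 4-hour RTO exceeds the 2-hour requirement.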

Advantages and Disadvantages of Cloud Disaster Recovery Compared to On-Premise Solutions

Cloud DR offers several advantages over traditional on-premise solutions, but it also presents some disadvantages that organizations must consider. The decision to use cloud DR depends on a careful evaluation of these factors.

- Advantages:

  - Cost-Effectiveness: Cloud DR can be more cost-effective than on-premise solutions, due to the elimination of hardware costs, reduced staffing requirements, and pay-as-you-go pricing models.

  - Scalability and Flexibility: Cloud DR solutions offer unparalleled scalability and flexibility. Resources can be rapidly scaled up or down based on demand, providing agility and responsiveness.

  - Simplified Management: Cloud providers handle much of the infrastructure management, reducing the burden on IT staff.

  - Geographic Redundancy: Cloud providers offer geographically diverse data centers, providing robust protection against regional disasters.

  - Automation: Cloud platforms provide extensive automation capabilities for DR processes, reducing manual effort and improving recovery times.

- Disadvantages:

  - Internet Dependency: Cloud DR relies on a stable internet connection. Outages can disrupt access to the recovery environment.

  - Security Concerns: Organizations must trust their cloud provider to protect their data and systems. Security is a shared responsibility, and organizations must still implement their own security controls.

  - Vendor Lock-in: Migrating data and applications between cloud providers can be complex and costly.

  - Compliance Challenges: Organizations must ensure that their cloud DR solutions comply with relevant regulations and industry standards.

  - Data Transfer Costs: Transferring large amounts of data to and from the cloud can incur significant costs.

Defining Recovery Objectives

Establishing clear recovery objectives is paramount for effective disaster recovery planning in the cloud. These objectives define the acceptable limits of downtime and data loss, guiding the selection of appropriate recovery strategies and technologies. They ensure that business operations can resume within an acceptable timeframe and with minimal data loss following a disruptive event. This section will delve into the critical components of recovery objectives, specifically focusing on Recovery Time Objective (RTO) and Recovery Point Objective (RPO), and how to determine their values based on business requirements.

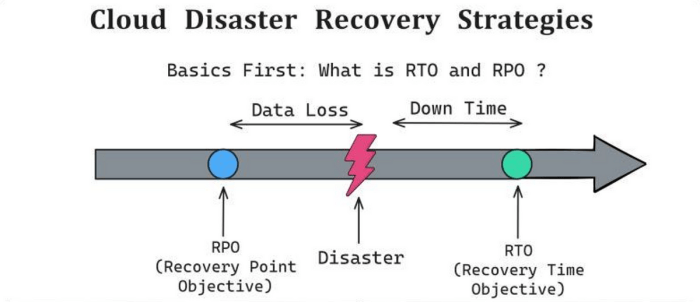

Understanding Recovery Time Objective (RTO) and Recovery Point Objective (RPO)

Recovery Time Objective (RTO) and Recovery Point Objective (RPO) are fundamental metrics in disaster recovery planning. They quantify the acceptable downtime and data loss that a business can tolerate during a disruptive event. These objectives directly influence the design and implementation of the disaster recovery strategy.

Recovery Time Objective (RTO): The maximum acceptable time that a system or application can be unavailable after a disaster. It represents the duration from the moment a disruption occurs to the point when the system is restored to its operational state.

Recovery Point Objective (RPO): The maximum acceptable amount of data loss measured in time. It represents the point in time to which data must be recovered after a disaster. This dictates how frequently data backups and replication must occur.

These two objectives are interconnected. A lower RTO typically requires a more sophisticated and potentially more expensive recovery strategy, such as automated failover. Similarly, a lower RPO demands more frequent backups or real-time data replication, impacting storage and bandwidth costs. For instance, a financial trading platform might require an RTO of minutes and an RPO of seconds, necessitating highly available and real-time data replication.

Conversely, a less critical application might have an RTO of hours and an RPO of several hours, allowing for less stringent and more cost-effective recovery mechanisms.
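
During a DR test, both objectives can be checked from three timestamps: when the disruption began, the last point in time to which data can be recovered, and when service was restored. The following is a minimal sketch of that arithmetic. The function names and the drill timestamps are hypothetical, chosen only for illustration.

```python
from datetime import datetime, timedelta


def achieved_rto(disruption_at: datetime, restored_at: datetime) -> timedelta:
    """Downtime actually experienced: from disruption until service is restored."""
    return restored_at - disruption_at


def achieved_rpo(disruption_at: datetime, last_recoverable_at: datetime) -> timedelta:
    """Data-loss window: from the last recoverable point until the disruption."""
    return disruption_at - last_recoverable_at


def meets_objectives(disruption_at, last_recoverable_at, restored_at, rto, rpo) -> bool:
    """True only if both the measured RTO and the measured RPO are within target."""
    return (
        achieved_rto(disruption_at, restored_at) <= rto
        and achieved_rpo(disruption_at, last_recoverable_at) <= rpo
    )


# Hypothetical timestamps recorded during a failover drill.
disruption = datetime(2024, 1, 15, 10, 0)
last_replicated = datetime(2024, 1, 15, 9, 58)
restored = datetime(2024, 1, 15, 10, 40)

drill_ok = meets_objectives(
    disruption,
    last_replicated,
    restored,
    rto=timedelta(hours=1),
    rpo=timedelta(minutes=15),
)
```

In this drill the measured downtime is 40 minutes and the data-loss window is 2 minutes, so targets of 1 hour (RTO) and 15 minutes (RPO) are both met.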

Determining Acceptable RTO and RPO Values

Determining the appropriate RTO and RPO values requires a thorough assessment of the business impact of downtime and data loss for each application and dataset. This assessment typically involves business stakeholders, application owners, and IT personnel. The process involves the following key steps:

- Business Impact Analysis (BIA): Conduct a Business Impact Analysis (BIA) to identify the potential impact of downtime and data loss on critical business functions. This involves assessing the financial, operational, and reputational consequences of disruptions. For example, a BIA for an e-commerce platform would analyze the revenue loss per hour of downtime, the impact on customer satisfaction, and the potential damage to brand reputation.

- Application Categorization: Categorize applications based on their criticality to the business. This typically involves classifying applications into tiers, such as:

  - Tier 1: Mission-critical applications essential for immediate business operations (e.g., core banking systems).

  - Tier 2: Important applications supporting key business functions (e.g., customer relationship management systems).

  - Tier 3: Non-critical applications that support business operations but are not essential for immediate functionality (e.g., internal communication tools).

- Data Sensitivity Assessment: Evaluate the sensitivity of data stored within each application. This includes assessing the potential impact of data loss on regulatory compliance, legal obligations, and data privacy. For instance, healthcare data subject to HIPAA typically warrants stringent RPO and RTO targets.

- Stakeholder Consultation: Engage with business stakeholders to determine the acceptable levels of downtime and data loss. This involves understanding their tolerance for disruption and the financial implications of exceeding these limits. This process ensures that the recovery objectives align with business needs and priorities.

- Cost-Benefit Analysis: Perform a cost-benefit analysis to determine the optimal balance between recovery costs and the potential impact of downtime and data loss. Implementing a highly resilient disaster recovery solution can be expensive. This analysis helps determine the appropriate level of investment.

The output of this process should be a clear definition of RTO and RPO values for each application or data set. For instance, a Tier 1 application might have an RTO of less than 1 hour and an RPO of 15 minutes, while a Tier 3 application might have an RTO of 24 hours and an RPO of 4 hours.
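
Once per-tier objectives exist, they can be recorded as data and used to sanity-check a backup schedule. The sketch below uses the Tier 1 and Tier 3 values from the example above; the Tier 2 values are an assumption added purely for illustration. It also assumes a simplified model in which a backup captures the state at its start time and only becomes usable once it completes, so the worst-case data loss is the backup interval plus the backup duration.

```python
from datetime import timedelta

# Tier 1 and Tier 3 follow the example in the text.
# Tier 2 is an assumed placeholder, not a value from the text.
TIER_OBJECTIVES = {
    1: {"rto": timedelta(hours=1), "rpo": timedelta(minutes=15)},
    2: {"rto": timedelta(hours=8), "rpo": timedelta(hours=1)},  # assumption
    3: {"rto": timedelta(hours=24), "rpo": timedelta(hours=4)},
}


def worst_case_data_loss(backup_interval: timedelta,
                         backup_duration: timedelta = timedelta(0)) -> timedelta:
    """Worst case under the simplified model: a failure occurs just before
    the next backup completes, so the last usable backup is one full
    interval (plus one backup duration) old."""
    return backup_interval + backup_duration


def backup_schedule_meets_rpo(tier: int,
                              backup_interval: timedelta,
                              backup_duration: timedelta = timedelta(0)) -> bool:
    """Check a periodic backup schedule against the RPO recorded for a tier."""
    rpo = TIER_OBJECTIVES[tier]["rpo"]
    return worst_case_data_loss(backup_interval, backup_duration) <= rpo


# Hourly backups are far too infrequent for Tier 1 but fine for Tier 3.
tier1_hourly_ok = backup_schedule_meets_rpo(1, timedelta(hours=1))
tier3_hourly_ok = backup_schedule_meets_rpo(3, timedelta(hours=1))
```

A check like this makes the link between RPO and backup frequency explicit: a 15-minute RPO cannot be met by a schedule whose interval plus run time exceeds 15 minutes.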

Designing a Framework for Prioritizing Applications

Prioritizing applications is essential for allocating resources and focusing disaster recovery efforts. A well-defined framework ensures that the most critical applications are recovered first, minimizing the impact on business operations. The framework should be based on the application criticality determined during the BIA process.

Here’s a structured approach to prioritizing applications:

- Application Tiers: Assign applications to tiers based on their criticality, as previously defined. Tier 1 applications are the highest priority, followed by Tier 2, and then Tier 3. This tiered approach directly influences the order of recovery.

- Dependencies Mapping: Identify the dependencies between applications. Some applications rely on others to function correctly. These dependencies must be understood to ensure the dependent applications are recovered after their dependencies. For example, an e-commerce platform might depend on a payment processing system.

- Recovery Order: Define a recovery order based on application tiers and dependencies. Tier 1 applications, together with any services they depend on, are recovered first, followed by Tier 2, and so on. This ensures that critical business functions are restored promptly.

- Resource Allocation: Allocate resources (compute, storage, network bandwidth) based on application priorities. Tier 1 applications typically receive the highest allocation of resources to ensure rapid recovery.

- Testing and Validation: Regularly test and validate the recovery order and resource allocation to ensure that the disaster recovery plan functions as intended. This involves simulating disaster scenarios and verifying that applications are recovered according to the defined priorities.

This framework allows for a structured and efficient approach to disaster recovery, ensuring that critical business functions are prioritized and recovered in a timely manner. The framework is dynamic and should be reviewed and updated regularly to reflect changes in business requirements, application dependencies, and technology. For example, if a new application is introduced or an existing application’s criticality changes, the framework needs to be updated to reflect these changes.
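
One way to combine tiers and dependencies into a concrete recovery order is a topological sort that, among the applications whose dependencies are already recovered, always picks the most critical tier next. The following is a minimal sketch of that idea, not a prescribed algorithm. The function name `recovery_order` and the sample applications are hypothetical.

```python
import heapq


def recovery_order(tiers, depends_on):
    """Return application names in recovery order.

    tiers:      dict mapping application name -> tier (1 is most critical)
    depends_on: dict mapping application name -> set of applications that
                must be recovered before it
    """
    remaining = {app: set(depends_on.get(app, ())) for app in tiers}
    dependents = {app: [] for app in tiers}
    for app, deps in remaining.items():
        for dep in deps:
            if dep not in tiers:
                raise ValueError(f"unknown dependency: {dep}")
            dependents[dep].append(app)

    # Applications with no outstanding dependencies, ordered by (tier, name).
    ready = [(tiers[app], app) for app, deps in remaining.items() if not deps]
    heapq.heapify(ready)

    order = []
    while ready:
        _, app = heapq.heappop(ready)
        order.append(app)
        for child in dependents[app]:
            remaining[child].discard(app)
            if not remaining[child]:
                heapq.heappush(ready, (tiers[child], child))

    if len(order) != len(tiers):
        raise ValueError("dependency cycle detected")
    return order


# Hypothetical application inventory.
tiers = {"storefront": 1, "payments": 1, "crm": 2, "database": 2, "wiki": 3}
depends_on = {"storefront": {"payments", "database"}, "crm": {"database"}}

order = recovery_order(tiers, depends_on)
# order == ["payments", "database", "storefront", "crm", "wiki"]
```

Note that the Tier 2 database is recovered before the Tier 1 storefront because the storefront cannot start without it. This greedy ordering is deliberately simple; a production runbook might additionally raise the priority of any service that a Tier 1 application depends on.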

Planning and Preparation

Effective disaster recovery in the cloud hinges on meticulous planning and preparation. This phase establishes the foundation for a resilient and recoverable infrastructure. Without a well-defined plan, recovery efforts can be chaotic, time-consuming, and potentially unsuccessful, leading to significant data loss and downtime. This section focuses on critical steps required to build a robust disaster recovery strategy.

Organizing a Checklist of Essential Steps for Creating a Comprehensive Disaster Recovery Plan

A structured checklist ensures all critical aspects of disaster recovery are addressed, minimizing oversight and streamlining the planning process. This checklist should be regularly reviewed and updated to reflect changes in the cloud environment, application architecture, and business requirements.

- Define Recovery Objectives: Clearly articulate Recovery Time Objectives (RTOs) and Recovery Point Objectives (RPOs) for each application and data set. RTO defines the maximum acceptable downtime, while RPO defines the maximum acceptable data loss. These objectives drive all subsequent planning decisions. For example, a critical financial application might have an RTO of minutes and an RPO of seconds, requiring real-time replication, whereas a less critical application might have an RTO of hours and an RPO of hours.

- Assess Risks and Threats: Identify potential threats and vulnerabilities specific to the cloud environment and the organization’s applications. Consider both natural disasters (e.g., earthquakes, hurricanes) and man-made threats (e.g., cyberattacks, human error). Perform a Business Impact Analysis (BIA) to determine the potential impact of each threat on business operations.

- Select Recovery Strategies: Choose appropriate recovery strategies based on the RTOs, RPOs, and risk assessment. Common strategies include:

  - Backup and Restore: Regularly back up data and systems to a separate location. This is a cost-effective solution for less critical applications.

  - Pilot Light: Maintain a minimal, always-on environment in the recovery region. This environment can be quickly scaled up to handle the full workload during a disaster.

  - Warm Standby: Maintain a scaled-down but functional environment in the recovery region. The environment is constantly updated with data, and can be scaled up more quickly than a pilot light.

  - Hot Standby/Active-Active: Maintain a fully functional, production-ready environment in the recovery region. This provides the fastest recovery time.

- Design the Recovery Architecture: Develop a detailed architecture diagram outlining the recovery environment, including infrastructure components, networking, and data replication mechanisms. This architecture should align with the chosen recovery strategies.

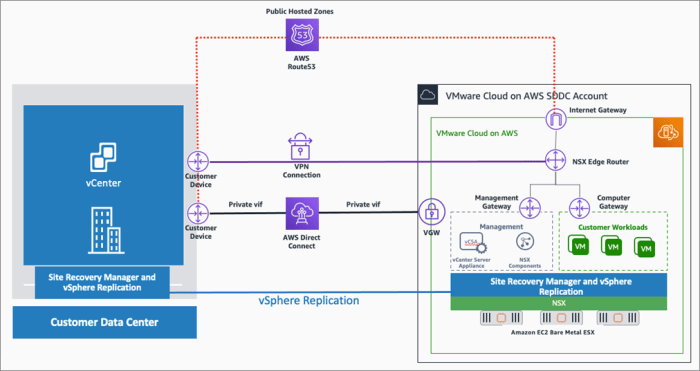

- Implement Data Replication and Backup: Implement data replication mechanisms to ensure data consistency between the primary and recovery regions. Establish a robust backup strategy, including frequency, retention policies, and testing procedures. Examples of data replication tools include cloud-provider-specific services such as AWS Database Migration Service (DMS) or Azure Database Migration Service.

- Develop Recovery Procedures: Create step-by-step procedures for recovering applications and data. These procedures should be clear, concise, and easily understood by the recovery team. The procedures should cover all aspects of the recovery process, from initiating the failover to verifying the restored functionality.

- Establish Communication and Notification Protocols: Define clear communication channels and notification procedures for all stakeholders during a disaster. This includes internal teams, external vendors, and potentially, customers.

- Document the Plan: Create a comprehensive disaster recovery plan document that includes all the above information. This document should be readily accessible to the recovery team and regularly updated.

- Test the Plan: Regularly test the disaster recovery plan to ensure its effectiveness. Testing should include failover simulations, data recovery tests, and performance validation.

- Train the Recovery Team: Provide training to the recovery team on the disaster recovery plan, procedures, and tools. Regular training ensures the team is prepared to respond effectively during a real disaster.

Creating a Template for Documenting Roles, Responsibilities, and Contact Information for the Recovery Team

A well-defined recovery team structure, with clearly assigned roles and responsibilities, is crucial for efficient disaster recovery. A template helps ensure consistency and facilitates rapid communication and action during a crisis. This template should be readily accessible and easily updated as team members and responsibilities change.

| Role | Responsibilities | Primary Contact | Secondary Contact | Contact Information (Phone, Email) |
|---|---|---|---|---|
| Disaster Recovery Manager | Overall responsibility for the disaster recovery plan, coordinating the recovery team, and making critical decisions. | [Name] | [Name] | [Phone, Email] |
| Infrastructure Lead | Responsible for the recovery of infrastructure components, including servers, networks, and storage. | [Name] | [Name] | [Phone, Email] |
| Application Lead | Responsible for the recovery of specific applications, including application configuration and data restoration. | [Name] | [Name] | [Phone, Email] |
| Database Administrator | Responsible for the recovery of databases, including data replication, backup restoration, and data consistency. | [Name] | [Name] | [Phone, Email] |
| Network Administrator | Responsible for restoring network connectivity, including routing, firewalls, and load balancers. | [Name] | [Name] | [Phone, Email] |
| Security Officer | Responsible for ensuring the security of the recovery environment, including access control, data encryption, and vulnerability management. | [Name] | [Name] | [Phone, Email] |
| Communication Lead | Responsible for communicating with stakeholders, including internal teams, external vendors, and customers. | [Name] | [Name] | [Phone, Email] |

This template provides a framework for documenting the recovery team’s structure and contact information. Populate each row with the relevant details for the role, and keep the information up to date. The secondary contact column is especially important, as it provides an alternate contact if the primary contact is unavailable during a disaster.

Demonstrating How to Select Appropriate Cloud Services and Tools for Disaster Recovery

Selecting the right cloud services and tools is crucial for implementing an effective and cost-efficient disaster recovery solution. The choice of services should align with the defined RTOs, RPOs, and recovery strategies. Cloud providers offer a wide range of services tailored for disaster recovery.

- Compute Services:

  - Example: Amazon EC2, Azure Virtual Machines, Google Compute Engine.

  - Use Case: Deploying and managing virtual machines in the recovery region. The choice of instance type and configuration should match the production environment’s needs. Consider auto-scaling to dynamically adjust compute resources based on demand.

- Storage Services:

  - Example: Amazon S3, Azure Blob Storage, Google Cloud Storage.

  - Use Case: Storing backups and replicating data between regions. Consider using object storage for cost-effective backup and archival, and block storage for applications requiring high performance and low latency. Data replication features provided by these services (e.g., S3 Cross-Region Replication) can automatically copy data to a secondary region.

- Database Services:

  - Example: Amazon RDS, Azure SQL Database, Google Cloud SQL.

  - Use Case: Replicating and recovering databases in the recovery region. Many cloud providers offer built-in replication features for their database services, such as read replicas or failover mechanisms.

- Networking Services:

  - Example: Amazon VPC, Azure Virtual Network, Google Cloud VPC.

  - Use Case: Creating and managing virtual networks in the recovery region. This includes configuring routing, firewalls, and load balancers. Cloud providers often offer features like VPN gateways and dedicated connectivity services to establish secure connections between on-premises sites and the primary and recovery regions.

- Backup and Recovery Services:

  - Example: AWS Backup, Azure Backup, Google Cloud Backup and DR.

  - Use Case: Automating and managing backups, and orchestrating the recovery process. These services typically offer features like automated backup scheduling, data retention policies, and one-click recovery. They simplify the process of backing up and restoring data, reducing the complexity and time required for disaster recovery.

- Orchestration and Automation Tools:

  - Example: AWS CloudFormation, Azure Resource Manager, Google Cloud Deployment Manager.

  - Use Case: Automating the deployment and configuration of infrastructure in the recovery region. These tools allow for Infrastructure as Code (IaC), enabling the creation of repeatable and consistent recovery environments. They streamline the deployment process and reduce the risk of human error.

When selecting cloud services, consider factors such as cost, performance, scalability, and ease of management. Evaluate the service’s features and capabilities against the specific requirements of the disaster recovery plan. Choose services that offer the best balance of these factors for the organization’s needs. For instance, consider using a combination of object storage for cost-effective backups and block storage for performance-intensive applications.

Selecting Cloud Disaster Recovery Tools

The selection of appropriate cloud disaster recovery (DR) tools is a critical decision, directly impacting the resilience and recoverability of cloud-based infrastructure and applications. The chosen tools must align with the recovery objectives, budget constraints, and the specific requirements of the organization’s IT environment. Careful evaluation of various features, capabilities, and cost models is essential for making an informed choice that minimizes downtime and data loss in the event of a disaster.

Key Features for Evaluating Disaster Recovery Tools

Selecting the right DR tool involves a detailed assessment of several key features. These features, when carefully evaluated, contribute to a robust and effective DR strategy.

- Recovery Time Objective (RTO) and Recovery Point Objective (RPO) Compliance: The tool’s ability to meet the predefined RTO and RPO is paramount. RTO represents the maximum acceptable downtime, while RPO defines the maximum acceptable data loss. A tool that offers rapid failover and efficient data replication mechanisms is crucial for meeting stringent RTO and RPO requirements. For example, if an organization requires an RTO of 1 hour and an RPO of 15 minutes, the chosen tool must facilitate recovery within that timeframe, ensuring minimal disruption to business operations.

- Automation Capabilities: Automation simplifies and accelerates the DR process. Features like automated failover, failback, and orchestration of recovery workflows minimize manual intervention, reducing the potential for human error and accelerating recovery. Tools that offer scripting capabilities and integration with infrastructure-as-code (IaC) frameworks further enhance automation, enabling repeatable and consistent recovery procedures.

- Data Replication Methods: The data replication strategy employed by the DR tool significantly influences RPO and the overall recovery process. Options include synchronous replication (a write is confirmed only after it reaches the replica, so no committed data is lost), asynchronous replication (the replica lags behind the primary, so the most recent writes may be lost), and continuous data protection (CDP, which journals changes for near-zero data loss). The choice of replication method depends on the criticality of the data and the acceptable level of data loss.

- Scalability and Elasticity: The DR tool should be able to scale resources up or down dynamically to accommodate fluctuating workloads and resource demands. Elasticity ensures that resources are available when needed, without requiring significant upfront investment. This is especially important in cloud environments where resource allocation can be adjusted based on real-time requirements.

- Security Features: Robust security measures are essential to protect data during replication, storage, and recovery. Features like encryption at rest and in transit, access controls, and regular security audits are crucial. The tool should also comply with relevant industry regulations and security standards.

- Monitoring and Alerting: Comprehensive monitoring and alerting capabilities are essential for proactive identification of potential issues. The tool should provide real-time visibility into the DR environment, including replication status, resource utilization, and performance metrics. Alerts should be generated for any deviations from the normal operational parameters, enabling prompt action to mitigate risks.

- Testing and Validation: The ability to regularly test and validate the DR plan is crucial to ensure its effectiveness. The tool should provide features for non-disruptive testing, allowing organizations to simulate failover scenarios without impacting production workloads. Regular testing confirms that the DR plan functions as intended and that recovery procedures are up-to-date and accurate.

- Integration with Existing Infrastructure: Seamless integration with existing infrastructure and applications is vital for minimizing disruption and simplifying the DR process. The tool should support integration with the organization’s cloud provider, operating systems, databases, and other critical components.

- Cost-Effectiveness: The total cost of ownership (TCO) of the DR tool should be carefully considered, including licensing fees, resource consumption, and operational expenses. The tool’s pricing model should be transparent and predictable, allowing for accurate budgeting and cost optimization.
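
A common way to compare candidate tools against criteria like these is a weighted scoring matrix: each criterion receives a weight reflecting its importance, each tool receives a rating per criterion, and the weighted average gives a comparable score. The sketch below assumes a 1 to 5 rating scale. The weights, ratings, and tool names are hypothetical placeholders, not assessments of real products.

```python
def weighted_score(ratings, weights):
    """Weighted average of per-criterion ratings (same scale as the ratings)."""
    total_weight = sum(weights.values())
    return sum(weights[c] * ratings[c] for c in weights) / total_weight


def rank_tools(tools, weights):
    """Return tool names sorted from highest to lowest weighted score."""
    return sorted(
        tools,
        key=lambda name: weighted_score(tools[name], weights),
        reverse=True,
    )


# Hypothetical weights: how much each criterion matters to the organization.
weights = {"rto_rpo": 5, "automation": 3, "security": 4, "cost": 3}

# Hypothetical ratings on a 1-5 scale, gathered during evaluation.
tools = {
    "tool_a": {"rto_rpo": 5, "automation": 4, "security": 4, "cost": 2},
    "tool_b": {"rto_rpo": 3, "automation": 3, "security": 4, "cost": 5},
}

ranking = rank_tools(tools, weights)
```

Here `tool_a` ranks first (59/15, roughly 3.93) ahead of `tool_b` (55/15, roughly 3.67) because RTO/RPO compliance carries the highest weight. Changing the weights to favor cost would reverse the result, which is why the weights should be agreed with stakeholders before ratings are collected.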

Popular Cloud-Based Disaster Recovery Solutions

Several cloud-based DR solutions are available, each with its own strengths and weaknesses. These solutions cater to diverse needs and budgets, offering various features and capabilities.

- AWS Elastic Disaster Recovery (AWS DRS): AWS DRS provides a cost-effective solution for replicating on-premises or cloud-based servers to AWS. It uses continuous block-level replication, enabling quick failover and failback. It is known for its ease of use and integration with other AWS services, and is a good fit for organizations already heavily invested in the AWS ecosystem.

- Azure Site Recovery (ASR): Azure Site Recovery is Microsoft’s DR-as-a-Service (DRaaS) offering, designed for replicating workloads to Azure. It supports a wide range of operating systems, applications, and virtualization platforms. ASR offers features like automated replication, failover, and failback, and it integrates seamlessly with other Azure services. This solution is well-suited for organizations using Azure or those with a mixed on-premises and cloud environment.

- Google Cloud DR solutions: Google Cloud offers various DR options, including its Backup and DR service and solutions built on top of Compute Engine, Cloud Storage, and other services. These enable organizations to replicate and recover their workloads within the Google Cloud environment, supporting both data replication and application recovery with a focus on automation and scalability. This is a good option for organizations that use Google Cloud as their primary cloud provider.

- Veeam Cloud Connect: Veeam provides a comprehensive data protection and DR solution that supports cloud-based DR. Veeam Cloud Connect enables organizations to replicate their workloads to a service provider or a secondary cloud environment. It supports various replication methods, including image-based replication and file-level replication. Veeam is known for its ease of use and its integration with VMware and Hyper-V virtualization platforms.

- Zerto: Zerto offers a continuous data protection and DR solution that provides near-zero RPOs. It replicates data at the block level, enabling rapid recovery and minimal data loss. Zerto supports both on-premises and cloud-based DR scenarios, and it integrates with various virtualization platforms and cloud providers. Zerto is designed for organizations that require very low RPOs and minimal downtime.

Cost Implications of Different Disaster Recovery Tool Options

The cost of a DR solution varies significantly depending on several factors, including the chosen tool, the complexity of the environment, the required RTO and RPO, and the amount of data being protected. A thorough understanding of the cost implications of different options is crucial for budget planning and cost optimization.

- Pay-as-you-go vs. Subscription-based Pricing: DR tools are typically offered with either a pay-as-you-go or a subscription-based pricing model. Pay-as-you-go models charge based on resource consumption (e.g., storage, compute, network bandwidth) and the duration of usage. Subscription-based models offer a fixed monthly or annual fee, providing cost predictability but potentially leading to higher costs if resources are underutilized. The choice depends on the organization’s workload patterns and resource requirements.

- Resource Consumption: The amount of storage, compute, and network bandwidth consumed by the DR solution directly impacts the cost. Synchronous replication typically requires more resources than asynchronous replication, because every write must be confirmed at the recovery site before it completes. The frequency of data replication and the size of the data being protected also influence resource consumption. Organizations should carefully estimate their resource requirements to avoid unexpected costs.

- Licensing Fees: Some DR tools require licensing fees, which can be a significant cost component. Licensing models vary, including per-server, per-CPU, or per-user licensing. The licensing fees should be factored into the total cost of ownership (TCO) analysis.

- Operational Costs: Operational costs include the costs associated with managing and maintaining the DR environment. These costs include staff time, training, and the cost of any third-party services used. Automation and orchestration capabilities can help reduce operational costs by minimizing manual intervention.

- Recovery Testing Costs: Regularly testing the DR plan is essential to ensure its effectiveness. The cost of recovery testing includes the cost of the resources used during testing and the cost of any downtime. Automated testing tools and non-disruptive testing methods can help minimize these costs.

- Hidden Costs: Organizations should be aware of potential hidden costs, such as the cost of data transfer, the cost of storage in the DR environment, and the cost of any professional services required for implementation and management. A comprehensive cost analysis should consider all potential costs.

- Example: Consider two hypothetical DR solutions: Solution A (pay-as-you-go) and Solution B (subscription-based). Solution A charges $0.10 per GB of storage per month and $0.05 per GB of data transferred. Solution B charges a flat fee of $1,000 per month for unlimited storage and data transfer. If an organization stores 5 TB (5,120 GB) of data and transfers 1 TB (1,024 GB) of data per month, the cost for Solution A would be ($0.10 × 5,120) + ($0.05 × 1,024) = $512 + $51.20 = $563.20. Solution B would cost $1,000 per month. In this case, Solution A is more cost-effective. However, if the organization stores 20 TB (20,480 GB) of data and transfers 5 TB (5,120 GB) of data per month, Solution A would cost ($0.10 × 20,480) + ($0.05 × 5,120) = $2,048 + $256 = $2,304, making Solution B more cost-effective. This demonstrates the importance of carefully evaluating resource consumption and pricing models.
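The comparison in the example can be reproduced with a few lines of Python. This is a minimal sketch: the function names are illustrative, and the rates and flat fee are the hypothetical figures from the example, not real vendor pricing.

```python
def pay_as_you_go_cost(storage_gb, transfer_gb, storage_rate=0.10, transfer_rate=0.05):
    """Monthly cost in dollars for the hypothetical Solution A."""
    return storage_gb * storage_rate + transfer_gb * transfer_rate


def cheaper_option(storage_gb, transfer_gb, flat_fee=1000.0):
    """Return (label, cost_a, cost_b), where label names the cheaper solution."""
    cost_a = pay_as_you_go_cost(storage_gb, transfer_gb)
    label = "A" if cost_a < flat_fee else "B"
    return label, cost_a, flat_fee


if __name__ == "__main__":
    # The two usage profiles from the example, converted from TB to GB.
    for storage_tb, transfer_tb in [(5, 1), (20, 5)]:
        label, cost_a, cost_b = cheaper_option(storage_tb * 1024, transfer_tb * 1024)
        print(f"{storage_tb} TB stored, {transfer_tb} TB transferred: "
              f"A=${cost_a:,.2f}, B=${cost_b:,.2f}, cheaper: Solution {label}")
```

Running the same function over a range of projected data volumes shows where the two pricing models cross over for a given workload.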

Building the Disaster Recovery Environment

Establishing a robust disaster recovery (DR) environment in the cloud is crucial for business continuity. This involves creating a replica of the production environment in a separate cloud region or availability zone, ensuring data and applications are readily available in case of an outage. This process requires meticulous planning and execution to minimize downtime and data loss.

Setting Up a Replica Environment in the Cloud

Creating a replica environment necessitates a series of carefully orchestrated steps. The objective is to mirror the production environment as closely as possible, ensuring minimal discrepancies in configuration and data.

- Choosing a Cloud Region/Availability Zone: Select a geographically separate region for the recovery environment, or at minimum a separate availability zone. A separate region ensures that a regional outage does not affect both the production and recovery environments, whereas a separate availability zone within the same region protects only against a zone-level failure. The selection should consider latency requirements and regulatory compliance. For example, if the production environment is in the US East region, the recovery environment might be in the US West region.

- Provisioning Infrastructure: Allocate the necessary virtual machines (VMs), storage, and network resources in the chosen region/zone. This includes specifying the size, type, and number of VMs, selecting appropriate storage tiers (e.g., SSD, HDD), and configuring the network infrastructure. The infrastructure should be scaled to match the production environment’s capacity, considering peak loads and future growth.

- Configuring Virtual Machines: Configure the VMs with the same operating systems, software, and configurations as the production VMs. This includes installing applications, setting up user accounts, and configuring security settings. Automate this process using Infrastructure as Code (IaC) tools like Terraform or CloudFormation to ensure consistency and repeatability.

- Setting up Storage Replication: Implement data replication mechanisms to copy data from the production environment to the recovery environment. Cloud providers offer various options for data replication, including block-level replication, object storage replication, and database replication. The choice depends on the Recovery Point Objective (RPO) and Recovery Time Objective (RTO).

- Testing and Validation: Regularly test the replica environment to ensure it is functioning correctly. This includes verifying data integrity, application functionality, and failover procedures. Testing should be performed at regular intervals to identify and address any issues before a disaster occurs.
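One way to check that the replica mirrors production is to compare configuration inventories from both sites. The sketch below assumes each inventory has already been exported as a dictionary keyed by VM name; the field names (`os`, `size`, `region`, and so on) are hypothetical and would depend on the provider and tooling in use.

```python
def find_drift(production, recovery, ignore=("region", "private_ip")):
    """Return {vm_name: {field: (prod_value, dr_value)}} for settings that differ.

    Fields listed in `ignore` are expected to differ between sites.
    """
    drift = {}
    for name, prod_spec in production.items():
        dr_spec = recovery.get(name)
        if dr_spec is None:
            # The VM exists in production but has no counterpart in the DR site.
            drift[name] = {"present_in_recovery": (True, False)}
            continue
        diffs = {
            key: (prod_spec.get(key), dr_spec.get(key))
            for key in sorted(set(prod_spec) | set(dr_spec))
            if key not in ignore and prod_spec.get(key) != dr_spec.get(key)
        }
        if diffs:
            drift[name] = diffs
    return drift


if __name__ == "__main__":
    production = {
        "web-1": {"os": "ubuntu-22.04", "size": "4cpu-16gb", "region": "us-east"},
        "db-1": {"os": "ubuntu-22.04", "size": "8cpu-64gb", "region": "us-east"},
    }
    recovery = {
        "web-1": {"os": "ubuntu-22.04", "size": "2cpu-8gb", "region": "us-west"},
    }
    for vm, diffs in find_drift(production, recovery).items():
        print(vm, diffs)
```

An empty result means no differences were found in the compared fields; it does not prove the environments behave identically, so functional testing is still needed.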

Configuring Network Settings and Security Protocols for the Recovery Site

Properly configuring network settings and security protocols is paramount for protecting the recovery environment and ensuring its accessibility. This includes setting up network connectivity, firewalls, and access controls.

- Network Connectivity: Establish network connectivity between the production and recovery environments. This can be achieved using various methods, such as:

- Virtual Private Network (VPN): Creates a secure tunnel over the internet.

- Direct Connect: Provides a dedicated network connection directly to the cloud provider.

- Cloud-to-Cloud Networking: Uses the cloud provider’s internal network.

The choice depends on the required bandwidth, latency, and security requirements.

- Firewall Configuration: Configure firewalls to control network traffic and protect the recovery environment from unauthorized access. Define rules to allow only necessary traffic to and from the recovery environment. Use a stateful firewall to inspect traffic and block malicious activity.

- Security Groups/Network Access Control Lists (ACLs): Implement security groups or ACLs to control inbound and outbound traffic at the instance or subnet level. These controls act as virtual firewalls, providing an additional layer of security. Restrict access based on source IP addresses, protocols, and ports.

- Identity and Access Management (IAM): Implement IAM policies to control access to resources in the recovery environment. Grant users and applications only the necessary permissions. Regularly review and update IAM policies to maintain a secure environment.

- Encryption: Encrypt data at rest and in transit to protect sensitive information. Use encryption keys managed by the cloud provider or a dedicated key management service.

- Regular Security Audits: Conduct regular security audits to identify vulnerabilities and ensure compliance with security best practices. Use automated tools to scan for misconfigurations and security threats.
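As an illustration of an automated misconfiguration scan, the following sketch flags inbound rules that are open to every address on ports other than an approved list. The rule format (dictionaries with `direction`, `source`, and `port` keys) is an assumption made for this example; real security group or ACL exports have provider-specific shapes.

```python
import ipaddress


def overly_open_rules(rules, allowed_public_ports=(443,)):
    """Return inbound rules that admit traffic from any address on a non-approved port."""
    flagged = []
    for rule in rules:
        network = ipaddress.ip_network(rule["source"])
        # A prefix length of 0 (0.0.0.0/0 or ::/0) matches every address.
        open_to_world = network.prefixlen == 0
        if (rule["direction"] == "inbound" and open_to_world
                and rule["port"] not in allowed_public_ports):
            flagged.append(rule)
    return flagged


if __name__ == "__main__":
    rules = [
        {"direction": "inbound", "source": "0.0.0.0/0", "port": 443},
        {"direction": "inbound", "source": "0.0.0.0/0", "port": 22},
        {"direction": "inbound", "source": "10.0.0.0/16", "port": 5432},
    ]
    for rule in overly_open_rules(rules):
        print("review:", rule)
```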

Creating Scripts for Automating Data and Application Replication

Automating data and application replication is essential for minimizing downtime and ensuring a seamless failover. This involves creating scripts or using tools to automate the replication process.

- Choosing Replication Tools: Select appropriate tools for data and application replication based on the specific requirements and cloud provider. Common tools include:

- Cloud Provider-Specific Services: Such as AWS Elastic Disaster Recovery (DRS), Azure Site Recovery, or Google Cloud Disaster Recovery.

- Third-Party Tools: Offering more flexibility and features.

- Data Replication Scripts: Develop scripts to automate data replication. These scripts should handle the following tasks:

- Identifying Data Sources: Determining the location of the data to be replicated.

- Initiating Replication: Starting the replication process using the chosen tools.

- Monitoring Replication Status: Tracking the progress of the replication and logging any errors.

- Performing Failover: Automating the failover process, which involves switching over to the recovery environment in case of a disaster.

For example, `rsync` can handle file-level replication, while database-specific tools such as `mysqldump` can export MySQL databases for periodic transfer to the recovery environment.

- Application Replication Scripts: Create scripts to automate application replication. These scripts should:

- Deploy Application Components: Deploy the necessary application components to the recovery environment.

- Configure Application Settings: Configure application settings, such as database connections and API endpoints.

- Test Application Functionality: Verify that the application is functioning correctly in the recovery environment.

- Orchestration and Automation: Integrate the data and application replication scripts with an orchestration tool or automation platform to automate the entire DR process. Popular tools include:

- AWS CloudFormation: For AWS.

- Azure Resource Manager (ARM): For Azure.

- Terraform: For multi-cloud deployments.

These tools allow for the creation of automated workflows to replicate data, deploy applications, and test the DR environment.

- Testing the Scripts: Thoroughly test the replication scripts to ensure they function as expected. This includes testing the scripts under different failure scenarios.
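A file-level replication script of the kind described above might look like the following sketch. It wraps `rsync` with a simple retry loop and logs failures. The paths, host name, retry count, and wait time are placeholders, and the `runner` parameter exists only so the logic can be exercised without actually invoking `rsync`.

```python
import subprocess
import time


def build_rsync_command(source_dir, destination, delete=True):
    """Build an rsync invocation: archive mode, compression, optional deletion."""
    command = ["rsync", "-az"]
    if delete:
        # Remove files at the destination that no longer exist at the source.
        command.append("--delete")
    # A trailing slash on the source copies its contents rather than the directory itself.
    command += [source_dir.rstrip("/") + "/", destination]
    return command


def replicate(source_dir, destination, attempts=3, wait_seconds=30, runner=subprocess.run):
    """Run the replication, retrying on failure. Returns the attempt that succeeded."""
    command = build_rsync_command(source_dir, destination)
    for attempt in range(1, attempts + 1):
        result = runner(command, capture_output=True, text=True)
        if result.returncode == 0:
            return attempt
        print(f"attempt {attempt} failed: {result.stderr.strip()}")
        if attempt < attempts:
            time.sleep(wait_seconds)
    raise RuntimeError(f"replication failed after {attempts} attempts")


if __name__ == "__main__":
    # Placeholder source and destination; replace with real values before use.
    print(" ".join(build_rsync_command("/var/www/app", "dr-host:/var/www/app")))
```

In practice a script like this would be scheduled at an interval that fits the RPO and would send its failures to the monitoring system rather than printing them.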

Testing Procedures

The successful implementation of a cloud disaster recovery (DR) strategy hinges on rigorous testing. Testing validates the effectiveness of the DR plan, identifies vulnerabilities, and ensures that recovery objectives are met. Regular testing, conducted with precision and a focus on detail, is crucial for maintaining business continuity and minimizing downtime in the event of a disaster. The following sections outline the essential steps involved in initiating and executing a comprehensive DR test.

Preparing for a Disaster Recovery Test

Prior to initiating a DR test, meticulous preparation is paramount. This preparation ensures a controlled environment, minimizes disruption, and maximizes the likelihood of a successful outcome. Proper preparation involves several key steps.

- Define the Scope and Objectives: Clearly delineate the scope of the test, specifying which systems, applications, and data will be included. Define specific recovery objectives, such as Recovery Time Objective (RTO) and Recovery Point Objective (RPO), to be validated during the test. Document these objectives to serve as benchmarks for success.

- Assemble the Test Team: Establish a dedicated test team comprising individuals with relevant expertise in the systems being tested, network administration, security, and DR procedures. Clearly define roles and responsibilities for each team member. This structure ensures accountability and efficient execution.

- Communicate and Coordinate: Inform all stakeholders, including business units, IT teams, and any third-party providers, about the upcoming test, its schedule, and potential impact. Ensure that all necessary approvals are obtained and that communication channels are established for reporting issues and providing updates.

- Verify Prerequisites: Confirm that all prerequisites are in place. This includes verifying that the DR environment is properly configured, that data replication is current, that network connectivity is established, and that all necessary tools and scripts are available. Check that security configurations, such as access controls and firewalls, are appropriately set up in the DR environment.

- Develop a Test Plan: Create a detailed test plan that outlines the test steps, expected outcomes, and contingency plans. Include specific instructions for initiating the failover, validating the recovered systems, and restoring operations. The plan should also define the criteria for success and failure.

- Back Up Production Data: Before initiating any tests, create a recent backup of the production data. This backup provides a fallback position in case of any unforeseen issues during the test, allowing for a swift return to the pre-test state.
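The prerequisite verification step lends itself to a small pre-flight script. The sketch below is one possible shape: each check is a named function that returns `True` when satisfied, and replication freshness is judged against the RPO. The check names and the way the last replication time is obtained are assumptions for illustration.

```python
from datetime import datetime, timedelta, timezone


def replication_is_current(last_replicated_at, rpo, now=None):
    """True when the most recent replication falls within the RPO window."""
    now = now or datetime.now(timezone.utc)
    return now - last_replicated_at <= rpo


def run_prerequisite_checks(checks):
    """Run each named check and return a list of failure descriptions."""
    failures = []
    for name, check in checks.items():
        try:
            passed = bool(check())
        except Exception as exc:
            # A check that cannot run is treated as a failed prerequisite.
            failures.append(f"{name}: raised {exc!r}")
            continue
        if not passed:
            failures.append(f"{name}: not satisfied")
    return failures


if __name__ == "__main__":
    last_sync = datetime.now(timezone.utc) - timedelta(minutes=4)
    failures = run_prerequisite_checks({
        "replication within RPO": lambda: replication_is_current(last_sync, timedelta(minutes=15)),
        "production backup taken": lambda: True,  # placeholder for a real backup check
    })
    print("ready to test" if not failures else failures)
```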

Initiating a Failover Test

Initiating a failover test involves the deliberate transfer of operations from the primary production environment to the designated DR environment. This process should be executed according to a predefined procedure to minimize disruption and ensure a successful recovery.

- Trigger the Failover: Based on the test plan, initiate the failover process. This may involve manually triggering a failover script, using a DR orchestration tool, or simulating a disaster scenario. Ensure the failover mechanism aligns with the pre-defined procedures documented in the test plan.

- Verify Data Replication: Confirm that data replication has completed successfully and that the DR environment contains the most recent data. Use tools provided by the cloud provider or third-party replication solutions to verify data consistency and integrity.

- Bring Up the DR Environment: Start the applications and systems in the DR environment. This may involve starting virtual machines, configuring network settings, and enabling access to the necessary data and services. Ensure that the environment is fully functional and accessible.

- Validate System Functionality: After the DR environment is operational, perform thorough testing to validate the functionality of the applications and systems. This should include checking critical business processes, data integrity, and user access.

- Test Connectivity and Access: Verify that users and applications can access the DR environment and that network connectivity is correctly established. Test various access methods, such as VPNs, load balancers, and DNS settings, to ensure seamless access.

- Monitor for Errors and Issues: Throughout the failover process and validation phase, continuously monitor for any errors or issues. Record all observations and document any deviations from the expected behavior.
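To measure the failover against the RTO, the steps above can be run through a small timing harness. This is a sketch only: the step functions are placeholders for whatever actually triggers the failover and starts the DR systems, and the `clock` parameter is included so the timing logic can be tested deterministically.

```python
import time


def run_failover(steps, rto_seconds, clock=time.monotonic):
    """Execute (name, action) steps in order and time each one.

    Returns per-step durations, the total elapsed time, and whether the RTO was met.
    """
    timings = []
    start = clock()
    for name, action in steps:
        step_start = clock()
        action()
        timings.append((name, clock() - step_start))
    total = clock() - start
    return {"timings": timings, "total_seconds": total, "rto_met": total <= rto_seconds}


if __name__ == "__main__":
    # Placeholder actions; real steps would call the DR tooling in use.
    steps = [
        ("trigger failover", lambda: time.sleep(0.1)),
        ("start DR systems", lambda: time.sleep(0.2)),
        ("validate functionality", lambda: time.sleep(0.1)),
    ]
    outcome = run_failover(steps, rto_seconds=4 * 60 * 60)
    for name, seconds in outcome["timings"]:
        print(f"{name}: {seconds:.1f}s")
    print("RTO met:", outcome["rto_met"])
```

Recording the duration of each step, not just the total, makes it easier to see which part of the procedure to shorten when the RTO is missed.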

Monitoring the Failover Process and Identifying Issues

Monitoring the failover process is critical for identifying and addressing any issues that may arise during the transition. Effective monitoring provides insights into the performance of the DR environment and enables proactive troubleshooting.

- Establish Monitoring Systems: Implement comprehensive monitoring systems to track the performance and health of the DR environment. Utilize monitoring tools provided by the cloud provider, third-party monitoring solutions, or custom scripts to monitor critical metrics.

- Monitor Key Metrics: Monitor key metrics, including CPU utilization, memory usage, network latency, disk I/O, and application response times. Set up alerts for critical thresholds to be notified of potential issues.

- Check Logs and Events: Regularly review system logs, application logs, and security event logs to identify any errors, warnings, or security breaches. Analyze these logs to understand the root cause of any issues.

- Validate Data Integrity: After failover, perform data integrity checks to ensure data consistency and prevent data loss. Compare data sets between the production and DR environments to verify that the data has been successfully replicated.

- Document Issues and Resolutions: Create a detailed log of all issues encountered during the failover test, including the symptoms, root cause, and resolution steps. This documentation is invaluable for future testing and DR plan improvements.

- Analyze Test Results: At the conclusion of the test, analyze the results against the defined recovery objectives (RTO and RPO). Assess whether the failover was successful and whether the DR environment met the performance and availability requirements.
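Threshold-based alerting on the metrics listed above can be sketched as follows. The metric names and limits are illustrative, and in practice the samples would come from the monitoring tool rather than from literals in the script.

```python
def evaluate_metrics(samples, thresholds):
    """Return (metric, peak_value, limit) tuples for metrics that breach their threshold.

    A metric with no samples is reported with a peak of None, because missing
    data during a failover is itself worth investigating.
    """
    alerts = []
    for metric, limit in thresholds.items():
        values = samples.get(metric, [])
        if not values:
            alerts.append((metric, None, limit))
            continue
        peak = max(values)
        if peak > limit:
            alerts.append((metric, peak, limit))
    return alerts


if __name__ == "__main__":
    thresholds = {"cpu_percent": 85, "memory_percent": 90, "response_ms": 500}
    samples = {
        "cpu_percent": [40, 72, 91],
        "memory_percent": [55, 60, 62],
        "response_ms": [120, 180, 240],
    }
    for metric, peak, limit in evaluate_metrics(samples, thresholds):
        print(f"ALERT {metric}: peak {peak} exceeds limit {limit}")
```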

Testing Procedures: Verifying Recovery

Verifying the successful recovery of applications and data is the ultimate goal of any disaster recovery (DR) plan. Rigorous testing ensures that the DR environment functions as expected, enabling business continuity in the face of disruptions. This section outlines procedures to validate recovery, including data integrity, application functionality, network connectivity, and user access.

Verifying Application and Data Recovery

Successful application and data recovery is the core of a robust DR strategy. It requires methodical verification of both the data’s consistency and the application’s operational capabilities within the recovered environment.

- Data Integrity Checks: The integrity of data is paramount. Data corruption can render the entire recovery process ineffective. Data integrity checks involve comparing data in the recovered environment against the original, production environment.

- Checksum Verification: Employ checksum algorithms (e.g., SHA-256, MD5) to generate unique “fingerprints” for data files. Compare the checksums of files in the recovered and production environments. Discrepancies indicate data corruption.

- Database Consistency Checks: For database systems, utilize built-in consistency check tools. For example, in Oracle, the `DBMS_REPAIR` package can be used to identify and repair data corruption. In MySQL, the `CHECK TABLE` statement checks tables for errors.

- Data Volume Comparisons: Compare the size of data volumes (e.g., tables, filesystems) between the recovered and production environments. Significant differences warrant investigation.

- Application Functionality Testing: Verify that applications function as expected within the recovered environment. This involves testing core functionalities, user workflows, and integrations.

- Functional Testing: Execute a series of test cases to validate critical application features. For example, if the application processes financial transactions, test the ability to create, modify, and delete transactions. If it is an e-commerce platform, test the order placement and payment processing.

- Performance Testing: Assess the performance of applications in the recovered environment. Measure response times, transaction throughput, and resource utilization (CPU, memory, disk I/O). Performance degradation might indicate infrastructure bottlenecks.

- Integration Testing: Verify that the application integrates correctly with other systems. This includes testing API calls, data synchronization processes, and dependencies on external services.

- Example: Consider a retail company with an e-commerce platform. During a DR test, the following verification steps would be performed:

- Verify the data integrity of the product catalog by comparing checksums of product images and descriptions.

- Test the functionality of the shopping cart by adding items, applying discounts, and proceeding to checkout.

- Measure the response time of the product search function to ensure acceptable performance.
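The checksum verification described above can be sketched with Python's standard `hashlib` module. The example assumes both the production and recovered copies are reachable as local directory paths (for instance via mounted volumes), which will not always be the case; the function names are illustrative.

```python
import hashlib
from pathlib import Path


def sha256_of(path, chunk_size=1 << 20):
    """Compute the SHA-256 digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def tree_checksums(root):
    """Map each file's path relative to root onto its SHA-256 digest."""
    root = Path(root)
    return {
        str(path.relative_to(root)): sha256_of(path)
        for path in sorted(root.rglob("*"))
        if path.is_file()
    }


def compare_trees(production_root, recovered_root):
    """Report files that are missing, unexpected, or different in the recovered copy."""
    prod = tree_checksums(production_root)
    rec = tree_checksums(recovered_root)
    return {
        "missing": sorted(set(prod) - set(rec)),
        "unexpected": sorted(set(rec) - set(prod)),
        "mismatched": sorted(k for k in prod.keys() & rec.keys() if prod[k] != rec[k]),
    }
```

A mismatch does not always mean corruption: files changed in production after the last replication will also differ, so results should be read alongside the replication timestamp.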

Validating Network Connectivity and User Access

Effective DR requires seamless network connectivity and user access to the recovered environment. Verifying these aspects ensures that users can continue their work with minimal disruption.

- Network Connectivity Verification: Confirm that network connectivity to the recovered environment is correctly configured and operational.

- Ping Tests: Use the `ping` command to test connectivity to servers and network devices within the recovered environment.

- Traceroute/Tracert: Employ `traceroute` (Linux/macOS) or `tracert` (Windows) to identify the network path and latency to various resources. This helps pinpoint network bottlenecks or routing issues.

- DNS Resolution Tests: Verify that DNS records are correctly configured to point to the recovered environment’s resources. This can be done using the `nslookup` or `dig` commands.

- Firewall Rule Verification: Ensure that firewall rules are correctly configured to allow traffic to and from the recovered environment. This includes checking inbound and outbound rules for essential ports and protocols.

- User Access Verification: Ensure that users can access applications and data in the recovered environment using their existing credentials and permissions.

- Authentication Testing: Test the authentication process by attempting to log in to applications with various user accounts. Verify that users can log in using their existing credentials (e.g., usernames and passwords).

- Authorization Testing: Verify that users have the correct permissions and access rights to the data and applications they need. Test different user roles and permissions to ensure proper access control.

- Single Sign-On (SSO) Verification: If SSO is used, test the ability of users to seamlessly access applications in the recovered environment using their SSO credentials.

- VPN Connectivity Testing: If VPN is used for remote access, test the ability of users to connect to the recovered environment via VPN.

- Example: A financial institution’s DR test would involve:

- Confirming that users can ping critical servers in the recovered environment.

- Verifying that DNS records for the core banking application point to the correct IP addresses in the recovered environment.

- Testing the ability of authorized users to log in to the banking platform and access their accounts.
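The DNS and connectivity checks above can also be scripted. The sketch below uses only Python's standard `socket` module to confirm that a host name resolves to addresses in the recovery environment and that a service port accepts TCP connections. The host name, port, and expected addresses in the usage example are placeholders, and a TCP connect test is used instead of `ping`, since ICMP is often blocked by firewalls.

```python
import socket


def resolve(hostname):
    """Return the sorted set of addresses that the host name resolves to."""
    infos = socket.getaddrinfo(hostname, None)
    return sorted({info[4][0] for info in infos})


def port_is_open(host, port, timeout=3.0):
    """True when a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def check_endpoint(hostname, port, expected_ips):
    """Combine DNS and TCP checks for one service endpoint."""
    addresses = resolve(hostname)
    return {
        "resolved": addresses,
        # Every resolved address should belong to the recovery environment.
        "dns_points_to_dr": bool(addresses) and set(addresses) <= set(expected_ips),
        "port_open": port_is_open(hostname, port),
    }


if __name__ == "__main__":
    # Placeholder endpoint and address; substitute real DR values.
    print(check_endpoint("localhost", 443, ["127.0.0.1", "::1"]))
```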

Testing Procedures: Failback and Cleanup

The final stages of disaster recovery testing involve not only verifying the successful recovery of systems but also ensuring a smooth return to the primary environment and the thorough cleanup of the temporary recovery infrastructure. These processes, failback and cleanup, are critical for maintaining operational continuity and minimizing disruption. Rigorous testing of these procedures is paramount to validate their effectiveness and identify potential vulnerabilities.

Failback to Primary Environment

Failback is the process of returning operations from the recovery environment back to the primary production environment after a successful disaster recovery test. This transition must be carefully planned and executed to avoid data loss or service interruption. The complexity of the failback procedure depends on the chosen disaster recovery strategy and the architecture of the cloud environment. To ensure a successful failback, consider the following steps:

- Data Synchronization: The most critical aspect of failback is ensuring data consistency. This involves synchronizing any data changes that occurred in the recovery environment back to the primary environment. The method of synchronization depends on the data replication strategy used.

- For synchronous replication, minimal data synchronization is needed as the primary environment should already be up-to-date.

- For asynchronous replication, a more complex process is required. The data changes must be identified and replicated back to the primary environment. This process may involve incremental backups, transaction logs, or other mechanisms to capture and apply changes.

- Environment Preparation: The primary environment must be prepared to receive the failback. This includes verifying the health of the primary systems, ensuring sufficient resources are available, and configuring network settings.

- Cutover: The actual cutover process involves switching traffic from the recovery environment back to the primary environment. This may involve updating DNS records, load balancer configurations, or other routing mechanisms. The cutover should be planned to minimize downtime and disruption.

- Validation: After the cutover, it is crucial to validate that the primary environment is functioning correctly and that all services are available. This includes testing applications, verifying data integrity, and monitoring performance.

- Monitoring: Implement robust monitoring during and after the failback to detect any issues and ensure a smooth transition. Monitor critical metrics such as CPU utilization, memory usage, network latency, and application response times.
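The data synchronization step can be illustrated with a deliberately simplified model. The sketch below assumes records carry an `id` and an `updated_at` timestamp and that the most recent write should win; production systems would more commonly rely on transaction logs or the replication tool's own reverse-sync feature, so treat this as a conceptual illustration only.

```python
from datetime import datetime, timezone


def changes_since_failover(recovery_records, failover_time):
    """Select records modified in the recovery environment after the failover."""
    return [r for r in recovery_records if r["updated_at"] > failover_time]


def apply_changes(primary_records, changes):
    """Upsert changed records into the primary data set, keyed by id."""
    merged = {record["id"]: record for record in primary_records}
    for record in changes:
        merged[record["id"]] = record
    return sorted(merged.values(), key=lambda r: r["id"])


if __name__ == "__main__":
    failover_time = datetime(2024, 1, 1, 9, 0, tzinfo=timezone.utc)
    primary = [{"id": 1, "total": 10, "updated_at": datetime(2024, 1, 1, 8, 0, tzinfo=timezone.utc)}]
    recovery = [
        {"id": 1, "total": 12, "updated_at": datetime(2024, 1, 1, 10, 0, tzinfo=timezone.utc)},
        {"id": 2, "total": 7, "updated_at": datetime(2024, 1, 1, 11, 0, tzinfo=timezone.utc)},
    ]
    delta = changes_since_failover(recovery, failover_time)
    print(apply_changes(primary, delta))
```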

Cleanup of Recovery Environment

After a successful failback, the recovery environment should be cleaned up to avoid unnecessary costs and security risks. This process involves de-provisioning resources, deleting data, and removing any temporary configurations. To facilitate a comprehensive cleanup, the following steps should be considered:

- Resource De-provisioning: Identify and de-provision all cloud resources that were created or used in the recovery environment. This includes virtual machines, storage volumes, databases, and network components.

- Data Deletion: Delete any data that was created or modified in the recovery environment during the test. This is particularly important for sensitive data. Ensure secure data deletion methods are used to prevent data breaches.

- Configuration Removal: Remove any temporary configurations that were created for the test, such as network settings, security rules, and access controls.

- Cost Optimization: The cloud provider may offer tools to track the cost of the recovery environment. Analyze the costs incurred during the test and identify areas for optimization.

- Security Hardening: Before deleting the environment, perform a security audit to identify and remediate any potential vulnerabilities, such as credentials, access rules, or data copies created for the test. This ensures that nothing insecure outlives the cleanup.
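Identifying what to de-provision is easier when test resources are tagged at creation time. The sketch below assumes a resource inventory exported as a list of dictionaries and a hypothetical `dr-test-id` tag; the tag names, resource types, and deletion order are illustrative conventions, not provider requirements.

```python
# Delete dependants before the things they depend on (illustrative ordering).
DELETE_ORDER = {"vm": 0, "database": 1, "volume": 2, "network": 3}


def plan_cleanup(resources, test_id, tag_key="dr-test-id"):
    """Return (ids_to_delete_in_order, ids_needing_manual_review) for one test run."""
    to_delete, to_review = [], []
    for resource in resources:
        tags = resource.get("tags", {})
        if tags.get(tag_key) != test_id:
            continue  # not created for this test; leave untouched
        if tags.get("retain") == "true":
            to_review.append(resource["id"])
        else:
            to_delete.append(resource)
    to_delete.sort(key=lambda r: DELETE_ORDER.get(r["type"], len(DELETE_ORDER)))
    return [r["id"] for r in to_delete], to_review


if __name__ == "__main__":
    inventory = [
        {"id": "net-1", "type": "network", "tags": {"dr-test-id": "t-42"}},
        {"id": "vm-1", "type": "vm", "tags": {"dr-test-id": "t-42"}},
        {"id": "vol-1", "type": "volume", "tags": {"dr-test-id": "t-42", "retain": "true"}},
        {"id": "vm-9", "type": "vm", "tags": {"env": "standing-dr"}},
    ]
    print(plan_cleanup(inventory, "t-42"))
```

Producing a plan first, and deleting only after someone has reviewed it, reduces the risk of removing the standing DR resources along with the temporary ones.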

Documenting Failback and Cleanup Results

Thorough documentation of the failback and cleanup processes is essential for future testing and incident response. This documentation should include detailed information about the steps taken, the results obtained, and any issues encountered. The documentation should encompass:

- Test Plan Execution: A detailed record of each step taken during the failback and cleanup processes, including the specific commands used, the time taken for each step, and any deviations from the test plan.

- Data Synchronization Details: Document the data synchronization process, including the tools and methods used, the amount of data synchronized, and the time taken.

- Cutover Procedures: Detailed records of the cutover procedures, including DNS updates, load balancer configurations, and any other routing changes. Document the exact time of cutover and any downtime experienced.

- Validation Results: Record the results of all validation tests, including application functionality, data integrity checks, and performance metrics. Note any discrepancies or issues identified during the validation process.

- Cleanup Process Documentation: Detail all steps taken to clean up the recovery environment, including the de-provisioning of resources, the deletion of data, and the removal of configurations.

- Issue Log: Maintain a comprehensive log of any issues encountered during the failback and cleanup processes, including the root cause, the resolution steps, and any lessons learned.

- Performance Metrics: Track key performance indicators (KPIs) such as the time taken for failback and cleanup, the amount of data synchronized, and the downtime experienced.

- Lessons Learned: Capture any lessons learned during the test, including areas for improvement in the disaster recovery plan, the failback and cleanup procedures, or the testing process. This information should be used to refine the disaster recovery plan and improve the effectiveness of future tests.

Documentation and Reporting

Documenting and reporting are critical components of a robust disaster recovery (DR) strategy. Comprehensive documentation provides a historical record of testing activities, identifies areas of weakness, and facilitates continuous improvement. Reporting offers insights into the effectiveness of the DR plan, enabling informed decision-making and resource allocation. This process ensures that the DR plan remains aligned with evolving business needs and technological advancements.

Template for Documenting Disaster Recovery Test Results

A standardized template is essential for consistent and accurate documentation of DR test results. This ensures all relevant information is captured in a uniform format, simplifying analysis and comparison across different tests. The template should encompass key aspects of the test, including objectives, procedures, observations, and findings.

- Test Identification: This section includes the test name, date, and time of execution, along with the individuals involved (tester, observer, and stakeholders). It should also include a unique test identifier for easy referencing.

- Test Objectives: A clear statement of what the test aimed to achieve. This includes verifying specific recovery time objectives (RTOs) and recovery point objectives (RPOs), and validating the functionality of critical systems.

- Test Scope: Defines the systems, applications, and data included in the test. This scope should align with the DR plan and the business impact analysis (BIA).

- Test Environment: Details about the test environment, including the cloud infrastructure used, virtual machine configurations, and network settings.

- Test Procedures: A step-by-step record of the actions performed during the test. This should include the exact commands, scripts, and processes executed. Any deviations from the planned procedures should be documented.

- Observations and Findings: This is the most crucial section. It includes detailed observations made during the test, such as system behavior, performance metrics, and any errors encountered. It should also include the identification of any issues or bottlenecks. For instance, if a database restoration took longer than the RTO, this should be explicitly noted with relevant timestamps and metrics.

- Performance Metrics: Quantitative data collected during the test, such as recovery time, data loss, and system resource utilization (CPU, memory, network I/O). For example, the actual recovery time for a specific application can be measured and compared against the RTO.

- Analysis and Evaluation: An assessment of the test results against the predefined objectives. This section should provide a clear statement of whether the test was successful and, if not, the reasons why.

- Recommendations: Suggestions for improving the DR plan, based on the test findings. This could include changes to procedures, infrastructure, or tools.

- Action Items: Specific tasks that need to be completed to address the recommendations. This should include the responsible party and the target completion date.

- Sign-off: A section for stakeholders to review and approve the test results. This includes the names and signatures of those involved.
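If test results are to be stored and compared over time, the template can be captured as a structured record. The following sketch uses Python dataclasses and covers a subset of the template's sections; the field names are assumptions chosen for this example and should be adapted to the organization's own template.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class ActionItem:
    description: str
    owner: str
    due_date: str  # ISO 8601 date, e.g. "2024-06-30"


@dataclass
class DRTestRecord:
    test_id: str
    name: str
    executed_at: str  # ISO 8601 timestamp
    objectives: list
    scope: list
    rto_seconds: float
    rpo_seconds: float
    actual_recovery_seconds: float
    actual_data_loss_seconds: float
    findings: list = field(default_factory=list)
    recommendations: list = field(default_factory=list)
    action_items: list = field(default_factory=list)
    approved_by: list = field(default_factory=list)

    @property
    def passed(self):
        """The test passes only when both RTO and RPO were met."""
        return (self.actual_recovery_seconds <= self.rto_seconds
                and self.actual_data_loss_seconds <= self.rpo_seconds)

    def to_json(self):
        """Serialize the record, including the derived pass/fail result."""
        payload = asdict(self)
        payload["passed"] = self.passed
        return json.dumps(payload, indent=2)
```

Storing each test as a record like this makes the later reporting step a matter of reading and aggregating files rather than re-keying results from documents.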

Methods for Generating Reports that Summarize Test Performance

Generating reports is crucial for synthesizing the findings of DR tests and communicating them effectively to stakeholders. Automation streamlines this process and reduces the risk of errors. Reports should provide a concise overview of test performance, highlight key findings, and identify areas for improvement.

- Automated Data Collection: Implement automated data collection tools to gather performance metrics during the test. These tools can monitor system resource utilization, recovery times, and data loss.

- Report Generation Tools: Use specialized reporting tools or scripting languages to automatically generate reports. These tools can pull data from the documentation template and present it in a clear and concise format. Popular options include:

- Cloud Provider’s Reporting Features: Leverage the built-in reporting capabilities of the cloud provider (e.g., AWS CloudWatch, Azure Monitor, Google Cloud Monitoring). These tools can generate dashboards and reports based on collected metrics.

- Scripting Languages: Utilize scripting languages like Python (with libraries such as Pandas and Matplotlib) to automate data analysis and report generation. This allows for customization and integration with other systems.

- Business Intelligence (BI) Tools: Integrate with BI tools (e.g., Tableau, Power BI) to visualize the test results and create interactive dashboards. This provides a more comprehensive and user-friendly way to analyze the data.

- Report Content: Reports should include:

  - Executive Summary: A brief overview of the test, its objectives, and the key findings.

  - Test Overview: Details about the test scope, environment, and procedures.

  - Performance Metrics: A summary of the key performance metrics, such as recovery time, data loss, and resource utilization. These should be presented using tables, charts, and graphs for easy interpretation.

  - Findings and Analysis: A detailed analysis of the test results, highlighting any issues or bottlenecks.

  - Recommendations: Specific recommendations for improving the DR plan, based on the test findings.

  - Action Items: A list of action items with assigned owners and target completion dates.

- Frequency of Reporting: Reports should be generated after each DR test. For organizations with frequent testing, consider generating periodic summary reports (e.g., quarterly or annually) to track trends and identify long-term areas for improvement.
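The scripting approach described above can be sketched with the Python standard library alone. The example below is a minimal illustration, not a complete reporting tool: the `DrTestResult` fields, the `build_report` function, and the idea of measuring data loss as the gap between the last replicated write and the start of the outage are all assumptions made for this sketch.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class DrTestResult:
    """Timestamps captured during one DR test (hypothetical field names)."""
    application: str
    outage_start: datetime           # when the simulated disaster began
    service_restored: datetime       # when the application was verified in DR
    last_replicated_write: datetime  # newest data present in DR after recovery
    rto: timedelta                   # agreed Recovery Time Objective
    rpo: timedelta                   # agreed Recovery Point Objective

    @property
    def recovery_time(self) -> timedelta:
        return self.service_restored - self.outage_start

    @property
    def data_loss_window(self) -> timedelta:
        return self.outage_start - self.last_replicated_write

    @property
    def meets_objectives(self) -> bool:
        return self.recovery_time <= self.rto and self.data_loss_window <= self.rpo


def build_report(results: list[DrTestResult]) -> str:
    """Render a plain-text table of measured values against their objectives."""
    header = f"{'Application':<12} {'Recovery':>9} {'RTO':>9} {'Data loss':>9} {'RPO':>9}  Status"
    lines = [header]
    for r in results:
        status = "PASS" if r.meets_objectives else "FAIL"
        lines.append(
            f"{r.application:<12} {str(r.recovery_time):>9} {str(r.rto):>9} "
            f"{str(r.data_loss_window):>9} {str(r.rpo):>9}  {status}"
        )
    passed = sum(1 for r in results if r.meets_objectives)
    lines.append(f"{passed} of {len(results)} applications met their objectives")
    return "\n".join(lines)


if __name__ == "__main__":
    start = datetime(2024, 1, 1, 12, 0)
    sample = [
        DrTestResult("billing", start, start + timedelta(minutes=30),
                     start - timedelta(minutes=5),
                     rto=timedelta(hours=1), rpo=timedelta(minutes=15)),
        DrTestResult("search", start, start + timedelta(hours=2),
                     start - timedelta(minutes=5),
                     rto=timedelta(hours=1), rpo=timedelta(minutes=15)),
    ]
    print(build_report(sample))
```

In practice the timestamps would come from the automated data collection step rather than being typed in, and the same records could feed a Pandas DataFrame or a BI dashboard.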

Importance of Regular Updates and Revisions to the Disaster Recovery Plan

Regular updates and revisions to the DR plan are essential to ensure its ongoing effectiveness. The IT landscape is constantly evolving, with new technologies, applications, and threats emerging regularly. The DR plan must adapt to these changes to provide adequate protection.

- Test Results as Input: The primary input for updating the DR plan should be the results of DR tests. These tests identify weaknesses in the plan and provide valuable insights into areas that need improvement. For instance, if a test reveals that the recovery time for a critical application exceeds the RTO, the DR plan should be revised to address this issue.

- Change Management: Implement a robust change management process to track changes to the IT infrastructure, applications, and data. Assess every change for its potential impact on the DR plan, and update the plan accordingly.

- Business Requirements: The DR plan must align with the current business requirements. As the business evolves, its priorities and critical systems may change. The DR plan should be reviewed regularly to ensure it meets these evolving needs.

- Technology Advancements: Keep the DR plan current with technology advancements by adopting new tools, techniques, and best practices that improve the efficiency and effectiveness of the DR process. Examples include migrating to a new cloud platform or implementing automated failover mechanisms.

- Threat Landscape: Monitor the threat landscape and update the DR plan to address new and emerging threats. This includes incorporating security best practices and regularly reviewing how well the plan holds up against cyberattacks and other disasters.

- Frequency of Updates: The DR plan should be reviewed and updated at least annually, or more frequently if there are significant changes to the IT infrastructure, applications, or business requirements. It is also crucial to update the plan after each DR test to incorporate the findings and recommendations.
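The review triggers listed above (an annual review, a completed DR test, a significant change) can be turned into a simple automated reminder. The following is a minimal sketch under stated assumptions: the function name `plan_review_due`, its parameters, and the 365-day default are illustrative choices, not part of any standard.

```python
from datetime import date, timedelta
from typing import Optional


def plan_review_due(
    last_review: date,
    today: date,
    last_test: Optional[date] = None,
    last_change: Optional[date] = None,
    max_age: timedelta = timedelta(days=365),
) -> list[str]:
    """Return the reasons the DR plan needs a review; an empty list means none."""
    reasons = []
    if today - last_review > max_age:
        reasons.append("annual review overdue")
    if last_test is not None and last_test > last_review:
        reasons.append("DR test completed since last review")
    if last_change is not None and last_change > last_review:
        reasons.append("significant change since last review")
    return reasons
```

A scheduled job could call this function with dates taken from the change management system and the test log, then open a ticket whenever the returned list is not empty.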

Automation and Orchestration

Automating disaster recovery (DR) processes is crucial for minimizing downtime, reducing operational costs, and ensuring business continuity. Utilizing scripting and orchestration tools allows for the creation of repeatable, reliable, and efficient DR procedures. This section will explore how to leverage automation for various DR tasks, including failover, failback, and testing.

Automating Disaster Recovery Processes

Automating DR involves using scripts and orchestration tools to streamline and expedite critical recovery steps. This approach reduces the reliance on manual intervention, which minimizes human error and shortens actual recovery time, making it easier to meet the recovery time objective (RTO). Key elements include scripting languages, configuration management tools, and orchestration platforms.

- Scripting Languages: Languages like Python, Bash, and PowerShell are used to automate individual tasks. For example, a Python script might be used to verify the health of a database, while a Bash script could be used to mount storage volumes.

- Configuration Management Tools: Tools such as Ansible, Chef, and Puppet can be used to manage infrastructure as code (IaC). This allows for the consistent and automated provisioning and configuration of resources in the DR environment. For instance, Ansible playbooks can be used to deploy and configure virtual machines (VMs) in a DR region.

- Orchestration Platforms: Orchestration platforms like AWS CloudFormation, Azure Resource Manager, and Google Cloud Deployment Manager provide a higher level of automation. They allow for the definition of complex workflows that encompass multiple steps, from resource provisioning to application deployment and configuration.
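As an illustration of the scripting layer, the sketch below shows a health check with retries of the kind a Python script might run against a database or application endpoint. The function names `wait_until_healthy` and `tcp_probe`, the retry counts, and the hostname in the usage example are assumptions made for this example. A TCP connection test only confirms that a port accepts connections, so a real database check would also run a query.

```python
import socket
import time
from typing import Callable


def tcp_probe(host: str, port: int, timeout: float = 3.0) -> Callable[[], bool]:
    """Build a probe that succeeds when a TCP connection can be opened."""
    def probe() -> bool:
        # create_connection raises OSError if the endpoint is unreachable.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    return probe


def wait_until_healthy(
    probe: Callable[[], bool],
    attempts: int = 5,
    delay_seconds: float = 2.0,
    sleep: Callable[[float], None] = time.sleep,
) -> bool:
    """Call the probe up to `attempts` times; return True on the first success."""
    for attempt in range(1, attempts + 1):
        try:
            if probe():
                return True
        except Exception:
            pass  # a probe that raises is treated as unhealthy
        if attempt < attempts:
            sleep(delay_seconds)
    return False


if __name__ == "__main__":
    # Hypothetical DR database endpoint; replace with a real host and port.
    healthy = wait_until_healthy(tcp_probe("db.dr.example.internal", 5432))
    print("database reachable" if healthy else "database unreachable")
```

Passing the probe in as a function keeps the retry logic independent of what is being checked, so the same helper can wrap an HTTP request, a SQL query, or a cloud API call.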

Designing a Workflow for Automation

A well-designed workflow is essential for automating failover, failback, and testing procedures. This workflow should define the sequence of actions, the dependencies between tasks, and the criteria for success or failure. A typical workflow comprises several stages.

- Pre-Failover Checks: These checks verify the health of the primary environment and ensure that all necessary resources are available in the DR environment. For example, these checks might include verifying network connectivity, database health, and application availability.

- Failover Execution: This stage involves the actual transition to the DR environment. This can include tasks such as promoting the replica database, starting applications, and updating DNS records to direct traffic to the DR environment.

- Post-Failover Verification: After failover, the DR environment is verified to ensure that all applications and services are functioning correctly. This can involve running automated tests, monitoring performance metrics, and validating data integrity.

- Failback Execution: This stage involves returning to the primary environment after the issue has been resolved. The process is similar to failover, but in reverse. It includes steps like synchronizing data, updating DNS, and restarting applications.

- Testing Procedures: Automation can be used to regularly test the DR environment. This involves simulating a disaster scenario and verifying that the recovery procedures function as expected. Automated testing can identify potential issues before a real disaster occurs.
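The staged workflow described above can be sketched as an ordered list of steps, where each step must succeed before the next one runs. This is a minimal illustration, assuming each stage is a Python callable that returns True on success. The `Stage`, `StageOutcome`, and `run_workflow` names are hypothetical, and a production orchestrator would add logging, timeouts, and rollback.

```python
import time
from dataclasses import dataclass
from typing import Callable


@dataclass
class Stage:
    name: str
    action: Callable[[], bool]  # returns True when the stage succeeded


@dataclass
class StageOutcome:
    name: str
    succeeded: bool
    seconds: float  # wall-clock duration, useful for RTO reporting


def run_workflow(stages: list[Stage]) -> list[StageOutcome]:
    """Run stages in order and stop at the first failure.

    Each stage depends on the one before it, so nothing after a failed
    stage is attempted.
    """
    outcomes = []
    for stage in stages:
        started = time.monotonic()
        try:
            succeeded = bool(stage.action())
        except Exception:
            succeeded = False  # an exception counts as a failed stage
        outcomes.append(StageOutcome(stage.name, succeeded, time.monotonic() - started))
        if not succeeded:
            break
    return outcomes


if __name__ == "__main__":
    # Placeholder actions; real ones would call cloud APIs or run scripts.
    failover = [
        Stage("pre-failover checks", lambda: True),
        Stage("failover execution", lambda: True),
        Stage("post-failover verification", lambda: True),
    ]
    for outcome in run_workflow(failover):
        print(f"{outcome.name}: {'ok' if outcome.succeeded else 'FAILED'} ({outcome.seconds:.2f}s)")
```

The recorded durations for each stage can be summed to give the measured recovery time for the test report.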

Examples of Automation to Improve Speed and Efficiency

Automation significantly improves the speed and efficiency of DR processes. By reducing manual intervention and automating repetitive tasks, organizations can achieve shorter recovery times and lower operational costs. The following examples show how automation can be implemented.

- Automated Failover using CloudFormation (AWS): AWS CloudFormation can be used to define a template that provisions all necessary resources in the DR region. When a failover is triggered, CloudFormation deploys and configures the application stack in the DR region, and once the stack passes its health checks an automated script updates DNS records to point to the DR environment. This can reduce failover time significantly compared to manual processes.

- Automated Database Replication using Ansible: Ansible playbooks can be used to automate the setup and configuration of database replication. This includes setting up the replication process, checking the replication status, and promoting a replica database in the DR region when the primary database fails. A consistent, scripted setup helps preserve data consistency and minimize data loss.

- Automated Testing using Jenkins: Jenkins can be used to create a continuous integration/continuous delivery (CI/CD) pipeline for DR testing. This pipeline can automatically trigger DR tests on a regular schedule. The tests can include simulating various failure scenarios, such as network outages or server failures. Automated testing helps to identify and resolve potential issues proactively.

- Automated Infrastructure Provisioning using Terraform: Terraform can be used to provision and manage the infrastructure in both the primary and DR environments. Because both environments are built from the same configuration, they stay consistent, which simplifies the failover and failback processes and reduces the risk of configuration drift.
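The DNS step of an automated failover can be sketched as follows. This is an illustrative example, not a complete failover script. It assumes `route53_client` is a boto3 Route 53 client (for instance `boto3.client("route53")`) and uses its `change_resource_record_sets` call. The function names, hosted zone ID, record names, and the `stack_ready` flag are hypothetical. The guard reflects the principle that traffic should move only after the DR stack has been deployed and verified.

```python
def build_dns_failover_change(record_name: str, dr_endpoint: str, ttl: int = 60) -> dict:
    """Build a Route 53 change batch that points a CNAME at the DR endpoint."""
    return {
        "Comment": "DR failover: direct traffic to the DR environment",
        "Changes": [
            {
                "Action": "UPSERT",  # create the record or overwrite it
                "ResourceRecordSet": {
                    "Name": record_name,
                    "Type": "CNAME",
                    "TTL": ttl,  # a short TTL lets clients pick up the change quickly
                    "ResourceRecords": [{"Value": dr_endpoint}],
                },
            }
        ],
    }


def switch_dns_to_dr(route53_client, hosted_zone_id: str, record_name: str,
                     dr_endpoint: str, stack_ready: bool):
    """Update DNS only after the DR stack has been reported ready."""
    if not stack_ready:
        raise RuntimeError("DR stack is not ready; refusing to redirect traffic")
    return route53_client.change_resource_record_sets(
        HostedZoneId=hosted_zone_id,
        ChangeBatch=build_dns_failover_change(record_name, dr_endpoint),
    )
```

Keeping the change batch in its own function makes the payload easy to inspect and unit test without calling AWS. The same function can build the reverse change during failback by passing the primary endpoint instead.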

Final Summary

In conclusion, effectively testing disaster recovery scenarios in the cloud is not merely a technical exercise but a strategic imperative. By understanding the various cloud disaster recovery models, meticulously defining recovery objectives, and implementing a robust testing methodology, organizations can significantly enhance their ability to withstand disruptions. Regular testing, thorough documentation, and continuous improvement are essential to maintaining a state of readiness and ensuring business operations can be swiftly restored following an unforeseen event.

The ability to confidently execute a failover, verify recovery, and successfully fail back is the ultimate measure of a well-designed and tested disaster recovery plan, providing peace of mind and safeguarding critical business functions.

Q&A

What is the difference between RTO and RPO?

Recovery Time Objective (RTO) is the maximum acceptable time a system can be down after a disaster, while Recovery Point Objective (RPO) is the maximum acceptable data loss measured in time.

How often should disaster recovery tests be conducted?

Disaster recovery tests should be conducted regularly, at least annually, or more frequently depending on the criticality of the applications and the rate of change in the environment. Some organizations test quarterly or even monthly.

What are the key considerations when selecting a cloud disaster recovery provider?

Key considerations include cost, RTO/RPO capabilities, security features, ease of use, integration with existing infrastructure, and the provider’s support and expertise.

How can automation improve disaster recovery testing?

Automation streamlines testing by scripting failover, failback, and verification processes, reducing human error, improving speed, and allowing for more frequent testing.

What are the potential risks of not testing disaster recovery?

Failing to test disaster recovery can lead to unexpected issues during an actual disaster, resulting in prolonged downtime, data loss, and potential damage to the organization’s reputation and financial stability.