In today’s digital landscape, the cloud has become an indispensable asset for businesses of all sizes. However, this shift has also introduced new challenges, particularly concerning the ever-present threats of malware and ransomware. Understanding how to safeguard your cloud environment is no longer optional; it’s a critical necessity for maintaining data integrity, ensuring business continuity, and protecting your valuable assets.

This guide delves into the essential strategies and best practices to fortify your cloud infrastructure against these malicious attacks.

We will explore various facets of cloud security, from understanding the vulnerabilities that make cloud environments susceptible to attacks to implementing robust security measures. This includes detailed guidance on authentication, data encryption, network segmentation, endpoint detection and response, backup and disaster recovery, security monitoring, and the utilization of cloud-specific security tools. Furthermore, we’ll emphasize the importance of user education and awareness programs in creating a strong security posture.

Understanding Cloud Security Risks

Cloud environments, while offering significant advantages in terms of scalability and cost-efficiency, introduce new security challenges. Organizations must understand these risks to effectively protect their data and infrastructure. This section delves into the common entry points, vulnerabilities, and real-world examples of attacks targeting cloud deployments.

Common Entry Points for Malware and Ransomware Attacks

Attackers employ various tactics to gain access to cloud resources. Understanding these entry points is crucial for implementing preventative measures.

- Compromised Credentials: This is a frequent attack vector. Weak, reused, or stolen credentials allow unauthorized access to cloud accounts. Attackers often use phishing, credential stuffing, or brute-force attacks to obtain them.

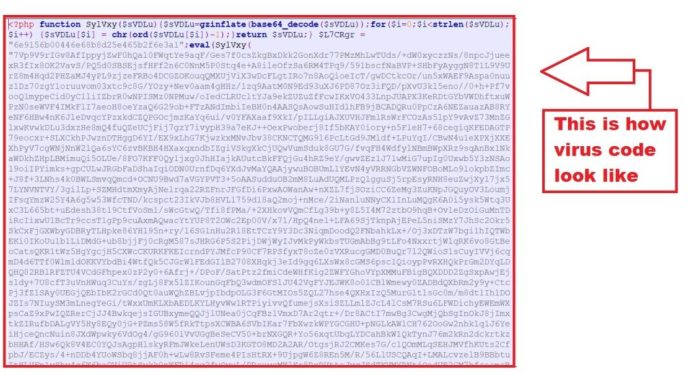

- Vulnerable Applications and Services: Exploiting vulnerabilities in cloud-based applications and services is a common method. This includes outdated software, misconfigured services, and vulnerabilities in third-party integrations. Attackers scan for these vulnerabilities and exploit them to gain access.

- Malicious Insiders: While less common than external attacks, malicious insiders pose a significant threat. This can include disgruntled employees as well as insiders who have been coerced or recruited by outside attackers. They can use their legitimate access to steal data, deploy malware, or sabotage systems.

- Supply Chain Attacks: These attacks target third-party providers or software dependencies used by cloud environments. If a third-party service is compromised, it can be used as a launchpad to attack the cloud environment. This is becoming increasingly common as organizations rely on a complex ecosystem of providers.

- Phishing and Social Engineering: Phishing emails and social engineering tactics are used to trick cloud users into revealing credentials or installing malware. These attacks are often highly targeted and can be very effective. Attackers use carefully crafted messages to impersonate trusted sources.

Cloud Infrastructure Vulnerabilities

Several vulnerabilities make cloud infrastructure susceptible to malware and ransomware attacks. These weaknesses must be addressed to strengthen an organization’s cloud security posture.

- Misconfigurations: Incorrectly configured cloud resources are a significant vulnerability. This includes open storage buckets, overly permissive access controls, and improperly secured network settings. Misconfigurations often result from human error or a lack of proper security awareness.

- Lack of Patching and Updates: Failing to apply security patches and updates to cloud software and services leaves systems vulnerable to known exploits. Regular patching is essential to mitigate these risks.

- Inadequate Access Controls: Insufficiently defined or enforced access controls can allow unauthorized users to access sensitive data and resources. This includes weak password policies, lack of multi-factor authentication, and overly permissive role-based access control.

- Insufficient Monitoring and Logging: A lack of robust monitoring and logging capabilities makes it difficult to detect and respond to security incidents. Without proper monitoring, malicious activity can go unnoticed for extended periods.

- Data Leakage and Data Breaches: Data leakage can occur through various means, including misconfigured storage buckets, compromised credentials, and insider threats. Data breaches often result in significant financial and reputational damage.

Examples of Recent Cloud Security Breaches and Attack Vectors

Analyzing recent cloud security breaches provides valuable insights into the tactics used by attackers and the effectiveness of different security measures. These real-world examples illustrate the importance of robust cloud security practices.

- Capital One Data Breach (2019): An attacker abused a misconfigured web application firewall in a server-side request forgery (SSRF) attack. This gave the attacker temporary credentials for a cloud IAM role from the instance metadata service, which were then used to copy data from Amazon S3 storage buckets. The personal information of more than 100 million individuals was exposed. This case highlighted the importance of proper configuration, least-privilege IAM roles, and vulnerability management.

- SolarWinds Supply Chain Attack (2020): Attackers compromised SolarWinds’ software build process and inserted malicious code into updates for the Orion platform, a widely used network management product. The trojanized updates were then distributed to thousands of organizations, including government agencies. This attack demonstrated the devastating impact of supply chain attacks.

- Microsoft Exchange Server Vulnerability (2021): Attackers exploited a chain of zero-day vulnerabilities (widely referred to as ProxyLogon) in on-premises Microsoft Exchange Server. This let them gain access to email servers and steal sensitive data. The attack highlighted the importance of promptly patching critical vulnerabilities.

- Accellion Data Breach (2021): Vulnerabilities in Accellion’s legacy File Transfer Appliance (FTA) were exploited to steal data from dozens of organizations that still used the product. Because the appliance stored the files it transferred, compromising it gave attackers direct access to sensitive information.

- Ubiquiti Data Breach (2021): An intruder accessed Ubiquiti’s cloud infrastructure and potentially gained access to user account credentials and other sensitive information. The intruder was later identified as a company employee who had misused legitimate access. The breach showed the risk of improper access controls and the danger posed by insiders.

Implementing Robust Authentication and Authorization

Securing cloud resources requires a multi-layered approach, with strong authentication and authorization mechanisms at its core. These measures ensure that only authorized individuals and systems can access sensitive data and perform critical operations. This section delves into practical strategies for implementing these crucial security controls.

Designing a Multi-Factor Authentication (MFA) Strategy to Secure Cloud Access

Implementing Multi-Factor Authentication (MFA) significantly enhances cloud security by requiring users to provide multiple verification factors before granting access. This mitigates the risk of compromised credentials.

To design an effective MFA strategy:

- Define Access Levels: Categorize users based on their roles and responsibilities. Different roles will require different levels of access and, consequently, different MFA requirements. For example, administrators might require more stringent MFA methods than standard users.

- Choose Authentication Factors: Select appropriate authentication factors based on security needs and user experience. Common factors include:

- Something you know: Passwords, PINs, and security questions. However, passwords alone are considered weak security and should always be combined with other factors.

- Something you have: Hardware security keys (like YubiKeys), authenticator apps (Google Authenticator, Microsoft Authenticator), or one-time passwords (OTPs) delivered via SMS or email.

- Something you are: Biometric data, such as fingerprints, facial recognition, or voice recognition.

- Implement MFA Enforcement: Enforce MFA across all critical cloud services, including administrative consoles, email, and remote access. Use policies to mandate MFA for all users or specific groups.

- Consider User Experience: While security is paramount, consider the user experience. Balance security requirements with ease of use. For example, consider using a push notification to a trusted device rather than requiring users to manually enter a code.

- Provide Backup Options: Ensure users have backup methods for accessing their accounts in case their primary MFA factor is unavailable (e.g., lost phone, broken security key). This might include recovery codes or alternative authentication methods.

- Regularly Review and Update: Continuously review and update the MFA strategy to address emerging threats and vulnerabilities. Consider phasing out less secure methods, such as SMS-based OTPs, and adopting more robust options like authenticator apps or hardware security keys.
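Authenticator apps, one of the "something you have" factors listed above, typically generate time-based one-time passwords (TOTP, RFC 6238). The following sketch shows how a server could derive and check such a code using only the Python standard library. It assumes common defaults: HMAC-SHA1, 30-second time steps, and a tolerance of one step of clock drift. The function names are illustrative. A production system should rely on a vetted library and should also rate-limit attempts and reject reuse of an already accepted code.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password (RFC 4226) using HMAC-SHA1."""
    message = struct.pack(">Q", counter)  # 8-byte big-endian counter
    digest = hmac.new(secret, message, hashlib.sha1).digest()
    offset = digest[-1] & 0x0F  # "dynamic truncation" offset from the last nibble
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % (10 ** digits)).zfill(digits)


def totp(secret: bytes, at: Optional[float] = None, step: int = 30, digits: int = 6) -> str:
    """Time-based one-time password (RFC 6238): HOTP over the current time step."""
    now = time.time() if at is None else at
    return hotp(secret, int(now // step), digits)


def verify_totp(secret: bytes, submitted: str, at: Optional[float] = None,
                step: int = 30, drift_steps: int = 1) -> bool:
    """Accept a code from the current step or +/- drift_steps to tolerate clock skew."""
    now = time.time() if at is None else at
    for drift in range(-drift_steps, drift_steps + 1):
        expected = totp(secret, now + drift * step, step, len(submitted))
        if hmac.compare_digest(expected, submitted):  # constant-time comparison
            return True
    return False
```

The RFC 6238 SHA-1 test secret is the ASCII string `12345678901234567890`. With that secret and Unix time 59, `totp(secret, at=59, digits=8)` returns `94287082`, which is the RFC's published test vector. In real deployments, the secret is generated during enrollment and shared with the user's authenticator app.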

Implementing Role-Based Access Control (RBAC) within a Cloud Platform

Role-Based Access Control (RBAC) streamlines access management by assigning permissions based on user roles. This approach simplifies administration and reduces the risk of unauthorized access.

To implement RBAC effectively:

- Identify Roles and Responsibilities: Analyze the organization’s cloud usage and identify distinct roles, such as administrators, developers, auditors, and users. Define the specific responsibilities and access privileges associated with each role.

- Define Permissions: Determine the specific permissions required for each role. Permissions should be granular and aligned with the principle of least privilege, granting only the minimum necessary access.

- Create Roles: Within the cloud platform’s identity and access management (IAM) system, create a role for each of the job functions identified in the first step.

- Assign Permissions to Roles: Assign the appropriate permissions to each role. This could involve granting access to specific resources (e.g., virtual machines, databases, storage buckets), enabling specific actions (e.g., read, write, delete), or setting up access control lists (ACLs).

- Assign Users to Roles: Assign users to the appropriate roles based on their job functions. This can be done manually or through automated processes, such as user provisioning systems.

- Regularly Review and Audit: Periodically review user assignments and role permissions to ensure they remain appropriate and aligned with the organization’s needs. Audit access logs to identify any anomalies or potential security breaches.

- Use Least Privilege Principle: Always adhere to the principle of least privilege. Grant users only the minimum necessary permissions to perform their tasks. This reduces the potential impact of compromised accounts.
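The following is a minimal sketch of the steps above. It maps roles to granular permissions, assigns users to roles, and denies any action that no role explicitly grants. The role names, users, and the `resource:action` permission format are hypothetical. Cloud IAM systems express the same ideas through their own policy languages.

```python
from typing import Dict, FrozenSet, Set

# Hypothetical role catalogue: permissions are "resource:action" strings,
# and "resource:*" grants every action on that resource type.
ROLE_PERMISSIONS: Dict[str, FrozenSet[str]] = {
    "auditor": frozenset({"logs:read", "storage:read"}),
    "developer": frozenset({"vm:read", "vm:start", "vm:stop", "storage:read"}),
    "administrator": frozenset({"vm:*", "storage:*", "logs:read", "iam:manage"}),
}

# Hypothetical user-to-role assignments (normally sourced from the IdP or IAM).
USER_ROLES: Dict[str, Set[str]] = {
    "alice": {"developer"},
    "bob": {"auditor"},
    "carol": {"administrator"},
}


def is_allowed(user: str, resource: str, action: str) -> bool:
    """Return True only if one of the user's roles grants the action (default deny)."""
    for role in USER_ROLES.get(user, set()):
        granted = ROLE_PERMISSIONS.get(role, frozenset())
        if f"{resource}:{action}" in granted or f"{resource}:*" in granted:
            return True
    return False  # unknown users, unknown roles, and ungranted actions are all denied
```

With this model, `is_allowed("alice", "vm", "start")` is allowed because developers can start VMs. `is_allowed("alice", "storage", "delete")` is refused, which reflects least privilege. Wildcards such as `vm:*` should be reserved for narrowly scoped administrative roles.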

Best Practices for Managing and Rotating Access Keys and Credentials

Managing and rotating access keys and credentials is a critical security practice. Compromised credentials are a primary attack vector, and proper management minimizes the risk.

To manage and rotate access keys and credentials effectively:

- Generate Strong Keys: Generate strong, random access keys using the cloud provider’s key generation tools. Avoid using weak or predictable keys.

- Store Credentials Securely: Never hardcode access keys in code or configuration files. Store credentials securely using a secrets management service or a dedicated vault.

- Rotate Keys Regularly: Rotate access keys and credentials on a regular schedule. The frequency of rotation depends on the sensitivity of the data and the risk tolerance of the organization. A common practice is to rotate keys every 90 days, but more frequent rotations might be necessary for high-risk environments.

- Implement Automated Rotation: Automate the key rotation process to reduce the risk of human error and ensure consistency. Many cloud providers offer automated key rotation features.

- Monitor Key Usage: Monitor key usage to detect any suspicious activity or unauthorized access attempts. Review access logs regularly and set up alerts for unusual patterns.

- Revoke Compromised Keys Immediately: If a key is suspected of being compromised, revoke it immediately. Generate a new key and update all relevant systems.

- Use the Principle of Least Privilege: Grant access keys only the minimum necessary permissions. Avoid granting overly broad permissions that could allow an attacker to access more resources than necessary.

- Avoid Sharing Keys: Do not share access keys with others. Each user or system should have its own unique set of credentials.

- Use Short-Lived Credentials: Where possible, use short-lived credentials, such as temporary security tokens, instead of long-lived access keys. This reduces the window of opportunity for attackers.
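Two of these practices can be sketched in a few lines: the age check behind a 90-day rotation policy, and issuing short-lived credentials. This is a hypothetical in-memory model. `AccessKey`, `keys_due_for_rotation`, and `issue_temporary_token` are illustrative names, not a cloud provider API. In practice, key metadata would come from the provider's IAM service and temporary credentials from its token service.

```python
import secrets
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import List, Optional


@dataclass
class AccessKey:
    key_id: str
    owner: str            # each key belongs to exactly one user or system
    created_at: datetime  # timezone-aware UTC timestamp
    active: bool = True


@dataclass
class TemporaryToken:
    value: str
    expires_at: datetime

    def is_valid(self, now: Optional[datetime] = None) -> bool:
        current = now if now is not None else datetime.now(timezone.utc)
        return current < self.expires_at


def keys_due_for_rotation(keys: List[AccessKey], max_age_days: int = 90,
                          now: Optional[datetime] = None) -> List[str]:
    """Return IDs of active keys at or past the maximum allowed age."""
    current = now if now is not None else datetime.now(timezone.utc)
    limit = timedelta(days=max_age_days)
    return [k.key_id for k in keys if k.active and current - k.created_at >= limit]


def issue_temporary_token(ttl_minutes: int = 15,
                          now: Optional[datetime] = None) -> TemporaryToken:
    """Issue a random bearer token that stops being valid after ttl_minutes."""
    current = now if now is not None else datetime.now(timezone.utc)
    return TemporaryToken(secrets.token_urlsafe(32),
                          current + timedelta(minutes=ttl_minutes))
```

A scheduled job could run `keys_due_for_rotation` and feed the results into an automated rotation workflow. Short-lived tokens shrink the window in which a leaked credential is useful, because they simply expire.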

Data Encryption and Protection Measures

Protecting data within the cloud environment is paramount to maintaining confidentiality, integrity, and availability. Encryption and data loss prevention (DLP) are critical components of a robust security posture, safeguarding against unauthorized access and data breaches. This section details strategies for encrypting data and employing DLP tools to minimize risks.

Data Encryption at Rest and in Transit

Data encryption ensures that data is unreadable to unauthorized parties, even if they gain access to the storage medium or intercept network traffic. Encryption is applied both when data is stored (at rest) and when it’s moving between systems (in transit).

Encryption at rest involves securing data stored in cloud storage services, databases, and virtual machines. This can be achieved through:

- Volume Encryption: Encrypting entire storage volumes. This approach provides a baseline level of security, making the data on the volume unreadable without the decryption key. For example, Amazon Web Services (AWS) offers Amazon Elastic Block Store (EBS) encryption, and Microsoft Azure provides encryption for virtual machine disks.

- Object Encryption: Encrypting individual data objects stored in cloud storage services like Amazon S3 or Azure Blob Storage. This allows for more granular control over data access.

- Database Encryption: Encrypting data within databases, either at the column level or the entire database level. This protects sensitive data stored within the database from unauthorized access. For instance, Azure SQL Database and AWS RDS support encryption at rest.

Encryption in transit protects data as it moves between different locations, such as between a user’s device and the cloud, or between different cloud services. This is typically achieved through:

- Transport Layer Security (TLS): TLS replaced the now-deprecated Secure Sockets Layer (SSL) protocol. It encrypts the communication channel between the client and the server so that data exchanged over the network is protected from eavesdropping. Clients and services should be configured to accept only TLS 1.2 or later. TLS is commonly used for web browsing (HTTPS) and other network communications.

- Virtual Private Network (VPN): A VPN creates an encrypted tunnel between the user’s device and the cloud network. All traffic passing through the VPN is encrypted, providing a secure connection.

- Encrypted APIs: When accessing cloud services through APIs, it is crucial to use APIs that support encryption and secure protocols. Ensure that API calls use HTTPS and authentication mechanisms to prevent unauthorized access.
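For API clients written in Python, the encryption-in-transit guidance above can be enforced with the standard `ssl` and `urllib` modules. The sketch below builds a client context that verifies certificates and hostnames and refuses protocol versions older than TLS 1.2. It also wraps `urlopen` so that plain-HTTP URLs are rejected before any request is sent. The helper names are illustrative.

```python
import ssl
import urllib.parse
import urllib.request


def make_client_tls_context() -> ssl.SSLContext:
    """TLS client context that verifies certificates and refuses legacy protocols."""
    context = ssl.create_default_context()  # system CAs, CERT_REQUIRED, hostname checks
    context.minimum_version = ssl.TLSVersion.TLSv1_2  # reject SSLv3, TLS 1.0 and 1.1
    return context


def open_https(url: str, timeout: float = 10.0):
    """Open an API URL only over HTTPS, using the hardened TLS context."""
    if urllib.parse.urlparse(url).scheme != "https":
        raise ValueError(f"refusing to call {url!r} over an unencrypted channel")
    return urllib.request.urlopen(url, context=make_client_tls_context(), timeout=timeout)
```

Take a call such as `with open_https("https://example.com/") as response: body = response.read()`. It raises an error rather than falling back to a weaker channel if the server offers only an older protocol or presents a certificate that does not validate. Authentication headers for the API would be added on top of this.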

Key Management Systems (KMS) Implementation

A Key Management System (KMS) is a critical component for managing encryption keys. It provides a secure way to generate, store, and manage cryptographic keys used to encrypt and decrypt data. Implementing a KMS ensures that encryption keys are protected and that access to encrypted data is controlled.

Implementing a KMS involves several key steps:

- Key Generation: The KMS generates strong, unique encryption keys. These keys can be symmetric (used for both encryption and decryption) or asymmetric (used for public-key cryptography).

- Key Storage: The KMS securely stores encryption keys, often using hardware security modules (HSMs) to protect against unauthorized access. HSMs are physical devices that provide a high level of security for cryptographic keys.

- Key Rotation: Regular key rotation is essential to minimize the impact of a potential key compromise. The KMS allows for the automatic rotation of encryption keys on a predefined schedule.

- Access Control: The KMS provides access control mechanisms to restrict who can access and use encryption keys. This ensures that only authorized users or applications can decrypt data.

- Audit Logging: The KMS logs all key management operations, such as key generation, rotation, and access, providing an audit trail for security monitoring.
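To make these responsibilities concrete, the following is a toy, in-memory model of a single KMS key. It covers random key generation, versioned rotation, a principal allow-list, and an audit log. Rotation keeps older versions so that data already protected with them remains usable. This is an illustrative assumption, not how any particular KMS is implemented. It deliberately uses HMAC-SHA256 (an integrity operation) as a stand-in for encryption, because Python's standard library has no symmetric cipher. Real key material should stay inside a managed KMS or HSM.

```python
import hashlib
import hmac
import secrets
from datetime import datetime, timezone
from typing import Dict, Iterable, List, Tuple


class ToyKeyRing:
    """In-memory stand-in for one managed KMS key. Illustrative only."""

    def __init__(self, allowed_principals: Iterable[str]) -> None:
        self._versions: Dict[int, bytes] = {}
        self._current = 0
        self._allowed = set(allowed_principals)
        # Audit trail entries: (UTC timestamp, principal, operation, allowed?)
        self.audit_log: List[Tuple[str, str, str, bool]] = []
        self._new_version()

    def _new_version(self) -> None:
        """Key generation: 256 bits from a cryptographically secure RNG."""
        self._current += 1
        self._versions[self._current] = secrets.token_bytes(32)

    def _authorize(self, principal: str, operation: str) -> None:
        """Access control plus audit logging for every request, allowed or not."""
        allowed = principal in self._allowed
        stamp = datetime.now(timezone.utc).isoformat()
        self.audit_log.append((stamp, principal, operation, allowed))
        if not allowed:
            raise PermissionError(f"{principal} is not allowed to {operation}")

    def rotate(self, principal: str) -> int:
        """Key rotation: new data uses the new version; old versions are retained."""
        self._authorize(principal, "rotate")
        self._new_version()
        return self._current

    def sign(self, principal: str, data: bytes) -> Tuple[int, bytes]:
        """Protect data with the current key version (HMAC stands in for encryption)."""
        self._authorize(principal, "sign")
        tag = hmac.new(self._versions[self._current], data, hashlib.sha256).digest()
        return self._current, tag

    def verify(self, principal: str, data: bytes, version: int, tag: bytes) -> bool:
        """Check data using whichever key version originally protected it."""
        self._authorize(principal, "verify")
        key = self._versions.get(version)
        if key is None:
            return False
        expected = hmac.new(key, data, hashlib.sha256).digest()
        return hmac.compare_digest(expected, tag)
```

Recording the key version alongside protected data is what makes rotation painless. New data picks up the latest version, while older data can still be checked or decrypted with the version it was created under.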

Examples of cloud-based KMS solutions include:

- AWS KMS: AWS Key Management Service provides a centralized, managed service for creating and controlling encryption keys. It integrates with various AWS services, such as S3, EBS, and RDS.

- Azure Key Vault: Azure Key Vault is a cloud service for securely storing and managing secrets, encryption keys, and certificates. It offers features like key rotation and access control.

- Google Cloud KMS: Google Cloud Key Management Service enables you to manage cryptographic keys in the cloud. It integrates with various Google Cloud services, such as Cloud Storage and Cloud SQL.

Data Loss Prevention (DLP) Tools Usage

Data Loss Prevention (DLP) tools are designed to prevent sensitive data from leaving the organization’s control, whether intentionally or unintentionally. They monitor and control data movement, identifying and preventing data breaches.

DLP tools typically employ several techniques:

- Data Discovery and Classification: DLP tools scan data stores to identify and classify sensitive data, such as Personally Identifiable Information (PII), protected health information (PHI), and financial data.

- Policy Enforcement: DLP tools enforce predefined policies that specify how sensitive data can be used, stored, and shared. These policies can be based on data types, locations, or user roles.

- Monitoring and Alerting: DLP tools monitor data movement and alert administrators to policy violations. This allows for timely intervention to prevent data breaches.

- Data Blocking and Remediation: DLP tools can block unauthorized data transfers or automatically remediate policy violations, such as quarantining or encrypting sensitive data.
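The discovery and remediation techniques above can be illustrated with a deliberately small detector. It finds email addresses and likely payment card numbers, then masks them. Card candidates are filtered with the Luhn checksum to cut false positives. The two patterns and the redaction labels are assumptions for illustration. Commercial DLP products combine far richer rule sets with context, proximity, and confidence scoring.

```python
import re
from typing import Dict, List

# Illustrative detectors only.
EMAIL_RE = re.compile(r"\b[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}\b")
# 13 to 19 digits, optionally separated by single spaces or hyphens.
CARD_RE = re.compile(r"\b\d(?:[ -]?\d){12,18}\b")


def luhn_valid(candidate: str) -> bool:
    """Luhn checksum, used to discard digit runs that cannot be card numbers."""
    digits = [int(ch) for ch in candidate if ch.isdigit()]
    total = 0
    for index, digit in enumerate(reversed(digits)):
        if index % 2 == 1:  # double every second digit from the right
            digit *= 2
            if digit > 9:
                digit -= 9
        total += digit
    return total % 10 == 0


def classify(text: str) -> Dict[str, List[str]]:
    """Discovery/classification: list the sensitive values found in text."""
    return {
        "email": EMAIL_RE.findall(text),
        "payment_card": [m.group(0) for m in CARD_RE.finditer(text)
                         if luhn_valid(m.group(0))],
    }


def redact(text: str) -> str:
    """Remediation: mask detected values before text leaves a trusted boundary."""
    text = EMAIL_RE.sub("[REDACTED-EMAIL]", text)
    return CARD_RE.sub(
        lambda m: "[REDACTED-CARD]" if luhn_valid(m.group(0)) else m.group(0), text
    )
```

For example, `classify` on a message containing the well-known test card number `4111 1111 1111 1111` and an order number such as `1234567890123` reports only the card number. The order number fails the Luhn check.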

DLP solutions can be deployed in various ways:

- Network DLP: Monitors network traffic for sensitive data being transmitted outside the organization. It analyzes email, web traffic, and other network protocols.

- Endpoint DLP: Monitors and controls data on endpoints, such as laptops and desktops. It prevents data from being copied to removable media or shared through unauthorized applications.

- Cloud DLP: DLP solutions designed for cloud environments, such as AWS, Azure, and Google Cloud, can identify and protect sensitive data stored in cloud storage services, databases, and applications.

Examples of DLP tools include:

- McAfee DLP: Provides comprehensive data loss prevention capabilities for endpoints, networks, and cloud environments.

- Symantec DLP: Offers a range of DLP solutions for various deployment scenarios, including on-premises, cloud, and hybrid environments.

- Microsoft Purview Data Loss Prevention: A built-in DLP solution for Microsoft 365, which can protect sensitive data across various Microsoft applications and services.

Network Security and Segmentation Strategies

Implementing robust network security and segmentation is paramount in protecting cloud-based resources from malware and ransomware attacks. This involves strategically organizing the network to limit the blast radius of potential breaches, alongside the implementation of security tools designed to detect and prevent malicious activities. Effective network security and segmentation are crucial components of a comprehensive cloud security posture.

Creating a Network Segmentation Plan to Isolate Critical Cloud Resources

A well-defined network segmentation plan is essential for isolating critical cloud resources, thereby minimizing the impact of a security breach. This strategy involves dividing the cloud network into distinct segments, each with specific access controls and security policies.

- Identifying Critical Assets: Begin by identifying and classifying all cloud resources based on their sensitivity and criticality. This includes data, applications, and infrastructure components. For example, databases containing sensitive customer information should be classified as high-priority assets.

- Defining Network Segments: Create separate network segments for different types of resources. This could include segments for public-facing web servers, application servers, database servers, and administrative access.

- Implementing Access Controls: Define and enforce strict access controls between segments. This limits lateral movement within the network if a segment is compromised. Only authorized traffic should be allowed to pass between segments. For instance, only specific application servers should have access to the database segment.

- Utilizing Security Groups/Network ACLs: Employ security groups (in cloud environments like AWS) or network access control lists (ACLs) to control traffic flow within and between segments. These tools act as virtual firewalls, allowing or denying traffic based on predefined rules.

- Regularly Reviewing and Updating: Network segmentation plans must be regularly reviewed and updated to reflect changes in the cloud environment, such as the addition of new applications or data sources. This ensures that the segmentation remains effective and aligned with the organization’s security needs.
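A segmentation plan can be written down as data and checked automatically. The following sketch assigns each segment a CIDR block and lists the flows that are explicitly permitted between segments. Everything else is denied, including traffic within a segment and traffic from unknown addresses. The address ranges, segment names, and ports (such as 5432, PostgreSQL's default) are hypothetical. In a real cloud environment, these rules would be expressed as security groups or network ACLs.

```python
import ipaddress
from typing import Dict, Optional, Set, Tuple

# Hypothetical segment plan: one CIDR block per tier.
SEGMENTS: Dict[str, ipaddress.IPv4Network] = {
    "web": ipaddress.IPv4Network("10.0.1.0/24"),
    "app": ipaddress.IPv4Network("10.0.2.0/24"),
    "db": ipaddress.IPv4Network("10.0.3.0/24"),
    "admin": ipaddress.IPv4Network("10.0.9.0/24"),
}

# Explicitly permitted flows: (source segment, destination segment, destination port).
ALLOWED_FLOWS: Set[Tuple[str, str, int]] = {
    ("web", "app", 8443),  # web tier calls the application API
    ("app", "db", 5432),   # only the app tier reaches the database
    ("admin", "web", 22),  # administrative SSH from the admin segment
    ("admin", "app", 22),
}


def segment_of(ip: str) -> Optional[str]:
    """Map an address to its segment name, or None if it belongs to no segment."""
    address = ipaddress.IPv4Address(ip)
    for name, network in SEGMENTS.items():
        if address in network:
            return name
    return None


def flow_allowed(src_ip: str, dst_ip: str, dst_port: int) -> bool:
    """Default deny: only flows listed in ALLOWED_FLOWS are permitted."""
    src, dst = segment_of(src_ip), segment_of(dst_ip)
    if src is None or dst is None:
        return False
    return (src, dst, dst_port) in ALLOWED_FLOWS
```

Keeping the plan in a reviewable form like this makes the periodic review step easier. A compromised web server, for instance, has no path to the database segment unless someone deliberately adds one.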

Implementing Firewalls and Intrusion Detection/Prevention Systems (IDS/IPS)

Firewalls and Intrusion Detection/Prevention Systems (IDS/IPS) are vital for protecting cloud infrastructure. Firewalls control network traffic, while IDS/IPS detect and respond to malicious activities.

- Firewalls: Firewalls act as the first line of defense, controlling network traffic based on predefined rules. Cloud providers offer various firewall solutions.

- Cloud-Native Firewalls: Cloud providers like AWS (AWS Network Firewall), Azure (Azure Firewall), and Google Cloud (Cloud Firewall) offer native firewall services. These are designed to integrate seamlessly with the cloud environment.

- Next-Generation Firewalls (NGFWs): NGFWs offer advanced features like deep packet inspection, intrusion prevention, and application control. They can be deployed as virtual appliances in the cloud.

- Firewall Rule Configuration: Configure firewall rules to allow only necessary traffic. Block all other traffic by default. Regularly review and update firewall rules to adapt to evolving security threats.

- Intrusion Detection Systems (IDS): IDS monitor network traffic or host activity for suspicious behavior and generate alerts when threats are detected.

- Network-Based IDS (NIDS): NIDS analyze network traffic to identify malicious patterns. They are typically deployed in strategic locations within the network.

- Host-Based IDS (HIDS): HIDS monitor individual servers and endpoints for suspicious activities. They are installed directly on the systems they protect.

- IDS Alert Management: Implement a robust alert management system to handle IDS alerts. Prioritize and investigate alerts based on their severity.

- Intrusion Prevention Systems (IPS): IPS take proactive steps to block or mitigate malicious activities.

- IPS Integration: Integrate IPS with firewalls to automatically block malicious traffic.

- Signature-Based Detection: Use signature-based detection to identify known threats.

- Behavioral Analysis: Employ behavioral analysis to detect anomalies and zero-day attacks.
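The two detection approaches above can be contrasted in a toy example. Signature-based detection looks for known-bad byte patterns in packet payloads. A simple threshold heuristic, a crude form of behavioral analysis, flags one source contacting many distinct ports within a short window, which is typical of a port scan. The signatures, thresholds, and `Packet` structure are illustrative assumptions. Production IDS/IPS engines use maintained rule sets and much richer behavioral models.

```python
from collections import defaultdict, deque
from dataclasses import dataclass
from typing import Deque, Dict, List, Set, Tuple


@dataclass
class Packet:
    timestamp: float  # seconds
    src_ip: str
    dst_port: int
    payload: bytes


# Illustrative signatures: byte patterns seen in well-known attack traffic.
SIGNATURES: Dict[str, bytes] = {
    "path-traversal": b"../../",
    "log4shell-jndi-lookup": b"${jndi:",
}


def detect_threats(packets: List[Packet], scan_threshold: int = 20,
                   window_seconds: float = 10.0) -> List[Tuple[str, str, str]]:
    """Return (detection type, rule name, source IP) alerts."""
    alerts: List[Tuple[str, str, str]] = []
    recent: Dict[str, Deque[Tuple[float, int]]] = defaultdict(deque)
    flagged: Set[str] = set()
    for pkt in sorted(packets, key=lambda p: p.timestamp):
        # Signature-based detection: known-bad byte patterns in the payload.
        for name, pattern in SIGNATURES.items():
            if pattern in pkt.payload:
                alerts.append(("signature", name, pkt.src_ip))
        # Behavioral heuristic: one source touching many distinct ports quickly.
        history = recent[pkt.src_ip]
        history.append((pkt.timestamp, pkt.dst_port))
        while history and pkt.timestamp - history[0][0] > window_seconds:
            history.popleft()  # forget events older than the sliding window
        distinct_ports = {port for _, port in history}
        if len(distinct_ports) >= scan_threshold and pkt.src_ip not in flagged:
            flagged.add(pkt.src_ip)  # alert once per scanning source
            alerts.append(("behavioral", "port-scan", pkt.src_ip))
    return alerts
```

An IPS would act on these alerts, for example by pushing a temporary block rule for the offending source to the firewall, instead of only reporting them.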

Benefits of Using Virtual Private Clouds (VPCs) for Enhanced Security

Virtual Private Clouds (VPCs) provide a logically isolated section of a public cloud, offering enhanced security and control over network resources. VPCs allow organizations to create their own private networks within the public cloud infrastructure.

- Network Isolation: VPCs provide network isolation, separating cloud resources from other users of the public cloud. This isolation reduces the risk of unauthorized access and lateral movement.

- Customizable Network Configuration: VPCs offer a high degree of customization, allowing organizations to define their own IP address ranges, subnets, routing tables, and security groups. This flexibility enables the creation of tailored network architectures.

- Enhanced Security Controls: VPCs integrate with security tools and services, such as firewalls, intrusion detection systems, and access control lists. These tools can be used to create a robust security perimeter.

- Improved Compliance: VPCs support compliance with various regulatory requirements, such as HIPAA and PCI DSS. The ability to control network access and data flow is essential for meeting compliance standards.

- Data Encryption: Network isolation is not the same as encryption. To protect the confidentiality of sensitive data in transit, traffic between resources in a VPC should also be encrypted, for example with TLS.

- Examples and Use Cases:

- Multi-Tier Application Architecture: A VPC can be used to create a multi-tier application architecture, with separate subnets for web servers, application servers, and database servers. Each tier can be secured with its own security group and access controls.

- Secure Hybrid Cloud Connectivity: VPCs can be connected to on-premises networks via a site-to-site VPN or a dedicated private connection (such as AWS Direct Connect or Azure ExpressRoute), enabling secure hybrid cloud connectivity. This allows organizations to extend their private networks to the cloud.
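Python's standard `ipaddress` module is enough to sketch two planning tasks from these use cases. The first is carving a VPC range into one subnet per application tier. The second is confirming that the VPC range does not overlap on-premises networks before connecting them, since overlapping ranges complicate routing. The CIDR blocks and tier names are assumptions for illustration. Actual subnets, route tables, and security groups would be created with the cloud provider's tooling.

```python
import ipaddress
from itertools import islice
from typing import Dict, Iterable, List


def plan_subnets(vpc_cidr: str, tiers: List[str],
                 new_prefix: int = 24) -> Dict[str, ipaddress.IPv4Network]:
    """Carve one subnet per application tier out of the VPC address range."""
    vpc = ipaddress.IPv4Network(vpc_cidr)
    candidates = list(islice(vpc.subnets(new_prefix=new_prefix), len(tiers)))
    if len(candidates) < len(tiers):
        raise ValueError(f"{vpc_cidr} cannot hold {len(tiers)} /{new_prefix} subnets")
    return dict(zip(tiers, candidates))


def overlaps_any(cidr: str, other_cidrs: Iterable[str]) -> bool:
    """Hybrid connectivity check: does the VPC range collide with any other range?"""
    network = ipaddress.IPv4Network(cidr)
    return any(network.overlaps(ipaddress.IPv4Network(o)) for o in other_cidrs)
```

For example, `plan_subnets("10.0.0.0/16", ["web", "app", "db"])` assigns `10.0.0.0/24`, `10.0.1.0/24`, and `10.0.2.0/24` to the three tiers. Each of these subnets can then be given its own security group.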

Endpoint Detection and Response (EDR) in the Cloud

Endpoint Detection and Response (EDR) solutions are critical for cloud security, providing real-time threat detection and response capabilities across cloud workloads. Integrating EDR into a cloud environment enhances visibility into endpoint activities, enabling proactive identification and mitigation of malicious behavior. This proactive approach is essential for defending against evolving threats, including ransomware attacks.

Integrating EDR Solutions with Cloud Workloads

Integrating EDR with cloud workloads involves deploying EDR agents onto the virtual machines, containers, and other compute resources within the cloud environment. This process allows the EDR solution to monitor the activity of these resources, collect data, and detect potential threats. The integration process is usually straightforward, but it may vary depending on the cloud provider and the specific EDR solution.

- Agent Deployment: The initial step is deploying the EDR agent onto the target cloud workloads. Most EDR vendors offer pre-built agents compatible with various operating systems and cloud platforms. The deployment can often be automated using cloud-native deployment tools or scripting.

- Configuration: After deployment, the EDR agent needs to be configured to connect to the EDR platform, which is typically a cloud-based service. This configuration includes specifying the agent’s data collection settings, the types of events to monitor, and the frequency of data transmission.

- Data Collection and Analysis: The EDR agent collects data from the endpoint, including process activity, network connections, file system changes, and user behavior. This data is then transmitted to the EDR platform for analysis. The platform uses various techniques, such as behavioral analysis, machine learning, and threat intelligence feeds, to identify malicious activities.

- Integration with Cloud Services: EDR solutions can integrate with other cloud services, such as security information and event management (SIEM) systems and cloud-native security tools. This integration enables security teams to centralize their security operations and correlate threat data from multiple sources.

Configuring EDR Agents for Threat Detection and Response

Configuring EDR agents correctly is essential for effective threat detection and response. This involves defining policies and rules that specify what activities to monitor, how to respond to suspicious events, and how to alert security teams. The configuration should be tailored to the specific cloud environment and the organization’s security requirements.

- Policy Definition: Policies define the rules that the EDR agent uses to detect malicious activities. These policies can be based on various criteria, such as known malware signatures, suspicious process behavior, and network traffic patterns. The policies should be regularly updated to address new threats.

- Alerting and Notification: Configure the EDR agent to generate alerts and notifications when suspicious activities are detected. These alerts should be sent to the appropriate security personnel or systems for investigation and remediation.

- Response Actions: EDR agents can automatically take response actions when a threat is detected. These actions may include isolating the infected endpoint, terminating malicious processes, quarantining infected files, and blocking network connections. The response actions should be carefully configured to minimize disruption to business operations.

- Log Management and Reporting: EDR agents generate logs that record all detected events and response actions. These logs should be collected and stored for analysis and reporting. The reporting capabilities of the EDR solution should provide insights into the threat landscape and the effectiveness of the security measures.
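The configuration elements above can be modeled as data: detection policies, alerting, and graduated response actions. The following sketch is hypothetical and vendor-neutral. `EndpointEvent`, `DetectionRule`, and the action names are illustrative assumptions, since every EDR product has its own policy format. One rule matches a well-known ransomware precursor, deleting Windows volume shadow copies with `vssadmin`. Host isolation is reserved for high-severity detections so that false positives disrupt operations as little as possible.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class EndpointEvent:
    host: str
    event_type: str  # e.g. "process_start", "file_write", "net_connect"
    detail: str      # command line, file path, or destination


@dataclass
class DetectionRule:
    name: str
    event_type: str
    detail_contains: str  # matched case-insensitively
    severity: str         # "low", "medium", or "high"


# Graduated responses: only high-severity detections isolate the host.
RESPONSES: Dict[str, List[str]] = {
    "low": ["alert"],
    "medium": ["alert", "kill_process"],
    "high": ["alert", "kill_process", "isolate_host"],
}

# Hypothetical policy set.
RULES: List[DetectionRule] = [
    DetectionRule("shadow-copy-deletion", "process_start", "vssadmin delete shadows", "high"),
    DetectionRule("encoded-powershell", "process_start", "-EncodedCommand", "medium"),
]


def evaluate(event: EndpointEvent, rules: List[DetectionRule]) -> List[str]:
    """Return the ordered, de-duplicated response actions for an event."""
    actions: List[str] = []
    for rule in rules:
        if (event.event_type == rule.event_type
                and rule.detail_contains.lower() in event.detail.lower()):
            for action in RESPONSES[rule.severity]:
                if action not in actions:
                    actions.append(action)
    return actions
```

Every evaluated event and chosen action would normally also be written to the EDR log and forwarded to a SIEM, which supports the reporting and tuning described above.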

EDR’s Role in Preventing Ransomware Spread in the Cloud

EDR plays a crucial role in preventing the spread of ransomware in cloud environments by detecting and responding to ransomware attacks in their early stages. This proactive approach can limit the damage caused by the attack and prevent data loss.

- Early Detection: EDR solutions can detect ransomware attacks early by monitoring for suspicious activities associated with ransomware, such as file encryption, process injection, and network communication with command-and-control servers.

- Rapid Response: Upon detecting a ransomware attack, EDR can automatically take actions to contain the threat, such as isolating the infected endpoint, terminating malicious processes, and blocking network connections. This rapid response can prevent the ransomware from spreading to other systems.

- File System Monitoring: EDR solutions monitor file system activity, including file creation, modification, and deletion. This monitoring can detect the encryption of files by ransomware, allowing security teams to identify and respond to the attack quickly.

- Behavioral Analysis: EDR solutions use behavioral analysis to detect ransomware by identifying unusual patterns of activity, such as a sudden increase in file encryption or the execution of suspicious processes. This approach can detect ransomware even if it uses new or unknown techniques.

- Example (illustrative scenario): Suppose ransomware begins encrypting files on a group of cloud virtual machines. An EDR solution that monitors file activity can flag the burst of encryption within minutes and automatically isolate the affected virtual machines. This quick response prevents the ransomware from spreading to other systems, significantly reducing the impact of the attack and minimizing data loss.
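The file system monitoring and behavioral analysis described above can be approximated with a simple heuristic. Encrypted output looks like random data, close to 8 bits of Shannon entropy per byte, and ransomware writes such output to many files in a short time. The sketch below flags a burst of high-entropy writes within a sliding time window. The thresholds are assumptions to tune. Compressed formats such as ZIP or JPEG are also high-entropy, so real EDR products combine this signal with many others.

```python
import math
from collections import Counter, deque
from typing import Deque


def shannon_entropy(data: bytes) -> float:
    """Bits of entropy per byte: near 8.0 for encrypted or random data."""
    if not data:
        return 0.0
    total = len(data)
    return -sum((n / total) * math.log2(n / total) for n in Counter(data).values())


class HighEntropyWriteMonitor:
    """Flags bursts of high-entropy file writes on a single host."""

    def __init__(self, max_writes: int = 50, window_seconds: float = 10.0,
                 entropy_threshold: float = 7.5) -> None:
        self.max_writes = max_writes
        self.window_seconds = window_seconds
        self.entropy_threshold = entropy_threshold
        self._timestamps: Deque[float] = deque()

    def observe_write(self, timestamp: float, content: bytes) -> bool:
        """Record one write; return True when the burst threshold is reached."""
        if shannon_entropy(content) < self.entropy_threshold:
            return False  # ordinary, low-entropy write
        self._timestamps.append(timestamp)
        # Drop high-entropy writes that fall outside the sliding time window.
        while self._timestamps and timestamp - self._timestamps[0] > self.window_seconds:
            self._timestamps.popleft()
        return len(self._timestamps) >= self.max_writes
```

When `observe_write` returns True, an EDR agent would trigger response actions such as isolating the host and terminating the writing process.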

Backup and Disaster Recovery Planning

Data protection in the cloud necessitates robust backup and disaster recovery (DR) strategies. These plans are crucial for business continuity, ensuring data availability, and minimizing downtime in the face of unforeseen events such as malware attacks, system failures, or natural disasters. Effective planning and execution of backup and DR processes are essential components of a comprehensive cloud security posture.

Implementing Regular Data Backups in the Cloud

Implementing a consistent backup strategy is paramount for data resilience. This involves defining a schedule, selecting appropriate backup types, and choosing a secure storage location.

- Identify Critical Data: Determine which data is essential for business operations and prioritize its backup. This often includes databases, application configurations, and critical user files.

- Choose a Backup Type: Select a backup type that aligns with recovery time objectives (RTO) and recovery point objectives (RPO).

- Full Backups: Copies all selected data. Provides the simplest restore process but can be time-consuming.

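- Incremental Backups: Backs up only the data that has changed since the last backup (full or incremental). Faster than full backups, but a restore requires the last full backup plus every incremental taken since, applied in order.

- Differential Backups: Backs up only the data that has changed since the last full backup. A restore needs only the last full backup plus the latest differential, but each differential grows until the next full backup, which places this approach between full and incremental backups in both backup time and restore complexity.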

- Select a Backup Schedule: Establish a schedule that balances data protection needs with the impact on system performance. Consider the frequency of data changes and the acceptable data loss window. Daily, weekly, or even hourly backups might be appropriate, depending on the criticality of the data.

- Choose a Backup Storage Location: Select a secure and geographically diverse storage location for backups. This often involves using a separate cloud region or a different cloud provider to ensure resilience against regional outages. Consider using object storage services, which offer cost-effective and scalable storage.

- Implement Encryption: Encrypt backups both in transit and at rest to protect data confidentiality. Use strong encryption algorithms and manage encryption keys securely.

- Automate the Backup Process: Utilize cloud-native tools or third-party backup solutions to automate the backup process. Automation reduces the risk of human error and ensures consistent execution.

- Monitor Backup Jobs: Regularly monitor backup jobs to ensure they are completing successfully. Implement alerts to notify administrators of any failures or issues.
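The practical difference between the backup types shows up at restore time. The following Python sketch, built around a hypothetical `Backup` record, works out which backups must be applied to restore to a given point. It assumes each incremental holds changes since the previous backup of any type and each differential holds changes since the last full backup; real backup tools track this chain for you.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class Backup:
    taken_at: datetime
    kind: str  # "full", "incremental", or "differential"


def restore_chain(backups: list[Backup], target: datetime) -> list[Backup]:
    """Return, in apply order, the backups needed to restore to the latest point <= target.

    Assumes each incremental holds changes since the previous backup of any type,
    and each differential holds changes since the last full backup.
    """
    usable = sorted((b for b in backups if b.taken_at <= target), key=lambda b: b.taken_at)
    fulls = [b for b in usable if b.kind == "full"]
    if not fulls:
        raise ValueError("no full backup exists at or before the target time")
    chain = [fulls[-1]]  # every restore starts from the most recent full backup
    after_full = [b for b in usable if b.taken_at > chain[0].taken_at]
    differentials = [b for b in after_full if b.kind == "differential"]
    if differentials:
        chain.append(differentials[-1])  # the latest differential supersedes earlier ones
    last_applied = chain[-1].taken_at
    # Every incremental taken after the last applied backup must be replayed in order.
    chain.extend(b for b in after_full if b.kind == "incremental" and b.taken_at > last_applied)
    return chain


if __name__ == "__main__":
    history = [
        Backup(datetime(2024, 1, 7), "full"),
        Backup(datetime(2024, 1, 8), "incremental"),
        Backup(datetime(2024, 1, 9), "incremental"),
        Backup(datetime(2024, 1, 10), "incremental"),
    ]
    for b in restore_chain(history, target=datetime(2024, 1, 9, 12)):
        print(b.kind, b.taken_at.date())
```

A longer incremental chain means more pieces that must all be present and intact, which is one reason to test restores rather than only checking that backup jobs succeeded.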

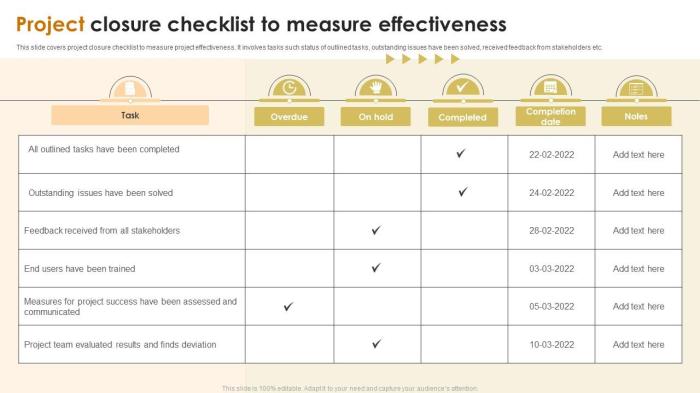

Testing and Validating Disaster Recovery Plans

Regularly testing and validating DR plans is crucial to ensure they are effective when needed. This involves simulating disaster scenarios and verifying the ability to restore data and applications within the defined RTOs.

- Define Recovery Objectives: Clearly define RTO and RPO for each critical application and dataset.

- Recovery Time Objective (RTO): The maximum acceptable time to restore a system after a disaster.

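- Recovery Point Objective (RPO): The maximum acceptable amount of data loss, expressed as time. For example, an RPO of one hour means the most recent recoverable copy must never be more than one hour old when a disaster strikes.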

- Develop a DR Plan: Document a detailed DR plan that outlines the steps required to recover systems and data. Include roles and responsibilities, communication protocols, and recovery procedures.

- Conduct Regular Testing: Perform regular DR tests to validate the plan. Test frequency should be determined by the criticality of the systems and data.

- Simulate Disaster Scenarios: Simulate various disaster scenarios, such as a ransomware attack, a server outage, or a regional outage.

- Restore Data and Applications: Restore data and applications from backups to a test environment or a secondary cloud region.

- Verify Functionality: Verify that restored applications and data are functional and meet performance requirements.

- Document Test Results: Document the results of each DR test, including any issues encountered and the steps taken to resolve them.

- Update the DR Plan: Update the DR plan based on the results of the tests and any changes to the IT environment.
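When documenting test results, it helps to record the achieved RTO and RPO as measured durations rather than impressions. A minimal Python sketch, assuming hypothetical field names and timestamps captured during the test:

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class DrTestResult:
    disaster_at: datetime          # when the simulated outage began
    last_good_backup_at: datetime  # newest backup that restored cleanly
    service_restored_at: datetime  # when restored functionality was verified


def evaluate_dr_test(result: DrTestResult, rto: timedelta, rpo: timedelta) -> dict:
    """Compare achieved recovery time and data-loss window against the objectives."""
    achieved_rpo = result.disaster_at - result.last_good_backup_at  # data lost, as time
    achieved_rto = result.service_restored_at - result.disaster_at  # downtime
    return {
        "achieved_rpo": achieved_rpo,
        "achieved_rto": achieved_rto,
        "rpo_met": achieved_rpo <= rpo,
        "rto_met": achieved_rto <= rto,
    }


if __name__ == "__main__":
    test = DrTestResult(
        disaster_at=datetime(2024, 3, 1, 2, 0),
        last_good_backup_at=datetime(2024, 3, 1, 1, 0),
        service_restored_at=datetime(2024, 3, 1, 5, 30),
    )
    print(evaluate_dr_test(test, rto=timedelta(hours=4), rpo=timedelta(hours=2)))
```

Using the newest backup that actually restored cleanly, rather than the newest backup taken, matters after a ransomware incident, where recent backups may contain encrypted data.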

Automating Backup and Recovery Processes to Minimize Downtime

Automation is key to minimizing downtime and ensuring rapid recovery in the event of a disaster. Automating backup and recovery processes reduces manual intervention, improves efficiency, and enhances reliability.

- Utilize Cloud-Native Tools: Leverage cloud-native backup and recovery tools, such as those offered by AWS, Azure, and Google Cloud, to automate backup processes. These tools often provide features like automated scheduling, policy-based backups, and one-click recovery.

- Implement Infrastructure as Code (IaC): Use IaC to automate the provisioning and configuration of backup infrastructure. This allows for consistent and repeatable deployments.

- Script Backup and Recovery Procedures: Script backup and recovery procedures to automate repetitive tasks and reduce the risk of human error.

- Automate Data Replication: Automate data replication to a secondary cloud region or storage location to ensure data availability in the event of a primary site failure.

- Implement Orchestration Tools: Use orchestration tools to automate complex recovery workflows. These tools can orchestrate the restoration of multiple systems and applications in a specific order.

- Monitor Automation Processes: Regularly monitor automated backup and recovery processes to ensure they are functioning correctly. Implement alerts to notify administrators of any issues.

- Test Automation Regularly: Regularly test the automated backup and recovery processes to validate their effectiveness.
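The "specific order" that orchestration tools enforce is a dependency ordering. Python's standard-library `graphlib` module (Python 3.9+) can illustrate it: each service is restored only after everything it depends on. The service names and dependency map below are hypothetical, and orchestration tools express the same idea in their own workflow formats.

```python
from graphlib import TopologicalSorter

# Hypothetical services, each mapped to the services it depends on.
DEPENDENCIES = {
    "network": set(),
    "identity": {"network"},
    "storage": {"network"},
    "database": {"identity", "storage"},
    "app-server": {"database"},
    "web-frontend": {"app-server"},
}


def recovery_waves(dependencies: dict[str, set[str]]) -> list[list[str]]:
    """Group services into waves; services within one wave can be restored in parallel.

    Raises graphlib.CycleError if the dependencies are circular.
    """
    sorter = TopologicalSorter(dependencies)
    sorter.prepare()
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        waves.append(ready)
        # A real runbook would restore and health-check each service here
        # before marking it done, so dependents never start against a broken base.
        sorter.done(*ready)
    return waves


if __name__ == "__main__":
    for number, wave in enumerate(recovery_waves(DEPENDENCIES), start=1):
        print(f"wave {number}: {', '.join(wave)}")
```

Grouping into waves rather than a single flat order lets independent services (here `identity` and `storage`) recover in parallel, which shortens the overall recovery time.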

Security Information and Event Management (SIEM) and Threat Monitoring

Implementing a robust Security Information and Event Management (SIEM) system is critical for proactive cloud security. SIEM solutions aggregate security logs from various sources, analyze them for threats, and provide actionable insights. This section will detail the design, configuration, and utilization of SIEM systems within a cloud environment, focusing on threat detection and incident response.

Designing a SIEM System for Cloud Resources

Designing a SIEM system requires careful planning to ensure comprehensive log collection, effective analysis, and timely incident response. The primary goal is to create a centralized repository of security-related events for monitoring and analysis.

- Identify Data Sources: Determine which cloud resources will generate security logs. These sources include:

- Virtual machines (VMs)

- Cloud storage services (e.g., Amazon S3, Azure Blob Storage)

- Network firewalls and intrusion detection/prevention systems (IDS/IPS)

- Identity and access management (IAM) systems

- Application logs

- Choose a SIEM Solution: Select a SIEM solution that is compatible with your cloud provider and security requirements. Consider factors such as scalability, integration capabilities, and cost. Options include:

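- Cloud-Native SIEM: Solutions provided by cloud providers (e.g., Microsoft Sentinel, formerly Azure Sentinel, and Google Security Operations, formerly Chronicle). On AWS, services such as Amazon Security Lake and AWS Security Hub centralize security data and findings and are often paired with a SIEM. Native options integrate closely with the provider's other services and can be cost-effective.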

- Third-Party SIEM: Solutions from vendors like Splunk, IBM QRadar, and others. These often provide more extensive features and customization options.

- Configure Log Collection: Configure the SIEM to collect logs from the identified data sources. This involves:

- Setting up agents or connectors to forward logs.

- Configuring log formats and parsing rules to ensure data consistency.

- Establishing secure communication channels for log transmission.

- Define Security Policies and Rules: Develop security policies and rules to detect suspicious activities. These rules should be based on:

- Industry frameworks that catalog attacker behavior (e.g., MITRE ATT&CK).

- Threat intelligence feeds.

- Internal security policies and compliance requirements.

- Implement Data Storage and Retention Policies: Determine how long security logs should be retained. Consider compliance requirements and business needs. Implement data storage solutions that are scalable and secure.

- Establish Alerting and Reporting Mechanisms: Configure the SIEM to generate alerts based on the defined security rules. Set up reporting mechanisms to provide insights into security events and trends.
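As an example of a simple detection rule, the sketch below flags repeated failed logins from one source IP, a common brute-force signal. It is plain Python over already-parsed events, not the query language of any particular SIEM. The event fields, the five-failures-in-five-minutes threshold, and the sample IPs (taken from ranges reserved for documentation) are assumptions.

```python
from collections import defaultdict, deque
from dataclasses import dataclass
from typing import Iterable, Iterator


@dataclass
class AuthEvent:
    timestamp: float  # seconds since epoch, after normalization
    source_ip: str
    user: str
    success: bool


def brute_force_alerts(events: Iterable[AuthEvent], threshold: int = 5,
                       window_seconds: float = 300.0) -> Iterator[tuple[str, float]]:
    """Yield (source_ip, timestamp) when one IP reaches `threshold` failed logins in the window."""
    failures: dict[str, deque] = defaultdict(deque)
    for event in sorted(events, key=lambda e: e.timestamp):
        if event.success:
            continue
        recent = failures[event.source_ip]
        recent.append(event.timestamp)
        # Discard failures that fall outside the sliding window.
        while event.timestamp - recent[0] > window_seconds:
            recent.popleft()
        if len(recent) >= threshold:
            yield event.source_ip, event.timestamp
            recent.clear()  # reset so one attack does not raise an alert per extra attempt


if __name__ == "__main__":
    sample = [AuthEvent(t * 10.0, "203.0.113.7", "admin", False) for t in range(6)]
    sample.append(AuthEvent(30.0, "198.51.100.2", "alice", False))
    for ip, when in brute_force_alerts(sample):
        print(f"ALERT possible brute force from {ip} at t={when}")
```

The same pattern (group by a key, count within a sliding window, compare to a threshold) underlies many SIEM correlation rules; only the key and the event type change.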

Configuring Threat Intelligence Feeds

Integrating threat intelligence feeds enhances a SIEM’s ability to detect malicious activities. Threat intelligence provides information about known threats, indicators of compromise (IOCs), and attacker tactics, techniques, and procedures (TTPs).

- Select Threat Intelligence Sources: Choose reputable threat intelligence feeds. Consider:

- Commercial Feeds: Provided by security vendors (e.g., Recorded Future, CrowdStrike, Mandiant, formerly part of FireEye).

- Open-Source Feeds: Maintained by the security community (e.g., AlienVault OTX, AbuseIPDB).

- Internal Intelligence: Based on your organization’s own security incidents and analysis.

- Integrate Threat Intelligence Feeds: Configure the SIEM to ingest data from selected threat intelligence feeds. This involves:

- API Integration: Use APIs to automatically fetch and update threat intelligence data.

- STIX/TAXII: Ingest feeds published in the Structured Threat Information Expression (STIX) format over the Trusted Automated Exchange of Intelligence Information (TAXII) protocol.

- Custom Parsers: Develop custom parsers to handle data from less common or proprietary feeds.

- Map Threat Intelligence to SIEM Rules: Create rules within the SIEM that correlate threat intelligence data with security logs. Examples include:

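- IP Address Blocklists: Flag traffic to or from known malicious IP addresses and, where the SIEM is integrated with firewalls or a SOAR platform, block it.

- Domain Blocklists: Detect communication with malicious domains and, through the same integrations, block it.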

- File Hash Matching: Identify files with known malicious hashes.

- User-Agent Analysis: Identify suspicious user-agent strings associated with malware.

- Regularly Update Threat Intelligence: Ensure that the SIEM regularly updates its threat intelligence data. Automated updates are essential to keep pace with evolving threats.

- Validate and Tune Rules: Regularly review and tune the SIEM rules to minimize false positives and ensure effective threat detection.
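Mapping threat intelligence to rules largely comes down to set lookups against parsed log fields. This Python sketch checks an event's destination IP, domain (including parent domains), and file SHA-256 hash against indicator sets. The `IocSet` container and the event keys are hypothetical; the `.example` domain and the 203.0.113.0/24 addresses are reserved for documentation.

```python
import hashlib
from dataclasses import dataclass, field


@dataclass
class IocSet:
    """Indicator sets; in practice these would be loaded from threat intelligence feeds."""
    ips: set[str] = field(default_factory=set)
    domains: set[str] = field(default_factory=set)
    sha256: set[str] = field(default_factory=set)  # lowercase hex digests


def domain_matches(domain: str, blocked: set[str]) -> bool:
    """True if the domain or any parent domain is on the blocklist."""
    labels = domain.lower().rstrip(".").split(".")
    return any(".".join(labels[i:]) in blocked for i in range(len(labels)))


def match_event(event: dict, iocs: IocSet) -> list[str]:
    """Return which indicator types a parsed log event matches."""
    hits = []
    if event.get("dst_ip") in iocs.ips:
        hits.append("ip")
    if event.get("domain") and domain_matches(event["domain"], iocs.domains):
        hits.append("domain")
    if event.get("file_bytes") is not None:
        if hashlib.sha256(event["file_bytes"]).hexdigest() in iocs.sha256:
            hits.append("file_hash")
    return hits


if __name__ == "__main__":
    sample_file = b"placeholder file contents"
    iocs = IocSet(
        ips={"203.0.113.50"},
        domains={"bad.example"},
        sha256={hashlib.sha256(sample_file).hexdigest()},
    )
    event = {"dst_ip": "203.0.113.50", "domain": "cdn.bad.example", "file_bytes": sample_file}
    print(match_event(event, iocs))  # ['ip', 'domain', 'file_hash']
```

Checking parent domains catches subdomains that attackers rotate through, while exact matching on labels avoids flagging unrelated names such as `notbad.example`.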

Using SIEM Alerts to Respond to Security Incidents

SIEM alerts are crucial for initiating and managing security incident response. Effective incident response relies on promptly investigating and mitigating security events.

- Establish an Incident Response Plan: Develop a detailed incident response plan that outlines the steps to be taken when a security incident is detected. The plan should include:

- Roles and Responsibilities: Clearly define who is responsible for different aspects of incident response.

- Communication Procedures: Establish communication channels and protocols.

- Escalation Procedures: Define when and how to escalate incidents.

- Prioritize Alerts: Prioritize SIEM alerts based on their severity and potential impact.

- High-Severity Alerts: Require immediate attention (e.g., ransomware detected, data breach).

- Medium-Severity Alerts: Require investigation and analysis (e.g., suspicious login attempts).

- Low-Severity Alerts: May be informational or require periodic review (e.g., unusual network traffic patterns).

- Investigate Alerts: Conduct a thorough investigation of each alert.

- Gather Context: Collect all available information about the alert, including the source, time, affected resources, and related events.

- Analyze Logs: Examine relevant logs to understand the scope and nature of the incident.

- Identify Root Cause: Determine the underlying cause of the security event.

- Contain the Incident: Take immediate steps to contain the incident and prevent further damage. Containment measures may include:

- Isolating Affected Systems: Disconnect infected systems from the network.

- Blocking Malicious Traffic: Implement firewall rules to block malicious traffic.

- Changing Passwords: Reset compromised credentials.

- Eradicate the Threat: Remove the threat from the environment. This may involve:

- Removing Malware: Scan and remove malware from infected systems.

- Patching Vulnerabilities: Apply security patches to address vulnerabilities.

- Restoring from Backup: Restore systems from a clean backup if necessary.

- Recover Affected Systems: Restore affected systems to their normal operational state.

- Verify System Integrity: Ensure that systems are clean and functioning correctly.

- Monitor Systems: Monitor systems for any signs of recurring activity.

- Document the Incident: Document all aspects of the incident, including the timeline, actions taken, and lessons learned. This documentation is critical for:

- Post-Incident Analysis: Identify areas for improvement in security posture and incident response processes.

- Compliance Requirements: Demonstrating that regulatory obligations, such as incident-reporting and record-keeping requirements, have been met.

- Continuously Improve Security Posture: Use the insights gained from incident response to improve security posture. This may include:

- Updating Security Policies: Revise security policies to address vulnerabilities.

- Enhancing SIEM Rules: Improve SIEM rules to detect similar incidents in the future.

- Providing Security Awareness Training: Educate employees on security best practices.
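The alert-prioritization step can be expressed as a sort key. This sketch assumes the three severity tiers described above plus a hypothetical `asset_critical` flag, and orders alerts by severity, then asset criticality, then age, so the oldest high-severity alert on a critical asset is handled first.

```python
from dataclasses import dataclass

# Lower rank = handled first; tiers follow the severity levels described above.
SEVERITY_RANK = {"high": 0, "medium": 1, "low": 2}


@dataclass
class Alert:
    alert_id: str
    severity: str                 # "high", "medium", or "low"
    timestamp: float              # seconds since epoch
    asset_critical: bool = False  # hypothetical flag for business-critical assets


def triage_queue(alerts: list[Alert]) -> list[Alert]:
    """Order alerts by severity, then critical assets first, then oldest first."""
    return sorted(
        alerts,
        key=lambda a: (SEVERITY_RANK[a.severity], not a.asset_critical, a.timestamp),
    )


if __name__ == "__main__":
    queue = triage_queue([
        Alert("A-1", "low", 100.0),
        Alert("A-2", "high", 500.0),
        Alert("A-3", "medium", 200.0, asset_critical=True),
        Alert("A-4", "high", 300.0, asset_critical=True),
    ])
    print([a.alert_id for a in queue])  # ['A-4', 'A-2', 'A-3', 'A-1']
```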

Cloud-Specific Security Tools and Services

Cloud environments offer a plethora of security tools and services designed to protect data and infrastructure. Understanding and utilizing these tools effectively is crucial for maintaining a strong security posture. This section explores cloud-specific security tools and services, comparing offerings from major cloud providers and explaining how to leverage them for enhanced security.

Comparing Cloud Provider Security Services

Cloud providers offer a range of security services that cater to various security needs, from threat detection to compliance monitoring. These services often integrate seamlessly with other cloud resources, simplifying security management.

- AWS Security Services: Amazon Web Services (AWS) provides a comprehensive suite of security services.

- Amazon GuardDuty: A threat detection service that continuously monitors for malicious activity and unauthorized behavior. GuardDuty analyzes data sources such as VPC Flow Logs, DNS logs, and CloudTrail event logs to identify potential threats, using machine learning, anomaly detection, and threat intelligence.

- Amazon Inspector: An automated vulnerability management service that continuously scans Amazon EC2 instances, container images in Amazon ECR, and AWS Lambda functions for known software vulnerabilities and unintended network exposure, and prioritizes its findings.

- AWS Security Hub: Provides a centralized view of security alerts and posture across AWS accounts. It aggregates findings from various security services and allows for automated remediation.

- AWS CloudTrail: A service that enables governance, compliance, operational auditing, and risk auditing of your AWS account. It records API calls and provides a history of actions taken.

- Azure Security Services: Microsoft Azure offers robust security solutions integrated into its cloud platform.

- Microsoft Defender for Cloud (formerly Azure Security Center): A unified security management platform that provides advanced threat protection across hybrid workloads. It offers security recommendations, threat detection, and vulnerability management.

- Microsoft Sentinel (formerly Azure Sentinel): A cloud-native security information and event management (SIEM) and security orchestration, automation, and response (SOAR) solution. It collects data from various sources, detects threats, and automates incident response.

- Defender plans (formerly Azure Defender): Optional advanced threat protection plans within Microsoft Defender for Cloud that protect virtual machines, SQL databases, storage accounts, and other Azure resources.

- Azure Policy: Helps enforce organizational standards and assess compliance at scale. It lets you create, assign, and manage policies that govern the resources in your Azure environment.

- GCP Security Services: Google Cloud Platform (GCP) offers powerful security tools designed to protect data and applications.

- Google Cloud Security Command Center (SCC): A centralized security and data risk platform that provides visibility, control, and threat detection across GCP. It aggregates findings from various security services and offers automated remediation capabilities.

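- Cloud Armor: A distributed denial-of-service (DDoS) protection and web application firewall (WAF) service. It filters traffic to applications served through Google Cloud load balancers, blocking volumetric attacks and common web exploits such as SQL injection and cross-site scripting.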

- Security Health Analytics: A built-in Security Command Center service that continuously scans GCP resources for misconfigurations and vulnerabilities, such as publicly accessible storage buckets or disabled audit logging.

- Cloud IAM (Identity and Access Management): Lets you grant granular access to specific Google Cloud resources, helping prevent unauthorized access.

Utilizing Cloud-Native Security Tools for Vulnerability Scanning and Penetration Testing

Cloud providers offer tools and services that enable organizations to perform vulnerability scanning and penetration testing. These tools help identify and remediate security weaknesses in cloud environments.

- Vulnerability Scanning: Cloud-native vulnerability scanners automatically identify vulnerabilities in cloud resources.

- Amazon Inspector is one example: it continuously scans workloads such as EC2 instances, container images, and Lambda functions for known vulnerabilities.

- Microsoft Defender for Cloud (formerly Azure Security Center) offers vulnerability assessment capabilities for virtual machines and SQL databases.

- GCP Security Command Center integrates with vulnerability scanning services to identify and remediate vulnerabilities.

- Penetration Testing: While penetration testing is generally allowed in cloud environments, it’s important to adhere to the provider’s acceptable use policies.

- AWS: Organizations can conduct penetration testing on their AWS infrastructure without prior approval for a published list of permitted services. Other activities, such as DDoS simulation tests, are subject to separate AWS policies and require advance approval.

- Azure: Microsoft allows penetration testing of Azure resources, subject to specific guidelines and requirements.

- GCP: Google permits penetration testing of GCP resources, but requires adherence to its penetration testing policies.

- Automation and Integration: These tools often integrate with automation frameworks, allowing organizations to automate vulnerability remediation and improve their security posture. For instance, you can configure automated patching or configuration changes based on the results of vulnerability scans.
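As a sketch of how scan results might drive automated remediation, the Python below routes findings into three queues using a CVSS threshold of 7.0, the lower bound of the "High" rating in CVSS v3. The `Finding` fields, queue names, and placeholder CVE identifiers are hypothetical; a real pipeline would read findings from the provider's scanner and hand the `auto_patch` queue to a patching service.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Finding:
    resource_id: str
    cve_id: str
    cvss: float          # CVSS v3 base score, 0.0 to 10.0
    fix_available: bool


def plan_remediation(findings: list[Finding], threshold: float = 7.0) -> dict:
    """Route findings into queues, grouped by resource.

    auto_patch: at or above the threshold with a vendor fix (candidates for automated patching)
    compensating_control: at or above the threshold with no fix yet (isolate, restrict, monitor)
    backlog: below the threshold, handled in normal maintenance windows
    """
    plan = {name: defaultdict(list) for name in ("auto_patch", "compensating_control", "backlog")}
    for f in findings:
        if f.cvss < threshold:
            queue = "backlog"
        elif f.fix_available:
            queue = "auto_patch"
        else:
            queue = "compensating_control"
        plan[queue][f.resource_id].append(f.cve_id)
    return {name: dict(groups) for name, groups in plan.items()}


if __name__ == "__main__":
    findings = [
        Finding("vm-web-01", "CVE-EXAMPLE-0001", 9.8, True),
        Finding("vm-web-01", "CVE-EXAMPLE-0002", 5.3, True),
        Finding("vm-db-01", "CVE-EXAMPLE-0003", 7.5, False),
    ]
    for queue, items in plan_remediation(findings).items():
        print(queue, items)
```

Separating "no fix available" from "fix available" keeps automation limited to changes that can actually be applied, while still surfacing serious exposures that need a manual workaround.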

Using Cloud Access Security Brokers (CASBs) to Monitor and Control Cloud Usage

Cloud Access Security Brokers (CASBs) are essential tools for monitoring and controlling cloud usage, providing visibility into cloud applications and data. They act as intermediaries between cloud users and cloud service providers.

- Functionality of CASBs: CASBs provide several key functions.

- Visibility: CASBs provide visibility into cloud application usage, including which applications are being used, who is using them, and how data is being accessed.

- Compliance: CASBs help organizations meet compliance requirements by monitoring and enforcing security policies.

- Data Loss Prevention (DLP): CASBs can identify and prevent sensitive data from being shared or leaked.

- Threat Protection: CASBs can detect and respond to threats, such as malware and insider threats.

- Access Control: CASBs can enforce access control policies, such as multi-factor authentication (MFA) and conditional access.

- Implementation and Deployment: CASBs can be deployed in different modes.

- API-based CASBs: These CASBs connect to cloud applications via APIs to collect data and enforce policies. Because they work out of band, they can inspect data already stored in sanctioned applications, but they act after the fact rather than inline and only cover services that expose suitable APIs.

- Reverse Proxy CASBs: These CASBs act as a reverse proxy, sitting between users and cloud applications. They enforce policies inline and provide granular control over user access without requiring configuration on user devices.

- Forward Proxy CASBs: These CASBs sit close to users and route their traffic to cloud applications. They offer inline controls similar to reverse proxy CASBs and can also see unsanctioned applications, but user devices must be configured (typically by IT, through an agent or proxy settings) to send traffic through the proxy.

- Benefits of Using CASBs: Implementing CASBs provides several benefits.

- Improved Security: CASBs enhance security by providing visibility, threat protection, and data loss prevention.

- Compliance: CASBs help organizations meet compliance requirements by enforcing security policies and monitoring cloud usage.

- Cost Optimization: CASBs can help optimize cloud spending by identifying unused applications and controlling data storage.

- Enhanced User Experience: CASBs can integrate with single sign-on (SSO) and multi-factor authentication (MFA) to provide a seamless user experience.
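Much of a CASB's data loss prevention capability rests on content inspection. A common technique for payment card numbers is a regular expression for candidate digit runs followed by the Luhn checksum, which discards most random digit strings. The minimal Python sketch below shows that technique in isolation; commercial CASBs use their own, more extensive detectors. The sample number is the widely published 4111 test card, not real data.

```python
import re

# Candidate card numbers: 13 to 19 ASCII digits, optionally separated by single spaces or hyphens.
CARD_CANDIDATE = re.compile(r"\b(?:\d[ -]?){12,18}\d\b", re.ASCII)


def luhn_valid(number: str) -> bool:
    """Check the Luhn (mod 10) checksum used by payment card numbers."""
    digits = [int(ch) for ch in number if ch in "0123456789"]
    total = 0
    # Starting from the rightmost digit, double every second digit;
    # if doubling gives a two-digit result, subtract 9 (same as adding its digits).
    for position, digit in enumerate(reversed(digits)):
        if position % 2 == 1:
            digit *= 2
            if digit > 9:
                digit -= 9
        total += digit
    return total % 10 == 0


def find_card_numbers(text: str) -> list[str]:
    """Return substrings that look like card numbers and pass the Luhn check."""
    return [m.group() for m in CARD_CANDIDATE.finditer(text) if luhn_valid(m.group())]


if __name__ == "__main__":
    message = "Invoice paid with 4111 1111 1111 1111; old card 4111-1111-1111-1112."
    print(find_card_numbers(message))  # ['4111 1111 1111 1111']
```

About one in ten random digit strings still passes a mod-10 check, so production rules usually add context, such as nearby keywords or the number of matches in one document, before blocking a share.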

User Education and Awareness Programs

Educating users is a crucial aspect of cloud security. A well-informed user base acts as the first line of defense against threats, mitigating risks associated with malware and ransomware. Implementing comprehensive user education and awareness programs significantly enhances an organization’s overall security posture.

Training Program for Cloud Users on Identifying and Avoiding Phishing Attacks

Phishing attacks are a primary method employed by cybercriminals to gain unauthorized access to cloud resources. A dedicated training program is essential to equip users with the knowledge and skills necessary to identify and avoid these attacks.

This program should include the following elements:

- Understanding Phishing Tactics: Users should be educated on the various types of phishing attacks, including email phishing, spear phishing, and whaling. Provide examples of common phishing emails, including those that mimic legitimate organizations like Microsoft, Google, or banks.

- Recognizing Suspicious Emails: Teach users how to identify suspicious characteristics in emails, such as:

- Incorrect sender email addresses or domain names.

- Poor grammar and spelling mistakes.

- Urgent or threatening language.

- Requests for personal information or credentials.

- Links to unfamiliar websites or shortened URLs.

- Analyzing Email Headers: Explain how to examine email headers to verify the sender’s authenticity. Demonstrate how to identify spoofed email addresses.

- Verifying Links and Attachments: Train users to hover over links to check the destination URL before clicking. Emphasize the importance of not opening attachments from unknown senders.

- Reporting Suspicious Emails: Provide clear instructions on how to report suspicious emails to the IT security team or designated reporting channels.

- Using Multi-Factor Authentication (MFA): Explain the importance of MFA and how it protects accounts even if credentials are compromised.

- Keeping Software Updated: Explain the importance of keeping software, including web browsers and operating systems, up to date to patch security vulnerabilities.
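The "hover over links" habit checks whether a link's real destination matches what it claims to be. The Python sketch below automates that comparison for training demos: it flags links whose actual host is neither the displayed host nor one of its subdomains, and links hidden behind URL shorteners. The shortener list is a hypothetical, deliberately short example, and this check does not catch lookalike domains (such as a misspelled brand) when the displayed text and link agree.

```python
from urllib.parse import urlparse

# Hypothetical, deliberately short list; real deployments would maintain a larger one.
URL_SHORTENERS = frozenset({"bit.ly", "tinyurl.com", "t.co"})


def hostname(url: str) -> str:
    """Extract the lowercase host name, adding a scheme if the text lacks one."""
    if "://" not in url:
        url = "https://" + url
    return (urlparse(url).hostname or "").lower()


def link_is_suspicious(display_text: str, href: str) -> bool:
    """Flag a link whose real destination differs from the address shown to the reader."""
    actual = hostname(href)
    if actual in URL_SHORTENERS:
        return True  # the real destination is hidden behind a shortener
    shown = display_text.strip()
    if " " in shown or "." not in shown:
        return False  # text such as "Click here" does not claim any address
    claimed = hostname(shown)
    # Accept the claimed host itself or any of its subdomains.
    return not (actual == claimed or actual.endswith("." + claimed))


if __name__ == "__main__":
    print(link_is_suspicious("www.microsoft.com",
                             "https://www.microsoft.com.account-verify.example/login"))  # True
    print(link_is_suspicious("microsoft.com", "https://login.microsoft.com/"))  # False
```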

Educating Users About Safe Cloud Practices and Data Handling

Beyond phishing, users need to understand general safe cloud practices to protect data and prevent security breaches. This education should cover various aspects of cloud usage.

Key areas of focus include:

- Strong Password Management: Emphasize the importance of creating strong, unique passwords for all cloud accounts. Encourage the use of password managers.

A strong password should be at least 12 characters long and include a mix of uppercase and lowercase letters, numbers, and symbols.

- Data Sensitivity and Classification: Educate users on the sensitivity levels of different data types and the appropriate handling procedures for each. This includes data classification policies that categorize information based on its confidentiality, integrity, and availability.

- Secure Data Storage and Sharing: Provide guidelines on securely storing and sharing data in the cloud. This includes using encrypted storage solutions and secure file-sharing platforms.

- Mobile Device Security: Educate users on securing mobile devices used to access cloud resources. This includes using strong passwords or biometrics, enabling remote wipe capabilities, and installing security software.

- Awareness of Public Wi-Fi Risks: Explain the risks of using public Wi-Fi and provide recommendations for secure browsing, such as using a VPN.

- Importance of Logging Out: Emphasize the importance of logging out of cloud accounts when finished using them, especially on shared devices.

- Regular Security Updates: Explain the importance of staying informed about the latest security threats and best practices.
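The password guidance above can be turned into a simple automated check, for example in a self-service password tool. The Python sketch below reports which of the stated requirements a password fails. Because composition rules alone accept predictable choices such as "Password123!", it also screens a blocklist, in line with NIST SP 800-63B's recommendation to compare passwords against lists of commonly used or compromised values; the blocklist shown is a hypothetical placeholder.

```python
import string


def password_problems(password: str, min_length: int = 12,
                      common_passwords: frozenset = frozenset()) -> list[str]:
    """Return unmet requirements from the password guidance; an empty list means compliant."""
    problems = []
    if len(password) < min_length:
        problems.append(f"shorter than {min_length} characters")
    if not any(c.islower() for c in password):
        problems.append("no lowercase letter")
    if not any(c.isupper() for c in password):
        problems.append("no uppercase letter")
    if not any(c.isdigit() for c in password):
        problems.append("no number")
    if not any(c in string.punctuation for c in password):
        problems.append("no symbol")
    # Composition rules alone pass many predictable passwords, so also screen a blocklist.
    if password.lower() in common_passwords:
        problems.append("appears on a common-password list")
    return problems


if __name__ == "__main__":
    blocklist = frozenset({"password123!"})  # hypothetical; use a real breached-password list
    for candidate in ["short1!A", "Password123!", "correct-Horse-battery-7"]:
        print(candidate, password_problems(candidate, common_passwords=blocklist))
```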

Simulated Phishing Exercises to Test User Awareness

Simulated phishing exercises are an effective way to test user awareness and identify vulnerabilities in the organization’s security posture. These exercises should be conducted regularly to reinforce training and assess the effectiveness of the security awareness program.

Key aspects of conducting simulated phishing exercises include:

- Planning and Design: Carefully plan the phishing campaigns, considering different attack scenarios and user groups. Create realistic phishing emails that mimic legitimate communications.

- Targeted Campaigns: Tailor phishing campaigns to specific user groups or departments based on their roles and responsibilities.

- Email Content: Craft emails that are believable and relevant to the users. Include realistic subject lines, sender addresses, and calls to action.

- Testing and Deployment: Deploy the phishing emails to the target users and monitor the results. Track the click-through rates, the number of users who provided credentials, and the number of reported phishing attempts.

- Reporting and Analysis: Analyze the results of the phishing exercises to identify areas where users are most vulnerable. Provide feedback to users who fell for the phishing attempts and offer additional training.

- Iteration and Improvement: Continuously refine the phishing exercises based on the results and evolving threat landscape. Use the findings to improve the security awareness program.

- Example: A common simulated phishing scenario involves an email claiming a password reset is required, directing the user to a fake login page. If a user enters their credentials, it indicates a need for additional training on identifying suspicious links and verifying website authenticity.
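Tracking the same metrics across campaigns shows whether training is working. A minimal Python sketch, with hypothetical field names and numbers, computing the rates mentioned above as fractions of delivered emails:

```python
from dataclasses import dataclass


@dataclass
class CampaignResults:
    delivered: int
    clicked: int
    submitted_credentials: int
    reported: int


def campaign_rates(r: CampaignResults) -> dict[str, float]:
    """Compute simulated-phishing metrics as fractions of delivered emails."""
    if r.delivered <= 0:
        raise ValueError("campaign delivered no emails")
    return {
        "click_rate": r.clicked / r.delivered,
        "credential_rate": r.submitted_credentials / r.delivered,
        "report_rate": r.reported / r.delivered,
        # Of the users who clicked, the share who went on to enter credentials.
        "clickers_who_submitted": r.submitted_credentials / r.clicked if r.clicked else 0.0,
    }


if __name__ == "__main__":
    # Hypothetical numbers for one campaign.
    rates = campaign_rates(CampaignResults(delivered=200, clicked=30, submitted_credentials=12, reported=50))
    for name, value in rates.items():
        print(f"{name}: {value:.0%}")
```

Over successive campaigns, a falling click rate together with a rising report rate is generally the trend a security awareness program aims for.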

Final Summary

In conclusion, protecting against malware and ransomware in the cloud requires a multifaceted approach that combines technical safeguards, proactive monitoring, and a well-informed user base. By implementing the strategies outlined in this guide, organizations can significantly reduce their risk exposure, safeguard their data, and maintain a resilient cloud infrastructure. Remember that cloud security is an ongoing process, demanding constant vigilance and adaptation to stay ahead of evolving threats.

Embracing these practices will help protect your current cloud assets and lay a secure foundation for the future.

FAQ Insights

What is the most common entry point for malware in the cloud?

Phishing attacks and compromised credentials are among the most prevalent entry points. Attackers often exploit weak passwords or trick users into revealing their login information, granting them unauthorized access to cloud resources.

How often should I back up my cloud data?

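Backup frequency is driven mainly by your Recovery Point Objective (RPO): if you can tolerate losing at most four hours of data, you need a backup or replica at least every four hours. Your Recovery Time Objective (RTO) instead determines how quickly those backups must be restorable. As a general starting point, back up data daily, or more frequently for critical data, and test the backups regularly.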

What is the role of a Cloud Access Security Broker (CASB)?

A CASB acts as a gatekeeper between your users, whether on premises or remote, and your cloud applications. It provides visibility into cloud usage, enforces security policies, and protects sensitive data by monitoring and controlling cloud access.

Are free cloud security tools sufficient for protection?

While free tools can offer basic security, they often lack the advanced features and comprehensive protection of paid solutions. It’s important to assess your specific security needs and choose tools that provide the appropriate level of protection.

How can I test my cloud security posture?

Regularly conduct vulnerability scans, penetration testing, and security audits. These practices help identify weaknesses in your cloud environment and ensure that your security measures are effective.