Understanding how to prioritize workloads for optimization is crucial for ensuring efficient system performance and maximizing resource utilization. In today’s dynamic digital landscape, where various processes compete for limited resources, effectively managing workloads is paramount for maintaining responsiveness, reliability, and overall system health.

This guide provides a comprehensive overview of the principles, strategies, and techniques essential for prioritizing workloads. From understanding fundamental concepts to implementing advanced prioritization schemes, this resource equips you with the knowledge and tools to optimize your systems and achieve peak performance. We will explore the intricacies of workload types, resource consumption, dependencies, and the critical role of scheduling algorithms, empowering you to make informed decisions and build robust, efficient systems.

Understanding Workload Prioritization Fundamentals

Effective workload prioritization is a cornerstone of system optimization, ensuring that critical tasks receive the necessary resources to perform efficiently. This involves understanding the different types of workloads, their characteristics, and the metrics used to evaluate their performance. Prioritizing workloads allows for better resource allocation, reduced latency, and improved overall system responsiveness.

Core Principles of Workload Prioritization

The core principles underpinning workload prioritization are based on the concept of allocating resources strategically to meet specific business objectives. These principles guide how tasks are ranked and scheduled, directly influencing system performance.

- Business Value Alignment: Workload prioritization should directly align with the organization’s strategic goals. Tasks contributing most to revenue generation, customer satisfaction, or regulatory compliance should receive higher priority.

- Resource Allocation: Prioritization dictates how available resources, such as CPU, memory, and I/O, are distributed among competing workloads. Effective allocation minimizes contention and maximizes overall system throughput.

- Service Level Agreements (SLAs): Adherence to SLAs is a crucial aspect of prioritization. Workloads governed by stringent SLAs (e.g., real-time applications) must be prioritized to ensure timely completion and avoid penalties.

- Dynamic Prioritization: The prioritization scheme should be adaptable and capable of adjusting to changing conditions. This includes responding to fluctuations in workload demands, hardware failures, or evolving business priorities.

- Transparency and Monitoring: The prioritization strategy should be transparent, with clear rules and policies. Continuous monitoring of workload performance is essential to identify bottlenecks and fine-tune the prioritization scheme.

Different Workload Types and Their Characteristics

Understanding the characteristics of different workload types is fundamental to effective prioritization. Each type has unique resource requirements and performance profiles.

- Batch Workloads: These are typically non-interactive tasks that process large datasets. They are often characterized by high CPU and I/O usage and can be less time-sensitive. Examples include data warehousing, report generation, and nightly backups. Prioritization strategies might focus on throughput rather than immediate response times.

- Interactive Workloads: These workloads involve user interaction and demand rapid response times. Individual requests are typically short, with modest CPU work per request and frequent small I/O operations, so responsiveness matters more than raw throughput. Examples include web applications, online transaction processing (OLTP) systems, and desktop applications. Prioritization aims to minimize latency and ensure a positive user experience.

- Real-time Workloads: These workloads require strict deadlines and deterministic behavior. They are time-critical and must meet stringent performance requirements. Examples include financial trading systems, industrial control systems, and medical devices. Prioritization is paramount to prevent data loss or system failures.

- Database Workloads: Database workloads are often a mix of read and write operations. Their characteristics depend on the database type, data volume, and query complexity. Prioritization involves optimizing query performance, managing contention, and ensuring data integrity.

- Network Workloads: Network workloads involve data transmission and processing. They are characterized by bandwidth usage, latency, and packet loss. Prioritization focuses on managing network congestion, ensuring quality of service (QoS), and providing sufficient bandwidth for critical applications.

Common Metrics Used to Measure Workload Performance and Identify Bottlenecks

Measuring workload performance requires the use of appropriate metrics to identify bottlenecks and areas for optimization. These metrics provide insights into system behavior and enable informed decision-making.

- Response Time/Latency: This measures the time it takes for a workload to complete a task or respond to a request. Low latency is critical for interactive and real-time workloads.

- Throughput: This measures the amount of work completed per unit of time. It is often expressed in transactions per second (TPS), operations per second (OPS), or other relevant units. High throughput is essential for batch workloads.

- CPU Utilization: This measures the percentage of time the CPU is actively processing tasks. High CPU utilization can indicate a bottleneck, especially if accompanied by high response times.

- Memory Utilization: This measures the amount of memory being used by workloads. Insufficient memory can lead to swapping, which significantly degrades performance.

- I/O Operations per Second (IOPS): This measures the number of input/output operations performed per second. High IOPS requirements can stress storage systems, causing bottlenecks.

- Disk Queue Length: This measures the number of I/O requests waiting to be processed by the storage system. A long queue length indicates a storage bottleneck.

- Network Latency: This measures the time it takes for data to travel between two points on a network. High network latency can impact application performance.

- Network Bandwidth Utilization: This measures the amount of network bandwidth being used. High bandwidth utilization can lead to congestion and performance degradation.
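To make the latency and throughput definitions concrete, here is a minimal Python sketch that summarizes request records. It assumes each request is logged as a `(start_ms, end_ms)` pair inside a known observation window. The function names and the sample data are hypothetical. It reports percentiles alongside the mean because a single slow request can inflate the mean while leaving the median untouched.

```python
import math
from statistics import mean

def percentile(values, pct):
    """Nearest-rank percentile: the smallest sample with at least pct% of samples <= it."""
    if not values:
        raise ValueError("percentile of an empty sample set")
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]

def summarize(requests_ms, window_ms):
    """Summarize (start_ms, end_ms) request records observed during a window of window_ms."""
    latencies = [end - start for start, end in requests_ms]
    return {
        "throughput_rps": len(requests_ms) / (window_ms / 1000),
        "mean_latency_ms": mean(latencies),
        "p50_latency_ms": percentile(latencies, 50),
        "p95_latency_ms": percentile(latencies, 95),
    }

# Hypothetical sample: 10 requests in a 2-second window, one of them very slow.
latencies = [20, 25, 30, 22, 28, 24, 26, 21, 27, 400]
requests = [(i * 200, i * 200 + lat) for i, lat in enumerate(latencies)]
stats = summarize(requests, window_ms=2000)
print(stats)  # throughput 5.0 req/s; mean 62.3 ms, but p50 is 25 ms and p95 is 400 ms
```

Tail percentiles such as p95 or p99 usually make a better basis for latency targets than the mean, because they expose the slow requests that users actually notice.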

Identifying Workload Dependencies

Understanding workload dependencies is crucial for effective optimization. Dependencies determine the order in which workloads must be executed and how they interact with each other. Identifying these relationships allows for the creation of more efficient execution plans and helps prevent bottlenecks.

Methods for Identifying Workload Dependencies

Several methods exist to uncover the dependencies between different workloads. These methods range from manual analysis to automated tools. Each approach offers different levels of detail and effort.

- Analyzing Application Code and Documentation: Examining the source code, configuration files, and associated documentation of each workload can reveal explicit dependencies. This includes identifying which workloads read data from or write data to shared resources, such as databases, files, or message queues. Documentation, such as system architecture diagrams or data flow diagrams, can also be invaluable.

- Monitoring System Logs and Metrics: System logs and performance metrics provide insights into how workloads interact during execution. Analyzing these data points can reveal implicit dependencies, such as when one workload consistently waits for another to complete. Tools for monitoring and analysis, such as performance monitoring software or log aggregation systems, are often essential.

- Employing Dependency Tracking Tools: Specialized tools can automatically analyze code, network traffic, and system behavior to identify dependencies. These tools often generate dependency graphs that visually represent the relationships between workloads. Examples include static code analysis tools and dynamic tracing tools.

- Conducting Interviews and Workshops: Engaging with the teams responsible for each workload can provide valuable insights into dependencies that might not be readily apparent from code or logs. Workshops and interviews facilitate knowledge sharing and help to clarify complex interactions.

Visualizing Workload Dependencies

Visualizing workload dependencies significantly aids in understanding and managing complex systems. Different visualization techniques are available, each offering unique advantages in terms of clarity and detail.

- Flowcharts: Flowcharts use a standardized set of symbols to represent the steps in a process, including workloads and their interactions. They are effective for illustrating the sequence of execution and the decision points that influence workload flow. They provide a high-level overview, suitable for conveying the basic dependencies.

- Dependency Diagrams: Dependency diagrams, also known as directed graphs, represent workloads as nodes and dependencies as edges. The direction of the edge indicates the direction of the dependency (e.g., workload A depends on workload B). These diagrams are excellent for showing complex relationships and potential bottlenecks.

- Gantt Charts: Gantt charts are used to visualize the schedule of workloads over time. They can be adapted to show dependencies by linking the start and end times of related workloads. This helps in understanding the timing of dependencies and potential concurrency issues.

- Matrices: Dependency matrices represent workloads in both rows and columns. The intersection of a row and column indicates the nature of the relationship between the corresponding workloads (e.g., read, write, or no dependency). They are particularly useful for quickly identifying which workloads interact with each other.
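A dependency diagram is a directed graph, so it can also be kept as data: rendered for people and checked by programs. The minimal sketch below uses Python's standard-library `graphlib` (Python 3.9+). It stores dependencies as a dictionary and emits Graphviz DOT text using the same edge convention as above, where A -> B means "A depends on B". It also derives a valid execution order and fails loudly on circular dependencies. The workload names are hypothetical.

```python
from graphlib import TopologicalSorter, CycleError

# Hypothetical workloads: each key depends on the workloads in its set.
dependencies = {
    "ingest": set(),
    "clean": {"ingest"},
    "aggregate": {"clean"},
    "ml_features": {"clean"},
    "report": {"aggregate"},
    "dashboard": {"report", "ml_features"},
}

def to_dot(deps):
    """Render a Graphviz DOT digraph; an edge A -> B means 'A depends on B'."""
    lines = ["digraph workloads {"]
    for node in sorted(deps):
        for prereq in sorted(deps[node]):
            lines.append(f'  "{node}" -> "{prereq}";')
    lines.append("}")
    return "\n".join(lines)

def execution_order(deps):
    """Return an order in which every workload comes after all of its prerequisites.

    Raises graphlib.CycleError if the dependencies are circular.
    """
    return list(TopologicalSorter(deps).static_order())

print(to_dot(dependencies))
print(execution_order(dependencies))

try:
    execution_order({"a": {"b"}, "b": {"a"}})
except CycleError as err:
    print("circular dependency:", err.args[1])  # args[1] lists the nodes in the cycle
```

The DOT output can be rendered with Graphviz (for example, `dot -Tpng`) to produce the diagram itself.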

Impact of Dependencies on System Performance and Optimization Strategies

Workload dependencies significantly influence system performance and dictate the strategies that can be employed for optimization. Understanding these impacts is essential for achieving optimal system efficiency.

- Increased Latency: Dependencies can introduce latency as one workload must wait for another to complete. This is particularly true for sequential dependencies, where workloads must execute in a specific order.

- Resource Contention: Workloads that share resources, such as databases or network bandwidth, can create contention, leading to performance degradation. Dependencies exacerbate this issue, as workloads may compete for the same resources at the same time.

- Bottleneck Identification: Dependencies help to identify bottlenecks in the system. By analyzing the dependencies and performance characteristics of each workload, it is possible to pinpoint the areas where optimization efforts will yield the greatest results.

- Optimization Strategies: Several optimization strategies can be employed to mitigate the negative impacts of dependencies. These strategies include:

  - Parallelization: Workloads that do not depend on each other can be executed in parallel to reduce overall execution time.

  - Caching: Caching frequently accessed data can reduce the load on dependent workloads.

  - Resource Allocation: Efficient resource allocation can help to minimize contention and improve performance.

  - Dependency Removal/Reduction: Restructuring workloads to reduce dependencies or remove unnecessary ones can improve performance.
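The sketch below shows how a dependency graph can guide two of these strategies. It assumes hypothetical workloads with estimated durations. `parallel_waves` groups workloads whose prerequisites are complete, so each group can run in parallel. `critical_path` finds the longest dependency chain, which bounds the total run time even with unlimited parallelism. That chain is where caching or dependency reduction pays off most. Both helpers are illustrative and use Python's standard `graphlib`.

```python
from graphlib import TopologicalSorter

# Hypothetical workloads: name -> (estimated duration in minutes, set of prerequisites)
workloads = {
    "ingest": (10, set()),
    "clean": (5, {"ingest"}),
    "aggregate": (20, {"clean"}),
    "ml_features": (15, {"clean"}),
    "report": (5, {"aggregate"}),
    "dashboard": (2, {"report", "ml_features"}),
}

def _graph(wl):
    return {name: deps for name, (_, deps) in wl.items()}

def parallel_waves(wl):
    """Group workloads into waves; each wave only needs workloads from earlier waves."""
    sorter = TopologicalSorter(_graph(wl))
    sorter.prepare()
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        waves.append(ready)
        sorter.done(*ready)  # mark the whole wave finished before releasing the next
    return waves

def critical_path(wl):
    """Return (length, chain) of the longest dependency chain by total duration."""
    finish, via = {}, {}
    for name in TopologicalSorter(_graph(wl)).static_order():
        duration, deps = wl[name]
        # A workload can start only after its slowest prerequisite has finished.
        via[name] = max(deps, key=finish.get) if deps else None
        start = finish[via[name]] if deps else 0
        finish[name] = start + duration
    node = max(finish, key=finish.get)
    length, chain = finish[node], []
    while node is not None:
        chain.append(node)
        node = via[node]
    return length, chain[::-1]

print(parallel_waves(workloads))
# [['ingest'], ['clean'], ['aggregate', 'ml_features'], ['report'], ['dashboard']]
print(critical_path(workloads))
# (42, ['ingest', 'clean', 'aggregate', 'report', 'dashboard'])
print(sum(duration for duration, _ in workloads.values()))  # 57 if run one after another
```

Waves are a simplification. A real scheduler can start each workload as soon as its own prerequisites finish, so it can approach the 42-minute critical-path bound rather than the 57 minutes of fully sequential execution.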

Assessing Workload Resource Consumption

Understanding and quantifying the resource consumption of your workloads is crucial for effective prioritization. This involves meticulously identifying the resources each workload utilizes and then implementing strategies to monitor and categorize them based on their demands. This process allows for informed decisions about optimization efforts, ensuring that resources are allocated efficiently and that performance bottlenecks are addressed proactively.

Identifying Key Resources

Workloads consume various resources, and understanding which resources are most critical for each workload is the first step in effective resource management. This identification process enables a targeted approach to optimization. The primary resources to consider include:

- CPU (Central Processing Unit): The CPU is the “brain” of the system, responsible for executing instructions. Workloads that are CPU-bound spend a significant amount of time processing data and performing calculations. High CPU utilization can indicate a need for code optimization, more powerful hardware, or distributing the workload across multiple CPUs or servers.

- Memory (RAM – Random Access Memory): Memory stores data and instructions that the CPU needs to access quickly. Memory-intensive workloads often involve large datasets or complex data structures. Insufficient memory can lead to performance degradation due to swapping (using hard drive space as virtual memory), which is significantly slower than RAM.

- I/O (Input/Output): I/O operations involve reading from and writing to storage devices (hard drives, SSDs) or network interfaces. I/O-bound workloads spend a lot of time waiting for data to be read or written. Bottlenecks in I/O can be addressed by optimizing storage configuration, using faster storage devices (e.g., SSDs instead of HDDs), or caching frequently accessed data.

- Network: Network resources encompass bandwidth, latency, and packet loss. Network-bound workloads are those that rely heavily on data transfer over a network. Network congestion or high latency can significantly impact performance. Optimization strategies include optimizing network configurations, load balancing, and caching.
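One quick way to tell whether a piece of work is CPU-bound or mostly waiting on I/O is to compare the CPU time it consumes with the wall-clock time it takes. The Python sketch below does this with the standard `time.process_time()` and `time.perf_counter()` clocks. It assumes a single-threaded workload, because with several busy threads the ratio can exceed 1.0. The 0.8 and 0.2 cut-offs are illustrative, not standard values, and `time.sleep` stands in for a blocking disk or network call.

```python
import time

def cpu_to_wall_ratio(fn, *args):
    """Run fn(*args); return CPU seconds used by this process divided by elapsed seconds."""
    wall_start = time.perf_counter()
    cpu_start = time.process_time()
    fn(*args)
    cpu = time.process_time() - cpu_start
    wall = time.perf_counter() - wall_start
    return cpu / wall if wall > 0 else 0.0

def classify(ratio, cpu_bound_at=0.8, wait_bound_at=0.2):
    """Illustrative thresholds: near 1.0 = mostly computing, near 0.0 = mostly waiting."""
    if ratio >= cpu_bound_at:
        return "CPU-bound"
    if ratio <= wait_bound_at:
        return "I/O- or wait-bound"
    return "mixed"

def busy_work(n):
    return sum(i * i for i in range(n))  # pure computation, no waiting

def simulated_io(seconds):
    time.sleep(seconds)  # stand-in for a blocking disk or network call

print(classify(cpu_to_wall_ratio(busy_work, 2_000_000)))
print(classify(cpu_to_wall_ratio(simulated_io, 0.2)))
```

On an otherwise idle machine, the first call typically reports CPU-bound and the second I/O- or wait-bound. On a heavily loaded host, CPU contention lowers the ratio for compute work too, so the measurement is best taken under representative conditions.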

Tools and Techniques for Monitoring Resource Usage in Real-Time

Real-time monitoring is essential for understanding workload behavior and identifying performance issues as they occur. Several tools and techniques can be employed to gain insights into resource consumption patterns. Common monitoring tools include:

- Operating System Monitoring Tools: Operating systems provide built-in tools for monitoring resource usage.

  - Linux: Tools like `top`, `htop`, `vmstat`, `iostat`, and `netstat` offer real-time views of CPU, memory, I/O, and network usage. `top` provides a dynamic real-time view of running processes and their resource consumption, including CPU and memory usage. `htop` (usually installed separately) offers a more user-friendly, interactive interface. `vmstat` provides information about virtual memory, CPU, and I/O statistics. `iostat` is used for monitoring disk I/O performance. `netstat` is used to monitor network connections and statistics.

  - Windows: Task Manager, Performance Monitor, and Resource Monitor provide detailed information about CPU, memory, disk, and network usage. Performance Monitor allows for the collection and analysis of performance data over time. Resource Monitor offers real-time insights into resource usage by processes.

- Application Performance Monitoring (APM) Tools: APM tools provide deeper insights into application performance, including resource consumption at the code level.

  - Examples include Dynatrace, AppDynamics, New Relic, and Datadog. These tools can identify performance bottlenecks within applications, such as slow database queries or inefficient code execution. They often offer features like distributed tracing, which helps track requests as they move through different services.

- Cloud Provider Monitoring Tools: Cloud platforms like AWS (Amazon Web Services), Azure (Microsoft Azure), and GCP (Google Cloud Platform) offer their own monitoring services.

  - AWS CloudWatch, Azure Monitor, and Google Cloud Monitoring provide detailed metrics and dashboards for monitoring resources used by cloud-based workloads. These tools integrate with other cloud services and provide features like alerting and automated scaling.

Techniques for effective monitoring:

- Setting Baselines: Establishing baseline performance metrics under normal operating conditions is crucial. This allows for easy identification of deviations that might indicate a performance issue.

- Establishing Alerts: Configure alerts to be triggered when resource utilization exceeds predefined thresholds. This enables proactive identification and resolution of performance issues. For example, an alert can be triggered when CPU utilization exceeds 90% for more than 5 minutes.

- Analyzing Historical Data: Regularly review historical performance data to identify trends and patterns. This can help predict future resource needs and proactively address potential bottlenecks.

- Using Dashboards: Create dashboards to visualize key performance metrics in real-time. This allows for quick identification of performance issues and facilitates data-driven decision-making.
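The baseline and alerting techniques can be sketched in a few lines of Python. This is a minimal illustration, not any monitoring product's API. `baseline`, `deviates`, and `SustainedThresholdAlert` are hypothetical helpers, the 3-standard-deviation cut-off is a common heuristic rather than a rule, and the readings are made up. Requiring a breach to persist avoids alerting on a brief spike.

```python
from statistics import mean, stdev

def baseline(samples):
    """Summarize samples collected under normal load as (mean, sample standard deviation)."""
    return mean(samples), stdev(samples)

def deviates(value, base, k=3.0):
    """True if value is more than k standard deviations above the baseline mean."""
    mu, sigma = base
    return value > mu + k * sigma

class SustainedThresholdAlert:
    """Fire only while a metric has stayed above `threshold` for more than `duration_s` seconds."""

    def __init__(self, threshold, duration_s):
        self.threshold = threshold
        self.duration_s = duration_s
        self.breach_started = None  # timestamp of the first sample in the current breach

    def observe(self, timestamp_s, value):
        if value <= self.threshold:
            self.breach_started = None  # any normal sample resets the timer
            return False
        if self.breach_started is None:
            self.breach_started = timestamp_s
        return timestamp_s - self.breach_started > self.duration_s

# Hypothetical CPU utilization (%) under normal load, used as the baseline.
normal = [40, 42, 38, 45, 41, 39, 44, 43]
base = baseline(normal)
print(deviates(60, base), deviates(45, base))  # True False

# "CPU above 90% for more than 5 minutes", sampled once per minute.
alert = SustainedThresholdAlert(threshold=90.0, duration_s=300)
readings = [85, 95, 96, 97, 95, 99, 94, 93, 92]
fired_at = [t for t, v in zip(range(0, 540, 60), readings) if alert.observe(t, v)]
print(fired_at)  # [420, 480]
```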

Designing a System for Categorizing Workloads Based on Resource Demands

Categorizing workloads based on their resource demands allows for more effective prioritization and optimization. This system helps to understand which workloads are most resource-intensive and where optimization efforts should be focused. The system for categorizing workloads can be structured using the following steps:

- Define Resource Consumption Metrics: Determine the key metrics to track for each resource. These metrics should be relevant to the workload’s behavior. Examples include CPU utilization percentage, memory usage (in GB), I/O operations per second (IOPS), and network bandwidth usage (in Mbps).

- Establish Thresholds: Define thresholds for each metric. These thresholds can be used to categorize workloads based on their resource consumption levels. For example:

  - CPU Utilization:

    - Low: < 50%

    - Medium: 50% – 80%

    - High: > 80%

  - Memory Usage:

    - Low: < 2 GB

    - Medium: 2 GB – 8 GB

    - High: > 8 GB

- Categorize Workloads: Based on the defined metrics and thresholds, categorize each workload into different tiers or categories. A simple categorization system could use three tiers: Low, Medium, and High. For example:

  - Low: Workloads with low CPU, memory, I/O, and network utilization.

  - Medium: Workloads with moderate resource consumption.

  - High: Workloads with high resource consumption, potentially causing performance bottlenecks.

- Implement Automated Categorization: Automate the categorization process by integrating monitoring tools and scripting. This ensures that workloads are automatically categorized based on real-time resource consumption data. For instance, a script could be used to query monitoring tools for metrics and update the workload categorization in a database or a configuration management system.

- Regularly Review and Refine: The categorization system should be reviewed and refined regularly. This ensures that the thresholds and categories are still relevant and reflect the current workload behavior. The review process should consider factors like changes in workload characteristics, new hardware, and evolving business requirements.

This categorization system facilitates informed decisions about resource allocation, performance tuning, and capacity planning. For example, workloads categorized as “High” resource consumers might be prioritized for optimization, while those categorized as “Low” consumers might be given a lower priority. This approach ensures that optimization efforts are focused on the areas that will yield the greatest performance gains.
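The threshold and categorization steps can be sketched in Python as follows. This example covers only CPU and memory, because those are the metrics with example thresholds above. IOPS and network bandwidth would be added the same way. Rolling the per-resource tiers up to an overall tier by taking the highest one is an assumption, not the only reasonable rule. The workload names and readings are hypothetical. In the automated setup described above, the samples would come from the monitoring tool rather than being hard-coded.

```python
from dataclasses import dataclass

TIERS = ["Low", "Medium", "High"]

# Example thresholds: (upper bound of Low, upper bound of Medium); anything above is High.
CPU_PCT_BOUNDS = (50.0, 80.0)
MEMORY_GB_BOUNDS = (2.0, 8.0)

def tier(value, bounds):
    """Low below the first bound, Medium up to and including the second, High above it."""
    low_max, medium_max = bounds
    if value < low_max:
        return "Low"
    if value <= medium_max:
        return "Medium"
    return "High"

@dataclass
class WorkloadSample:
    name: str
    cpu_pct: float    # average CPU utilization over the sampling window
    memory_gb: float  # memory in use over the sampling window

def categorize(sample):
    """Assumed rule: a workload's overall tier is its highest per-resource tier."""
    tiers = [tier(sample.cpu_pct, CPU_PCT_BOUNDS), tier(sample.memory_gb, MEMORY_GB_BOUNDS)]
    return max(tiers, key=TIERS.index)

samples = [
    WorkloadSample("nightly-backup", cpu_pct=35.0, memory_gb=1.5),
    WorkloadSample("web-frontend", cpu_pct=60.0, memory_gb=3.0),
    WorkloadSample("etl-job", cpu_pct=45.0, memory_gb=12.0),
]
for s in samples:
    print(s.name, categorize(s))  # Low, Medium, High respectively
```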

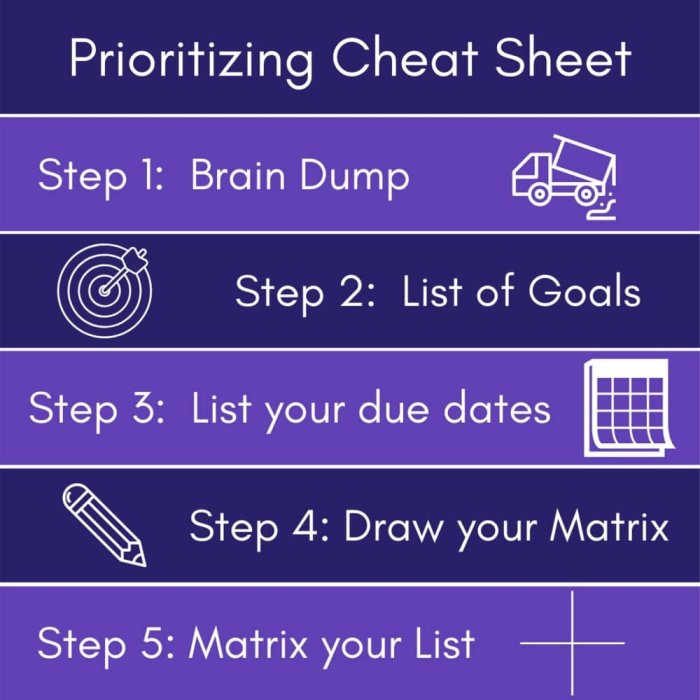

Defining Prioritization Criteria

Establishing clear prioritization criteria is essential for effective workload optimization. Without a defined framework, decisions become arbitrary, leading to inefficiencies and potentially impacting critical business functions. This section outlines the process of defining and implementing such criteria, ensuring a structured and data-driven approach to workload management.

Factors for Prioritization

Several factors should be considered when determining workload priorities. Each factor contributes to a holistic view, enabling informed decisions that align with overall business objectives.

- Business Value: This assesses the contribution of a workload to the organization’s strategic goals. Workloads directly supporting revenue generation, customer satisfaction, or market expansion typically receive higher priority. Consider the potential impact on key performance indicators (KPIs) like revenue, profit margin, or customer acquisition cost. For example, a workload related to processing online sales transactions would have a high business value due to its direct impact on revenue.

- Urgency: This factor considers the time sensitivity of the workload. Urgent tasks require immediate attention, while less time-critical tasks can be scheduled for later. The potential consequences of delay, such as missed deadlines, service level agreement (SLA) breaches, or regulatory penalties, should be considered. For example, a critical security patch deployment would have high urgency to mitigate potential vulnerabilities.

- Risk: Evaluating the potential risks associated with a workload helps determine its priority. Workloads with high-risk profiles, such as those involving sensitive data or critical infrastructure, should be prioritized to minimize potential damage. This involves assessing the likelihood and impact of potential failures or security breaches. For instance, a workload handling financial transactions carries significant risk, necessitating high priority.

- Dependencies: Identifying dependencies helps determine the order in which workloads should be executed. Workloads that are prerequisites for other tasks must be prioritized accordingly. Consider both internal and external dependencies, as well as the potential cascading effects of delays. For example, a data processing job might depend on the completion of a data ingestion process, requiring the latter to be prioritized.

- Cost: The cost associated with running a workload can influence its priority. Workloads with high operational costs, such as those consuming significant resources or requiring expensive infrastructure, may be subject to optimization efforts and, therefore, potentially prioritized based on their cost-saving potential. This involves analyzing resource utilization and identifying opportunities for efficiency improvements.

- Compliance: Prioritizing workloads that ensure regulatory compliance and adherence to industry standards is crucial to avoid penalties and maintain operational integrity. This includes workloads related to data privacy, security, and reporting requirements. For example, a workload generating audit trails would be prioritized to meet regulatory compliance needs.

Creating a Scoring System

A scoring system provides a structured approach to assigning priorities based on predefined criteria. This system helps quantify subjective factors, enabling more objective decision-making.

The scoring system typically involves the following steps:

- Define Criteria and Weights: Assign weights to each prioritization factor based on its relative importance. For example, business value might be weighted at 40%, urgency at 30%, risk at 20%, and dependencies at 10%. The sum of all weights should equal 100%.

- Develop Scoring Scales: Create a scoring scale for each factor. This could be a numerical scale (e.g., 1-5, where 1 is low and 5 is high) or a qualitative scale (e.g., Low, Medium, High).

- Score Workloads: Evaluate each workload against each factor and assign a score based on the defined scales.

- Calculate Total Score: Multiply each factor’s score by its weight and sum the results to calculate the total score for each workload.

- Prioritize Workloads: Rank workloads based on their total scores, with higher scores indicating higher priority.

Example of a simple scoring system:

| Factor | Weight | Scoring Scale | Workload A Score | Workload B Score |
|---|---|---|---|---|
| Business Value | 40% | 1-5 (1=Low, 5=High) | 5 | 3 |
| Urgency | 30% | 1-5 (1=Low, 5=High) | 3 | 5 |
| Risk | 20% | 1-5 (1=Low, 5=High) | 2 | 4 |
| Dependencies | 10% | 1-5 (1=Low, 5=High) | 4 | 2 |
| Total Score (weighted) | 100% | | 3.7 | 3.7 |

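Calculation for each workload (each score multiplied by its weight, then summed):

- Workload A: (5 × 0.40) + (3 × 0.30) + (2 × 0.20) + (4 × 0.10) = 2.0 + 0.9 + 0.4 + 0.4 = 3.7

- Workload B: (3 × 0.40) + (5 × 0.30) + (4 × 0.20) + (2 × 0.10) = 1.2 + 1.5 + 0.8 + 0.2 = 3.7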

In this example, both workloads A and B have the same total score. Further analysis may be required to break the tie, or the business may need to reassess the weights assigned to each factor.
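The scoring steps translate directly into code. In the Python sketch below, the factor names and weights mirror the example table. Weights are kept as integer percentages, a design choice that keeps floating-point rounding from making tied scores look different. Tie detection is an illustrative addition, and the urgency-first weighting at the end is a hypothetical example of reassessing the weights.

```python
# Weights as whole percentages so totals, and therefore ties, compare exactly.
WEIGHTS = {"business_value": 40, "urgency": 30, "risk": 20, "dependencies": 10}

def weighted_score(scores, weights=WEIGHTS):
    """Weighted total in hundredths (370 means 3.70) for factor scores on a 1-5 scale."""
    if sum(weights.values()) != 100:
        raise ValueError("weights must sum to 100%")
    for factor in weights:
        if not 1 <= scores[factor] <= 5:
            raise ValueError(f"{factor} score must be between 1 and 5")
    return sum(scores[factor] * weight for factor, weight in weights.items())

def rank(workloads, weights=WEIGHTS):
    """Return [(name, score)] highest first, plus any groups of workloads with equal scores."""
    totals = {name: weighted_score(s, weights) for name, s in workloads.items()}
    ordered = sorted(totals, key=totals.get, reverse=True)
    by_total = {}
    for name, total in totals.items():
        by_total.setdefault(total, []).append(name)
    ties = [names for names in by_total.values() if len(names) > 1]
    return [(name, totals[name] / 100) for name in ordered], ties

workloads = {
    "Workload A": {"business_value": 5, "urgency": 3, "risk": 2, "dependencies": 4},
    "Workload B": {"business_value": 3, "urgency": 5, "risk": 4, "dependencies": 2},
}
print(rank(workloads))
# ([('Workload A', 3.7), ('Workload B', 3.7)], [['Workload A', 'Workload B']])

# Hypothetical reweighting that favors urgency breaks the tie in B's favor.
urgency_first = {"business_value": 30, "urgency": 40, "risk": 20, "dependencies": 10}
print(rank(workloads, urgency_first))
# ([('Workload B', 3.9), ('Workload A', 3.5)], [])
```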

Adjusting Prioritization Criteria

Prioritization criteria are not static; they must be adjusted to reflect changing business needs and market dynamics. Regular reviews and updates are crucial to ensure the system remains relevant and effective.

Adjustments may be necessary due to:

- Changes in Business Strategy: If the company’s strategic priorities shift (e.g., from market expansion to cost reduction), the weights assigned to the prioritization factors should be reevaluated. Business value and cost factors might gain increased importance.

- New Regulations or Compliance Requirements: The introduction of new regulations or compliance mandates will necessitate the reevaluation of the compliance factor and potentially other related factors.

- Emerging Risks: New risks, such as cybersecurity threats or supply chain disruptions, should be incorporated into the risk assessment and potentially influence the prioritization of related workloads.

- Performance Monitoring and Feedback: Continuous monitoring of workload performance and gathering feedback from stakeholders help identify areas for improvement in the prioritization process. If the system consistently misprioritizes workloads, adjustments may be needed.

- Technological Advancements: New technologies may introduce new optimization opportunities or change the relative importance of certain workloads. The criteria should be updated to reflect these changes.

To adjust prioritization criteria effectively, organizations should establish a formal review process. This may include:

- Regular Reviews: Schedule periodic reviews (e.g., quarterly or annually) to assess the effectiveness of the prioritization system.

- Stakeholder Input: Gather feedback from key stakeholders, including business leaders, IT staff, and end-users, to understand their perspectives and identify areas for improvement.

- Data Analysis: Analyze performance data to identify trends and patterns that may indicate the need for adjustments.

- Documentation: Maintain clear documentation of the prioritization criteria, scoring system, and any adjustments made over time. This ensures transparency and facilitates communication.

Implementing Prioritization Strategies

Effectively implementing workload prioritization is crucial for optimizing resource allocation and ensuring critical tasks are completed efficiently. The chosen strategy significantly impacts system performance, user experience, and overall business outcomes. This section details various prioritization strategies and provides practical guidance for their implementation.

Prioritization Strategy Types

Several prioritization strategies exist, each with its own strengths and weaknesses. The choice of strategy depends on the specific needs and constraints of the environment.

- Static Prioritization: This strategy assigns fixed priorities to workloads. These priorities remain constant over time. It is simple to implement but may not adapt well to changing conditions.

- Dynamic Prioritization: Dynamic prioritization adjusts workload priorities based on real-time factors, such as resource usage, deadlines, or business importance. This approach offers greater flexibility and responsiveness than static methods.

- Adaptive Prioritization: Adaptive prioritization is a more sophisticated approach that combines static and dynamic elements. It may use a static baseline but adjusts priorities dynamically based on observed system behavior and predefined rules. This allows for a balance between predictability and responsiveness.

Implementing a Static Prioritization Scheme

A static prioritization scheme is relatively straightforward to implement. The following steps outline the procedure:

- Define Workload Categories: Identify and categorize the different types of workloads within the system. This could include interactive user requests, batch processes, background tasks, and system maintenance operations.

- Assign Priority Levels: Determine the number of priority levels and assign each workload category a priority level. In this example, a lower number means greater importance, so Priority 1 is served first:

  - Priority 1: Critical user requests (e.g., financial transactions).

  - Priority 2: Interactive user requests (e.g., web browsing).

  - Priority 3: Batch processes (e.g., daily reports).

  - Priority 4: Background tasks (e.g., data backups).

- Establish Priority Rules: Define the rules for how the system will handle workloads of different priorities. This might include:

  - Resource allocation: How much CPU, memory, and I/O will each priority level receive?

  - Queueing: How will workloads be queued and processed based on their priority?

  - Preemption: Will higher-priority workloads be allowed to interrupt lower-priority workloads?

- Configure the System: Configure the system’s resource management tools (e.g., operating system scheduler, database management system) to enforce the defined priority rules.

- Test and Monitor: Thoroughly test the system to ensure that the prioritization scheme is working as intended. Continuously monitor system performance and adjust the scheme as needed. Observe response times for high-priority tasks and ensure they meet the defined service level agreements (SLAs).
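A minimal sketch of the queueing rule for the four-level scheme above, using Python's `heapq`. Categories map to fixed priority numbers, where lower is served first, and an arrival counter keeps first-in, first-out order within a level. It is non-preemptive and deliberately leaves out resource allocation. The category keys and workload names are hypothetical.

```python
import heapq
import itertools

# Static priority levels from the example above: lower number = more important.
PRIORITY = {
    "critical": 1,     # e.g., financial transactions
    "interactive": 2,  # e.g., web browsing
    "batch": 3,        # e.g., daily reports
    "background": 4,   # e.g., data backups
}

class StaticPriorityQueue:
    """Dispatch by fixed category priority; FIFO among workloads at the same level."""

    def __init__(self):
        self._heap = []
        self._arrival = itertools.count()  # tie-breaker preserves arrival order within a level

    def submit(self, name, category):
        heapq.heappush(self._heap, (PRIORITY[category], next(self._arrival), name))

    def next_workload(self):
        if not self._heap:
            return None
        _, _, name = heapq.heappop(self._heap)
        return name

    def __len__(self):
        return len(self._heap)

queue = StaticPriorityQueue()
queue.submit("nightly-backup", "background")
queue.submit("daily-report", "batch")
queue.submit("checkout-42", "critical")
queue.submit("search-page", "interactive")
queue.submit("checkout-43", "critical")
order = [queue.next_workload() for _ in range(len(queue))]
print(order)
# ['checkout-42', 'checkout-43', 'search-page', 'daily-report', 'nightly-backup']
```

Because the priorities never change, a steady stream of Priority 1 work can starve background tasks indefinitely. That limitation is one motivation for dynamic strategies.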

Using a Dynamic Prioritization Strategy

Dynamic prioritization adapts to changing conditions, making it suitable for environments with fluctuating workloads and resource demands. Examples of its use include:

- Deadline-Driven Prioritization: Workloads with approaching deadlines receive higher priority. This ensures timely completion of critical tasks.

  For example, a financial trading system could dynamically prioritize trades based on their proximity to market close: the closer a trade is to the deadline, the higher its priority.

- Resource-Based Prioritization: Workloads that are consuming a large amount of a critical resource (e.g., CPU, memory, I/O) might be temporarily deprioritized so that other workloads are not starved of that resource.

  For instance, a database server might dynamically lower the priority of a long-running query that is consuming excessive CPU to allow other, more time-sensitive queries to execute. Several database management systems provide workload-management features that support this kind of policy.

- Business Value Prioritization: Workloads associated with high-value transactions or critical business processes receive higher priority.

  An e-commerce platform might dynamically prioritize order processing based on order value. High-value orders, such as those containing premium products or large quantities, would receive higher priority to ensure faster processing and customer satisfaction. This strategy often involves integrating with business intelligence systems to assess the value of each workload in real time.
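The first two dynamic strategies can be combined in a small dispatcher. The Python sketch below uses earliest-deadline-first (EDF) ordering for deadline-driven prioritization. It also temporarily passes over tasks whose recent CPU share exceeds a cap, which implements the resource-based rule. The `Task` fields, the 0.5 cap, and the task names are hypothetical. A real system would feed `cpu_share` from its monitoring data, and a business-value term could be added to the selection key in the same way.

```python
from dataclasses import dataclass

@dataclass
class Task:
    name: str
    deadline_s: float       # absolute deadline, in seconds on the scheduler's clock
    cpu_share: float = 0.0  # recent CPU share (0.0-1.0), assumed to come from monitoring

def pick_next(tasks, cpu_cap=0.5):
    """Earliest deadline first, skipping tasks above cpu_cap unless nothing else is waiting.

    The choice is recomputed on every call, so a task's rank changes as its
    deadline nears or its resource usage changes.
    """
    if not tasks:
        return None
    within_cap = [t for t in tasks if t.cpu_share <= cpu_cap]
    candidates = within_cap or tasks  # never idle just because every task is heavy
    return min(candidates, key=lambda t: t.deadline_s)

tasks = [
    Task("close-of-day-trade", deadline_s=120),
    Task("report-query", deadline_s=60, cpu_share=0.9),
    Task("price-update", deadline_s=30),
]
first = pick_next(tasks)   # price-update: earliest deadline
tasks.remove(first)
second = pick_next(tasks)  # close-of-day-trade: report-query is over the CPU cap
tasks[1].cpu_share = 0.1   # the heavy query's CPU usage drops...
third = pick_next(tasks)   # ...so its earlier deadline wins again: report-query
print(first.name, second.name, third.name)
```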

Utilizing Scheduling Algorithms

Scheduling algorithms are critical components of workload management, dictating the order in which tasks are executed and how resources are allocated. Effective use of these algorithms can significantly improve system performance, responsiveness, and overall efficiency. Choosing the right algorithm is essential for optimizing workload execution based on specific needs and priorities.

The Role of Scheduling Algorithms in Workload Management

Scheduling algorithms play a fundamental role in managing and optimizing workloads. They determine the order in which processes or tasks are executed on a system, aiming to achieve various goals, such as maximizing throughput, minimizing response time, and ensuring fairness among processes. The algorithm selected directly impacts the system’s ability to meet its performance objectives and effectively utilize available resources.

They decide how CPU time is shared among processes and, in cluster-level workload schedulers, how memory and other resources are assigned. They also trigger context switching, which involves saving the state of one process and loading the state of another so that multiple processes can make progress concurrently. The selection of an appropriate scheduling algorithm depends on the specific characteristics of the workload and the desired performance goals.

Comparing and Contrasting Different Scheduling Algorithms

Various scheduling algorithms exist, each with its strengths and weaknesses. The choice of which algorithm to use depends on the specific requirements of the workload.

- First-Come, First-Served (FCFS, also called FIFO): FCFS is a simple scheduling algorithm in which processes are executed in the order they arrive in the ready queue. It is easy to implement but can lead to long waiting times for short processes when a long process arrives first, resulting in a “convoy effect” in which short processes are delayed behind a single long-running process.

- Round Robin (RR): RR is a time-sharing algorithm designed to provide fairness. Each process is given a fixed amount of CPU time, known as a time slice or quantum. When a process’s time slice expires, it is preempted, and the CPU is assigned to the next process in the ready queue. RR is effective for interactive systems as it provides a reasonable response time for all processes.

However, the choice of time slice is critical: a time slice that is too short increases overhead due to frequent context switching, while one that is too long degrades responsiveness, and in the extreme RR behaves like FIFO.

- Priority Scheduling: In priority scheduling, each process is assigned a priority, and the CPU is allocated to the process with the highest priority. Priorities can be assigned statically or dynamically based on factors such as process importance, resource requirements, or estimated execution time. Priority scheduling can lead to starvation, where low-priority processes may never execute if high-priority processes continuously arrive. A common remedy is aging, which gradually raises the priority of processes that have waited a long time.

- Shortest Job First (SJF): SJF selects the process with the shortest estimated execution time to run next. In its basic form it is non-preemptive; the preemptive variant is known as Shortest Remaining Time First (SRTF). For a set of processes that are all available at the same time, SJF minimizes the average waiting time, but it requires accurate estimates of execution times, which are not always available, and long jobs can starve if short jobs keep arriving. It works best when jobs are short and their run times are predictable.

- Multilevel Feedback Queue: This algorithm is a more complex approach that combines multiple queues with different priorities and time slices. Processes can move between queues based on their behavior (e.g., CPU-bound or I/O-bound). This approach aims to balance responsiveness and throughput by adapting to the dynamic needs of the processes.
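To make the trade-offs concrete, the following sketch computes average waiting time for FCFS, non-preemptive SJF, and Round Robin. It assumes every process arrives at time 0 and ignores context-switch overhead, so it illustrates the convoy effect rather than modeling a real scheduler.

```python
from collections import deque


def fcfs_waiting(bursts):
    """Average waiting time when jobs run in arrival order (all arrive at t=0)."""
    waits, clock = [], 0
    for burst in bursts:
        waits.append(clock)  # each job waits for everything queued before it
        clock += burst
    return sum(waits) / len(waits)


def sjf_waiting(bursts):
    """Non-preemptive SJF: with simultaneous arrival this is FCFS on sorted bursts."""
    return fcfs_waiting(sorted(bursts))


def rr_waiting(bursts, quantum):
    """Round Robin with a fixed quantum; context-switch cost is ignored."""
    remaining = list(bursts)
    finish = [0] * len(bursts)
    queue = deque(range(len(bursts)))
    clock = 0
    while queue:
        i = queue.popleft()
        run = min(quantum, remaining[i])
        clock += run
        remaining[i] -= run
        if remaining[i] > 0:
            queue.append(i)  # preempted: back of the ready queue
        else:
            finish[i] = clock
    # Waiting time = completion time - burst time (arrival at t=0).
    waits = [finish[i] - bursts[i] for i in range(len(bursts))]
    return sum(waits) / len(waits)
```

For CPU bursts of 24, 3, and 3 time units, FCFS gives an average wait of 17 units because both short jobs queue behind the long one; SJF reduces this to 3, and Round Robin with a quantum of 4 gives about 5.67 while still letting every process make progress early.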

Selecting the Appropriate Scheduling Algorithm for Specific Workloads

Selecting the appropriate scheduling algorithm requires careful consideration of the workload’s characteristics and performance objectives. Different algorithms are suitable for different types of workloads.

- Batch Processing: For batch processing systems, where tasks are executed without user interaction, FIFO or SJF may be suitable. SJF can minimize average turnaround time if execution times are predictable.

- Interactive Systems: For interactive systems, Round Robin is often preferred due to its ability to provide fair and relatively quick response times to user requests.

- Real-Time Systems: Real-time systems, which require strict deadlines, often use priority-based scheduling or specialized real-time scheduling algorithms (e.g., Rate Monotonic Scheduling or Earliest Deadline First). These algorithms prioritize tasks based on their deadlines or importance.

- Mixed Workloads: For mixed workloads, where different types of tasks are present, a multilevel feedback queue may be the best option. This allows the system to adapt to the varying needs of different tasks.

For example, consider a web server. It may use Round Robin or a similar time-sharing algorithm to ensure all incoming requests receive a timely response. In contrast, a scientific computing cluster might use SJF to optimize the processing of a large number of jobs with varying computational requirements.
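For the real-time case, a quick first check is processor utilization. The sketch below applies two classic tests for independent periodic tasks on a single preemptive processor with deadlines equal to periods: the Liu and Layland bound for Rate Monotonic Scheduling, which is sufficient but not necessary, and the utilization test for Earliest Deadline First, which is exact under those assumptions. The task sets and function names are illustrative.

```python
def utilization(tasks):
    """tasks: list of (worst_case_execution_time, period) pairs."""
    return sum(c / t for c, t in tasks)


def rm_bound_ok(tasks):
    """Liu-Layland sufficient test for Rate Monotonic scheduling.

    True guarantees schedulability; False is inconclusive.
    """
    n = len(tasks)
    return utilization(tasks) <= n * (2 ** (1 / n) - 1)


def edf_ok(tasks):
    """Exact test for preemptive EDF when deadlines equal periods."""
    return utilization(tasks) <= 1.0
```

For tasks with (execution time, period) of (1, 4), (2, 6), and (3, 10), utilization is about 0.88. That exceeds the Rate Monotonic bound for three tasks (about 0.78), so the test is inconclusive and a more precise analysis such as response-time analysis would be needed, while EDF is guaranteed to meet every deadline.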

Optimizing Resource Allocation

Optimizing resource allocation is crucial for maximizing workload performance and efficiency. Effective resource management ensures that workloads receive the necessary resources, such as CPU, memory, and I/O, to operate effectively, while minimizing contention and preventing bottlenecks. This section explores strategies for optimizing resource allocation, managing resource contention, and preventing resource starvation.

Strategies for Optimizing Resource Allocation

To improve workload performance, several strategies can be employed for optimizing resource allocation. These strategies aim to match resource needs with available capacity efficiently.

- Resource Pooling and Virtualization: Resource pooling involves grouping resources together to be dynamically allocated to workloads as needed. Virtualization, a key enabler of resource pooling, allows for the creation of virtual machines (VMs) that can share physical resources. This approach offers several benefits:

  - Improved Resource Utilization: Resources are utilized more efficiently as they are allocated dynamically based on demand. For example, a server might be running several VMs, each with different resource requirements. When one VM is idle, its resources can be allocated to a more demanding VM.

  - Increased Flexibility: VMs can be easily created, moved, and resized, providing flexibility in resource allocation.

  - Simplified Management: Centralized management tools can simplify the administration of virtualized resources.

- Dynamic Resource Allocation: Implement systems that dynamically adjust resource allocation based on real-time workload demands. This can involve monitoring resource usage and automatically scaling resources up or down. For instance, if a web server experiences a sudden increase in traffic, the system can automatically allocate more CPU and memory to handle the load.

- Capacity Planning: Perform regular capacity planning to forecast future resource needs. This involves analyzing historical data and predicting resource requirements based on expected workload growth. By proactively planning for future capacity, organizations can avoid performance bottlenecks and ensure that sufficient resources are available. For example, a retail company might analyze its sales data from the previous year to predict resource needs during the upcoming holiday season.

- Right-Sizing: Ensure that workloads are allocated the appropriate amount of resources. Over-provisioning resources can lead to waste, while under-provisioning can result in performance issues. Right-sizing involves carefully assessing the resource needs of each workload and allocating the minimum resources necessary to meet its performance requirements. This may involve monitoring resource utilization and adjusting allocations as needed.

- Resource-Aware Scheduling: Use scheduling algorithms that consider resource requirements when assigning tasks to resources. For example, a scheduler might place memory-intensive tasks on servers with enough free memory. This approach ensures that workloads are assigned to resources that can meet their needs and can improve overall system performance.
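Resource-aware scheduling can be illustrated with a simple placement heuristic. The sketch below uses best-fit decreasing on memory alone: it places the largest tasks first, each on the server whose free memory fits it most tightly. Best-fit decreasing is one common heuristic chosen here for illustration; the task and server names are hypothetical, and real schedulers usually weigh CPU, memory, and other constraints together.

```python
def place_tasks(tasks, servers):
    """Best-fit decreasing placement by memory.

    tasks:   dict of task name -> memory required (GiB)
    servers: dict of server name -> free memory (GiB)
    Returns (placement dict, list of tasks that could not be placed).
    """
    free = dict(servers)  # copy so the caller's dict is not mutated
    placement, unplaced = {}, []
    # Place the largest tasks first; they are the hardest to fit.
    for task, need in sorted(tasks.items(), key=lambda kv: kv[1], reverse=True):
        candidates = [s for s, mem in free.items() if mem >= need]
        if not candidates:
            unplaced.append(task)
            continue
        # Best fit: the server left with the least spare memory after placement.
        best = min(candidates, key=lambda s: free[s] - need)
        placement[task] = best
        free[best] -= need
    return placement, unplaced
```

With tasks of 12 GiB (`analytics`), 6 GiB (`cache`), and 2 GiB (`api`) and servers with 8 GiB (`node-a`) and 16 GiB (`node-b`) free, the analytics task can only go on `node-b`, the cache fills most of `node-a`, and the API task fits exactly into what remains on `node-a`, leaving 4 GiB free on `node-b` for later work.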

Techniques for Managing Resource Contention

Resource contention occurs when multiple workloads compete for the same resources. Managing resource contention is crucial for maintaining performance and preventing bottlenecks. Several techniques can be used to mitigate the impact of resource contention.

- Prioritization and Quality of Service (QoS): Implement QoS mechanisms to prioritize critical workloads and ensure they receive preferential access to resources. This can involve assigning higher priority levels to important tasks or limiting the resources available to lower-priority tasks. For example, a database server might be given higher priority than a batch processing job to ensure that database queries are processed quickly.

- Resource Quotas and Limits: Set resource quotas and limits to control the amount of resources each workload can consume. This prevents a single workload from monopolizing resources and starving other workloads. For instance, a cloud provider might set limits on the CPU and memory usage of each virtual machine.

- Load Balancing: Distribute workloads across multiple resources to reduce contention on any single resource. Load balancing can be implemented at various levels, such as at the network level (e.g., using load balancers to distribute traffic across multiple web servers) or at the application level (e.g., using a message queue to distribute tasks among multiple worker processes).

- Caching: Implement caching mechanisms to reduce the load on resources. Caching involves storing frequently accessed data in a faster storage medium, such as memory or a content delivery network (CDN). For example, caching static content on a CDN can reduce the load on web servers and improve response times.

- Database Optimization: Optimize database queries and schema to reduce resource consumption. This can involve techniques such as indexing, query optimization, and data partitioning. For instance, properly indexing a database table can significantly speed up query execution times.
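Limits are often expressed as rates rather than fixed amounts, for example requests or I/O operations per second. A token bucket is one widely used way to enforce such a limit; it is shown here as an illustrative technique, not as something the text above prescribes. The `rate` and `capacity` values and the injectable `clock` are assumptions of this sketch.

```python
import time


class TokenBucket:
    """Limits a workload to `rate` units per second with bursts up to `capacity`."""

    def __init__(self, rate: float, capacity: float, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.tokens = capacity  # start full so an initial burst is allowed
        self.clock = clock
        self.last = clock()

    def try_acquire(self, cost: float = 1.0) -> bool:
        now = self.clock()
        # Refill tokens for the elapsed time, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False  # over the limit: caller should queue, delay, or reject
```

A bucket with `rate=2.0` and `capacity=2.0` admits two requests immediately, rejects a third, and admits one more after half a second has passed. A configurable `capacity` lets a workload absorb short bursts while still holding its long-run average to `rate`.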

Planning to Prevent Resource Starvation

Resource starvation occurs when a workload is repeatedly denied access to the resources it needs to complete its tasks. Preventing resource starvation is essential for ensuring fairness and preventing critical workloads from being blocked. A comprehensive plan to prevent resource starvation should include the following elements:

- Fair Scheduling Algorithms: Employ scheduling algorithms that provide fair access to resources. Fair-share scheduling, for example, ensures that each workload receives a fair share of the resources over time.

- Resource Reservation: Reserve a minimum amount of resources for critical workloads to guarantee they have access to the resources they need. This can involve setting aside dedicated CPU cores, memory, or I/O bandwidth for important tasks.

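- Preemption: Implement preemption mechanisms that allow higher-priority workloads to preempt lower-priority workloads when necessary. This ensures that critical tasks can gain access to resources promptly, although it should be combined with fair scheduling or resource reservation so that preempted workloads are not themselves starved.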

- Monitoring and Alerting: Monitor resource usage and set up alerts to detect potential resource starvation situations. This allows for proactive intervention before performance is impacted.

- Resource Isolation: Use resource isolation techniques, such as containers or virtual machines, to isolate workloads and prevent one workload from impacting the resource availability of others.

- Admission Control: Implement admission control mechanisms to limit the number of workloads that can be admitted to the system. This can help prevent resource overload and reduce the likelihood of resource starvation.
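Aging is a standard way to combine priority scheduling with a guarantee against starvation: the longer a job waits, the higher its effective priority becomes. The sketch below is a minimal single-queue version under stated assumptions (lower numbers mean higher priority, and priority improves by `aging_rate` per scheduling decision); the class and parameter names are hypothetical.

```python
import heapq
import itertools


class AgingScheduler:
    """Priority scheduler in which waiting jobs gradually gain priority (aging).

    Lower numbers mean higher priority. A job's effective priority at tick t is
    base_priority - aging_rate * (t - submission_tick).
    """

    def __init__(self, aging_rate: float = 1.0):
        self.aging_rate = aging_rate
        self.ticks = 0                    # number of scheduling decisions so far
        self.heap = []
        self.counter = itertools.count()  # tie-breaker: earlier submissions first

    def submit(self, name: str, priority: float) -> None:
        # The -aging_rate * t term is shared by all waiting jobs, so ordering by
        # base + aging_rate * submission_tick matches ordering by effective priority.
        key = priority + self.aging_rate * self.ticks
        heapq.heappush(self.heap, (key, next(self.counter), name))

    def next_job(self):
        if not self.heap:
            return None
        self.ticks += 1
        return heapq.heappop(self.heap)[2]
```

If a batch job with priority 5 is submitted while interactive jobs with priority 1 keep arriving, the batch job runs on the fifth scheduling decision instead of waiting indefinitely; with `aging_rate=0` it would never run. Storing the adjusted key once at submission avoids re-sorting the queue on every tick.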

Monitoring and Performance Tuning

Monitoring and performance tuning are crucial for maintaining optimal workload performance and ensuring efficient resource utilization. By continuously tracking key performance indicators (KPIs) and proactively responding to performance bottlenecks, organizations can prevent service degradation, improve user experience, and reduce operational costs. This section details the essential aspects of monitoring and tuning workloads.

Key Performance Indicators (KPIs) for Monitoring Workload Performance

Establishing a set of KPIs is fundamental to understanding workload behavior and identifying areas for improvement. These metrics provide a quantitative basis for evaluating performance and making data-driven decisions.

- Response Time: Measures the time taken to complete a request. This is a critical metric for user-facing applications, as slow response times directly impact user experience.

- Throughput: Represents the amount of work completed within a specific timeframe, often measured in transactions per second (TPS) or requests per second (RPS). High throughput indicates efficient processing.

- Error Rate: Indicates the percentage of requests that result in errors. A high error rate signals potential problems with the application or underlying infrastructure.

- CPU Utilization: Shows the percentage of CPU resources being used by the workload. High CPU utilization can indicate a CPU bottleneck.

- Memory Utilization: Represents the amount of memory being consumed by the workload. Excessive memory usage can lead to performance issues and resource exhaustion.

- Disk I/O: Measures the rate at which data is read from and written to disk. High disk I/O can indicate disk bottlenecks.

- Network Latency: Measures the delay in data transmission over the network. High network latency can affect application performance, especially for distributed systems.

- Queue Length: Indicates the number of requests waiting to be processed. Long queue lengths can signal resource contention or bottlenecks.
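Several of these KPIs can be computed directly from request records. The sketch below assumes a hypothetical `Request` record holding each request's response time and success flag over a fixed observation window, and uses the nearest-rank method for percentiles; monitoring systems may use other percentile definitions.

```python
import math
from dataclasses import dataclass


@dataclass
class Request:
    duration: float  # response time in seconds
    ok: bool         # False if the request ended in an error


def percentile(values, p):
    """Nearest-rank percentile (p in 0-100) of a non-empty list."""
    ordered = sorted(values)
    rank = max(1, math.ceil(p / 100 * len(ordered)))
    return ordered[rank - 1]


def summarize(requests, window_seconds):
    """KPIs for a non-empty list of requests observed over `window_seconds`."""
    durations = [r.duration for r in requests]
    return {
        "throughput_rps": len(requests) / window_seconds,
        "error_rate": sum(not r.ok for r in requests) / len(requests),
        "p50_s": percentile(durations, 50),
        "p95_s": percentile(durations, 95),
    }
```

Reporting a high percentile such as p95 alongside the median is usually more informative than an average response time, because a small number of very slow requests can hurt users without moving the average much.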

Creating a System for Generating Alerts Based on Performance Thresholds

Implementing an effective alerting system is essential for proactively addressing performance issues. This involves defining thresholds for each KPI and configuring alerts to be triggered when these thresholds are exceeded.

A robust alerting system should include the following components:

- Threshold Definition: Establishing appropriate upper and lower bounds for each KPI. These thresholds should be based on historical data, performance benchmarks, and service level agreements (SLAs).

- Alerting Rules: Configuring rules that trigger alerts when KPIs exceed their defined thresholds. These rules can be based on static thresholds or dynamic baselines.

- Notification Channels: Defining the channels through which alerts will be delivered, such as email, SMS, or integration with incident management systems.

- Escalation Policies: Establishing escalation paths to ensure that alerts are addressed promptly, including assigning responsibility and notifying the appropriate teams.

For example, a system might alert when:

- Response time exceeds 2 seconds for more than 5 minutes.

- Error rate exceeds 1% for more than 10 minutes.

- CPU utilization exceeds 90% for more than 15 minutes.
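Rules such as these combine a threshold with a minimum duration, so that a brief spike does not page anyone. The following sketch evaluates one such rule over timestamped samples; the class name and the 60-second sampling interval in the usage note are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class SustainedThresholdAlert:
    """Fires when a metric stays above `threshold` for longer than `for_seconds`."""
    threshold: float
    for_seconds: float
    breach_started: Optional[float] = field(default=None, init=False)

    def observe(self, timestamp: float, value: float) -> bool:
        if value <= self.threshold:
            self.breach_started = None  # condition cleared: reset the timer
            return False
        if self.breach_started is None:
            self.breach_started = timestamp  # first sample of a new breach
        return timestamp - self.breach_started > self.for_seconds
```

With `threshold=2.0` and `for_seconds=300` (the response-time rule above), samples of 2.5 s taken every 60 s fire the alert at the seventh sample, 360 s after the breach began, and any sample at or below the threshold resets the timer. The error-rate and CPU rules would use `threshold=0.01, for_seconds=600` and `threshold=90, for_seconds=900` respectively.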

Demonstrating How to Use Monitoring Data to Tune Workload Priorities and Resource Allocation

Monitoring data provides the insights necessary to make informed decisions about workload priorities and resource allocation. By analyzing performance trends and identifying bottlenecks, organizations can optimize their systems for improved efficiency and performance.

The process involves the following steps:

- Analyze Performance Data: Regularly review the KPIs to identify performance trends, anomalies, and bottlenecks. Tools such as dashboards and reporting systems can help visualize the data.

- Identify Bottlenecks: Determine the root causes of performance issues. Common bottlenecks include CPU, memory, disk I/O, and network.

- Adjust Workload Priorities: Re-prioritize workloads based on their importance and resource consumption. Higher-priority workloads should receive more resources to meet their performance requirements.

- Optimize Resource Allocation: Adjust the allocation of resources, such as CPU cores, memory, and network bandwidth, to address bottlenecks. Consider using resource management tools or scheduling algorithms.

- Iterate and Refine: Continuously monitor the performance of the system and adjust workload priorities and resource allocation as needed. The tuning process is iterative, requiring ongoing analysis and optimization.

For example, if a high-priority workload experiences slow response times due to CPU contention, the following actions might be taken:

- Increase the CPU allocation for the workload.

- Reduce the CPU allocation for lower-priority workloads.

- Identify and address any code inefficiencies within the high-priority workload.
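The first two actions can be expressed as a small rebalancing step that shifts CPU from a lower-priority workload to a higher-priority one while respecting a minimum reservation, so the donor is not starved. This is a hypothetical sketch: the function name, the workload names, and the use of fractional cores are assumptions, and applying the new values is platform-specific (for example, through container CPU limits or VM sizing).

```python
def rebalance_cpu(allocations, high, low, step, floor):
    """Move up to `step` cores from workload `low` to workload `high`.

    allocations: dict of workload name -> CPU cores currently allocated.
    floor: minimum cores `low` must keep (its reservation), so the
    rebalance cannot starve it.
    Returns a new dict; the input is not modified.
    """
    movable = max(0.0, min(step, allocations[low] - floor))
    updated = dict(allocations)
    updated[low] -= movable
    updated[high] += movable
    return updated
```

Starting from 4 cores each for a `checkout` workload and a `batch` workload with a 1-core floor, one step of 2 cores yields 6 and 2; a second step can move only 1 more core, because `batch` has reached its reservation.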

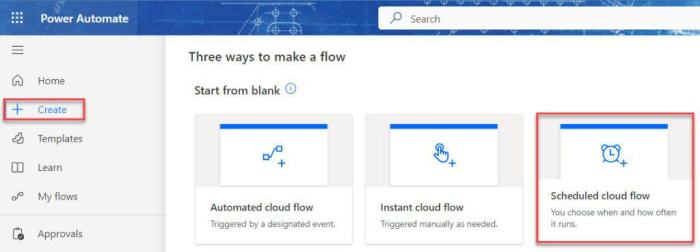

Automation and Orchestration

Automating workload prioritization and resource allocation is crucial for efficient and responsive IT operations. This involves leveraging tools and techniques to streamline the management of workloads, ensuring optimal performance and minimizing manual intervention. Automation not only improves efficiency but also reduces the potential for human error, leading to more consistent and reliable results.

Automating Workload Prioritization and Resource Allocation

Automating workload prioritization and resource allocation involves creating systems that dynamically adjust resource allocation based on predefined criteria and real-time conditions. This typically involves the following steps:

- Defining Automation Rules: Establish clear rules that govern how workloads are prioritized and resources are allocated. These rules should consider factors such as workload importance, resource consumption, and service-level agreements (SLAs). For example, a rule might prioritize critical business applications over less important background processes.

- Implementing Automated Monitoring: Deploy monitoring tools to continuously track workload performance, resource utilization, and system health. This data is then used to trigger automated actions.

- Integrating with Orchestration Tools: Utilize orchestration tools to execute the automation rules. These tools can automatically adjust resource allocation, scale applications, and manage workload placement based on the monitored data and defined rules.

- Automated Alerting and Remediation: Configure alerts to notify administrators of any issues or deviations from the defined rules. The system should also be capable of automatically remediating common problems, such as scaling up resources in response to increased demand.

- Continuous Optimization: Regularly review and refine the automation rules and monitoring configurations to ensure they remain effective and aligned with changing business needs. This includes analyzing performance data and identifying areas for improvement.

Orchestration Tools for Workload Management

Orchestration tools play a vital role in automating workload management. These tools provide a centralized platform for defining, managing, and executing automated tasks related to workload prioritization and resource allocation.

- Kubernetes: Kubernetes is a container orchestration platform that automates the deployment, scaling, and management of containerized applications. It can automatically schedule and manage workloads based on resource availability and predefined policies. Kubernetes excels at managing complex deployments and ensuring high availability.

Example: A retail company uses Kubernetes to automatically scale its e-commerce application during peak shopping seasons. The platform monitors resource utilization and dynamically adjusts the number of application instances to handle the increased traffic.

- Apache Mesos: Apache Mesos is a cluster manager that provides resource isolation and sharing across distributed systems. It allows organizations to efficiently manage a diverse set of workloads, including batch jobs, streaming data, and long-running services.

Example: A financial institution uses Mesos to manage its big data processing pipelines. Mesos ensures that resources are allocated efficiently to different processing tasks, such as risk analysis and fraud detection, based on their priority and resource requirements.

- AWS Auto Scaling: AWS Auto Scaling is a service offered by Amazon Web Services that automatically adjusts the capacity of various AWS resources, such as EC2 instances, based on demand. It integrates with other AWS services to provide a comprehensive solution for workload management.

Example: A media streaming service uses AWS Auto Scaling to automatically scale its video encoding and delivery infrastructure. The service monitors the number of concurrent users and automatically increases or decreases the number of EC2 instances to meet demand, ensuring a smooth user experience.

- Google Kubernetes Engine (GKE): Google Kubernetes Engine (GKE) is a managed Kubernetes service that simplifies the deployment, management, and scaling of containerized applications. It offers features like automated upgrades, scaling, and monitoring.

Example: A gaming company utilizes GKE to manage its online game servers. GKE automatically scales the server instances based on the number of active players, providing a responsive and reliable gaming experience.

- HashiCorp Nomad: Nomad is a flexible and easy-to-use workload orchestrator designed for deploying and managing applications across a variety of infrastructure platforms. It’s well-suited for both containerized and non-containerized applications.

Example: A software development company uses Nomad to deploy and manage its microservices-based applications. Nomad handles the scheduling and deployment of the services across the company’s infrastructure, ensuring efficient resource utilization and high availability.
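Many of these tools scale by comparing an observed metric with a target. As an illustration, the Kubernetes Horizontal Pod Autoscaler documents its core rule as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), skipping changes when the ratio is within a tolerance (0.1 by default). The sketch below reproduces only that formula plus min/max clamping; the real controller also handles pod readiness, missing metrics, and stabilization windows, which are omitted here, and the parameter defaults are assumptions of this sketch.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 10, tolerance: float = 0.1) -> int:
    """Replica count from the HPA-style ratio rule, clamped to [min, max]."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas  # close enough to target: no scaling action
    else:
        desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))
```

With 4 replicas at 90% average CPU against a 60% target, the ratio is 1.5 and the sketch asks for 6 replicas; at 63% the ratio is within tolerance and the count stays at 4.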

Integrating Automated Systems into Existing Infrastructure

Integrating automated systems into existing infrastructure requires a well-defined strategy and careful planning. The following steps outline the process:

- Assess Current Infrastructure: Conduct a thorough assessment of the existing infrastructure, including servers, network devices, storage systems, and applications. This assessment should identify any existing automation tools, dependencies, and potential integration points.

- Choose the Right Tools: Select orchestration and automation tools that are compatible with the existing infrastructure and meet the specific workload management requirements. Consider factors such as scalability, ease of use, and integration capabilities.

- Develop a Phased Implementation Plan: Implement the automated systems in a phased approach to minimize disruption to existing operations. This allows for testing and validation at each stage. Start with non-critical workloads and gradually expand to more critical applications.

- Configure and Test: Configure the chosen tools to align with the defined prioritization criteria and resource allocation policies. Thoroughly test the automated systems to ensure they function correctly and meet performance expectations.

- Integrate with Existing Monitoring Systems: Integrate the automated systems with existing monitoring tools to provide a unified view of workload performance and resource utilization. This integration allows for centralized monitoring and alerting.

- Provide Training and Documentation: Train the IT staff on the use of the new automation tools and provide comprehensive documentation. This ensures that the staff can effectively manage and troubleshoot the automated systems.

- Monitor and Optimize: Continuously monitor the performance of the automated systems and make adjustments as needed. Regularly review the automation rules and configurations to ensure they remain effective and aligned with changing business needs.

Designing a Prioritization Framework

Designing a robust prioritization framework is crucial for effectively managing workloads and optimizing resource allocation. This framework provides a structured approach to determine the order in which workloads are executed, ensuring that critical tasks receive the necessary resources and attention. A well-defined framework helps align IT operations with business objectives, improving overall efficiency and performance.

Structured Approach for Framework Design

A systematic approach ensures the prioritization framework is comprehensive and adaptable. It involves several key steps, from defining goals to continuous refinement.

- Define Objectives and Goals: The initial step involves clearly identifying the business objectives and goals that the prioritization framework aims to support. These goals could include improving application performance, reducing operational costs, or enhancing customer experience. Understanding these objectives is fundamental to setting the right priorities.

- Identify Workloads: Catalog all workloads that will be managed by the framework. This includes applications, services, processes, and any other tasks that consume resources. Categorizing workloads helps in understanding their nature and impact.

- Establish Prioritization Criteria: Develop a set of criteria to assess the importance of each workload. These criteria should be measurable and relevant to the defined objectives. Examples include business impact, urgency, resource consumption, and dependencies.

- Assign Weights to Criteria: Determine the relative importance of each prioritization criterion by assigning weights. This reflects the organization’s priorities. For example, business impact might be weighted more heavily than resource consumption.

- Develop a Scoring System: Create a scoring system to evaluate each workload against the established criteria. This system could use a numerical scale or a qualitative assessment. The scoring system should be consistent and easy to apply.

- Document Dependencies: Map the dependencies between workloads. Understanding dependencies is crucial to avoid cascading failures and to ensure that dependent tasks are prioritized appropriately.

- Create a Prioritization Matrix: Develop a matrix or table that displays the priority scores for each workload, taking into account all the factors. This matrix provides a clear overview of workload priorities.

- Implement and Test: Implement the prioritization framework and test it in a controlled environment. This allows for identifying any issues or areas for improvement before full-scale deployment.

- Monitor and Evaluate: Continuously monitor the performance of the framework and evaluate its effectiveness. Regularly review and update the framework to adapt to changing business needs and technological advancements.
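The dependency-mapping step feeds directly into execution order: a workload should not be scheduled before the workloads it depends on. The sketch below uses Python's standard-library `graphlib.TopologicalSorter` (Python 3.9+) and, among workloads whose dependencies are already satisfied, runs higher-priority ones first. The workload names and scores are hypothetical, loosely following the template example.

```python
from graphlib import TopologicalSorter


def execution_order(dependencies, priority):
    """Order workloads so each runs after its dependencies.

    dependencies: dict of workload -> set of workloads it depends on.
    priority: dict of workload -> priority score (higher runs first when ready).
    Ties within each ready batch are broken by name for a deterministic result.
    Raises graphlib.CycleError (a ValueError) if the dependencies are circular.
    """
    sorter = TopologicalSorter(dependencies)
    sorter.prepare()  # detects cycles before any work is scheduled
    order = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready(), key=lambda w: (-priority.get(w, 0), w))
        for w in ready:
            order.append(w)
            sorter.done(w)
    return order
```

With the order-processing and reporting workloads from the template, the three infrastructure dependencies come first, then order processing (score 24) ahead of reporting (score 18). A circular dependency makes `prepare()` raise `graphlib.CycleError`, which is a useful signal that the dependency map needs review.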

Template for Documenting Priorities and Dependencies

A well-structured template facilitates clear communication and consistency in documenting workload priorities and dependencies. This template should include key information to ensure everyone understands the prioritization process.

The template could be a spreadsheet or a database, with the following columns:

| Workload ID | Workload Name | Description | Business Impact (Score) | Urgency (Score) | Resource Consumption (Score) | Dependencies | Priority Score (Total) | Priority Level | Owner | Notes |
|---|---|---|---|---|---|---|---|---|---|---|
| 101 | Order Processing System | Handles online order transactions | 9 | 8 | 7 | Database Server, Payment Gateway | 24 | High | IT Department | Critical for revenue generation |
| 102 | Reporting Application | Generates business performance reports | 7 | 6 | 5 | Data Warehouse | 18 | Medium | Business Intelligence Team | Reports are generated daily |

Explanation of Columns:

- Workload ID: A unique identifier for each workload.

- Workload Name: The name of the workload.

- Description: A brief description of the workload’s function.

- Business Impact (Score): A numerical score reflecting the impact on the business (e.g., 1-10).

- Urgency (Score): A numerical score reflecting the urgency of the workload (e.g., 1-10).

- Resource Consumption (Score): A numerical score reflecting resource usage (e.g., 1-10).

- Dependencies: List of other workloads or resources that this workload depends on.

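- Priority Score (Total): The total score calculated from the weighted criteria. In the example rows every weight is 1, so the total is the plain sum of the three scores (for example, 9 + 8 + 7 = 24).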

- Priority Level: The overall priority level (e.g., High, Medium, Low) based on the score.

- Owner: The individual or team responsible for the workload.

- Notes: Additional information or comments.
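The scoring columns can be computed mechanically. The sketch below reproduces the template's example totals with all weights equal to 1; the weight values and the High/Medium/Low cut-offs (22 and 15) are hypothetical and should come from the weights chosen when designing the framework.

```python
# Weights per criterion. The template's example rows use equal weights of 1,
# so the total is the plain sum of the three scores.
WEIGHTS = {"business_impact": 1.0, "urgency": 1.0, "resource_consumption": 1.0}

# Hypothetical cut-offs mapping a total score to a priority level,
# checked from highest to lowest.
LEVELS = [(22, "High"), (15, "Medium"), (0, "Low")]


def priority_score(scores, weights=WEIGHTS):
    """Weighted sum of a workload's criterion scores."""
    return sum(weights[c] * scores[c] for c in weights)


def priority_level(total):
    for cutoff, level in LEVELS:
        if total >= cutoff:
            return level
    return "Low"  # totals below every cut-off (e.g., negative) fall to Low
```

Raising the business-impact weight to 2 would lift the order processing total to 33 (2 × 9 + 8 + 7). As in the example rows, a higher resource-consumption score increases the total; an organization that treats heavy resource use as a cost could give that criterion a negative weight instead.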

Communicating the Prioritization Framework to Stakeholders

Effective communication is essential for the successful adoption and ongoing use of the prioritization framework. Stakeholders must understand how the framework works and its impact on their roles.

The communication plan should include:

- Initial Announcement: Announce the implementation of the prioritization framework to all relevant stakeholders. Clearly state the purpose, benefits, and scope of the framework.

- Training and Education: Provide training sessions or documentation to educate stakeholders on the framework’s details, including how priorities are determined and how they will affect their tasks.

- Regular Updates: Provide regular updates on the performance of the framework, any changes to priorities, and any issues that arise.

- Transparency: Maintain transparency in the prioritization process. Make the prioritization matrix and scoring criteria accessible to stakeholders.

- Feedback Mechanisms: Establish mechanisms for stakeholders to provide feedback on the framework, such as surveys, feedback forms, or regular meetings.

- Visual Aids: Use visual aids, such as diagrams, charts, and dashboards, to communicate complex information in an easy-to-understand manner. For example, a simple chart showing the distribution of workloads across priority levels can be very effective.

- Executive Sponsorship: Secure support from senior management to ensure the framework is respected and enforced across the organization. Executive sponsorship reinforces the importance of the framework and ensures that resources are allocated appropriately.

- Tailored Communication: Tailor the communication to different stakeholder groups. For example, technical teams might require detailed technical documentation, while business users might need explanations of the impact on their operations.

Illustrative Examples and Case Studies

Effective workload prioritization can transform system performance, especially in complex environments. Real-world examples and case studies provide concrete evidence of the benefits and highlight the practical application of the strategies discussed. These examples illustrate how to identify and address performance bottlenecks, improve resource utilization, and ultimately enhance overall system efficiency.

Real-World Scenario: E-commerce Platform During Peak Shopping Season

Consider a large e-commerce platform experiencing a surge in traffic during its annual holiday shopping season. The platform handles various workloads, including user requests for product browsing, adding items to carts, processing payments, and updating inventory. Without proper prioritization, these workloads can compete for resources, leading to slow response times, abandoned carts, and lost revenue.

The platform implemented a workload prioritization strategy based on business impact and service-level agreements (SLAs).

- Prioritization Criteria: Critical workloads, such as payment processing and order fulfillment, were assigned the highest priority. User-facing requests for browsing and adding items to carts received medium priority, while background tasks like inventory updates and analytics processing were assigned lower priority.

- Implementation: The platform utilized a combination of techniques, including quality of service (QoS) settings, resource quotas, and scheduling algorithms. For example, payment processing servers were allocated dedicated CPU and memory resources to ensure rapid transaction completion.

- Results: This prioritization strategy significantly improved system performance. Payment processing times decreased by 40%, and the platform experienced a 25% reduction in abandoned carts. The system remained stable even during peak traffic, leading to a substantial increase in sales and customer satisfaction.
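The tiering rule from this scenario can be sketched as a small in-process dispatcher. This is a minimal sketch under stated assumptions, not the platform's actual implementation: the tier mapping, the job kinds, and the `PriorityDispatcher` class are all hypothetical. A min-heap keyed on priority ensures the highest-priority pending job is always dispatched first, and a sequence counter keeps first-in, first-out order within a tier.

```python
import heapq
import itertools
from dataclasses import dataclass, field
from typing import List, Optional

# Hypothetical tier mapping for the scenario above: lower number = served first.
PRIORITY = {
    "payment": 0, "fulfillment": 0,   # critical, revenue-bearing work
    "browse": 1, "cart": 1,           # user-facing requests
    "inventory": 2, "analytics": 2,   # background tasks
}
LOWEST = max(PRIORITY.values())


@dataclass(order=True)
class Job:
    priority: int
    seq: int                              # tie-breaker: FIFO order within a tier
    kind: str = field(compare=False)
    payload: str = field(compare=False)


class PriorityDispatcher:
    """Always hands out the highest-priority pending job (a min-heap on priority)."""

    def __init__(self) -> None:
        self._heap: List[Job] = []
        self._counter = itertools.count()

    def submit(self, kind: str, payload: str) -> None:
        # Unknown workload kinds fall into the lowest tier rather than jumping the queue.
        prio = PRIORITY.get(kind, LOWEST)
        heapq.heappush(self._heap, Job(prio, next(self._counter), kind, payload))

    def next_job(self) -> Optional[Job]:
        return heapq.heappop(self._heap) if self._heap else None

    def __len__(self) -> int:
        return len(self._heap)


if __name__ == "__main__":
    d = PriorityDispatcher()
    for kind, payload in [("analytics", "nightly-report"), ("browse", "GET /products/42"),
                          ("payment", "order-1001"), ("cart", "add sku-7")]:
        d.submit(kind, payload)
    print([d.next_job().kind for _ in range(len(d))])
    # prints ['payment', 'browse', 'cart', 'analytics']
```

Strict priority like this can starve background tasks under sustained load; production schedulers commonly add aging or weighted sharing to prevent that.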

Complex System Illustration: Prioritized Workloads in a Financial Institution

In a financial institution, multiple critical applications compete for system resources. The following table lists representative workloads, their priorities, and their resource requirements.

| Workload | Priority | Description | Resource Requirements (Example) |
|---|---|---|---|
| Online Banking Transactions | High | Processing user logins, fund transfers, and account inquiries. | CPU: High, Memory: Medium, Network: High |
| Fraud Detection | High | Analyzing transactions in real time to identify and prevent fraudulent activities. | CPU: High, Memory: Medium, Network: Medium |
| Batch Processing (End-of-Day) | Medium | Generating reports, updating account balances, and performing regulatory compliance checks. | CPU: High, Memory: High, Disk I/O: High |
| Market Data Feeds | Medium | Receiving and processing real-time stock market data. | CPU: Medium, Memory: Medium, Network: High |
| Internal Reporting and Analytics | Low | Generating reports for internal analysis and decision-making. | CPU: Medium, Memory: High, Disk I/O: High |

This table provides a clear overview of the various workloads, their priorities, and their resource demands. The financial institution can use this information to implement appropriate prioritization strategies, ensuring that critical workloads receive the necessary resources to maintain system stability and performance.
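One way to turn the priority column into an allocation is proportional sharing: each workload receives a slice of a contended resource pool in proportion to a weight derived from its tier. The sketch below assumes hypothetical weights (High = 4, Medium = 2, Low = 1) and a hypothetical pool of 26 vCPUs; only the workload names and tiers come from the table.

```python
from typing import Dict

# Hypothetical weights per tier; the table gives tiers, not numbers.
TIER_WEIGHTS = {"High": 4, "Medium": 2, "Low": 1}

# Workloads and tiers taken from the table above.
WORKLOADS = {
    "Online Banking Transactions": "High",
    "Fraud Detection": "High",
    "Batch Processing (End-of-Day)": "Medium",
    "Market Data Feeds": "Medium",
    "Internal Reporting and Analytics": "Low",
}


def proportional_shares(workloads: Dict[str, str], total_units: float) -> Dict[str, float]:
    """Split total_units among workloads in proportion to their tier weight.

    This models contention only: when every workload wants more than it gets,
    each receives weight / sum(weights) of the pool.
    """
    weights = {name: TIER_WEIGHTS[tier] for name, tier in workloads.items()}
    total_weight = sum(weights.values())
    return {name: total_units * w / total_weight for name, w in weights.items()}


if __name__ == "__main__":
    # Hypothetical pool of 26 vCPUs; weights sum to 13, so each weight unit = 2 vCPUs.
    for name, share in proportional_shares(WORKLOADS, 26).items():
        print(f"{name:<34} {share:4.1f} vCPUs")
```

With these weights, each High workload gets 8 vCPUs, each Medium workload 4, and internal reporting 2. Proportional-share mechanisms such as Linux cgroup v2 `cpu.weight` follow the same principle, although this sketch does not call them.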

Lessons Learned from Implementing Workload Prioritization

Implementing workload prioritization strategies offers valuable lessons applicable across various environments. These lessons encompass technical, operational, and strategic aspects.

- The Importance of Accurate Workload Profiling: Thoroughly understanding the characteristics of each workload is critical. This includes identifying resource consumption patterns, dependencies, and performance requirements. Without accurate profiling, prioritization strategies may be ineffective.

- The Value of Monitoring and Tuning: Workload prioritization is not a set-it-and-forget-it process. Continuous monitoring and performance tuning are essential to adapt to changing workloads and system conditions. Regular reviews and adjustments to prioritization rules are necessary to maintain optimal performance.

- The Need for Collaboration: Successful workload prioritization requires collaboration between different teams, including application developers, system administrators, and business stakeholders. Aligning priorities with business goals and service-level agreements is essential for achieving the desired outcomes.

- The Impact of Automation: Automating the prioritization process can significantly improve efficiency and reduce manual intervention. This includes automating resource allocation, scheduling, and performance monitoring. Automation ensures consistent and reliable prioritization across the system.

- The Role of Capacity Planning: Prioritization can help optimize existing resources, but it cannot compensate for insufficient capacity. Effective capacity planning ensures that the system has enough resources to handle all workloads, even during peak periods. A simple rule of thumb is:

Capacity = (Average Workload) + (Peak Surge above Average) + (Buffer for Unexpected Events)

Here the peak term is the surge above the average rather than the full peak load; adding the full peak to the average would count the baseline twice.
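The capacity rule can be computed from observed load samples. This is a minimal sketch that reads the "Peak Load" term as the surge above the average, so the baseline is counted only once, and it assumes the buffer is a fixed fraction of the observed peak (25% here). Both the buffer rule and the sample values are hypothetical.

```python
from statistics import mean
from typing import Dict, Sequence


def required_capacity(samples: Sequence[float], buffer_ratio: float = 0.25) -> Dict[str, float]:
    """Capacity = average + surge above average + buffer.

    Assumptions (not from the guide): 'Peak Load' is measured as the surge above
    the average, and the buffer is a fixed fraction of the observed peak.
    """
    if not samples:
        raise ValueError("need at least one load sample")
    average = mean(samples)
    peak = max(samples)
    surge = peak - average            # how far the peak rises above the average
    buffer = buffer_ratio * peak      # headroom for unexpected events
    return {"average": average, "surge": surge, "buffer": buffer,
            "capacity": average + surge + buffer}


if __name__ == "__main__":
    # Hypothetical requests/second sampled over a shopping-season day.
    print(required_capacity([120, 150, 400, 180]))
    # prints {'average': 212.5, 'surge': 187.5, 'buffer': 100.0, 'capacity': 500.0}
```

Because average plus surge equals the peak, this reading of the formula reduces to peak × (1 + buffer_ratio); keeping the terms separate makes it easier to see where the headroom comes from.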

Summary

Prioritizing workloads for optimization is an ongoing effort that requires a solid understanding of system dynamics and a proactive approach to resource management. By applying the principles and strategies outlined in this guide, you can turn your systems into high-performing, reliable assets. Continuous monitoring, performance tuning, and adaptation to changing business needs are key to sustaining that performance and keeping your systems efficient and effective.

Embrace these strategies and unlock the full potential of your infrastructure.

Frequently Asked Questions

What is the primary benefit of workload prioritization?

The primary benefit is improved system performance, leading to faster response times, reduced latency, and more efficient resource utilization, ultimately enhancing user experience and business outcomes.

How often should I review and adjust my workload priorities?

Priorities should be reviewed and adjusted regularly, ideally as part of a continuous monitoring and tuning process. The frequency depends on the system’s dynamics, but monthly or quarterly reviews are a good starting point, with more frequent adjustments if business needs or system behavior changes significantly.

What are the potential risks of poor workload prioritization?

Poor prioritization can lead to performance bottlenecks, resource contention, and system instability. This can result in slow response times, application crashes, and ultimately, a negative impact on user experience and business operations.

Can workload prioritization be automated?

Yes, workload prioritization can and should be automated using tools and scripts. Automation ensures consistent application of priorities, reduces manual effort, and allows for dynamic adjustments based on real-time system conditions.
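A dynamic adjustment rule of this kind can be sketched as one step of a control loop. This is a minimal sketch of a hypothetical SLA-driven policy, not a specific tool's behavior: `WorkloadState`, `adjust_priority`, the one-tier boost limit, and the 50% relaxation threshold are all assumptions. In practice the latency readings would come from your monitoring system, and the resulting priority would be applied through whatever mechanism your platform provides.

```python
from dataclasses import dataclass


@dataclass
class WorkloadState:
    name: str
    base_priority: int   # tier assigned by policy (0 = highest)
    priority: int        # current, possibly boosted tier
    sla_ms: float        # latency target for this workload
    p95_ms: float        # latest observed 95th-percentile latency


def adjust_priority(w: WorkloadState, max_boost: int = 1,
                    relax_ratio: float = 0.5) -> WorkloadState:
    """One step of a hypothetical SLA-driven rule.

    - SLA breached: promote one tier, but never more than max_boost tiers
      above the policy tier (so background work cannot take over the top tier).
    - Latency well inside the SLA (below relax_ratio * sla_ms): restore the
      policy tier.
    - Otherwise: leave the priority unchanged.
    """
    ceiling = max(0, w.base_priority - max_boost)   # highest tier this workload may reach
    if w.p95_ms > w.sla_ms and w.priority > ceiling:
        w.priority -= 1
    elif w.p95_ms < relax_ratio * w.sla_ms:
        w.priority = w.base_priority
    return w


if __name__ == "__main__":
    reports = WorkloadState("analytics", base_priority=2, priority=2, sla_ms=2000, p95_ms=3500)
    for observed in (3500, 3000, 800):     # simulated monitoring readings
        reports.p95_ms = observed
        adjust_priority(reports)
        print(observed, "->", reports.priority)
    # prints: 3500 -> 1, 3000 -> 1, 800 -> 2 (one per line)
```

The boost ceiling and the gap between the breach and relaxation thresholds keep the rule from oscillating or letting low-priority work displace critical workloads.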