The pervasive belief that latency is zero in system architecture often leads to performance bottlenecks and system instability. This comprehensive guide delves into the intricacies of this fallacy, exploring its manifestations, associated metrics, and strategies for mitigating its detrimental effects. We’ll examine real-world examples and discuss resilience techniques to ensure robust and efficient systems.

This guide meticulously dissects the ‘latency is zero’ fallacy, exposing its underlying assumptions and demonstrating how to design systems that accurately account for real-world latency. By understanding and addressing latency, we can optimize performance, enhance user experience, and build more reliable systems.

Defining the Fallacy

The “latency is zero” fallacy is a common misconception in system architecture, arising from the desire for instantaneous responses and seamless user experiences. It stems from a simplified view of systems that overlooks the physical limitations inherent in any computing environment. This simplified model, while appealing, leads to unrealistic expectations and can hinder the design of robust and efficient systems. The core of the fallacy is the belief that data transfer or processing can occur instantly.

This ignores the complexities of network communication, data storage retrieval, and the inherent delays in hardware operations. While modern technologies strive to minimize latency, achieving true zero latency remains unattainable. Understanding the limits and variations in latency is crucial for creating accurate system models and expectations.

Definition of “Latency is Zero”

“Latency is zero” in the context of system architecture refers to the unrealistic assumption that data transmission, processing, or response time can be instantaneous. This often manifests in architectural designs that neglect the delays inherent in various components of a system, leading to misaligned expectations and potential for system failure or instability.

Common Misconceptions

Common misconceptions surrounding zero latency often stem from focusing on individual components in isolation. For example, a high-speed network connection might seem to offer instantaneous communication, but this overlooks the delays added along the path: routing, queuing under network congestion, and the recipient’s own processing time. Similarly, a powerful processor might seem capable of handling any load, but its effective speed is limited by the input data rate, memory access time, and other architectural bottlenecks.

The fallacy often neglects the cumulative effect of these delays.
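To make the cumulative effect concrete, the Python sketch below composes per-hop latencies. The hop names and millisecond values are hypothetical, chosen only for illustration. Calls that must happen one after another add up, while independent calls issued concurrently cost roughly as much as the slowest one; neither case comes close to zero.

```python
# Hypothetical per-hop latencies in milliseconds (illustrative, not measured).
CHAIN_MS = {
    "client_to_gateway": 20.0,
    "gateway_to_service": 2.0,
    "service_to_database": 1.5,
    "database_query": 8.0,
    "service_processing": 5.0,
}

# Hypothetical independent backend calls made while building one response.
FANOUT_MS = {"inventory": 12.0, "pricing": 7.0, "recommendations": 30.0}


def sequential_latency_ms(hops: dict[str, float]) -> float:
    """Each hop waits for the previous one, so the delays add up."""
    return sum(hops.values())


def parallel_latency_ms(hops: dict[str, float]) -> float:
    """Independent calls issued concurrently finish when the slowest one does."""
    return max(hops.values())


if __name__ == "__main__":
    print(f"request chain:         {sequential_latency_ms(CHAIN_MS):.1f} ms")
    print(f"fan-out, one by one:   {sequential_latency_ms(FANOUT_MS):.1f} ms")
    print(f"fan-out, concurrently: {parallel_latency_ms(FANOUT_MS):.1f} ms")
```

Even in the concurrent case, a request can be no faster than its slowest dependency.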

Inherent Limitations

Achieving true zero latency is fundamentally impossible in any system, because the physical laws governing information transmission and processing impose hard limits. The speed of light, for instance, sets an upper bound on how fast a signal can travel. Even in the fastest optical networks, latency exists because signals need time to traverse the physical medium; in optical fiber, light travels at roughly two-thirds of its speed in a vacuum. Additionally, hardware operations, such as data retrieval from memory, involve delays.

Complex algorithms require processing time, and network congestion can introduce unpredictable delays.
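A back-of-the-envelope calculation shows the floor that physics alone sets. This sketch assumes a refractive index of about 1.47 for silica fiber and a great-circle distance of roughly 5,570 km between New York and London; both are approximations. Real cable routes are longer than the great-circle path, and routing, queuing, and processing add further delay on top.

```python
SPEED_OF_LIGHT_KM_PER_S = 299_792.458  # in a vacuum
FIBER_REFRACTIVE_INDEX = 1.47  # assumption: typical value for silica fiber


def propagation_delay_ms(distance_km: float,
                         refractive_index: float = FIBER_REFRACTIVE_INDEX) -> float:
    """One-way propagation delay through fiber, ignoring every other source of latency."""
    signal_speed_km_per_s = SPEED_OF_LIGHT_KM_PER_S / refractive_index
    return distance_km / signal_speed_km_per_s * 1000.0


if __name__ == "__main__":
    # Assumption: ~5,570 km great-circle distance between New York and London.
    one_way = propagation_delay_ms(5_570)
    print(f"one way: {one_way:.1f} ms, round trip: {2 * one_way:.1f} ms")
```

This works out to about 27 ms one way and 55 ms for a round trip before a single byte is processed.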

Theoretical vs. Practical System Limitations

| Characteristic | Theoretical Zero Latency | Practical System Limitations |
|---|---|---|
| Data Transmission Speed | Instantaneous | Limited by the speed of light and the physical medium. Examples include signal propagation delay in copper or fiber optic cables. |
| Processing Time | Instantaneous | Limited by the clock cycle time of the processor, memory access latency, and the complexity of the algorithm. Examples include cache misses and branch mispredictions. |
| Network Congestion | Absent | Highly variable and unpredictable. Examples include network traffic spikes, routing issues, and queuing delays. |
| System Response Time | Instantaneous | Determined by the cumulative delays across all system components. Examples include database queries, user interface updates, and complex calculations. |
| Error Handling | Error-free | Potential for errors, delays, and failures due to hardware malfunctions, software bugs, and external factors. Examples include network packet loss, server outages, and data corruption. |

Identifying Manifestations

The “latency is zero” fallacy, while often tempting for simplifying architecture design, can lead to significant performance issues and degraded user experiences. Recognizing its manifestations across various system types is crucial for developing robust and performant systems. This section examines common architectural patterns contributing to this fallacy and illustrates how it presents itself in diverse system environments. Understanding the consequences of ignoring latency is vital for building systems that meet user expectations. Architectural designs that implicitly assume latency-free interactions often mask underlying complexities.

This simplification can lead to brittle systems that struggle to handle realistic workloads. The hidden costs of ignoring latency manifest in reduced scalability, increased error rates, and degraded performance. Moreover, the perceived speed can create a false sense of efficiency, delaying the identification of real bottlenecks.

Common Architectural Patterns Contributing to the Fallacy

Several architectural patterns can contribute to the “latency is zero” fallacy. Microservices architectures promote modularity, but every inter-service call adds a network round trip, so latency accumulates unless inter-service communication is designed carefully. Event-driven architectures can experience delays in processing events if their asynchronous messaging is not optimized. Message queues, designed for decoupling, add queuing delay that grows when consumers cannot keep up with producers, so they need appropriate scaling.

In distributed systems, a failure to anticipate network delays and data synchronization overhead can quickly expose the fallacy.
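Queuing delay grows non-linearly with load. As a rough illustration, the sketch below uses the textbook M/M/1 queue model, which is an assumption here (Poisson arrivals, exponentially distributed service times, a single consumer). In that model, the mean time a message spends waiting plus being served is W = 1 / (μ - λ), where μ is the service rate and λ the arrival rate. The 100 messages-per-second consumer is hypothetical.

```python
def mm1_mean_time_in_system_s(arrival_rate: float, service_rate: float) -> float:
    """Mean time (queuing + service) per message in an M/M/1 queue.

    Both rates are in messages per second.
    """
    if arrival_rate >= service_rate:
        # Arrivals outpace the consumer, so the queue grows without bound.
        return float("inf")
    return 1.0 / (service_rate - arrival_rate)


if __name__ == "__main__":
    service_rate = 100.0  # hypothetical consumer handling 100 msg/s
    for arrival_rate in (50.0, 90.0, 99.0):
        utilization = arrival_rate / service_rate
        wait_ms = mm1_mean_time_in_system_s(arrival_rate, service_rate) * 1000
        print(f"utilization {utilization:.0%}: mean delay {wait_ms:.0f} ms")
```

Under this model, the same consumer that averages 20 ms at 50% utilization averages a full second at 99%, which is why a queue that looks instantaneous in a lightly loaded test can dominate latency in production.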

Examples of Manifestation in Different System Types

The “latency is zero” fallacy appears in diverse system types. In web applications, it manifests as interfaces designed as if user actions receive immediate responses, even when data fetching or complex computations are occurring behind the scenes. This can lead to sluggish performance or unresponsive interfaces, particularly under load. In game servers, it manifests as designs that assume player actions are reflected in the game world instantly.

This can lead to desynchronization and a jarring experience, particularly in online multiplayer games.

Consequences of Adhering to the Fallacy

Ignoring latency can have significant repercussions for system performance and user experience. Reduced scalability is a key consequence. Systems designed without considering latency constraints may struggle to handle increased workloads, resulting in performance degradation. Increased error rates can arise from the assumption of instantaneous interactions. For example, data inconsistencies, incorrect state updates, or failed transactions can occur due to the lack of appropriate handling for latency.

Ultimately, a poor user experience is a common consequence, leading to frustration and dissatisfaction.

Latency Contributions Across System Components

| System Component | Typical Latency Contribution |
|---|---|
| Network Communication | Variable, dependent on network conditions, distance, and congestion. Can be significant in geographically distributed systems. |
| Database Queries | Database operations can introduce delays, especially with large datasets or complex queries. |
| API Calls | Inter-service communication latency can be substantial, especially if services are not optimized for quick response times. |
| Caching Mechanisms | Caching can reduce latency but may introduce delays if cache misses occur. |
| Computational Tasks | Complex calculations or data processing can take time, introducing delays that should be anticipated. |

This table provides a simplified overview. The actual latency contributions vary significantly based on specific implementation details and operational conditions.

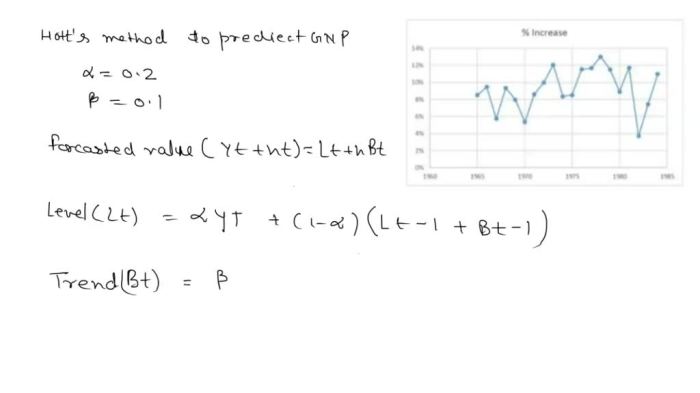

Understanding the Metrics

Accurate measurement of latency is crucial for identifying and addressing performance bottlenecks in any system. Inaccurate or incomplete latency measurements can lead to misleading conclusions and ineffective optimization strategies. This section explores the importance of various latency metrics and how they can reveal the “latency is zero” fallacy.

Exposing the fallacy requires careful examination of the different aspects of latency and of the tools used to measure them, leading to a comprehensive understanding of the system’s true performance characteristics.

Importance of Accurate Latency Measurement

Accurate latency measurement is fundamental to system performance analysis. Inaccurate measurements can obscure real performance issues and lead to misinformed decisions about optimization strategies. A system might appear to perform exceptionally well based on incomplete latency data, masking underlying bottlenecks. This can lead to inefficient resource allocation and ultimately a degradation of overall system performance. Thorough and precise latency measurements are essential to achieving optimal system efficiency.

Latency Metric Examples

This section details various latency metrics and their roles in exposing the “latency is zero” fallacy.

- End-to-End Latency: This metric measures the total time taken for a request to traverse the entire system, from initiation to completion. It provides a holistic view of the system’s performance, encompassing all stages of processing and communication. End-to-end latency is a vital metric for understanding user experience and overall system responsiveness. For example, in an e-commerce website, end-to-end latency would encompass the time taken for a user to click “purchase” and receive confirmation.

- Network Latency: This metric focuses on the time spent transmitting data over a network. It can reveal bottlenecks in network infrastructure, such as slow internet connections or congested routers. Network latency is critical in distributed systems, where communication across multiple locations is essential.

- Processing Latency: This metric measures the time a system takes to process a request or task, including time spent executing algorithms, interacting with databases, or performing other computational operations. High processing latency can stem from insufficient computing resources or inefficient algorithms. For example, a form whose input takes a noticeably long time to be validated and displayed back to the user exhibits high processing latency.

Using Metrics to Expose the Fallacy

Latency metrics expose the “latency is zero” fallacy by highlighting the inherent delays in each system component. Analyzing end-to-end, network, and processing latency together shows that delays are always present, regardless of how fast individual parts of the system may appear.

Latency Measurement Tools Comparison

The following table compares and contrasts popular latency measurement tools. Choosing the appropriate tool depends on the specific needs and characteristics of the system under investigation.

| Tool | Strengths | Weaknesses | Use Cases |
|---|---|---|---|
| SystemTap | Precise profiling capabilities, deep insight into system behavior | Steeper learning curve, requires familiarity with scripting | Identifying performance bottlenecks within the operating system, kernel-level analysis |
| JMeter | Versatile, supports various protocols, good for simulating large loads | Less precise for low-level latency measurements | Testing web applications under heavy load, simulating user interactions |
| Wireshark | Captures network traffic in detail, excellent for network latency analysis | Requires manual analysis of captured data, not suitable for high-frequency events | Analyzing network packets, identifying network bottlenecks |
| perf | Excellent for precise performance analysis of applications, accurate timing measurements | Requires a Linux environment, not universally applicable | Measuring CPU and memory usage, pinpointing bottlenecks in application code |

Architecting for Realistic Latency

Acknowledging that latency is inherent in any distributed system is crucial for building robust and performant applications. Ignoring this fundamental aspect can lead to unexpected performance bottlenecks and user frustration. This section covers the practicalities of designing systems that explicitly account for and manage non-zero latency. Designing for realistic latency necessitates a shift in mindset. Instead of striving for instantaneous responses, we focus on building systems that effectively manage and compensate for the inevitable delays inherent in network communication, data processing, and resource contention.

This proactive approach results in applications that are more resilient, predictable, and ultimately, more user-friendly.

Importance of Acknowledging Non-Zero Latency

Systems must be designed with the understanding that latency is not a bug but an inherent part of their operation. Accepting this reality allows for the development of strategies that optimize performance within the constraints imposed by latency. Neglecting this factor can lead to frustrating performance issues, particularly in distributed systems where delays are more pronounced.

Strategies for Efficient Latency Management

Latency management involves multiple strategies, tailored to specific system layers. Careful consideration of these strategies ensures that applications are prepared for and can mitigate delays effectively. This proactive approach contributes to greater application resilience and performance.

- Network Optimization: Choosing appropriate network protocols, optimizing network configurations, and utilizing techniques such as caching can significantly reduce latency in data transfer. Utilizing content delivery networks (CDNs) and strategically placing servers closer to users can further improve network performance by reducing the distance data must travel.

- Data Caching: Caching frequently accessed data at various points in the system can significantly reduce latency by eliminating the need to retrieve data from slower sources. Effective caching strategies often require understanding data access patterns and utilizing appropriate caching mechanisms.

- Asynchronous Operations: Employing asynchronous operations, such as message queues, allows the system to continue processing other requests while waiting for responses from external services or resources. This approach is vital in handling tasks that might involve significant delays, preventing a bottleneck in the main application thread.
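The benefit of asynchronous operations can be sketched with Python's `asyncio`. Here `call_service` is a hypothetical stand-in for a remote call, with `asyncio.sleep` simulating network delay. Three independent 100 ms calls take about 300 ms when awaited one after another, but about 100 ms when started together with `asyncio.gather`; while they wait, the event loop is free to serve other work.

```python
import asyncio
import time


async def call_service(name: str, latency_s: float) -> str:
    """Hypothetical remote call; asyncio.sleep stands in for network wait time."""
    await asyncio.sleep(latency_s)
    return f"{name} done"


async def run_sequentially(calls: list[tuple[str, float]]) -> list[str]:
    # Each await suspends this coroutine until the previous call has returned.
    return [await call_service(name, delay) for name, delay in calls]


async def run_concurrently(calls: list[tuple[str, float]]) -> list[str]:
    # gather starts every call at once; results keep the input order.
    return list(await asyncio.gather(*(call_service(name, delay) for name, delay in calls)))


if __name__ == "__main__":
    calls = [("inventory", 0.1), ("pricing", 0.1), ("reviews", 0.1)]
    for runner in (run_sequentially, run_concurrently):
        start = time.perf_counter()
        asyncio.run(runner(calls))
        print(f"{runner.__name__}: {time.perf_counter() - start:.2f} s")
```

Concurrency only helps when the calls are truly independent; a call that needs another call's result still has to wait for it.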

Examples of Architectures Accounting for Latency

Numerous architectures explicitly account for latency in their design. These approaches range from simple caching strategies to complex distributed systems.

- Microservices Architecture: Microservices, by their nature, are designed with the expectation of some latency between individual services. Proper service discovery and inter-service communication protocols are critical in managing this latency.

- Event-Driven Architectures: These architectures often handle delays gracefully by decoupling components and enabling asynchronous processing. They are particularly well-suited for scenarios involving potentially lengthy operations.

- Distributed Caching Systems: Distributed caching systems, such as Redis or Memcached, are explicitly designed to minimize latency by caching frequently accessed data closer to the requesting components. This approach reduces the need to access slower data sources, thereby significantly improving response times.

Design Strategies Across System Layers

This table summarizes strategies for handling latency in various system layers.

| System Layer | Design Strategy |
|---|---|
| Network | Utilize optimized network protocols, leverage CDNs, and strategically place servers close to users. |
| Application | Implement caching mechanisms, employ asynchronous operations, and design for decoupled components. |
| Database | Optimize database queries, employ appropriate indexing strategies, and consider database sharding. |

Strategies for Mitigation

Minimizing latency without the unrealistic pursuit of zero is crucial for building robust and performant systems. This involves understanding and addressing the various factors contributing to latency, from network communication to data access and I/O operations. Effective mitigation strategies focus on optimization and intelligent resource allocation, ensuring responsiveness and efficiency. Real-world systems invariably exhibit latency, so mitigation strategies target practical improvements rather than unattainable ideals.

By acknowledging and addressing specific latency sources, architects can design systems that deliver acceptable performance without sacrificing crucial functionality.

Network Optimization Techniques

Network communication often significantly impacts overall system latency. Optimizing network interactions is vital for reducing delays. These strategies involve understanding network characteristics, protocol choices, and the underlying infrastructure.

- Choosing appropriate protocols: Selecting protocols optimized for specific data types and transmission characteristics can drastically reduce latency. For instance, using UDP for real-time data streams, where some packet loss is acceptable, can be faster than TCP, which prioritizes reliable delivery but incurs higher overhead.

- Network topology considerations: Physical network layouts significantly influence latency. Proximity of servers and clients minimizes transmission distance and, therefore, latency. Utilizing content delivery networks (CDNs) can reduce latency for geographically dispersed users by caching content closer to them.

- Implementing network caching: Caching frequently accessed data at intermediary points in the network can dramatically decrease the latency for subsequent requests. This reduces the load on the origin server, leading to faster responses.

Data Caching Strategies

Caching frequently accessed data significantly improves system responsiveness. Intelligent caching strategies reduce latency by storing data closer to the point of access.

- Employing various caching levels: Implementing multiple caching levels (e.g., browser cache, CDN cache, application cache) provides a hierarchical approach to data retrieval: each request is served from the fastest cache that holds the data, and only misses fall through to slower layers.

- Implementing an expiration policy: An expiration policy, often expressed as a time-to-live (TTL), removes cached entries after a defined period so the cache does not serve stale data indefinitely. The trade-off is that expired entries must be fetched again from the slower source.

- Utilizing in-memory data structures: Keeping frequently accessed data in in-memory data structures makes retrieval significantly faster than a database query. This approach, however, requires careful consideration of memory usage and potential data consistency issues.
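A minimal cache-aside helper with a TTL expiration policy might look like the following. `TTLCache` and `get_or_load` are hypothetical names for this sketch, not the API of Redis, Memcached, or any other product. A production cache would also need an eviction policy (for example, LRU) to bound memory, which is omitted here.

```python
import time
from typing import Callable, Dict, Hashable, Tuple


class TTLCache:
    """Hypothetical cache-aside helper: entries expire ttl_s seconds after loading."""

    def __init__(self, ttl_s: float, clock: Callable[[], float] = time.monotonic):
        self.ttl_s = ttl_s
        self.clock = clock  # injectable so expiry can be tested without sleeping
        self._entries: Dict[Hashable, Tuple[float, object]] = {}

    def get_or_load(self, key: Hashable, loader: Callable[[], object]) -> object:
        now = self.clock()
        entry = self._entries.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]  # fresh hit: no trip to the slow source
        value = loader()  # miss or expired entry: pay the full latency once
        self._entries[key] = (now + self.ttl_s, value)
        return value


if __name__ == "__main__":
    calls = []

    def slow_lookup():
        # Hypothetical stand-in for a database or remote-service read.
        calls.append(1)
        return {"name": "Ada"}

    cache = TTLCache(ttl_s=30.0)
    cache.get_or_load("user:42", slow_lookup)
    cache.get_or_load("user:42", slow_lookup)
    print(f"loader called {len(calls)} time(s)")  # prints: loader called 1 time(s)
```

The TTL is the knob that trades freshness for latency: a longer TTL means more fast hits but a longer window in which readers may see stale data.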

I/O Optimization Strategies

I/O operations, including disk access and database interactions, are often significant latency bottlenecks. Strategies for optimizing I/O operations can greatly improve system performance.

- Utilizing SSDs instead of HDDs: Solid-state drives (SSDs) have no moving parts, so they avoid the seek and rotational delays of traditional hard disk drives (HDDs) and offer substantially lower access latency and faster reads and writes. This results in quicker data retrieval and lower I/O latency.

- Optimizing database queries: Properly structured queries, appropriate indexes, and tuned database configurations are essential for efficient data retrieval. These techniques minimize the time spent on each database interaction.

- Implementing asynchronous I/O: Asynchronous I/O lets the system continue processing other tasks while waiting for I/O to complete. It does not make an individual I/O operation faster, but it keeps slow I/O from blocking other work, which reduces overall response times.
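To see how an index changes the work a query does, the sketch below uses SQLite from Python's standard library, chosen only because it is self-contained. The `orders` table, its columns, and the index name are hypothetical. `EXPLAIN QUERY PLAN` reports a full table scan before the index exists and an index search afterwards; other databases expose similar information through their own `EXPLAIN` commands.

```python
import sqlite3


def query_plan(conn: sqlite3.Connection, sql: str) -> str:
    """Return SQLite's EXPLAIN QUERY PLAN detail text (last column of each row)."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql).fetchall()
    return " | ".join(str(row[-1]) for row in rows)


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL)")
conn.executemany(
    "INSERT INTO orders (customer_id, total) VALUES (?, ?)",
    [(i % 1000, i * 1.5) for i in range(10_000)],
)

lookup = "SELECT total FROM orders WHERE customer_id = 42"
before = query_plan(conn, lookup)  # expected: a full scan of orders
conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
after = query_plan(conn, lookup)  # expected: a search using idx_orders_customer

print("before:", before)
print("after: ", after)
```

The exact wording of the plan varies between SQLite versions, but the shift from a scan to an index search is what matters: the lookup no longer touches every row.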

Techniques for Performance Evaluation

Evaluating the impact of latency on system performance requires meticulous techniques. Simply measuring response times isn’t sufficient; a holistic approach is needed to pinpoint the bottlenecks and understand the nuances of latency across various stages. This necessitates tools and methodologies that examine request processing in detail, from initial input to final output. Furthermore, these techniques must be adaptable to diverse architectures and incorporate latency considerations into the design process. Effective performance evaluation involves not only measuring latency but also analyzing its components and identifying areas for improvement.

By understanding how latency affects different stages of a request, we can isolate the root causes and implement targeted solutions. This systematic approach allows for the creation of robust, performant systems that anticipate and manage latency effectively.

Measuring the Impact of Latency on System Performance

Various metrics provide insight into the impact of latency on system performance. Response time, measured from the initiation of a request to its completion, is a primary indicator. Latency in specific stages, such as database queries or network transmissions, can be measured independently. Furthermore, the distribution of response times, often summarized by percentiles (e.g., the 95th percentile response time), reveals tail latency: the slow outliers that an average hides.

Monitoring the frequency of timeouts, errors, and dropped requests also provides valuable data on the system’s robustness in the face of latency.
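The difference between averages and percentiles is easy to demonstrate. This sketch uses the nearest-rank percentile method (one of several common definitions) on a hypothetical set of 100 response times in which 5% of requests are slow.

```python
import math
import statistics


def percentile(samples: list[float], p: float) -> float:
    """Nearest-rank percentile: smallest sample with at least p% of samples at or below it."""
    if not samples or not 0 < p <= 100:
        raise ValueError("need samples and 0 < p <= 100")
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


if __name__ == "__main__":
    # Hypothetical response times (ms): mostly fast, with a slow 5% tail.
    latencies_ms = [12.0] * 90 + [15.0] * 5 + [250.0] * 5
    print(f"mean: {statistics.mean(latencies_ms):.2f} ms")
    for p in (50, 95, 99):
        print(f"p{p}:  {percentile(latencies_ms, p):.1f} ms")
```

Here the mean (about 24 ms), the median (12 ms), and even p95 (15 ms) look healthy, while p99 (250 ms) shows that one request in twenty is an order of magnitude slower. Which percentile you track determines which problems you can see.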

Analyzing Latency in Different Stages of a Request

Precise analysis of latency involves dissecting the request lifecycle. Tools capable of capturing timestamps at key points in the process—such as database access, network transmission, and application logic execution—allow for detailed latency breakdowns. Identifying bottlenecks in specific stages, like database queries or API calls, helps pinpoint areas needing optimization. Visual representations, such as latency traces, enable a comprehensive understanding of the latency profile and highlight bottlenecks.
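Capturing timestamps around each stage can be as simple as a context manager. `StageTimer` below is a hypothetical helper, not part of any tracing library, and the stages are simulated with `time.sleep`; distributed tracing tools record comparable spans across services automatically.

```python
import time
from contextlib import contextmanager


class StageTimer:
    """Hypothetical helper that records wall-clock time per named request stage."""

    def __init__(self):
        self.durations_ms: dict[str, float] = {}

    @contextmanager
    def stage(self, name: str):
        start = time.perf_counter()
        try:
            yield
        finally:
            # Accumulate, so a stage entered several times reports its total.
            elapsed_ms = (time.perf_counter() - start) * 1000
            self.durations_ms[name] = self.durations_ms.get(name, 0.0) + elapsed_ms

    def report(self) -> list[tuple[str, float]]:
        """Stages sorted slowest first, pointing at the likely bottleneck."""
        return sorted(self.durations_ms.items(), key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    timer = StageTimer()
    with timer.stage("parse_request"):
        time.sleep(0.001)
    with timer.stage("database_query"):
        time.sleep(0.05)  # simulated slow query
    with timer.stage("render_response"):
        time.sleep(0.005)
    for name, ms in timer.report():
        print(f"{name:<16} {ms:6.1f} ms")
```

Because the timing is recorded in a `finally` block, stages that raise an exception are still measured, which matters when the slow path is also the failing one.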

Incorporating Latency into System Design Decisions

Designing systems with latency in mind involves proactively incorporating latency considerations throughout the development lifecycle. This proactive approach includes estimating latency for each component and designing for graceful degradation under pressure. Performance testing with realistic workloads, simulating anticipated user loads and request patterns, allows developers to understand system behavior under various latency conditions. Designing systems with redundancy and failover mechanisms to absorb spikes and mitigate the impact of component failures is crucial.

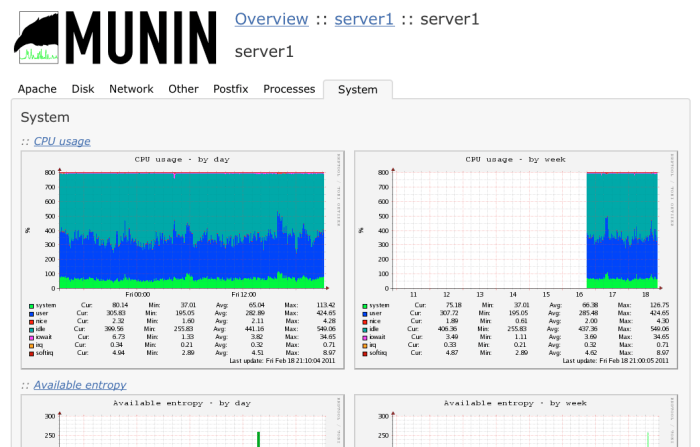

Tools for Monitoring and Analyzing System Performance Related to Latency

Various tools facilitate the monitoring and analysis of system performance related to latency. Network monitoring tools, like Wireshark, can capture and analyze network traffic, revealing bottlenecks in communication paths. Profiling tools, like Java VisualVM, offer insight into the execution time of different parts of the application code, highlighting areas needing optimization. Specialized performance monitoring tools, such as Dynatrace or New Relic, provide comprehensive views of system behavior, including latency metrics, across various components.

Log aggregation tools, such as the ELK stack (Elasticsearch, Logstash, and Kibana), allow logs to be analyzed so that events can be correlated with latency spikes.

Case Studies

The “latency is zero” fallacy, while often tempting in theoretical models, frequently leads to disappointing real-world performance. Examining real-world examples where this fallacy was embraced and its consequences provides invaluable insights for building robust and performant systems. Understanding both failures and successes allows us to develop more realistic architectural strategies.

Real-World Examples of Performance Issues Due to the Fallacy

The pursuit of seemingly instantaneous interactions can obscure the complexities of distributed systems. Many systems, particularly those relying on microservices architectures or cloud-based components, have suffered from performance degradation when developers underestimated the network and processing delays inherent in distributed operations.

- A popular e-commerce platform experienced significant delays in checkout processes. Initial design assumptions, based on a simplified model of the system, failed to account for the aggregate latency introduced by multiple database interactions, payment gateway processing, and asynchronous order confirmations. This resulted in user frustration and a decrease in conversion rates.

- A financial trading platform, optimizing for speed, prioritized low-latency database connections. However, the underlying infrastructure exhibited unexpected variability in response times, and transactions designed around an assumption of consistently near-zero latency occasionally failed due to connection drops or congestion. Unforeseen network issues significantly impacted transaction reliability.

Successful Mitigation of Latency Issues

Effective latency mitigation strategies involve a holistic approach, recognizing the diverse components contributing to overall response time.

- A social media platform implemented a caching strategy for frequently accessed data, such as user profiles and post feeds. This reduced the load on the backend database servers, improving response times for user requests. They also implemented load balancing to distribute the traffic evenly across servers, further enhancing scalability and performance.

- A streaming service adopted a content delivery network (CDN) to cache static assets closer to users’ geographic locations. This minimized the latency associated with fetching media files, providing a more responsive user experience, even for users located in geographically dispersed areas. They carefully measured the effectiveness of the CDN implementation, focusing on performance improvements in different regions.

Lessons Learned from Case Studies

The experiences described highlight the importance of thorough performance modeling and rigorous testing.

- Assumptions about zero latency are often unrealistic. Realistic models of system latency are crucial for accurate performance predictions. Systems must account for network conditions, database interactions, and resource contention.

- Ignoring the variability of network and processing times can lead to unreliable performance and system failures. Design strategies should incorporate fault tolerance and resilience to handle unexpected delays or failures.

- Careful monitoring and continuous evaluation of system performance are vital. Using appropriate metrics to track latency and resource usage provides insights into system behavior and enables proactive mitigation of performance issues.

Summary of Key Takeaways

| Case Study | Fallacy Manifestation | Mitigation Strategy | Key Takeaway |
|---|---|---|---|
| E-commerce Platform | Oversimplified latency model, neglecting aggregate delays | Caching, load balancing | Realistic modeling is crucial for accurate performance prediction. |
| Financial Trading Platform | Unrealistic assumption of constant low latency | Robust network monitoring, redundancy | Variability in network conditions must be considered. |
| Social Media Platform | High database load | Caching, load balancing | Optimized caching and resource distribution enhance performance. |
| Streaming Service | Network latency from remote users | Content Delivery Network (CDN) | Geographic distribution and caching improve responsiveness. |

Designing for Resilience

System latency is not a static value; it fluctuates based on various factors. Designing systems to withstand these fluctuations, rather than assuming a constant “zero” latency, is crucial for reliable performance and user experience. Resilience ensures that the system continues to function effectively even when unexpected latency spikes occur. This involves anticipating potential problems and building mechanisms to mitigate their impact.

Importance of Resilience in Distributed Systems

Distributed systems, by their nature, involve multiple components interacting over potentially unreliable networks. Latency variations across these components can lead to cascading failures if not anticipated and mitigated. Robust resilience strategies are essential to maintain system availability and performance during these fluctuations. Unforeseen issues like network congestion or component failures can cause significant performance degradation if not addressed proactively.

Building Redundancy for Latency Compensation

Redundancy is a fundamental strategy for compensating for latency spikes. It involves creating backup components or pathways that can take over if the primary ones experience high latency. This can include having multiple data centers, geographically distributed servers, or redundant network connections. By having alternative routes or resources available, the system can maintain performance even if a part of the system experiences a delay.

For instance, a web application could have multiple servers handling requests. If one server experiences high latency, traffic can be redirected to other available servers.
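One way to use redundant servers to absorb latency spikes, rather than only outright failures, is a technique commonly called a hedged request: send the request to the primary, and if it has not answered within a short delay, send a duplicate to a backup and take whichever answer arrives first. The sketch below is a minimal `asyncio` version; `hedged_call`, `slow_primary`, and `fast_backup` are hypothetical names, and the delays are illustrative.

```python
import asyncio
from typing import Awaitable, Callable, TypeVar

T = TypeVar("T")


async def hedged_call(primary: Callable[[], Awaitable[T]],
                      backup: Callable[[], Awaitable[T]],
                      hedge_after_s: float) -> T:
    """Ask primary; if it is still pending after hedge_after_s, also ask backup.

    Returns the first answer and cancels the other request. An exception from
    the winning request propagates to the caller.
    """
    first = asyncio.ensure_future(primary())
    done, _ = await asyncio.wait({first}, timeout=hedge_after_s)
    if done:
        return first.result()  # primary answered quickly; no duplicate sent
    second = asyncio.ensure_future(backup())
    done, pending = await asyncio.wait({first, second},
                                       return_when=asyncio.FIRST_COMPLETED)
    for task in pending:
        task.cancel()  # stop waiting on the slower replica
    return done.pop().result()


async def slow_primary() -> str:
    await asyncio.sleep(0.5)  # simulated latency spike on the primary
    return "primary"


async def fast_backup() -> str:
    await asyncio.sleep(0.01)
    return "backup"


if __name__ == "__main__":
    print(asyncio.run(hedged_call(slow_primary, fast_backup, hedge_after_s=0.05)))
```

Because the duplicate is sent only after the hedge delay, the backup sees extra load only for the small fraction of requests that are already slow. The technique suits read-only or idempotent requests; duplicating a non-idempotent write could apply it twice.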

Load Balancing and Failover Strategies

Load balancing distributes incoming requests across multiple servers to prevent overload on any single component, which limits the impact of any one server slowing down. Failover mechanisms automatically switch to backup servers if the primary server becomes unavailable due to high latency or failure. This ensures continuous service delivery, even during periods of high demand or network issues.

An example is a cloud-based application using a load balancer to distribute requests among multiple virtual machines. If one VM experiences latency issues, the load balancer can quickly redirect requests to healthy VMs.
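The redirect-on-latency behavior described above can be sketched as a balancer that tracks a smoothed latency per backend and routes to the fastest healthy one. This is a hypothetical helper, not the algorithm of any particular load balancer; it uses an exponentially weighted moving average (EWMA) so a single slow response nudges the estimate instead of replacing it, and `mark_down`/`mark_up` stand in for health-check-driven failover.

```python
class LatencyAwareBalancer:
    """Hypothetical balancer: route to the healthy backend with the lowest EWMA latency."""

    def __init__(self, backends: list[str], alpha: float = 0.3):
        self.backends = list(backends)
        self.alpha = alpha  # weight of the newest sample (assumed value)
        self.healthy = set(backends)
        self.ewma_ms: dict[str, float] = {}  # only backends with at least one sample

    def record(self, backend: str, latency_ms: float) -> None:
        """Fold a new latency observation into the moving average."""
        previous = self.ewma_ms.get(backend)
        if previous is None:
            self.ewma_ms[backend] = latency_ms
        else:
            self.ewma_ms[backend] = self.alpha * latency_ms + (1 - self.alpha) * previous

    def mark_down(self, backend: str) -> None:
        """Failover: stop routing to a backend that failed a health check."""
        self.healthy.discard(backend)

    def mark_up(self, backend: str) -> None:
        self.healthy.add(backend)

    def choose(self) -> str:
        candidates = [b for b in self.backends if b in self.healthy]
        if not candidates:
            raise RuntimeError("no healthy backends available")
        # Unmeasured backends score 0.0 so they get tried; ties go to list order.
        return min(candidates, key=lambda b: self.ewma_ms.get(b, 0.0))


if __name__ == "__main__":
    lb = LatencyAwareBalancer(["vm-a", "vm-b", "vm-c"])
    for backend, ms in (("vm-a", 50.0), ("vm-b", 20.0), ("vm-c", 80.0)):
        lb.record(backend, ms)
    print(lb.choose())  # prints: vm-b
    lb.mark_down("vm-b")
    print(lb.choose())  # prints: vm-a
```

The smoothing factor `alpha` controls how quickly the balancer reacts to a latency spike versus how much it ignores one-off outliers; 0.3 is an arbitrary choice for the sketch.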

Table Illustrating Resilience Approaches

| Approach | Description | Example |
|---|---|---|
| Redundant Servers | Deploying multiple servers to handle the same workload. | A web application with multiple backend servers in different data centers. |
| Geographic Distribution | Distributing servers across different geographic locations. | A global e-commerce platform with servers in multiple countries. |
| Load Balancing | Distributing incoming requests across multiple servers. | A web server using a load balancer to distribute traffic to multiple backend servers. |
| Failover Mechanisms | Automatically switching to backup servers if the primary server fails or experiences high latency. | A database system with a standby server that automatically takes over if the primary server fails. |
| Caching Strategies | Storing frequently accessed data in a cache to reduce latency. | A web application using a CDN (Content Delivery Network) to cache static content closer to users. |

Illustrative Scenarios

Acknowledging that latency is inherently non-zero in real-world systems is crucial for robust architectural design. This section presents practical examples and methodologies to effectively account for and manage latency in system architectures. Avoiding the “latency is zero” fallacy prevents system failures and ensures predictable performance.

A System Architecture Avoiding the “Latency is Zero” Fallacy

Consider a distributed e-commerce platform. Instead of assuming instantaneous communication between the user interface, inventory management system, and payment gateway, the architecture incorporates explicit latency handling. The system employs asynchronous messaging for interactions, allowing components to operate independently and handle variable network conditions. Timeouts are implemented at each stage, preventing indefinite delays and ensuring the user experience remains responsive.

Error handling is also built in, so that network problems degrade gracefully instead of cascading through the system. The architecture explicitly models the latency of each component and communication channel. This produces realistic performance estimates and lets designers address latency bottlenecks before they reach users.
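Here is a minimal Python sketch of the checkout flow. It assumes `asyncio` coroutines stand in for asynchronous calls to the inventory service and the payment gateway; a production system might use a message broker instead. The service functions, the simulated delays, and the per-stage timeout values are all hypothetical. Each stage is wrapped in `asyncio.wait_for`, so a slow dependency produces an explicit status instead of an indefinite wait.

```python
import asyncio

# Hypothetical per-stage budgets in seconds; real values would come from
# the measured latency of each component.
INVENTORY_TIMEOUT_S = 0.2
PAYMENT_TIMEOUT_S = 0.5


async def check_inventory(sku, delay_s):
    """Stand-in for a call to the inventory service (simulated with a sleep)."""
    await asyncio.sleep(delay_s)
    return True


async def charge_payment(amount, delay_s):
    """Stand-in for a call to the payment gateway (simulated with a sleep)."""
    await asyncio.sleep(delay_s)
    return "txn-123"


async def checkout(sku, amount, inventory_delay_s=0.05, payment_delay_s=0.05):
    """Run the checkout stages, each with its own timeout and error handling."""
    try:
        in_stock = await asyncio.wait_for(
            check_inventory(sku, inventory_delay_s), timeout=INVENTORY_TIMEOUT_S)
    except asyncio.TimeoutError:
        return {"status": "retry_later", "stage": "inventory"}
    if not in_stock:
        return {"status": "out_of_stock"}
    try:
        txn = await asyncio.wait_for(
            charge_payment(amount, payment_delay_s), timeout=PAYMENT_TIMEOUT_S)
    except asyncio.TimeoutError:
        # Outcome unknown: the charge may or may not have gone through.
        return {"status": "pending", "stage": "payment"}
    return {"status": "ok", "txn": txn}


print(asyncio.run(checkout("sku-1", 20.0)))  # {'status': 'ok', 'txn': 'txn-123'}
print(asyncio.run(checkout("sku-1", 20.0, inventory_delay_s=1.0)))  # {'status': 'retry_later', 'stage': 'inventory'}
```

The sketch reports a payment timeout as `pending` rather than as a failure. The request may already have reached the gateway, so retrying blindly could charge the customer twice. A real system would reconcile with the gateway first.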

Designing for Variable Latency

Designing for variable latency means anticipating delays in each component and communication channel. The main steps are:

- Identifying Critical Paths: Determine which chains of dependent operations set the end-to-end response time, and which segments along them contribute the most latency. This analysis focuses mitigation efforts where they have the greatest impact.

- Employing Asynchronous Communication: Utilizing asynchronous communication patterns, such as message queues, decouples components, enabling them to operate independently and handle delays gracefully.

- Implementing Timeouts and Retries: Timeouts prevent indefinite waits for responses from other components. Retries can recover from temporary network issues or component failures. They should be bounded in number and spaced out with backoff so they do not overwhelm a service that is already struggling.

- Leveraging Caching Strategies: Caching frequently accessed data reduces latency by avoiding repeated retrieval from slower sources. Expiration policies (time-to-live, or TTL) keep cached data acceptably fresh, and eviction algorithms such as least-recently-used (LRU) keep the cache bounded in size.
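Two of the steps above, retries and caching, can be sketched in Python. The retry helper assumes the wrapped operation is idempotent and enforces its own timeout, surfacing it as `TimeoutError`. It spaces attempts with capped exponential backoff plus random jitter so that many clients do not retry in lockstep. The cache pairs a per-entry TTL with LRU eviction. All names and default values are illustrative assumptions.

```python
import random
import time
from collections import OrderedDict


def retry_with_backoff(op, attempts=4, base_delay_s=0.1, max_delay_s=2.0,
                       retry_on=(ConnectionError, TimeoutError), sleep=time.sleep):
    """Call `op()`, retrying transient failures with capped exponential backoff.

    Assumes `op` is idempotent and applies its own timeout.
    """
    for attempt in range(attempts):
        try:
            return op()
        except retry_on:
            if attempt == attempts - 1:
                raise  # out of attempts: surface the last error
            cap = min(max_delay_s, base_delay_s * 2 ** attempt)
            sleep(random.uniform(0, cap))  # "full jitter": random delay up to the cap


class TTLCache:
    """Small cache with per-entry expiry (TTL) and LRU eviction."""

    def __init__(self, max_entries=128, ttl_s=30.0, clock=time.monotonic):
        self.max_entries = max_entries
        self.ttl_s = ttl_s
        self.clock = clock
        self._data = OrderedDict()  # key -> (expires_at, value), oldest use first

    def get(self, key, loader):
        """Return a fresh cached value, or call `loader(key)` and cache the result."""
        now = self.clock()
        entry = self._data.get(key)
        if entry is not None and entry[0] > now:
            self._data.move_to_end(key)  # mark as most recently used
            return entry[1]
        value = loader(key)  # slow path: fetch from the slower source
        self._data[key] = (now + self.ttl_s, value)
        self._data.move_to_end(key)
        if len(self._data) > self.max_entries:
            self._data.popitem(last=False)  # evict the least recently used entry
        return value
```

Both helpers accept injectable `sleep` and `clock` functions, so they can be tested without real waiting. In use, `cache.get(product_id, fetch_product)` would call a hypothetical `fetch_product` only on a miss or after the entry has expired.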

Assessing and Addressing Latency Bottlenecks

Assessing potential latency bottlenecks involves a systematic approach:

- Profiling System Performance: Use profilers and tracing tools to measure how long each part of the system takes, so that bottlenecks can be identified.

- Analyzing Network Metrics: Monitoring network latency and bandwidth usage helps identify network-related issues that might be contributing to system slowdowns.

- Identifying and Isolating Components: Pinpointing the specific components or modules responsible for high latency makes targeted optimization possible.

- Optimizing Component Performance: Optimizing the performance of individual components, such as database queries or API calls, is essential to improve overall latency.
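Profiling can start as simply as timing named sections of code and comparing their latency percentiles. This Python sketch is an illustration built on assumptions and is no substitute for a real profiler or distributed tracing. It records wall-clock durations with `time.perf_counter` under hypothetical component names and reports nearest-rank p50, p95, and p99 values, slowest tail first. It uses percentiles because averages tend to hide the occasional slow request.

```python
import math
import time
from collections import defaultdict
from contextlib import contextmanager

# Component name -> list of observed latencies in milliseconds.
samples = defaultdict(list)


@contextmanager
def timed(component):
    """Record how long the enclosed block takes under `component`."""
    start = time.perf_counter()
    try:
        yield
    finally:
        samples[component].append((time.perf_counter() - start) * 1000.0)


def percentile(values, p):
    """Nearest-rank percentile (0 < p <= 100) of a non-empty list."""
    ordered = sorted(values)
    rank = max(1, math.ceil(p * len(ordered) / 100))
    return ordered[rank - 1]


def report():
    """Per-component (name, p50, p95, p99) in ms, slowest p99 first."""
    rows = [(name, percentile(v, 50), percentile(v, 95), percentile(v, 99))
            for name, v in samples.items()]
    return sorted(rows, key=lambda row: row[3], reverse=True)


for _ in range(20):
    with timed("db_query"):
        time.sleep(0.002)  # stand-in for a real database call
    with timed("render"):
        pass  # stand-in for fast in-process work

for row in report():
    print(row)
```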

Comparing Latency Characteristics of System Architectures

The table below illustrates the latency characteristics of various system architectures, highlighting the trade-offs between complexity and performance. This comparison emphasizes the importance of understanding the latency implications of design choices.

| Architecture | Latency Characteristics | Complexity |
|---|---|---|
| Monolithic | Low latency for in-process calls, though calls to external services still incur network latency; harder to scale individual parts independently. | Low |
| Microservices | Potentially higher latency due to inter-service communication, but scalable and flexible. | High |
| Event-driven | Variable latency depending on message processing time; highly scalable. | High |
| Serverless | Highly variable latency depending on cloud provider infrastructure, cold starts, and function execution time; highly scalable. | Very High |
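The extra latency that microservices pay for inter-service communication can be estimated with simple arithmetic. This Python sketch uses hypothetical per-service figures, each a network round trip plus processing time. Calls made one after another add up, while independent calls issued in parallel cost only as much as the slowest one. The last function assumes each call independently has a 1% chance of landing in the slow tail. It shows how quickly that risk compounds as a request fans out to more services.

```python
def sequential_latency_ms(hops):
    """End-to-end latency when each call waits for the previous one (sum)."""
    return sum(network_ms + work_ms for network_ms, work_ms in hops)


def parallel_latency_ms(hops):
    """End-to-end latency when independent calls are issued concurrently (max)."""
    return max(network_ms + work_ms for network_ms, work_ms in hops)


def prob_any_slow(n_calls, p_slow=0.01):
    """Chance that at least one of n independent calls lands in the slow tail."""
    return 1 - (1 - p_slow) ** n_calls


# Hypothetical figures: (network round trip ms, processing ms) per service.
hops = [(2.0, 5.0), (2.0, 8.0), (2.0, 3.0)]
print(sequential_latency_ms(hops))   # 22.0
print(parallel_latency_ms(hops))     # 10.0
print(round(prob_any_slow(100), 3))  # 0.634
```

In a monolith the network term for these calls would be close to zero, since they stay in-process. Under the assumptions above, a request that touches 100 services hits at least one slow call about 63% of the time. This is why architectures with heavy fan-out tend to show worse tail latency than their per-service numbers suggest.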

Closing Summary

In conclusion, effectively mitigating the ‘latency is zero’ fallacy requires a multifaceted approach. Measuring latency carefully, understanding how it manifests, and designing for realistic latency values let us build systems that perform well across a wide range of conditions. This guide provides a practical roadmap to building resilient, high-performing systems whose designs reflect real-world performance characteristics.

Query Resolution

What are some common misconceptions about zero latency?

Many assume that modern hardware and network infrastructure have eliminated latency. In practice, processing, data transmission, storage access, and other components all add delay. Even an ideal network cannot send signals faster than light, so distance alone puts a floor under round-trip time. Ignoring these delays often leads to unexpected performance problems.

How can I accurately measure latency in my system?

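Measure latency at several levels: end-to-end (what the user experiences), network, and processing time. Comparing these metrics shows where delays originate and exposes any hidden assumption of zero latency. Track percentiles such as p95 and p99 rather than averages alone, because tail latency is often what users notice. Profilers, tracing tools, and network monitoring are essential for collecting and analyzing these measurements.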

What are some examples of architectures that account for latency?

Systems that use caching, efficient data transfer protocols, and load balancing manage and compensate for latency explicitly instead of assuming it away.

How can I build redundancy into my system to compensate for latency spikes?

Implementing load balancing, failover mechanisms, and backup systems can enhance system resilience and mitigate the negative impact of latency fluctuations.