Database migration, a critical undertaking for any organization evolving its data infrastructure, often presents a significant challenge: minimizing downtime. The process, involving the transfer of data from one database system to another, or even within the same system, can disrupt business operations if not executed meticulously. This guide delves into the core strategies, techniques, and best practices necessary to mitigate downtime, ensuring a smooth transition while preserving data integrity and application functionality.

This exploration encompasses various aspects of database migration, from meticulous planning and preparation to post-migration optimization. We will examine different migration methodologies, including in-place and blue/green deployments, and delve into the crucial role of data backup and recovery. Furthermore, the discussion extends to zero-downtime techniques, comprehensive testing and validation procedures, robust monitoring strategies, and the importance of effective communication and coordination throughout the entire process.

Automation and scripting, alongside schema migration strategies and handling application downtime, are also vital components of this guide.

Planning and Preparation for Database Migration

Database migration, a critical process for modern data management, necessitates meticulous planning and preparation to minimize downtime and ensure data integrity. A well-defined strategy encompasses comprehensive pre-migration checks, a clear understanding of migration approaches, and robust rollback procedures. This proactive approach is crucial for a successful transition, mitigating risks and preserving business continuity.

Pre-Migration Checklist

A detailed pre-migration checklist acts as a roadmap, guiding the process and ensuring all necessary steps are completed before the actual migration. The checklist should cover various aspects, from environment readiness to data validation, to minimize potential disruptions.

- Environment Assessment: Evaluate the source and target database environments. This involves verifying hardware specifications (CPU, RAM, storage), network connectivity, and software compatibility. Document the current configuration and identify any potential bottlenecks.

- Data Backup: Create a full backup of the source database. This is the most crucial step in the preparation phase. This backup serves as the foundation for the rollback plan and provides a safety net in case of unexpected issues. Verify the integrity of the backup by restoring it to a test environment.

- Compatibility Checks: Confirm compatibility between the source and target database systems, including data types, character sets, and supported features. Address any incompatibilities proactively, such as data type conversions or schema modifications.

- Schema and Code Review: Review the database schema and application code to identify any dependencies or customizations that might require adaptation during the migration. This includes stored procedures, triggers, views, and user-defined functions.

- Performance Testing: Conduct performance tests in a pre-production environment. Simulate production workloads to assess the performance of the target database after migration. Identify and address any performance degradation issues before the migration.

- Data Validation: Implement data validation steps to ensure data integrity throughout the migration process. This involves comparing data between the source and target databases.

  - Data Profiling: Analyze the data in the source database to understand its characteristics, including data types, value ranges, and data quality. This helps identify potential data quality issues and facilitates the development of validation rules.

  - Data Comparison: Compare data between the source and target databases using various techniques, such as checksums, row counts, and data samples. Implement automated data comparison tools to ensure accuracy and efficiency.

  - Data Transformation Validation: If data transformation is required during the migration, validate the transformations by testing them in a pre-production environment. Verify that the transformed data meets the required standards and business rules.

- Security Assessment: Review and configure security settings for the target database. This includes user authentication, authorization, and data encryption. Ensure that security measures are in place to protect sensitive data during and after the migration.

- Documentation: Document all migration steps, including the checklist, migration strategy, rollback plan, and communication plan. This documentation serves as a reference for the migration team and facilitates troubleshooting and post-migration analysis.

- Training and Communication: Train the migration team on the migration procedures and tools. Communicate the migration plan to stakeholders, including application developers, database administrators, and business users.
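The row-count and checksum comparison mentioned in the checklist can be sketched as follows. This is a minimal illustration rather than a production tool: it uses Python's built-in `sqlite3` module as a stand-in for both databases, and the helper names `table_fingerprint` and `tables_match` are hypothetical. It also assumes both drivers return values in the same Python types; in a heterogeneous migration, values would need to be normalized before hashing.

```python
import hashlib
import sqlite3


def table_fingerprint(conn, table, key_column):
    """Return (row_count, sha256_hex) for a table, reading rows in key order."""
    digest = hashlib.sha256()
    count = 0
    # Identifiers cannot be bound as SQL parameters, so table and column
    # names must come from a trusted list, never from user input.
    query = f'SELECT * FROM "{table}" ORDER BY "{key_column}"'
    for row in conn.execute(query):
        digest.update(repr(row).encode("utf-8"))
        count += 1
    return count, digest.hexdigest()


def tables_match(source, target, table, key_column):
    """True when both connections hold identical rows for the given table."""
    return table_fingerprint(source, table, key_column) == table_fingerprint(
        target, table, key_column
    )
```

Ordering by the key column matters here: without a stable order, two identical tables could produce different hashes.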

Migration Strategies

Different migration strategies offer varying levels of downtime and complexity. The choice of strategy depends on factors such as the size of the database, the criticality of the application, and the acceptable downtime window. Understanding the advantages and disadvantages of each strategy is crucial for selecting the most appropriate approach.

- In-Place Migration: This strategy involves upgrading the database in its existing environment.

  - Pros: Simplest approach, minimal infrastructure changes, and potentially lower cost.

  - Cons: Highest downtime, higher risk of failure, and difficult to roll back.

- Blue/Green Deployment: This strategy involves creating a parallel environment (green) identical to the existing production environment (blue). Data is replicated to the green environment, and after validation, traffic is switched over.

  - Pros: Minimal downtime, easier rollback, and allows for testing in a production-like environment.

  - Cons: Requires double the infrastructure, more complex to manage, and can be more expensive.

- Offline Migration: The database is taken offline, backed up, and restored to the new environment.

  - Pros: Relatively straightforward, less complex than blue/green.

  - Cons: Significant downtime, and the source database is unavailable during the migration.

- Trickle Migration: Data is migrated in smaller batches, allowing the application to gradually switch to the new database.

  - Pros: Reduced downtime compared to offline migration.

  - Cons: Requires careful coordination and may involve data synchronization challenges.
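To make the trickle approach concrete, here is a rough sketch of a batched copy loop. It uses `sqlite3` for both ends purely for illustration; the `customers` table, its `id` and `name` columns, and the function name `copy_in_batches` are assumptions, and the loop assumes positive integer keys.

```python
import sqlite3


def copy_in_batches(source, target, batch_size=1000):
    """Copy rows of the (hypothetical) customers table in key order, batch by batch."""
    last_id = 0  # assumes positive integer primary keys
    copied = 0
    while True:
        rows = source.execute(
            "SELECT id, name FROM customers WHERE id > ? ORDER BY id LIMIT ?",
            (last_id, batch_size),
        ).fetchall()
        if not rows:
            break
        # One transaction per batch: a failure loses at most one batch of work.
        with target:
            target.executemany(
                "INSERT OR REPLACE INTO customers (id, name) VALUES (?, ?)", rows
            )
        last_id = rows[-1][0]
        copied += len(rows)
    return copied
```

Paging by key (`id > ?`) rather than by offset keeps each batch cheap on large tables, and the upsert makes a rerun safe. Rows that change on the source after their batch has been copied are not picked up by this loop, which is the synchronization challenge noted above.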

Rollback Plan

A comprehensive rollback plan is essential to minimize the impact of a failed migration. This plan outlines the steps to revert to the pre-migration state, ensuring business continuity. The plan should be tested and documented.

- Backup Verification: Verify the integrity of the pre-migration backup. This is the foundation of the rollback process. Restore the backup to a test environment to confirm its usability.

- Data Synchronization (If Applicable): If the migration involves data synchronization (e.g., trickle migration), identify the data changes that occurred during the migration and determine how to revert them.

- Application Rollback: Roll back the application to the previous version that is compatible with the source database.

- Database Rollback: Restore the pre-migration database backup to the source database environment. This returns the database to its original state.

- Testing: Test the rollback process in a non-production environment before initiating the rollback in the production environment.

- Communication: Communicate the rollback plan and progress to all stakeholders. Keep stakeholders informed throughout the rollback process.

- Documentation: Document the rollback steps and any issues encountered during the rollback process. This documentation is valuable for future migrations.

Resource Requirements Comparison

Different migration methods have varying resource requirements. Understanding these requirements helps in planning and provisioning the necessary infrastructure. The table below provides a comparative overview of resource needs.

| Migration Method | CPU | RAM | Storage | Downtime |
|---|---|---|---|---|
| In-Place Migration | High (during upgrade/conversion) | Moderate (depending on database size) | Moderate (for temporary storage) | High |
| Blue/Green Deployment | High (for both environments) | High (for both environments) | High (for both environments) | Low |
| Offline Migration | Moderate (during backup/restore) | Moderate (during backup/restore) | High (for backup and target database) | High |
| Trickle Migration | Moderate (during data transfer) | Moderate (during data transfer) | Moderate (for staging and temporary storage) | Moderate |

Choosing the Right Migration Method

Selecting the optimal database migration method is a critical decision that significantly impacts downtime, data integrity, and overall project success. This choice demands a careful evaluation of various factors, ranging from the tolerance for service interruption to the volume of data involved. A well-informed selection process, supported by rigorous testing and a clear understanding of the trade-offs, is essential for minimizing disruption and ensuring a smooth transition.

Factors Influencing Migration Approach Selection

Several key factors must be considered when determining the most appropriate database migration strategy. These factors directly influence the choice between online and offline methods, as well as the selection of specific tools and techniques.

- Downtime Tolerance: The acceptable duration of service interruption is a primary driver. Systems with strict uptime requirements necessitate online migration strategies to minimize downtime. Conversely, applications with less stringent uptime demands may accommodate offline methods, which often offer greater simplicity and potentially faster data transfer rates. The criticality of the database to business operations dictates the permissible downtime window. For example, a financial transaction system will have a much lower tolerance for downtime than a reporting database.

- Data Size: The volume of data significantly impacts the migration time and the feasibility of different approaches. Large datasets typically favor methods optimized for parallel processing and efficient data transfer. Smaller datasets may be suitable for simpler, potentially slower, migration techniques. As data size increases, the complexity of the migration process also increases, necessitating more robust tools and planning. Consider the impact of network bandwidth and storage I/O on the migration timeline.

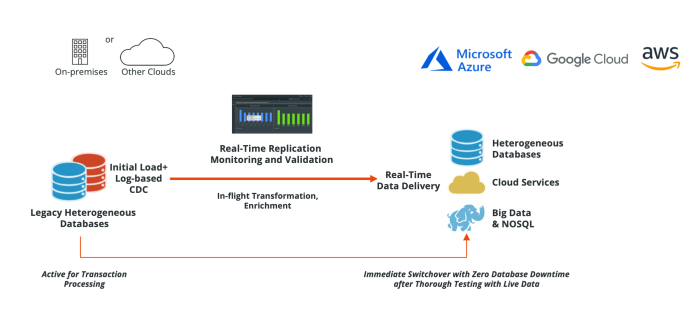

- Source and Target Database Compatibility: The compatibility between the source and target database systems is a crucial consideration. Migrations between different database vendors (e.g., Oracle to PostgreSQL) introduce complexities related to data type mapping, SQL dialect differences, and feature parity. Homogeneous migrations (e.g., Oracle to Oracle) generally simplify the process but may still require schema changes and data transformation. Evaluate the supported features and limitations of the target database in relation to the source database’s functionality.

- Application Dependencies: The dependencies of applications on the database, including connection strings, stored procedures, and application code, must be carefully analyzed. Migrations may necessitate application code modifications to accommodate changes in database structure or functionality. Identify and address potential compatibility issues early in the planning phase to avoid disruptions. Consider the impact of the migration on application performance and scalability.

- Budget and Resources: The available budget and the resources (personnel, tools, infrastructure) allocated to the migration project influence the choice of migration method. Complex migrations, such as those involving heterogeneous databases or significant data transformation, may require specialized expertise and more sophisticated tools, which can increase costs. The availability of skilled database administrators and developers is essential for successful execution.

- Security and Compliance Requirements: Security and compliance requirements, such as data encryption, access control, and audit logging, must be integrated into the migration plan. Ensure that the chosen migration method maintains data security and complies with relevant regulations. Consider the security implications of data transfer, storage, and access during and after the migration.
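The effect of data size and network bandwidth on the timeline can be estimated with simple arithmetic. The sketch below is an approximation only: the function name and the `efficiency` factor (the assumed share of nominal bandwidth that is actually usable) are illustrative, and it ignores storage I/O limits, compression, and index rebuilds.

```python
def estimate_transfer_hours(data_gb, bandwidth_mbps, efficiency=0.7):
    """Estimate hours needed to move data_gb gigabytes over a bandwidth_mbps link.

    efficiency is an assumed fraction of nominal bandwidth that is usable
    after protocol overhead and contention.
    """
    if data_gb < 0 or bandwidth_mbps <= 0 or not 0 < efficiency <= 1:
        raise ValueError("invalid size, bandwidth, or efficiency")
    data_megabits = data_gb * 8 * 1000  # decimal units: 1 GB = 8,000 megabits
    seconds = data_megabits / (bandwidth_mbps * efficiency)
    return seconds / 3600
```

For example, under these assumptions 1,000 GB over a 1,000 Mbps link at 70% efficiency comes to a little over three hours, before any time spent on validation.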

Comparison of Database Migration Tools and Their Downtime Impact

Various database migration tools are available, each offering different capabilities and impacting downtime. The selection of a tool depends on factors like the database systems involved, the data volume, and the acceptable downtime.

Here’s a comparison of some tools, emphasizing their impact on downtime:

| Tool Type | Tool Examples | Downtime Impact | Key Features | Considerations |
|---|---|---|---|---|
| Native Tools | Oracle Data Pump, MySQL Workbench, PostgreSQL pg_dump/pg_restore | Offline: Significant downtime, Online: Potentially minimal downtime (e.g., using Oracle GoldenGate or MySQL Replication) | Cost-effective, often included with the database, good for homogeneous migrations, efficient for large datasets. | Requires in-depth knowledge of the database system, can be complex to configure for online migrations, may require manual scripting. |
| Third-Party Tools | AWS Database Migration Service (DMS), IBM InfoSphere Data Replication, Informatica PowerCenter | Online: Minimal downtime (continuous replication), Offline: Moderate downtime (bulk data loading) | Support for heterogeneous migrations, features like schema conversion and data transformation, automation capabilities, often provide monitoring and alerting. | Can be more expensive than native tools, may require specialized training, performance can vary depending on the tool and the migration scenario. |
| Scripting and Custom Tools | Custom scripts (e.g., using Python with database connectors) | Highly variable, depends on implementation. Can be online or offline. | Highly flexible, allows for precise control over the migration process, suitable for complex data transformations and custom requirements. | Requires significant development effort, can be difficult to maintain, requires deep understanding of both source and target databases. |

Trade-offs Between Online and Offline Migration Methods

Choosing between online and offline migration methods involves trade-offs between downtime, complexity, and data integrity. Understanding these trade-offs is crucial for making an informed decision.

- Offline Migration: This method involves taking the source database offline, performing the migration, and then bringing the target database online.

  - Advantages: Simpler to implement, potentially faster data transfer rates (especially for large datasets), and can be more cost-effective.

  - Disadvantages: Requires significant downtime, risks data consistency issues if the source still accepts writes during the migration, and carries a higher risk of data loss if the migration fails.

  - Use Cases: Suitable for non-critical applications, development and testing environments, or situations where downtime is acceptable.

- Online Migration: This method involves migrating data while the source database remains online, minimizing downtime.

  - Advantages: Minimizes or eliminates downtime, reduces the impact on business operations, and keeps the target consistent with the source through continuous synchronization.

  - Disadvantages: More complex to implement, can be more expensive due to the need for specialized tools and expertise, and may impact source database performance during the migration.

  - Use Cases: Essential for critical applications with strict uptime requirements, where even short periods of downtime are unacceptable.

Process of Conducting a Proof-of-Concept (POC) Migration

A proof-of-concept (POC) migration is a crucial step in validating the chosen migration method and mitigating risks. It involves a small-scale, controlled migration that allows testing the process, identifying potential issues, and refining the migration plan before the full-scale migration.

- Define Scope and Objectives: Clearly define the scope of the POC, including the data to be migrated, the target database, and the success criteria. The objectives should include verifying data integrity, assessing performance, and estimating the downtime.

- Select Representative Data: Choose a representative subset of the data to be migrated. This subset should reflect the characteristics of the entire dataset, including data types, volumes, and complexities.

- Choose Migration Tools and Techniques: Select the migration tools and techniques to be used based on the requirements and constraints. Document the configuration and settings used.

- Execute the POC: Perform the migration according to the chosen method. Monitor the process closely, including data transfer rates, resource utilization, and any errors.

- Validate Data Integrity: Verify the data integrity in the target database. Compare the data in the source and target databases to ensure that all data has been migrated correctly.

- Measure Performance: Measure the performance of the migration process, including the time taken, the data transfer rate, and the impact on the source database.

- Document Findings and Refine Plan: Document all findings, including any issues encountered, the time taken, and the performance metrics. Refine the migration plan based on the results of the POC. This includes adjusting the tools, techniques, and the timeline for the full-scale migration.
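One way to turn POC measurements into a first estimate for the full migration is a linear extrapolation, sketched below. The function name and the `safety_factor` parameter are illustrative assumptions, and linear scaling is itself an assumption: index maintenance, large objects, and contention on the source can make the full run slower than the POC suggests.

```python
def extrapolate_seconds(poc_rows, poc_seconds, total_rows, safety_factor=1.5):
    """Scale a measured POC duration up to the full dataset.

    safety_factor is an assumed padding multiplier (>= 1) for effects
    the POC did not exercise.
    """
    if poc_rows <= 0 or poc_seconds <= 0 or total_rows < 0 or safety_factor < 1:
        raise ValueError("invalid POC measurements or safety factor")
    rows_per_second = poc_rows / poc_seconds
    return total_rows / rows_per_second * safety_factor
```

As an illustration, a POC that moved 1,000,000 rows in 120 seconds suggests about 100 minutes for 50,000,000 rows with no padding, or about 150 minutes with the default factor.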

Data Backup and Recovery Strategies

A robust data backup and recovery strategy is critical for minimizing data loss and downtime during a database migration. This strategy should encompass comprehensive backup procedures, rigorous verification processes, and well-defined restoration protocols. The selection of appropriate backup methods significantly influences the duration of the migration process, and understanding their characteristics is essential for informed decision-making.

Designing a Comprehensive Backup Strategy

A comprehensive backup strategy necessitates a multi-faceted approach, incorporating various backup types, storage locations, and testing procedures. The primary objective is to ensure data integrity and availability throughout the migration lifecycle.

- Backup Types: Implement a combination of full, incremental, and differential backups. A full backup creates a complete copy of the database, serving as a baseline. Incremental backups capture only the changes made since the last backup (full or incremental), resulting in faster backup times but longer restoration times. Differential backups capture changes since the last full backup, providing a balance between backup and restoration speed.

- Backup Frequency: The frequency of backups should be determined by the Recovery Point Objective (RPO) and Recovery Time Objective (RTO). RPO defines the maximum acceptable data loss, while RTO defines the maximum acceptable downtime. For example, a critical system with an RPO of 1 hour might require hourly incremental backups and daily full backups.

- Backup Storage: Store backups in multiple locations, including on-site and off-site storage, to protect against hardware failures, natural disasters, and other unforeseen events. Consider using cloud-based storage for off-site backups due to its scalability and cost-effectiveness.

- Backup Automation: Automate the backup process using database management system (DBMS) tools or third-party backup solutions. Automation reduces the risk of human error and ensures consistent backup schedules.

- Backup Encryption: Encrypt backups to protect sensitive data from unauthorized access. Implement strong encryption algorithms and securely manage encryption keys.
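The relationship between backup frequency and RPO can be checked mechanically. The following sketch treats the largest gap between consecutive backups as the worst-case data loss; the function names are hypothetical, and the model ignores the time a backup takes to complete.

```python
from datetime import datetime, timedelta


def worst_case_data_loss(backup_times):
    """Return the largest gap between consecutive backups."""
    if len(backup_times) < 2:
        raise ValueError("need at least two backups to measure a gap")
    ordered = sorted(backup_times)
    return max(later - earlier for earlier, later in zip(ordered, ordered[1:]))


def meets_rpo(backup_times, rpo):
    """True when no gap between backups exceeds the Recovery Point Objective."""
    return worst_case_data_loss(backup_times) <= rpo
```

With hourly backups, `meets_rpo(times, timedelta(hours=1))` holds; a single skipped backup in the schedule is enough to break it.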

Verifying Backup Integrity

Verifying the integrity of backups is a crucial step before starting the migration process. This ensures that the backups are usable and that data can be restored successfully.

- Testing Backup Restoration: Regularly test the restoration process to validate the integrity of backups. This involves restoring backups to a test environment and verifying data consistency.

- Checksum Verification: Use checksums or hash values to verify the integrity of backup files. Compare the checksum of the backup file with the checksum calculated during the backup process. Any discrepancy indicates a corrupted backup.

- Database Consistency Checks: Run database consistency checks (e.g., `DBCC CHECKDB` in SQL Server) on the restored database to identify any logical or physical inconsistencies.

- Data Validation: After restoring the database, validate the data by comparing it with a known good copy or by running data validation scripts.

- Documentation: Document the verification process, including the steps taken, the tools used, and the results obtained.
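Checksum verification of a backup file can be sketched with Python's standard `hashlib` module. The function names here are illustrative, and the sketch assumes the expected SHA-256 value was recorded when the backup was taken.

```python
import hashlib


def sha256_of_file(path, chunk_size=1024 * 1024):
    """Hash a file in chunks so large backups never need to fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def backup_is_intact(path, expected_hex):
    """Compare the current file hash with the value recorded at backup time."""
    return sha256_of_file(path) == expected_hex.strip().lower()
```

A matching checksum only shows the file has not changed since it was written; it does not prove the backup restores correctly, which is why the restoration test above remains necessary.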

Creating a Database Restoration Procedure

A well-defined restoration procedure is essential for minimizing downtime in case of issues during the migration. This procedure should outline the steps required to restore the database from a backup.

- Identify the Source of the Issue: Before initiating the restoration process, identify the root cause of the issue. This will help in selecting the appropriate backup and restoration method.

- Select the Appropriate Backup: Choose the backup that minimizes data loss and downtime. This might involve restoring the most recent full backup and applying subsequent incremental or differential backups.

- Prepare the Target Environment: Ensure that the target environment (e.g., a new database server) is properly configured and ready to receive the restored database. This includes installing the necessary software and configuring network settings.

- Initiate the Restoration Process: Use the DBMS’s built-in restoration tools or third-party solutions to restore the database from the selected backup.

- Verify Data Integrity: After the restoration is complete, verify the integrity of the restored database by performing consistency checks, data validation, and application testing.

- Failover Procedure: Develop a failover procedure to switch to the restored database quickly. This might involve updating DNS records or redirecting application traffic to the new database server.

- Document the Restoration Process: Document each step of the restoration process, including the commands used, the time taken, and any issues encountered. This documentation will be invaluable for future restoration efforts.

Impact of Backup Methods on Migration Downtime

The choice of backup method significantly impacts the duration of the migration downtime. Understanding the characteristics of each method is essential for planning the migration process.

- Full Backup:

  - Advantages: Simple to restore, provides a complete copy of the database.

  - Disadvantages: Longest backup time, potentially significant downtime during restoration if a large database is involved.

  - Impact on Downtime: The restoration time is directly proportional to the size of the database. For example, restoring a 1 TB database might take several hours, depending on hardware resources.

- Incremental Backup:

  - Advantages: Fastest backup time, minimizes storage space.

  - Disadvantages: Requires restoring the full backup and all subsequent incremental backups, leading to longer restoration times.

  - Impact on Downtime: Restoration time is dependent on the number of incremental backups and the time taken to apply each one. If there are many incremental backups, the restoration time can be significant.

- Differential Backup:

  - Advantages: Faster restoration than incremental backups (restores the full backup and one differential backup).

  - Disadvantages: Backup time increases over time as the amount of changed data grows.

  - Impact on Downtime: Restoration time is dependent on the size of the full backup and the differential backup. This approach offers a balance between backup and restoration speed.

Minimizing Downtime with Zero-Downtime Techniques

Minimizing downtime is a critical objective during database migrations, directly impacting business continuity and user experience. Zero-downtime migration techniques represent the pinnacle of this effort, enabling the seamless transfer of data and operations with minimal or no interruption. This approach is particularly vital for applications requiring constant availability and stringent service level agreements (SLAs).

Zero-Downtime Migrations Concept

Zero-downtime migrations are strategies designed to migrate a database from one environment to another without causing any service interruption to the end-users. This is achieved by employing techniques that allow both the old and new databases to coexist, with the application gradually shifting traffic from the old database to the new one. The core principle is to ensure that the application remains available throughout the migration process, preserving data integrity and user access.

These methods are often complex and require careful planning and execution.

Database Technologies Supporting Zero-Downtime Migrations

Several database technologies and platforms provide built-in features or support third-party tools to facilitate zero-downtime migrations. The specific capabilities and approaches vary based on the database system.

- PostgreSQL: PostgreSQL supports logical replication, which enables the creation of a replica database and the continuous synchronization of data changes from the source database to the replica. This feature allows for migrating to a new PostgreSQL instance or even a different cloud provider with minimal downtime. Tools like pglogical and Slony are commonly used for more advanced replication scenarios.

- MySQL: MySQL offers features such as binary log replication, which allows data changes to be streamed from a primary database to a secondary database. This is the basis for many zero-downtime migration strategies. Percona XtraDB Cluster provides a synchronous multi-master replication solution that can minimize downtime during upgrades or migrations.

- Oracle Database: Oracle supports features such as Oracle GoldenGate, a comprehensive data integration product. GoldenGate enables real-time data replication and transformation across various platforms. It is commonly used for zero-downtime migrations, upgrades, and data integration projects.

- Microsoft SQL Server: SQL Server provides features like Always On Availability Groups (AGs), which support high availability and disaster recovery scenarios. Using AGs, it is possible to perform database migrations with minimal downtime by failing over to a secondary replica.

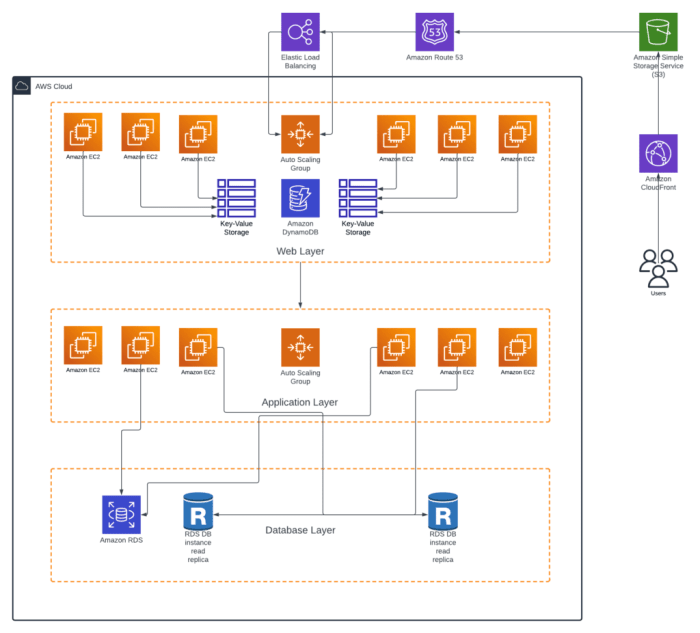

- Cloud-Based Database Services: Cloud providers like AWS, Google Cloud, and Azure offer managed database services (e.g., Amazon RDS, Google Cloud SQL, Azure SQL Database) that often incorporate features designed to simplify zero-downtime migrations, such as built-in replication, automated backups, and point-in-time recovery.

Zero-Downtime Migration Techniques Examples

Several techniques are commonly employed to achieve zero-downtime migrations. Each method has its advantages and disadvantages, and the best choice depends on the specific database technology, application architecture, and migration requirements.

- Blue/Green Deployment: This technique involves creating two identical environments: the “blue” (current production) and the “green” (new environment). Data is synchronized from the blue to the green environment. Once the green environment is ready, the application traffic is switched from the blue to the green environment.

- Logical Replication: This method involves replicating data changes from the source database to the target database. Applications can be updated to point to the new database while the old and new databases remain in sync. This method is suitable for migrations between different database versions or platforms.

- Change Data Capture (CDC): CDC captures and tracks changes made to data in a database. These changes are then applied to the target database. This approach is particularly useful for incremental data migration and keeping the target database synchronized with the source database.

- Database Upgrade/In-Place Migration: Some database systems offer in-place upgrade capabilities. This can involve upgrading the database software while the database is online. Where an online upgrade is supported, this method can be less disruptive than migrating to a new database instance; where it is not, the downtime and rollback risks of in-place migration noted earlier apply.

- Dual-Write Approach: In this approach, the application writes to both the old and new databases simultaneously. Once the data is fully synchronized, the application is updated to read from and write to the new database only.
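As a rough illustration of the dual-write approach, the sketch below writes each change to both databases while treating the old one as the source of truth. It uses `sqlite3` for both sides, and the `DualWriter` class, the `users` table, and the `failed_new_writes` list are all assumptions made for the example.

```python
import sqlite3


class DualWriter:
    """Write to the old database first, then mirror the write to the new one."""

    UPSERT = "INSERT OR REPLACE INTO users (id, name) VALUES (?, ?)"

    def __init__(self, old, new):
        self.old = old
        self.new = new
        self.failed_new_writes = []  # writes to replay during reconciliation

    def save_user(self, user_id, name):
        # The old database stays authoritative: if this write fails, the
        # exception propagates and the new database is never touched.
        with self.old:
            self.old.execute(self.UPSERT, (user_id, name))
        try:
            with self.new:
                self.new.execute(self.UPSERT, (user_id, name))
        except sqlite3.Error:
            # Do not fail the request; record the write so it can be replayed.
            self.failed_new_writes.append((user_id, name))
```

The two writes are not atomic across databases, so a reconciliation step (for example, a comparison of both sides) is still needed before the application switches to the new database only.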

Blue/Green Deployment Strategy Detailed Diagram

The blue/green deployment strategy is a popular zero-downtime migration technique. The diagram below illustrates the steps involved.

    +---------------------+      +---------------------+      +---------------------+
    | Current Database    |----->| Data Sync           |----->| New Database        |
    | (Blue)              |      | (Replication/CDC)   |      | (Green)             |
    +---------------------+      +---------------------+      +---------------------+
               |                                                         |
               +---------- Traffic Routing (Load Balancer/DNS) ----------+
                                          |
    +---------------------+      +---------------------+      +---------------------+
    | Users/Clients       |----->| Application         |----->| Application         |
    |                     |      | (Initially Blue)    |      | (Transition Phase)  |
    +---------------------+      +---------------------+      +---------------------+
                                                                         |
                                                                         v
                                                                  Traffic Switch
                                                                  (Load Balancer)
                                                                         |
                                                                         v
                                                              +---------------------+
                                                              | Application         |
                                                              | (Green)             |
                                                              +---------------------+

Description of the Diagram:

The diagram illustrates the blue/green deployment strategy for a database migration. The process starts with the existing database (Blue), which serves the current production traffic.

1. Data Synchronization: Data from the Blue database is continuously synchronized to the new Green database using replication or Change Data Capture (CDC) mechanisms.

2. Traffic Routing: Initially, all user traffic is directed to the application, which in turn accesses the Blue database.

3. Transition Phase: During the transition, the application’s traffic routing is gradually shifted from the Blue to the Green database. This is usually managed by a load balancer or DNS configuration changes.

4. Traffic Switch: After thorough testing and validation, all traffic is fully switched to the Green database, making it the new production environment.

5. Post-Migration: The Blue environment can then be decommissioned, or it may be retained as a rollback option.
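The gradual shift in the transition phase can be sketched as a deterministic routing decision. This is only the routing rule, under the assumption that each request carries a stable key such as a user ID; the `route` function is hypothetical and does not represent any particular load balancer's API.

```python
import hashlib


def route(request_key, green_percent):
    """Return "green" or "blue" for a request, given the share sent to green."""
    if not 0 <= green_percent <= 100:
        raise ValueError("green_percent must be between 0 and 100")
    # Hash the key into a stable bucket from 0 to 99 so the same key always
    # lands in the same bucket, regardless of process or restart.
    digest = hashlib.sha256(request_key.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:4], "big") % 100
    return "green" if bucket < green_percent else "blue"
```

Because the bucket is fixed per key, raising `green_percent` only ever moves keys from blue to green, never back. Note that splitting write traffic between two databases is safe only if both stay synchronized in both directions; with one-way replication from blue to green, a gradual shift is usually limited to reads, followed by a single cutover for writes.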

Testing and Validation Procedures

Data integrity and application functionality are paramount following a database migration. Rigorous testing and validation procedures are crucial to ensure the success of the migration, minimizing disruptions and guaranteeing the availability and accuracy of data. This section outlines a comprehensive approach to testing, including data comparison, functional validation, and post-migration checks.

Robust Testing Plan for Data Integrity Validation

A robust testing plan is essential to verify that data has been accurately migrated and remains consistent between the source and target databases. This plan should encompass several stages, ranging from initial data validation to ongoing monitoring. The plan should include specific test cases, acceptance criteria, and documented procedures.

The following elements are critical for a comprehensive data integrity validation plan:

- Data Comparison: Implement automated data comparison tools to verify data consistency between the source and target databases. This involves comparing data at different levels, from individual records to aggregated summaries.

- Data Sampling: Use statistical sampling techniques to select representative subsets of data for detailed inspection. This helps to identify potential issues without the need to examine the entire dataset.

- Data Profiling: Conduct data profiling exercises to understand data characteristics, such as data types, null values, and data distribution, both before and after migration. This helps to identify data quality issues that may have arisen during the migration process.

- Error Handling and Reporting: Establish clear procedures for handling and reporting data integrity errors. This includes defining error thresholds, escalation paths, and remediation strategies.

- Performance Testing: Conduct performance tests to assess the impact of the migration on query performance, data loading times, and other critical operations.

- Security Testing: Ensure that all security configurations and access controls are correctly migrated and functioning as intended.
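
The data sampling element above could look roughly like the sketch below. It assumes in-memory SQLite databases as stand-ins for the real source and target, and the `accounts` table, the `sample_mismatches` helper, and the `make_db` helper are all hypothetical names chosen for illustration.

```python
import random
import sqlite3


def sample_mismatches(source, target, table, key, sample_size, seed=0):
    """Compare a random sample of rows by key; return the keys whose rows differ."""
    # table and key are interpolated into SQL, so they must be trusted identifiers.
    keys = [row[0] for row in source.execute(f"SELECT {key} FROM {table} ORDER BY {key}")]
    sampled = random.Random(seed).sample(keys, min(sample_size, len(keys)))
    query = f"SELECT * FROM {table} WHERE {key} = ?"
    mismatched = []
    for k in sampled:
        if source.execute(query, (k,)).fetchone() != target.execute(query, (k,)).fetchone():
            mismatched.append(k)
    return sorted(mismatched)


def make_db(rows):
    """Build an in-memory SQLite database standing in for a real source or target."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE accounts (id INTEGER PRIMARY KEY, balance INTEGER)")
    conn.executemany("INSERT INTO accounts VALUES (?, ?)", rows)
    return conn


rows = [(i, i * 10) for i in range(1, 101)]
source = make_db(rows)
target = make_db(rows)
target.execute("UPDATE accounts SET balance = -1 WHERE id = 42")  # simulated corruption
# Sampling every key here guarantees the damaged row is inspected.
print(sample_mismatches(source, target, "accounts", "id", sample_size=100))
```

A smaller `sample_size` trades coverage for speed: it may miss an isolated bad row, which is why sampling is usually combined with full-table counts or checksums.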

Post-Migration Testing and Validation Checklist

A checklist provides a structured approach to ensure all critical aspects of the post-migration validation are covered. This checklist serves as a reference guide, ensuring thoroughness and consistency. Here is a post-migration testing and validation checklist:

- Data Comparison:

- Verify the total number of records in each table matches between source and target.

- Compare data checksums or hash values for large tables.

- Sample a representative subset of data for detailed comparison.

- Data Quality:

- Check for missing or corrupted data.

- Validate data types and formats.

- Ensure data constraints (e.g., primary keys, foreign keys) are enforced.

- Functional Testing:

- Test critical application functionalities (e.g., data entry, reporting, data retrieval).

- Verify that all user roles and permissions are correctly configured.

- Test integrations with other systems.

- Performance Testing:

- Measure query response times.

- Assess data loading and processing times.

- Monitor database resource utilization (CPU, memory, disk I/O).

- Security Testing:

- Verify user authentication and authorization.

- Test data encryption and access controls.

- Conduct vulnerability scans.

- Backup and Recovery:

- Test the database backup and recovery procedures.

- Verify that backups can be restored successfully.

- Documentation:

- Update all documentation to reflect the new database environment.

- Document any issues encountered and their resolutions.

Data Comparison Process between Source and Target Databases

Comparing data between the source and target databases is a critical step in verifying the success of the migration. This process requires a combination of automated tools and manual verification to ensure data consistency. The data comparison process typically involves the following steps:

- Establish Baseline: Determine the source database’s state before migration. This baseline serves as the reference point for comparison.

- Choose Comparison Method: Select the appropriate comparison method based on the data volume, complexity, and available resources. Options include:

- Row-by-Row Comparison: Suitable for smaller datasets, comparing each row’s values.

- Checksum Comparison: Calculating checksums for tables or subsets of data for a quick check of data integrity.

- Aggregate Comparison: Comparing aggregate values (e.g., sums, counts, averages) to identify discrepancies.

- Select Comparison Tools: Use automated data comparison tools, such as database-specific tools or third-party software, to streamline the process. Tools should be capable of comparing data across different database platforms.

- Execute Comparison: Run the selected comparison method and analyze the results. Identify any discrepancies or errors.

- Investigate Discrepancies: Investigate any discrepancies found. This may involve manual inspection of data, reviewing migration logs, or analyzing application code.

- Remediate Issues: Implement corrective actions to address any data integrity issues. This might involve correcting data, reapplying the migration, or adjusting the application logic.

- Document Results: Document all comparison results, including any discrepancies found, the steps taken to resolve them, and the final verification results.
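
The row count and checksum comparison methods listed above can be sketched as follows. This is an illustration under stated assumptions: SQLite stands in for both databases, the `items` table and the helper names are hypothetical, and hashing `repr(row)` only works when both sides return identical Python values. A cross-platform migration would need an agreed canonical serialization instead.

```python
import hashlib
import sqlite3


def table_fingerprint(conn, table, key):
    """Return (row_count, SHA-256 hex digest) over all rows ordered by key."""
    digest = hashlib.sha256()
    count = 0
    # table and key are interpolated into SQL, so they must be trusted identifiers.
    for row in conn.execute(f"SELECT * FROM {table} ORDER BY {key}"):
        digest.update(repr(row).encode("utf-8"))
        count += 1
    return count, digest.hexdigest()


def compare_tables(source, target, table, key):
    """Classify the comparison result as a count mismatch, checksum mismatch, or match."""
    src_count, src_hash = table_fingerprint(source, table, key)
    tgt_count, tgt_hash = table_fingerprint(target, table, key)
    if src_count != tgt_count:
        return "row count mismatch"
    if src_hash != tgt_hash:
        return "checksum mismatch"
    return "match"


def make_db(rows):
    """Build an in-memory SQLite database standing in for a real source or target."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE items (id INTEGER PRIMARY KEY, name TEXT)")
    conn.executemany("INSERT INTO items VALUES (?, ?)", rows)
    return conn


source = make_db([(1, "a"), (2, "b"), (3, "c")])
print(compare_tables(source, make_db([(1, "a"), (2, "b"), (3, "c")]), "items", "id"))
print(compare_tables(source, make_db([(1, "a"), (2, "B"), (3, "c")]), "items", "id"))
print(compare_tables(source, make_db([(1, "a"), (2, "b")]), "items", "id"))
```

Checking the count first is cheap and catches missing rows; the checksum then catches rows that exist on both sides but differ in content.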

Test Cases for Validating Application Functionality

Application functionality testing is vital to ensure that the migrated database supports all application features and performs as expected. The test cases should cover a wide range of scenarios, including data entry, data retrieval, reporting, and user access. The following test cases are designed to validate application functionality after migration:

- Data Entry: Verify that users can successfully enter new data into the system.

- Test data entry forms with various data types (text, numbers, dates).

- Check for data validation rules (e.g., required fields, format constraints).

- Ensure that data is correctly stored in the database.

- Data Retrieval: Confirm that users can retrieve existing data from the system.

- Test search functionalities using various search criteria.

- Verify that search results are accurate and complete.

- Check the performance of data retrieval queries.

- Reporting: Validate that reports generate the correct data.

- Test different report types (e.g., summary reports, detailed reports).

- Verify the accuracy of report calculations and aggregations.

- Check the formatting and presentation of reports.

- User Authentication and Authorization: Ensure that users can log in to the system with the correct permissions.

- Test different user roles and their associated access rights.

- Verify that unauthorized users cannot access restricted data or functionalities.

- Test password reset and account management features.

- Data Updates and Deletions: Confirm that users can update and delete data without errors.

- Test data update functionalities with various data types.

- Verify that data deletions are performed correctly and that related data is also removed or updated (if applicable).

- Check for data integrity constraints during updates and deletions.

- Data Integrations: Verify that integrations with other systems are working correctly.

- Test data synchronization between the database and other systems.

- Verify that data is correctly transferred and processed by integrated systems.

- Check for error handling and logging in data integration processes.

- Performance Testing:

- Measure query response times under various load conditions.

- Assess data loading and processing times.

- Monitor database resource utilization (CPU, memory, disk I/O).
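
Some of these test cases lend themselves to automated checks. The sketch below shows a data entry round trip and a constraint check. It assumes an in-memory SQLite database in place of the migrated target, and the `customers` and `orders` tables and all helper names are hypothetical.

```python
import sqlite3


def connect_target():
    """Stand-in for connecting to the migrated database (in-memory SQLite here)."""
    conn = sqlite3.connect(":memory:")
    conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces foreign keys only when enabled
    conn.executescript("""
        CREATE TABLE customers (id INTEGER PRIMARY KEY, email TEXT NOT NULL UNIQUE);
        CREATE TABLE orders (
            id INTEGER PRIMARY KEY,
            customer_id INTEGER NOT NULL REFERENCES customers(id),
            total REAL NOT NULL
        );
    """)
    return conn


def check_data_entry_round_trip(conn):
    """Data entry and retrieval: an inserted row can be read back unchanged."""
    conn.execute("INSERT INTO customers (id, email) VALUES (?, ?)", (1, "a@example.com"))
    row = conn.execute("SELECT email FROM customers WHERE id = ?", (1,)).fetchone()
    return row == ("a@example.com",)


def check_constraint_enforced(conn, sql, params):
    """Return True if the statement is rejected by a database constraint."""
    try:
        conn.execute(sql, params)
    except sqlite3.IntegrityError:
        return True
    return False


conn = connect_target()
print("round trip ok:", check_data_entry_round_trip(conn))
print("orphan order rejected:", check_constraint_enforced(
    conn, "INSERT INTO orders (customer_id, total) VALUES (?, ?)", (999, 10.0)))
```

Checks like these confirm that constraints survived the migration, which row counts and checksums alone do not reveal.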

Monitoring and Performance Tuning

Effective monitoring and performance tuning are crucial for a successful database migration. They ensure the migration progresses smoothly, identify and resolve performance bottlenecks, and optimize the database’s performance post-migration. A proactive approach to these aspects minimizes downtime and maximizes the benefits of the new database environment.

Designing a Monitoring Strategy for Migration Progress and Performance

A robust monitoring strategy provides real-time insights into the migration’s status and performance. It allows for prompt identification of issues and proactive intervention.

- Define Key Performance Indicators (KPIs): Determine specific metrics to track migration progress and database performance. Examples include:

- Data transfer rate (GB/hour or rows/second).

- Latency (time taken for data to be transferred).

- CPU utilization of source and target servers.

- Memory utilization of source and target servers.

- Network bandwidth usage.

- Number of errors or failed transactions.

- Select Monitoring Tools: Choose appropriate monitoring tools based on the database platform and the migration method. Options include:

- Database-specific tools: Oracle Enterprise Manager, SQL Server Management Studio (SSMS), pgAdmin for PostgreSQL.

- Third-party tools: SolarWinds Database Performance Analyzer, Datadog, New Relic.

- Operating System Monitoring Tools: `top`, `htop` (Linux), Task Manager (Windows).

- Establish Baseline Performance: Before the migration, establish a baseline of the source database’s performance under normal operating conditions. This serves as a reference point for comparison during and after the migration.

- Configure Alerts and Notifications: Set up alerts for KPIs that exceed predefined thresholds. This ensures timely notification of potential problems. For example, if the data transfer rate drops below a certain threshold, an alert should be triggered.

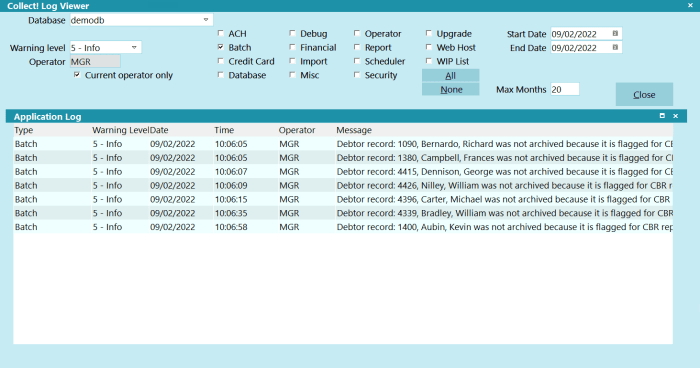

- Implement a Centralized Logging System: Centralize logs from the source and target databases, migration tools, and operating systems. This simplifies troubleshooting and performance analysis. Tools like the ELK stack (Elasticsearch, Logstash, Kibana) or Splunk can be used.

- Regularly Review and Refine the Monitoring Strategy: The monitoring strategy should be reviewed and refined periodically based on the migration’s progress and the database’s performance. Adjust thresholds, add new KPIs, or change monitoring tools as needed.
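
The alerting step above amounts to comparing each KPI against a threshold. Here is a minimal sketch, assuming hypothetical metric names and limits; a real deployment would usually define these rules in the monitoring tool itself.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Threshold:
    metric: str
    limit: float
    direction: str  # "above": alert when value > limit; "below": alert when value < limit


def evaluate_alerts(metrics, thresholds):
    """Return one alert message per threshold that is breached or has no data."""
    alerts = []
    for t in thresholds:
        value = metrics.get(t.metric)
        if value is None:
            alerts.append(f"{t.metric}: no data received")
        elif t.direction == "above" and value > t.limit:
            alerts.append(f"{t.metric}: {value} is above {t.limit}")
        elif t.direction == "below" and value < t.limit:
            alerts.append(f"{t.metric}: {value} is below {t.limit}")
    return alerts


# Hypothetical thresholds for illustration only.
THRESHOLDS = [
    Threshold("target_cpu_percent", 80, "above"),
    Threshold("transfer_rate_gb_per_hour", 40, "below"),
    Threshold("replication_lag_seconds", 30, "above"),
]

sample = {"target_cpu_percent": 91, "transfer_rate_gb_per_hour": 55, "replication_lag_seconds": 5}
for message in evaluate_alerts(sample, THRESHOLDS):
    print("ALERT:", message)
```

Treating a missing metric as an alert is a deliberate choice in this sketch: a silent collector during a migration is itself worth investigating.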

Monitoring Key Performance Indicators (KPIs) During the Migration

Monitoring specific KPIs during the migration provides real-time visibility into the process, enabling informed decision-making and timely intervention.

- Data Transfer Rate: Monitor the rate at which data is being transferred from the source to the target database. A consistently low transfer rate may indicate network bottlenecks, inefficient migration processes, or resource constraints. For instance, a migration that must transfer 1 TB of data in 24 hours needs to average roughly 42 GB/hour; if the current transfer rate is only 20 GB/hour, the transfer would take about 50 hours, so further investigation is needed.

- Latency: Measure the delay between data transfer operations. High latency can indicate network congestion, disk I/O bottlenecks, or inefficient data transformation processes. Tools like `ping` can be used to assess network latency, and database-specific tools can provide latency metrics related to data transfer operations.

- Resource Utilization (CPU, Memory, Disk I/O): Track CPU utilization, memory usage, and disk I/O on both the source and target servers. High resource utilization on either server can indicate bottlenecks. For example, if the target server’s CPU utilization consistently exceeds 80% during the migration, consider increasing the server’s resources or optimizing the migration process.

- Error Rates: Monitor the number of errors or failed transactions during the migration. A high error rate indicates potential data integrity issues or problems with the migration process. Examine the logs for specific error messages to diagnose and resolve the issues.

- Transaction Throughput: Measure the number of transactions processed per second. This is particularly important for online transaction processing (OLTP) systems. A drop in transaction throughput during the migration can indicate performance issues.

- Network Bandwidth: Monitor network bandwidth usage to identify potential network bottlenecks. If the network bandwidth is saturated, it can slow down the data transfer rate.

- Replication Lag (for replication-based migrations): Monitor the lag between the source and target databases in replication-based migrations. High replication lag can indicate that the target database is not keeping up with the changes on the source database.
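
The arithmetic behind the data transfer rate example can be written out as a small calculation. This sketch assumes decimal units (1 TB = 1000 GB), and the function names are illustrative.

```python
def required_rate_gb_per_hour(total_gb: float, window_hours: float) -> float:
    """Average rate needed to move total_gb within window_hours."""
    return total_gb / window_hours


def projected_hours(remaining_gb: float, current_rate_gb_per_hour: float) -> float:
    """Time to finish at the current rate."""
    if current_rate_gb_per_hour <= 0:
        raise ValueError("current rate must be positive")
    return remaining_gb / current_rate_gb_per_hour


TOTAL_GB = 1000      # 1 TB, decimal units
WINDOW_HOURS = 24
CURRENT_RATE = 20    # GB/hour, as observed

needed = required_rate_gb_per_hour(TOTAL_GB, WINDOW_HOURS)
eta = projected_hours(TOTAL_GB, CURRENT_RATE)
print(f"required: {needed:.1f} GB/hour, projected duration: {eta:.0f} hours")
# prints "required: 41.7 GB/hour, projected duration: 50 hours"
```

At 20 GB/hour the transfer would run roughly twice as long as the 24-hour window allows, which is the signal to investigate.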

Methods for Optimizing Database Performance After the Migration

Optimizing database performance after the migration is crucial to ensure the database operates efficiently in the new environment.

- Index Optimization: Analyze and optimize database indexes to improve query performance. Inefficient or missing indexes can significantly impact query execution times. Use database-specific tools to identify and optimize indexes. For example, in MySQL, use the `EXPLAIN` command to analyze query execution plans and identify missing indexes.

- Query Optimization: Review and optimize SQL queries to ensure they are efficient. Poorly written queries can lead to slow performance. Use query optimization tools and techniques, such as:

- Rewriting complex queries.

- Using appropriate join strategies.

- Avoiding unnecessary subqueries.

- Using parameterized queries to prevent SQL injection and improve performance.

- Configuration Tuning: Tune database configuration parameters to optimize performance. These parameters vary depending on the database platform. For example:

- Buffer pool size (for caching data): Increasing the buffer pool size can improve read performance.

- Connection limits: Adjust connection limits to handle the expected number of concurrent users.

- Caching mechanisms: Configure caching mechanisms to reduce disk I/O.

- Hardware Optimization: Evaluate and optimize the hardware resources allocated to the database server.

- CPU: Ensure sufficient CPU cores are available.

- Memory: Allocate enough memory to the database server.

- Storage: Use fast storage, such as SSDs, to improve I/O performance.

- Network: Ensure the network infrastructure is adequate for the database workload.

- Database Statistics Updates: Regularly update database statistics to ensure the query optimizer has accurate information about the data. Outdated statistics can lead to poor query execution plans. Schedule regular statistics updates using database-specific commands (e.g., `ANALYZE TABLE` in MySQL, `UPDATE STATISTICS` in SQL Server).

- Data Partitioning: Consider data partitioning to improve performance for large tables. Partitioning divides a table into smaller, more manageable pieces, which can improve query performance and data management.

- Monitoring and Ongoing Tuning: Implement continuous monitoring and performance tuning to identify and address performance bottlenecks as the database workload evolves. Regularly review performance metrics and adjust configurations as needed.
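
The effect of a missing index on a query plan can be demonstrated on a small scale. The sketch below uses SQLite's `EXPLAIN QUERY PLAN` as a stand-in for MySQL's `EXPLAIN`. The output format differs between the two systems, and the `orders` table and index name are hypothetical.

```python
import sqlite3


def query_plan(conn, sql, params=()):
    """Return the plan detail strings SQLite reports for a query."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return [row[3] for row in rows]  # the fourth column holds the human-readable detail


def demo():
    """Show the plan for the same query before and after adding an index."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL)")
    conn.executemany(
        "INSERT INTO orders (customer_id, total) VALUES (?, ?)",
        [(i % 50, float(i)) for i in range(1000)],
    )
    sql = "SELECT * FROM orders WHERE customer_id = ?"
    before = query_plan(conn, sql, (7,))
    conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
    after = query_plan(conn, sql, (7,))
    return before, after


before, after = demo()
print("before:", before)  # a full table scan
print("after: ", after)   # a search using idx_orders_customer
```

The same before-and-after comparison applies on the migrated database: run the plan for the slowest queries, add or adjust indexes, and confirm the plan changes.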

Illustrating the Use of Database Performance Dashboards

Database performance dashboards provide a visual representation of key performance indicators, allowing for quick identification of performance issues and trends.

A database performance dashboard typically includes various charts and graphs that display real-time and historical performance data. The specific components of a dashboard vary depending on the database platform and the monitoring tools used, but common elements include:

- CPU Utilization Chart: A line chart displaying the CPU utilization of the database server over time. This chart helps identify periods of high CPU usage, which may indicate performance bottlenecks.

- Memory Utilization Chart: A chart showing the memory usage of the database server, including buffer pool usage, cache hit ratio, and memory allocation. High memory utilization can impact performance if the database is constantly swapping data to disk.

- Disk I/O Chart: A chart displaying disk I/O metrics, such as read/write operations per second and disk latency. High disk I/O can indicate bottlenecks related to storage performance.

- Data Transfer Rate Chart: A chart showing the rate at which data is being transferred from the source to the target database during the migration. This helps monitor the progress of the migration.

- Query Performance Metrics: Charts and graphs showing query execution times, query wait times, and the number of queries per second. This helps identify slow-running queries and potential performance issues.

- Error Rate Chart: A chart displaying the number of errors or failed transactions. A high error rate can indicate data integrity issues or problems with the migration process.

- Concurrency Metrics: Charts showing the number of active connections, the number of concurrent transactions, and lock wait times. This can help identify concurrency bottlenecks.

Example:

Imagine a dashboard showing a sudden spike in CPU utilization and disk I/O during a migration. This could indicate that a particular data transfer operation or query is causing a performance bottleneck. By analyzing the dashboard, database administrators can quickly identify the root cause of the issue and take corrective actions, such as optimizing the query or increasing the server’s resources.

Another example involves a dashboard displaying the data transfer rate dropping significantly. This prompts investigation, possibly revealing a network issue that requires attention to maintain the migration timeline. Dashboards offer a crucial advantage by allowing for real-time monitoring, swift identification of performance bottlenecks, and data-driven decision-making throughout the migration process.

Communication and Coordination

Effective communication and meticulous coordination are critical for the success of any database migration. A well-defined communication strategy ensures that all stakeholders are informed of the progress, potential risks, and any necessary actions. This proactive approach minimizes confusion, facilitates timely decision-making, and reduces the likelihood of unforeseen issues derailing the migration process. Consistent and clear communication fosters trust and collaboration among the teams involved.

Creating a Communication Plan for Stakeholders

Developing a comprehensive communication plan is paramount for managing stakeholder expectations and ensuring a smooth migration. This plan outlines the frequency, methods, and content of communication, tailored to different stakeholder groups.

- Identify Stakeholders: Begin by identifying all relevant stakeholders, including:

- Executive Management: Requires high-level updates on progress, risks, and potential impact on business operations.

- Database Administrators (DBAs): Need detailed technical updates, troubleshooting information, and instructions for their specific tasks.

- Application Developers: Require information about changes to the database schema, connection strings, and potential application compatibility issues.

- System Administrators: Need updates on infrastructure changes, server configurations, and network dependencies.

- Business Users: Require information about planned downtime, new features, and any impact on their day-to-day operations.

- Define Communication Channels: Select appropriate communication channels based on the target audience and the type of information being conveyed. Consider using:

- Email: For formal announcements, detailed reports, and action items.

- Instant Messaging (e.g., Slack, Microsoft Teams): For quick updates, real-time troubleshooting, and informal communication.

- Project Management Software (e.g., Jira, Asana): For tracking tasks, documenting progress, and managing dependencies.

- Regular Meetings: For detailed discussions, issue resolution, and team coordination. These meetings can be daily stand-ups, weekly progress reviews, or ad-hoc meetings as needed.

- Establish Communication Frequency: Determine the frequency of communication based on the stage of the migration and the criticality of the information. For example:

- During the planning and preparation phase: Weekly or bi-weekly status reports to stakeholders.

- During the migration execution phase: Daily updates for the core migration team, and more frequent updates (e.g., every few hours) during critical downtime windows.

- Post-migration: Weekly or bi-weekly reports to ensure everything is stable.

- Develop a Communication Template: Create a standardized template to ensure consistency and clarity in communication. This template should include:

- Project Name and Version

- Date and Time of the Communication

- Migration Phase and Status

- Key Accomplishments

- Upcoming Activities

- Known Issues and Risks

- Action Items and Owners

- Contact Information for Support

- Assign Roles and Responsibilities: Clearly define who is responsible for creating, distributing, and receiving information. This includes:

- Communication Lead: Responsible for overseeing the communication plan and ensuring its execution.

- Technical Leads: Responsible for providing technical updates and addressing technical questions.

- Project Manager: Responsible for coordinating communication across teams and managing stakeholder expectations.

Providing a Template for Communicating Migration Status and Potential Issues

A standardized template ensures consistent and clear communication of the migration status, enabling stakeholders to stay informed and make informed decisions. This template should cover essential aspects of the migration process.

- Header:

- Project Name: Database Migration [Project Name]

- Date and Time: [Date] [Time]

- Communication Type: [Status Update/Issue Report/Alert]

- Executive Summary: A concise overview of the current status and any critical issues. For example: “The migration of database [Database Name] is currently in the [Phase] phase. We are on track to complete the migration within the planned timeframe. However, we have encountered [issue] which may affect the downtime window.”

- Migration Status: Detailed information about the progress of the migration.

- Phase: [Planning/Preparation/Execution/Validation/Post-Migration]

- Tasks Completed: [List of completed tasks]

- Tasks in Progress: [List of ongoing tasks]

- Percentage Complete: [Percentage]

- Key Accomplishments: Highlight significant achievements. Example: “Successfully completed the data replication from the source database to the target database.”

- Upcoming Activities: Outline the next steps and planned activities. Example: “Next, we will be performing the cutover and application testing.”

- Issues and Risks: Identify any issues encountered and their potential impact.

- Issue: [Description of the issue]

- Impact: [Potential impact on the migration timeline or business operations]

- Mitigation Plan: [Steps being taken to address the issue]

- Action Items: List any actions required from stakeholders.

- Action: [Specific action required]

- Owner: [Person responsible for the action]

- Due Date: [Date the action is due]

- Contact Information: Provide contact details for support and further information.

- Project Manager: [Name and Contact Information]

- Technical Lead: [Name and Contact Information]

- Support Team: [Name and Contact Information]

Detailing Roles and Responsibilities

Clearly defined roles and responsibilities are crucial for a coordinated and efficient migration process. Each team member should understand their specific tasks, reporting structure, and decision-making authority.

- Project Manager:

- Overall responsibility for the migration project.

- Develops and manages the project plan, including timelines, budget, and resources.

- Coordinates communication with stakeholders.

- Manages risks and issues, ensuring they are addressed promptly.

- Tracks progress and reports on the project status.

- Database Administrator (DBA):

- Responsible for the technical aspects of the database migration.

- Creates and maintains database backups.

- Configures and monitors database replication.

- Performs data migration and validation.

- Troubleshoots database-related issues.

- Application Developer:

- Responsible for application compatibility and testing.

- Modifies application code to connect to the new database.

- Tests the application after the migration to ensure functionality.

- Addresses any application-related issues.

- System Administrator:

- Responsible for the infrastructure aspects of the migration.

- Configures and manages servers and network components.

- Ensures sufficient resources are available for the migration.

- Monitors system performance and addresses any infrastructure-related issues.

- Business Analyst:

- Gathers and documents business requirements.

- Validates the migrated data to ensure accuracy.

- Provides support to business users during and after the migration.

- Communication Lead:

- Responsible for executing the communication plan.

- Creates and distributes status reports.

- Manages communication channels.

- Ensures that stakeholders are kept informed.

Designing a Communication Workflow Flowchart

A communication workflow flowchart provides a visual representation of the communication process, clarifying the flow of information and the roles of each team member. This helps to ensure that information is disseminated efficiently and effectively.

The flowchart would depict the following steps and elements:

1. Start: Project Kick-off/Migration Phase Begins.

2. Data Gathering/Issue Identification: (Input from DBAs, Application Developers, System Admins, etc.)

- Gather technical information and status updates from technical teams.

- Identify potential issues, risks, and mitigation plans.

3. Communication Lead/Project Manager Review: (Decision Point)

- Review the gathered information.

- Determine the severity and impact of issues.

4. Status Report/Issue Report Generation: (Action)

- The Communication Lead creates a status report or issue report based on the information gathered. The report includes the current status, accomplishments, upcoming activities, issues, and action items.

5. Distribution to Stakeholders: (Action)

- Distribute the report via defined communication channels (email, messaging, meetings).

6. Stakeholder Review/Feedback: (Input from all stakeholders)

- Stakeholders review the report and provide feedback, questions, or requests for clarification.

7. Issue Escalation/Resolution: (Decision/Action)

- If issues are identified, escalate to the appropriate team members (DBAs, Application Developers, etc.) for resolution.

- The responsible team resolves the issue and updates the status report.

8. Follow-up and Monitoring: (Ongoing)

- The Project Manager and Communication Lead monitor the progress and ensure that action items are completed.

- Regularly scheduled meetings and updates are held to ensure all stakeholders remain informed.

9. End: Project Completion/Migration Phase Ends.

Flowchart Elements Description:

- Rectangles: Represent processes or actions (e.g., “Create Status Report”).

- Diamonds: Represent decision points (e.g., “Issue Identified?”).

- Arrows: Indicate the direction of the workflow.

- Oval/Terminator: Start and end points.

Illustrative Example of Flowchart Sections:

A rectangle labeled “DBA Reports Data Replication Status” has an arrow pointing to another rectangle, “Project Manager Reviews Replication Status”. From there, an arrow leads to a diamond labeled “Replication Problem? Yes/No”. If “Yes,” an arrow points to the rectangle “DBA Resolves Replication Problem.” If “No,” the arrow proceeds to the rectangle “Project Manager Compiles Status Report.”

Automation and Scripting

Automating tasks within a database migration process significantly enhances efficiency, reduces human error, and minimizes downtime. Scripting allows for repeatable, consistent execution of complex operations, streamlining the overall migration lifecycle. This section details the benefits of automation, provides examples of scripting techniques, and outlines a sample script for schema migration.

Benefits of Automating Migration Tasks

Automating database migration tasks yields several critical advantages.

- Reduced Downtime: Automated processes execute faster and more predictably than manual operations, thereby shortening the window of required downtime. For instance, the automation of data transfer can significantly reduce the time needed to move large datasets.

- Minimized Human Error: Automation removes many of the manual steps, such as hand-typed commands and manual data entry, where errors are typically introduced. Automated scripts consistently follow predefined steps, reducing the risk of mistakes, although the scripts themselves still need review and testing.

- Increased Efficiency: Scripts can execute repetitive tasks quickly and efficiently, freeing up database administrators (DBAs) to focus on more strategic activities, such as planning and monitoring.

- Improved Repeatability: Automated processes are easily repeatable across different environments (development, testing, production), ensuring consistent migration results.

- Enhanced Auditability: Automated scripts can be version-controlled and logged, providing a clear audit trail of all migration activities. This simplifies troubleshooting and compliance efforts.

Examples of Scripts for Automating Migration Processes

Several aspects of a database migration can be automated using various scripting languages and tools. Here are some examples:

- Schema Migration Scripts: Scripts to create tables, indexes, views, and other database objects in the target environment, mirroring the schema of the source database. These scripts often utilize SQL or a database-specific scripting language (e.g., PL/SQL for Oracle, T-SQL for SQL Server).

- Data Transfer Scripts: Scripts to extract data from the source database and load it into the target database. These can use tools like `mysqldump` for MySQL, `pg_dump` for PostgreSQL, or proprietary tools offered by database vendors.

- Data Validation Scripts: Scripts to compare data between the source and target databases to ensure data integrity. These scripts might use SQL queries to compare row counts, checksums, or specific data values.

- Pre- and Post-Migration Scripts: Scripts to perform tasks before and after the data migration, such as creating users, granting permissions, and configuring database settings.

- Monitoring Scripts: Scripts to monitor the progress of the migration, track resource utilization, and alert administrators to potential issues. These scripts often integrate with monitoring tools.

Scripting Data Transfer and Validation

Scripting data transfer and validation involves a structured approach.

- Data Extraction: Employ a script to extract data from the source database. This can involve exporting data to flat files (CSV, TXT) or using database-specific tools for more efficient extraction. For example, in MySQL, the `mysqldump` command can export the entire database schema and data into a single SQL file or separate files.

- Data Transformation (if necessary): If data needs to be transformed during migration (e.g., data type conversions, data cleansing), a script can handle these transformations before loading the data into the target database. This might involve using scripting languages like Python or Perl to process the extracted data.

- Data Loading: Use a script to load the transformed data into the target database. This can involve using the `mysql` client to execute SQL files, or database-specific tools for bulk loading.

- Data Validation: Implement scripts to validate the data in the target database. This might involve comparing row counts, checksums, or specific data values between the source and target databases. Tools like `pt-table-checksum` (Percona Toolkit) can be used for efficient data validation.

- Error Handling and Logging: Incorporate error handling and logging mechanisms into the scripts to capture any issues that arise during the data transfer and validation processes. This allows for efficient troubleshooting.
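
The steps above can be sketched end to end as a small pipeline. This is an illustration under stated assumptions, not a production tool: SQLite and an in-memory CSV buffer stand in for the real databases and export files, and the `users` table, its date format, and all function names are hypothetical.

```python
import csv
import io
import logging
import sqlite3

logging.basicConfig(level=logging.INFO, format="%(levelname)s %(message)s")
log = logging.getLogger("migration")


def make_db(rows=()):
    """Build an in-memory SQLite database standing in for a real source or target."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT, signup_date TEXT)")
    conn.executemany("INSERT INTO users VALUES (?, ?, ?)", rows)
    return conn


def extract(source):
    """Extraction: export the source rows to CSV (an in-memory buffer here)."""
    buffer = io.StringIO(newline="")
    writer = csv.writer(buffer)
    writer.writerows(source.execute("SELECT id, name, signup_date FROM users ORDER BY id"))
    buffer.seek(0)
    return buffer


def transform(row):
    """Transformation: trim names and convert DD/MM/YYYY dates to ISO format."""
    user_id, name, signup = row
    day, month, year = signup.split("/")  # raises ValueError on unexpected formats
    return int(user_id), name.strip(), f"{year}-{month}-{day}"


def load(target, rows):
    """Loading: insert all rows in one transaction (rolled back on error)."""
    with target:
        target.executemany("INSERT INTO users (id, name, signup_date) VALUES (?, ?, ?)", rows)


def validate(source, target):
    """Validation: compare row counts between source and target."""
    count_sql = "SELECT COUNT(*) FROM users"
    src = source.execute(count_sql).fetchone()[0]
    tgt = target.execute(count_sql).fetchone()[0]
    if src != tgt:
        log.error("Row count mismatch: source=%d target=%d", src, tgt)
    return src == tgt


def migrate(source, target):
    """Run extract, transform, load, and validate; log and report any failure."""
    try:
        rows = [transform(row) for row in csv.reader(extract(source))]
        load(target, rows)
    except (sqlite3.Error, ValueError) as exc:
        log.error("Migration failed: %s", exc)
        return False
    log.info("Loaded %d rows", len(rows))
    return validate(source, target)


source = make_db([(1, " Ann ", "01/02/2020"), (2, "Bob", "15/11/2021")])
target = make_db()
print("migration ok:", migrate(source, target))
```

Transforming every row before loading anything means a bad record stops the run before the target is touched, which keeps retries simple.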

Sample Script to Automate Database Schema Migration

This is a simplified example script, written in Python, that automates the schema migration process. It assumes the schema is defined in SQL files and that the `mysql` command-line client is installed.

```python
#!/usr/bin/env python3
import os
import subprocess

# Configuration (placeholder values)
source_db_host = "source_db_host"
source_db_user = "source_db_user"
source_db_password = "source_db_password"
source_db_name = "source_db_name"
target_db_host = "target_db_host"
target_db_user = "target_db_user"
target_db_password = "target_db_password"
target_db_name = "target_db_name"
# Directory containing SQL files for schema creation
schema_migration_scripts_path = "/path/to/schema/scripts"


def execute_sql_script(script_path, db_host, db_user, db_password, db_name):
    """Run one SQL file against a database with the mysql client."""
    # Arguments are passed as a list (no shell), and the SQL file is fed to
    # the client on standard input instead of through shell redirection.
    # Note: a password on the command line is visible in process listings.
    command = ["mysql", "-h", db_host, "-u", db_user, f"-p{db_password}", "-D", db_name]
    try:
        with open(script_path) as sql_file:
            result = subprocess.run(
                command, stdin=sql_file, check=True, capture_output=True, text=True
            )
    except (OSError, subprocess.CalledProcessError) as e:
        print(f"Error executing script {script_path}:")
        print(getattr(e, "stderr", None) or e)
        return False
    print(f"Script {script_path} executed successfully.")
    print(result.stdout)
    return True


def migrate_schema():
    # Execute the SQL files in the schema directory in sorted (filename) order.
    for filename in sorted(os.listdir(schema_migration_scripts_path)):
        if filename.endswith(".sql"):
            script_path = os.path.join(schema_migration_scripts_path, filename)
            print(f"Executing script: {script_path}")
            if not execute_sql_script(script_path, target_db_host, target_db_user,
                                      target_db_password, target_db_name):
                print("Schema migration failed. Check logs for details.")
                return
    print("Schema migration completed successfully.")


if __name__ == "__main__":
    migrate_schema()
```

This script first defines configuration variables for database connection details and the location of the schema migration scripts. The source settings are defined for completeness but are not used by this schema-only example. The `execute_sql_script` function executes a single SQL script against the target database using the `mysql` client.

The `migrate_schema` function iterates through all `.sql` files in the specified directory, executing each script in filename order and stopping at the first failure. Error handling is included to catch failures during script execution. This example is intended for illustrative purposes, and specific implementations will vary based on the target database system and the complexity of the schema. A real-world implementation would likely include more robust error handling, logging, and the ability to handle dependencies between schema objects.

Database Schema Migration Strategies

Schema migration is a critical component of database migration, involving the adaptation of the database structure (schema) from the source to the target environment.

This process encompasses alterations to tables, views, indexes, stored procedures, and other database objects. Effective schema migration is essential for maintaining data integrity and application functionality while minimizing downtime during the overall migration.

Different Approaches to Schema Migration

Several strategies can be employed for schema migration, each with its trade-offs in terms of complexity, downtime, and impact on the application. These strategies can be broadly categorized into online and offline approaches.

- Offline Schema Changes: This approach involves taking the database offline to apply schema changes. This typically entails stopping the application, making the necessary schema modifications, and then restarting the application. While straightforward, this method results in significant downtime, making it less suitable for production environments requiring high availability. This approach is often used for smaller databases or during scheduled maintenance windows.

- Online Schema Changes: Online schema changes aim to minimize downtime by allowing schema modifications while the database remains operational. This is typically achieved using techniques that allow changes to be applied incrementally, without locking the entire table or database. This approach is preferred for large databases and environments with strict uptime requirements. Common techniques include:

- Online DDL (Data Definition Language) Operations: Certain database systems support online DDL operations, allowing changes like adding columns or indexes without blocking read/write operations. The specific implementation varies depending on the database platform.

- Shadow Tables/Dual Writes: This technique involves creating a shadow table with the new schema. During the migration, data is written to both the original and the shadow table. Once the data is synchronized, the original table is replaced with the shadow table.

- Triggers: Triggers can be used to synchronize data between the original and the new schema during the migration. This can be useful for complex schema transformations, but can also impact performance.

- Hybrid Approaches: Some migrations might utilize a combination of online and offline techniques, depending on the specific schema changes required and the acceptable downtime window. For instance, complex schema changes might be performed offline during a scheduled maintenance window, while less impactful changes are done online.
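The shadow-table and trigger techniques described above can be sketched together on a small scale. The following is a minimal illustration using Python's built-in `sqlite3` module, not a production recipe: the `users` table, the added `email_domain` column, and the function names are all hypothetical, and a real migration on a large table would backfill in batches and perform the final swap atomically.

```python
import sqlite3


def _domain(col: str) -> str:
    # SQL expression extracting the part of an email address after '@'.
    return f"substr({col}, instr({col}, '@') + 1)"


def start_shadow_sync(conn: sqlite3.Connection) -> None:
    """Create the shadow table (new schema) and triggers that mirror live writes into it."""
    conn.execute(
        "CREATE TABLE users_new ("
        " id INTEGER PRIMARY KEY, email TEXT NOT NULL, email_domain TEXT NOT NULL)"
    )
    for event in ("INSERT", "UPDATE"):
        conn.execute(
            f"CREATE TRIGGER users_sync_{event.lower()} AFTER {event} ON users BEGIN"
            f" INSERT OR REPLACE INTO users_new (id, email, email_domain)"
            f" VALUES (NEW.id, NEW.email, {_domain('NEW.email')});"
            f" END"
        )
    conn.execute(
        "CREATE TRIGGER users_sync_delete AFTER DELETE ON users BEGIN"
        " DELETE FROM users_new WHERE id = OLD.id;"
        " END"
    )
    conn.commit()


def backfill(conn: sqlite3.Connection) -> None:
    """Copy pre-existing rows; rows already mirrored by the triggers are left alone."""
    conn.execute(
        "INSERT OR IGNORE INTO users_new (id, email, email_domain)"
        f" SELECT id, email, {_domain('email')} FROM users"
    )
    conn.commit()


def cut_over(conn: sqlite3.Connection) -> None:
    """Remove the sync triggers and swap the shadow table into place."""
    for name in ("users_sync_insert", "users_sync_update", "users_sync_delete"):
        conn.execute(f"DROP TRIGGER {name}")
    conn.execute("ALTER TABLE users RENAME TO users_old")
    conn.execute("ALTER TABLE users_new RENAME TO users")
    conn.commit()
```

The intended order is `start_shadow_sync`, then `backfill`, then `cut_over`. Because the triggers are installed before the backfill starts, writes that arrive during the copy are captured, and `INSERT OR IGNORE` keeps the backfill from overwriting those newer rows.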

Tools and Techniques for Managing Schema Changes

Various tools and techniques are available to facilitate schema migration, automating and streamlining the process.

- Database-Specific Tools: Most database systems provide their own tools for schema migration. These tools often support online schema changes, schema comparison, and automated scripting.

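- MySQL: MySQL supports online DDL for many InnoDB `ALTER TABLE` operations. For changes that would still block writes, external tools such as `pt-online-schema-change` (Percona Toolkit) or `gh-ost` copy the table in the background and swap it in at the end.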

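- PostgreSQL: PostgreSQL can build indexes without blocking writes using `CREATE INDEX CONCURRENTLY`, and some `ALTER TABLE` forms complete quickly, although many still take a lock that blocks reads and writes while they run. Tools like `pg_dump` (with `--schema-only`) and `pg_restore` can be used for schema transfer.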

- SQL Server: SQL Server provides tools like SQL Server Management Studio (SSMS) for schema comparison and scripting. Additionally, features like online indexing can minimize downtime.

- Third-Party Migration Tools: Several third-party tools are designed to automate schema migration across different database platforms. These tools often offer features like schema comparison, data mapping, and change management. Examples include:

- Flyway: A database migration tool that uses SQL scripts to manage schema changes. It supports various database systems and is often used in DevOps workflows.

- Liquibase: Another popular database migration tool that supports declarative schema changes and automated deployments.

- Scripting and Automation: Scripting languages like Python, Perl, or shell scripting can be used to automate schema migration tasks, such as generating DDL scripts, executing schema changes, and verifying the results. This approach provides flexibility and control over the migration process.
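As a rough illustration of the versioned-script idea behind such tools and scripts, the sketch below records applied changes in a tracking table so that re-running it is harmless. It uses Python's built-in `sqlite3` module for portability; the `schema_version` table, the `MIGRATIONS` list, and `apply_pending` are hypothetical names chosen for this example and do not reflect how Flyway or Liquibase are implemented.

```python
import sqlite3

# Hypothetical ordered migrations: (version, description, DDL statement).
MIGRATIONS = [
    (1, "create users", "CREATE TABLE users (id INTEGER PRIMARY KEY, email TEXT NOT NULL)"),
    (2, "index users.email", "CREATE UNIQUE INDEX idx_users_email ON users (email)"),
]


def apply_pending(conn: sqlite3.Connection, migrations) -> list:
    """Apply migrations not yet recorded in schema_version; return the versions applied."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS schema_version ("
        " version INTEGER PRIMARY KEY, description TEXT NOT NULL)"
    )
    applied = {row[0] for row in conn.execute("SELECT version FROM schema_version")}
    newly_applied = []
    for version, description, ddl in sorted(migrations):
        if version in applied:
            continue  # Already applied on an earlier run.
        conn.execute(ddl)
        conn.execute(
            "INSERT INTO schema_version (version, description) VALUES (?, ?)",
            (version, description),
        )
        conn.commit()
        newly_applied.append(version)
    return newly_applied
```

Calling `apply_pending(conn, MIGRATIONS)` a second time on the same database applies nothing, which is the property that makes this kind of script safe to run from an automated deployment pipeline.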

Handling Schema Differences

Schema differences between the source and target databases are inevitable, especially when migrating between different database platforms or versions. Careful planning and execution are crucial to address these differences.

- Schema Comparison and Analysis: Before migration, a thorough comparison of the source and target schemas is essential. This involves identifying differences in data types, constraints, indexes, and other database objects. Tools like schema comparison utilities can automate this process, highlighting the discrepancies.

- Data Type Mapping: Data types often vary between different database systems. A data type mapping strategy is needed to ensure data integrity during migration. This might involve converting data types, adjusting data precision, or handling unsupported data types.

- Constraint Mapping: Constraints, such as primary keys, foreign keys, and unique constraints, must be mapped and replicated in the target database. This ensures data consistency and referential integrity.

- Index Optimization: Indexes might need to be recreated or optimized in the target database. This is particularly important for performance-critical applications.

- Stored Procedure and Function Migration: Stored procedures and functions might need to be rewritten or adapted for the target database platform. This requires understanding the syntax and functionality differences.

- Schema Transformation Scripts: In complex scenarios, schema transformation scripts are required to convert the source schema to the target schema. These scripts might involve altering table structures, renaming columns, or applying data transformations.
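Schema comparison and data type mapping can be combined in a small check that runs before the migration. The sketch below is a simplified illustration: schemas are represented as plain dictionaries (table name to column name to type), `diff_schema` and `map_type` are hypothetical helpers, and the MySQL-to-PostgreSQL entries in `TYPE_MAP` are a small illustrative subset that should be verified against both systems' documentation before use.

```python
# Illustrative subset of a MySQL -> PostgreSQL type mapping (an assumption, not exhaustive).
TYPE_MAP = {
    "TINYINT(1)": "BOOLEAN",
    "DATETIME": "TIMESTAMP",
    "DOUBLE": "DOUBLE PRECISION",
    "LONGTEXT": "TEXT",
    "BLOB": "BYTEA",
}


def map_type(source_type: str) -> str:
    """Return the expected target type; unmapped types are passed through unchanged."""
    normalized = source_type.upper()
    return TYPE_MAP.get(normalized, normalized)


def diff_schema(source: dict, target: dict) -> list:
    """List missing tables, missing columns, and type mismatches in the target schema."""
    problems = []
    for table in sorted(source):
        if table not in target:
            problems.append(f"missing table: {table}")
            continue
        for column in sorted(source[table]):
            expected = map_type(source[table][column])
            found = target[table].get(column)
            if found is None:
                problems.append(f"missing column: {table}.{column}")
            elif found.upper() != expected:
                problems.append(
                    f"type mismatch: {table}.{column} expected {expected}, found {found.upper()}"
                )
    return problems
```

An empty result means every source table and column has a counterpart of the expected type in the target. In practice the two dictionaries would be populated from each database's catalog (for example, `information_schema.columns`).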

Comparison of Schema Migration Strategies

The following table compares different schema migration strategies, highlighting their key characteristics.

| Strategy | Downtime | Complexity | Tools/Techniques | Use Cases |
|---|---|---|---|---|
| Offline Schema Changes | High | Low | Database-specific tools, manual scripting | Small databases, scheduled maintenance windows |
| Online Schema Changes (Online DDL) | Low | Medium | Database-specific online DDL features, `pt-online-schema-change` (MySQL) | Large databases, high-availability environments |
| Online Schema Changes (Shadow Tables/Dual Writes) | Low | High | Application logic, triggers, database-specific tools | Complex schema transformations, minimizing downtime |
| Online Schema Changes (Triggers) | Low to Medium | Medium to High | Triggers, database-specific tools | Data synchronization, complex transformations |
| Hybrid Approaches | Variable | Variable | Combination of online and offline techniques | Balancing downtime and complexity |

Handling Application Downtime

Application downtime is a common, though not always unavoidable, consequence of database migration. Minimizing its duration and mitigating its impact on users is crucial for a successful migration process. Careful planning, strategic execution, and proactive communication are essential to achieve this. This section explores methods for managing downtime, reducing its impact, and ensuring a smooth transition for users.

Methods for Managing Application Downtime

Managing application downtime involves a multifaceted approach, encompassing pre-migration planning, execution strategies, and post-migration validation. The selection of appropriate methods depends on the application’s architecture, the criticality of its data, and the acceptable downtime window.

- Planned Downtime: This involves scheduling downtime during periods of low user activity, such as off-peak hours or weekends. This approach allows for a controlled migration process and reduces the risk of disrupting a large number of users. However, it may not be feasible for applications with 24/7 availability requirements.

- Zero-Downtime Migration Techniques: Employing techniques such as blue/green deployments or database replication minimizes downtime by allowing users to continue accessing the application during the migration process. These methods often involve creating a parallel environment (e.g., a “green” environment) and gradually switching traffic to it once the migration is complete.

- Graceful Degradation: This strategy involves designing the application to function with reduced functionality during downtime. For example, if the database is unavailable, the application might serve cached data or provide read-only access. This approach minimizes the impact on users by ensuring some level of service availability.

- Database Connection Pooling: Connection pooling maintains a pool of open database connections, reducing the overhead of establishing a new connection for each request. Its effect on downtime is indirect: more efficient use of database resources can speed up migration steps, and a pool gives the application a single place to drain connections to the old database and reconnect to the new one at cutover.
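The graceful degradation strategy above can be sketched as a thin read wrapper that falls back to recently cached values when the database is unreachable. This is a minimal in-memory illustration: `CachedReader`, `DatabaseUnavailable`, and the `fetch` callable are hypothetical names, and a real application would more likely use a shared cache and its driver's own exception types.

```python
import time


class DatabaseUnavailable(Exception):
    """Raised by the fetch function when the database cannot be reached."""


class CachedReader:
    """Serve reads from the database, falling back to cached values during an outage."""

    def __init__(self, fetch, max_stale_seconds: float = 300.0):
        self._fetch = fetch              # Callable taking a key and returning a value.
        self._max_stale = max_stale_seconds
        self._cache = {}                 # key -> (value, time stored)

    def get(self, key):
        """Return (value, is_stale). Re-raise if the database is down and no usable cache exists."""
        try:
            value = self._fetch(key)
        except DatabaseUnavailable:
            cached = self._cache.get(key)
            if cached is None or time.monotonic() - cached[1] > self._max_stale:
                raise
            return cached[0], True       # Degraded mode: cached, possibly outdated value.
        self._cache[key] = (value, time.monotonic())
        return value, False
```

Returning the `is_stale` flag alongside the value lets the caller show a notice such as "data may be out of date" instead of silently presenting cached results as current.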

Techniques for Reducing the Impact of Downtime on Users

Several techniques can be employed to reduce the negative impact of downtime on users. These techniques focus on communication, transparency, and providing alternative options during the migration process.

- Pre-Migration Communication: Announcing the planned downtime in advance via email, in-app notifications, and website banners allows users to prepare for the disruption. Provide a clear explanation of the reasons for the downtime, the expected duration, and any potential impact on user access.

- Progress Updates: During the migration, provide regular updates on the progress, including the estimated time remaining and any known issues. This helps to manage user expectations and reduces anxiety.

- Alternative Access Methods: If possible, provide alternative access methods during the downtime, such as a read-only version of the application or access to cached data.

- Error Handling and Redirection: Implement robust error handling to gracefully handle database connection failures and redirect users to an appropriate page, such as a maintenance page or a support contact form.

- Post-Migration Verification: After the migration, confirm the completion and verify functionality. Inform users the service is back online.
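For the error handling and redirection point above, one common pattern is to translate a database connection failure into an HTTP 503 response carrying a `Retry-After` header, so that browsers and API clients know the outage is temporary. The sketch below is framework-agnostic and simplified: `handle_request`, the tuple-shaped response, and the use of Python's built-in `ConnectionError` to signal a database failure are assumptions made for this example.

```python
def maintenance_response(retry_after_seconds: int):
    """Build a (status, headers, body) tuple describing a temporary outage."""
    headers = {"Retry-After": str(retry_after_seconds)}
    body = "The service is undergoing scheduled maintenance. Please try again shortly."
    return 503, headers, body


def handle_request(handler, retry_after_seconds: int = 300):
    """Run a request handler; return a maintenance response if the database is unreachable."""
    try:
        return 200, {}, handler()
    except ConnectionError:
        # Database connection failed: degrade to a maintenance page instead of an error trace.
        return maintenance_response(retry_after_seconds)
```

In a real web framework the same logic would usually live in middleware or an exception handler, with the body replaced by the maintenance page announced to users beforehand.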

Strategies for Redirecting Traffic During Migration

Traffic redirection is a crucial component of managing application downtime, especially when using zero-downtime migration techniques. The objective is to seamlessly switch users to the new database environment while minimizing disruption.

- DNS Switching: This involves updating the Domain Name System (DNS) records so that the hostname the application uses to reach its database resolves to the new database server. This approach is relatively simple, but resolvers cache records until their time-to-live (TTL) expires, so some clients keep reaching the old server for a while. Lowering the TTL ahead of the cutover shortens this delay.

- Load Balancer Configuration: Load balancers can be configured to direct traffic to the new database server once the migration is complete. The switchover can be gradual: a small subset of users is routed to the new system first, and the remaining traffic follows once it has proven stable.